Richard C. Wilson

Towards Simple Machine Learning Baselines for GNSS RFI Detection

Apr 14, 2025

Abstract: Machine learning research in GNSS radio frequency interference (RFI) detection often lacks a clear empirical justification for the choice of deep learning architectures over simpler machine learning approaches. In this work, we argue for a change in research direction, from developing ever more complex deep learning models to carefully assessing their real-world effectiveness in comparison to interpretable and lightweight machine learning baselines. Our findings reveal that state-of-the-art deep learning models frequently fail to outperform simple, well-engineered machine learning methods in the context of GNSS RFI detection. Leveraging a unique large-scale dataset collected by the Swiss Air Force and Swiss Air-Rescue (Rega), and preprocessed by Swiss Air Navigation Services Ltd. (Skyguide), we demonstrate that a simple baseline model achieves 91% accuracy in detecting GNSS RFI, outperforming more complex deep learning counterparts. These results highlight the effectiveness of pragmatic solutions and offer valuable insights to guide future research in this critical application domain.

LBONet: Supervised Spectral Descriptors for Shape Analysis

Nov 13, 2024

Abstract: The Laplace-Beltrami operator has established itself in the field of non-rigid shape analysis due to its many useful properties, such as being invariant under isometric transformation, having a countable eigensystem forming an orthonormal basis, and fully characterizing geodesic distances of the manifold. However, this invariance only applies under isometric deformations, which leads to a performance breakdown in many real-world applications. In recent years, emphasis has been placed on extracting optimal features using deep learning methods; however, spectral signatures still play a crucial role and add value. In this paper we take a step back, revisiting the LBO and proposing a supervised way to learn several operators on a manifold. Depending on the task, by applying these functions, we can train the LBO eigenbasis to be more task-specific. The optimization of the LBO leads to enormous improvements to established descriptors such as the heat kernel signature in various tasks such as retrieval, classification, segmentation, and correspondence, proving the adaptation of the LBO eigenbasis to both global and highly local learning settings.

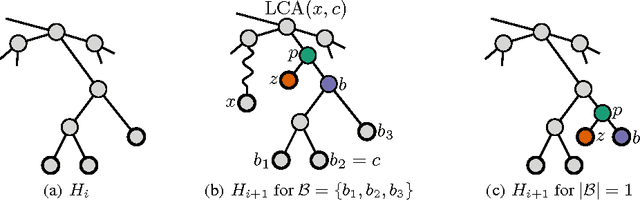

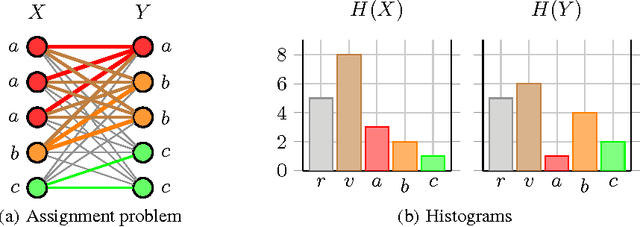

Computing Optimal Assignments in Linear Time for Graph Matching

Jan 29, 2019

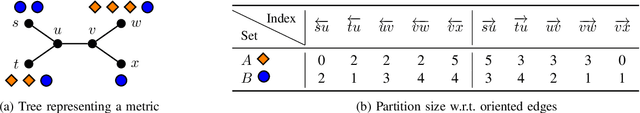

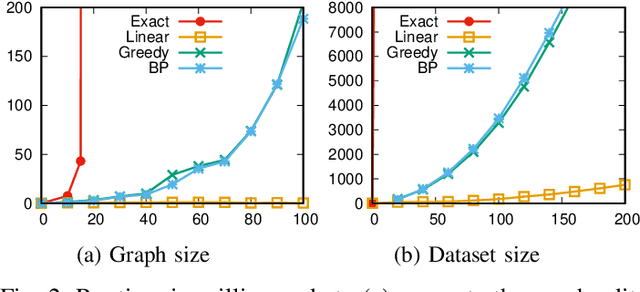

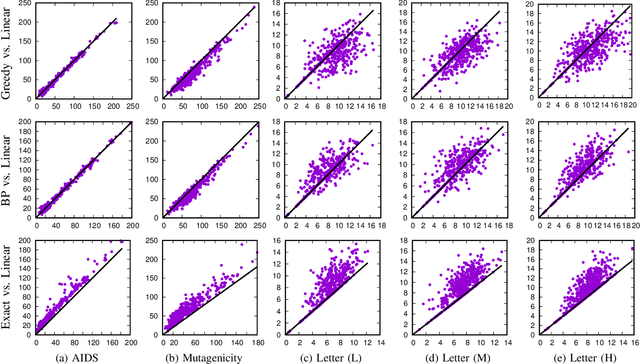

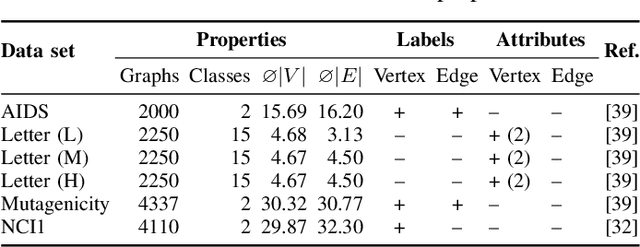

Abstract: Finding an optimal assignment between two sets of objects is a fundamental problem arising in many applications, including the matching of "bag-of-words" representations in natural language processing and computer vision. Solving the assignment problem typically requires cubic time and its pairwise computation is expensive on large datasets. In this paper, we develop an algorithm which can find an optimal assignment in linear time when the cost function between objects is represented by a tree distance. We employ the method to approximate the edit distance between two graphs by matching their vertices in linear time. To this end, we propose two tree distances, the first of which reflects discrete and structural differences between vertices, and the second of which can be used to compare continuous labels. We verify the effectiveness and efficiency of our methods using synthetic and real-world datasets.
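
To make the tree-distance idea concrete, here is a minimal Python sketch. It is not taken from the paper; it relies on a standard identity for tree metrics: when two equal-size sets of objects sit on the nodes of a weighted tree, the cost of an optimal assignment equals the sum, over all edges, of the edge weight times the absolute difference between the number of objects of each set below that edge. The array layout (`parent`, `weight`, node 0 as root, `parent[v] < v`) and all function names are assumptions made for this illustration.

```python
from itertools import permutations


def tree_assignment_cost(parent, weight, a_nodes, b_nodes):
    """Optimal assignment cost between two equal-size multisets of tree nodes.

    parent[v] is the parent of node v (node 0 is the root, parent[0] is unused);
    weight[v] is the non-negative weight of the edge (v, parent[v]).
    Assumption: parent[v] < v for v > 0, so a reverse scan visits children first.
    """
    if len(a_nodes) != len(b_nodes):
        raise ValueError("both sets must have the same size")
    # surplus[v] = (#objects of A) - (#objects of B) in the subtree rooted at v
    surplus = [0] * len(parent)
    for v in a_nodes:
        surplus[v] += 1
    for v in b_nodes:
        surplus[v] -= 1
    cost = 0
    for v in range(len(parent) - 1, 0, -1):
        # |surplus[v]| objects must cross the edge (v, parent[v])
        cost += weight[v] * abs(surplus[v])
        surplus[parent[v]] += surplus[v]
    return cost


def tree_distance(parent, weight, u, v):
    """Path length between u and v (only used by the brute-force check)."""
    dist = 0
    while u != v:
        # the larger index cannot be an ancestor of the smaller one
        if u > v:
            dist += weight[u]
            u = parent[u]
        else:
            dist += weight[v]
            v = parent[v]
    return dist


def brute_force_cost(parent, weight, a_nodes, b_nodes):
    """Reference answer by trying every bijection (factorial time)."""
    return min(
        sum(tree_distance(parent, weight, a, b) for a, b in zip(a_nodes, perm))
        for perm in permutations(b_nodes)
    )


# Toy tree: 0 is the root, 1 and 2 are its children, 3 and 4 hang under 1, 5 under 2.
parent = [0, 0, 0, 1, 1, 2]
weight = [0, 1, 2, 1, 3, 1]
fast = tree_assignment_cost(parent, weight, [3, 4, 5], [1, 2, 5])
slow = brute_force_cost(parent, weight, [3, 4, 5], [1, 2, 5])
print(fast, slow)
```

The single reverse pass touches each node once, so the running time is linear in the tree size plus the number of objects, while the brute-force check exists only to confirm the result on small inputs.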

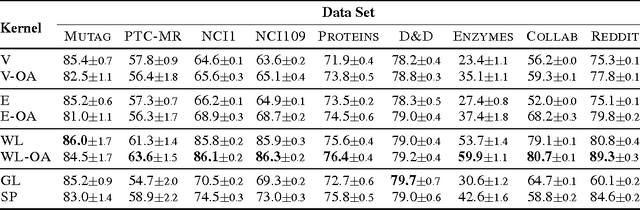

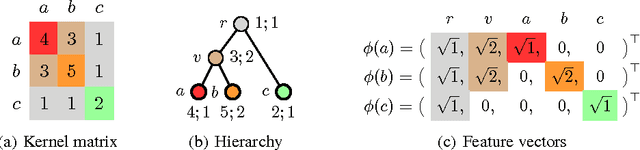

On Valid Optimal Assignment Kernels and Applications to Graph Classification

Jan 31, 2017

Abstract: The success of kernel methods has initiated the design of novel positive semidefinite functions, in particular for structured data. A leading design paradigm for this is the convolution kernel, which decomposes structured objects into their parts and sums over all pairs of parts. Assignment kernels, in contrast, are obtained from an optimal bijection between parts, which can provide a more valid notion of similarity. In general, however, optimal assignments yield indefinite functions, which complicates their use in kernel methods. We characterize a class of base kernels used to compare parts that guarantees positive semidefinite optimal assignment kernels. These base kernels give rise to hierarchies from which the optimal assignment kernels are computed in linear time by histogram intersection. We apply these results by developing the Weisfeiler-Lehman optimal assignment kernel for graphs. It provides high classification accuracy on widely-used benchmark data sets, improving over the original Weisfeiler-Lehman kernel.
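
The histogram-intersection step can be illustrated with a small Python sketch. It is a sketch under stated assumptions, not the authors' implementation: the base kernel between two parts is taken to be the sum of non-negative node weights over their common ancestors in a hierarchy, and the optimal assignment value is then computed as a weighted histogram intersection of subtree counts. The hierarchy encoding (`parent`, `omega`, node 0 as root, `parent[v] < v`) and all function names are hypothetical.

```python
from itertools import permutations


def subtree_counts(parent, elements):
    """counts[v] = number of elements located in the subtree rooted at v."""
    counts = [0] * len(parent)
    for v in elements:
        counts[v] += 1
    # assumption: parent[v] < v, so children are accumulated before parents
    for v in range(len(parent) - 1, 0, -1):
        counts[parent[v]] += counts[v]
    return counts


def assignment_kernel(parent, omega, xs, ys):
    """Optimal assignment value via weighted histogram intersection."""
    if len(xs) != len(ys):
        raise ValueError("both sets must have the same size")
    hx = subtree_counts(parent, xs)
    hy = subtree_counts(parent, ys)
    return sum(w * min(a, b) for w, a, b in zip(omega, hx, hy))


def base_kernel(parent, omega, u, v):
    """Sum of omega over the common ancestors of u and v (root to their LCA)."""
    while u != v:
        if u > v:
            u = parent[u]
        else:
            v = parent[v]
    total = omega[u]
    while u != 0:
        u = parent[u]
        total += omega[u]
    return total


def brute_force_kernel(parent, omega, xs, ys):
    """Reference answer: maximise the summed base kernel over all bijections."""
    return max(
        sum(base_kernel(parent, omega, x, y) for x, y in zip(xs, perm))
        for perm in permutations(ys)
    )


# Toy hierarchy: 0 is the root, 1 and 2 are its children, leaves 3 and 4 under 1, leaf 5 under 2.
parent = [0, 0, 0, 1, 1, 2]
omega = [1, 2, 1, 3, 1, 2]  # non-negative node weights (illustrative values)
fast_k = assignment_kernel(parent, omega, [3, 4, 5], [3, 3, 5])
slow_k = brute_force_kernel(parent, omega, [3, 4, 5], [3, 3, 5])
print(fast_k, slow_k)
```

The intersection needs one pass over the hierarchy per set, whereas the brute-force maximisation over bijections is included only to check the value on a toy input.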
