Reza Sarshogh

Quantifying Challenges in the Application of Graph Representation Learning

Jun 18, 2020

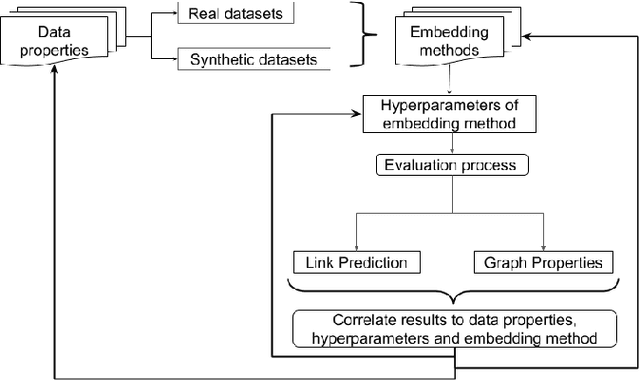

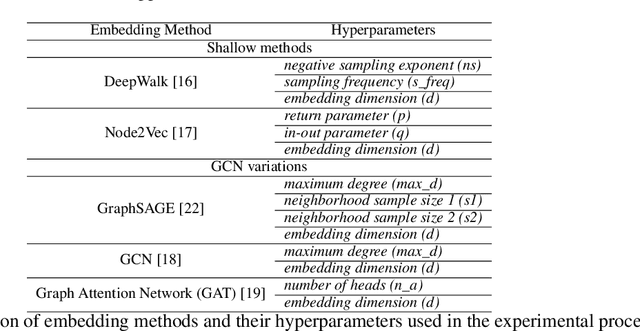

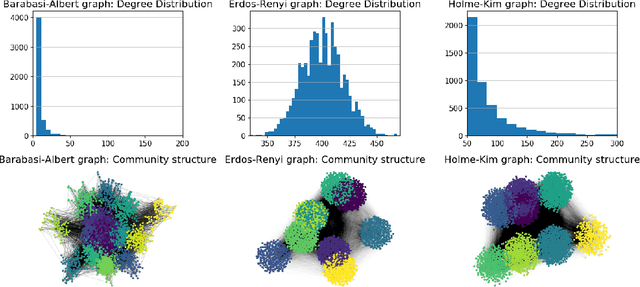

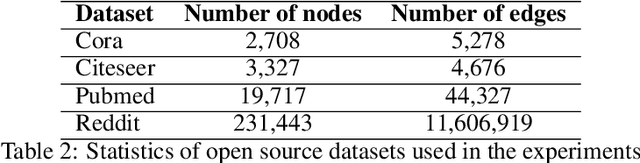

Abstract: Graph Representation Learning (GRL) has made significant progress as a means of extracting structural information in a meaningful way for subsequent learning tasks. Current approaches, including shallow embeddings and Graph Neural Networks, have mostly been tested on node classification and link prediction tasks. In this work, we provide an application-oriented perspective on a set of popular embedding approaches and evaluate their representational power with respect to real-world graph properties. We implement an extensive empirical, data-driven framework to challenge existing norms regarding the expressive power of embedding approaches on graphs with varying patterns, and we complement it with a theoretical analysis of the limitations we discovered in the process. Our results suggest that "one-to-fit-all" GRL approaches are hard to define in real-world scenarios, and that new methods, as they are introduced, should be explicit about their ability to capture graph properties and their applicability to datasets with non-trivial structural differences.
