Reza Mohammadi

Graph-based Tabular Deep Learning Should Learn Feature Interactions, Not Just Make Predictions

Oct 06, 2025

Abstract: Despite recent progress, deep learning methods for tabular data still struggle to compete with traditional tree-based models. A key challenge lies in modeling complex, dataset-specific feature interactions that are central to tabular data. Graph-based tabular deep learning (GTDL) methods aim to address this by representing features and their interactions as graphs. However, existing methods predominantly optimize predictive accuracy, neglecting accurate modeling of the graph structure. This position paper argues that GTDL should move beyond prediction-centric objectives and prioritize the explicit learning and evaluation of feature interactions. Using synthetic datasets with known ground-truth graph structures, we show that existing GTDL methods fail to recover meaningful feature interactions. Moreover, enforcing the true interaction structure improves predictive performance. This highlights the need for GTDL methods to prioritize quantitative evaluation and accurate structural learning. We call for a shift toward structure-aware modeling as a foundation for building GTDL systems that are not only accurate but also interpretable, trustworthy, and grounded in domain understanding.

High-Dimensional Bayesian Structure Learning in Gaussian Graphical Models using Marginal Pseudo-Likelihood

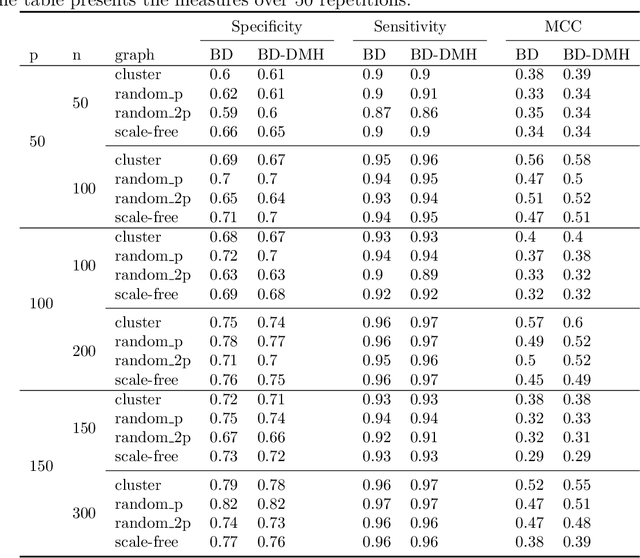

Jun 30, 2023

Abstract: Gaussian graphical models depict the conditional dependencies between variables within a multivariate normal distribution in a graphical format. The identification of these graph structures is an area known as structure learning. However, when utilizing Bayesian methodologies in structure learning, computational complexities can arise, especially with high-dimensional graphs surpassing 250 nodes. This paper introduces two innovative search algorithms that employ marginal pseudo-likelihood to address this computational challenge. These methods can swiftly generate reliable estimations for problems encompassing 1000 variables in just a few minutes on standard computers. For those interested in practical applications, the code supporting this new approach is made available through the R package BDgraph.

Continuous-Time Birth-Death MCMC for Bayesian Regression Tree Models

Apr 19, 2019

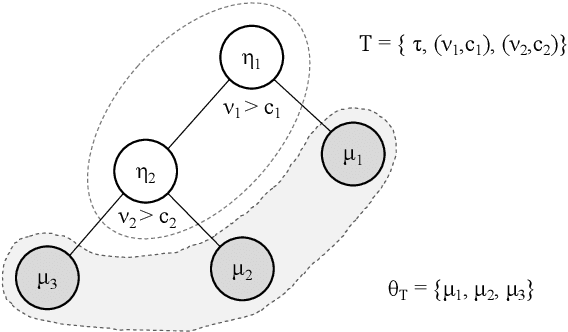

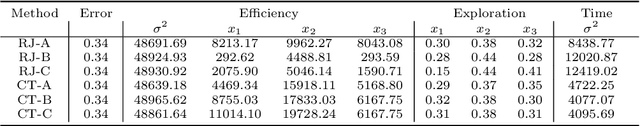

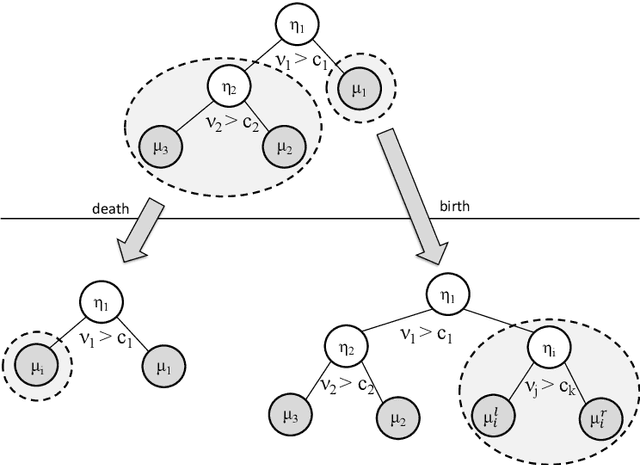

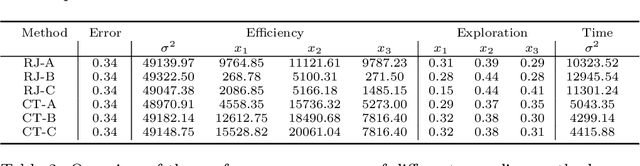

Abstract: Decision trees are flexible models that are well suited for many statistical regression problems. In a Bayesian framework for regression trees, Markov Chain Monte Carlo (MCMC) search algorithms are required to generate samples of tree models according to their posterior probabilities. The critical component of such an MCMC algorithm is to construct good Metropolis-Hastings steps for updating the tree topology. However, such algorithms frequently suffer from local mode stickiness and poor mixing. As a result, the algorithms are slow to converge. Hitherto, authors have primarily used discrete-time birth/death mechanisms for Bayesian (sums of) regression tree models to explore the model space. These algorithms are efficient only if the acceptance rate is high, which is not always the case. Here we overcome this issue by developing a new search algorithm that is based on a continuous-time birth-death Markov process. This search algorithm explores the model space by jumping between parameter spaces corresponding to different tree structures. In the proposed algorithm, the moves between models are always accepted, which can dramatically improve the convergence and mixing properties of the MCMC algorithm. We provide theoretical support of the algorithm for Bayesian regression tree models and demonstrate its performance.

The ratio of normalizing constants for Bayesian graphical Gaussian model selection

Oct 12, 2018

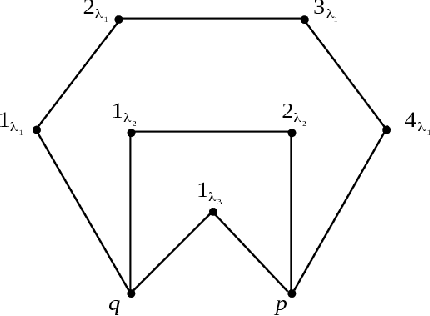

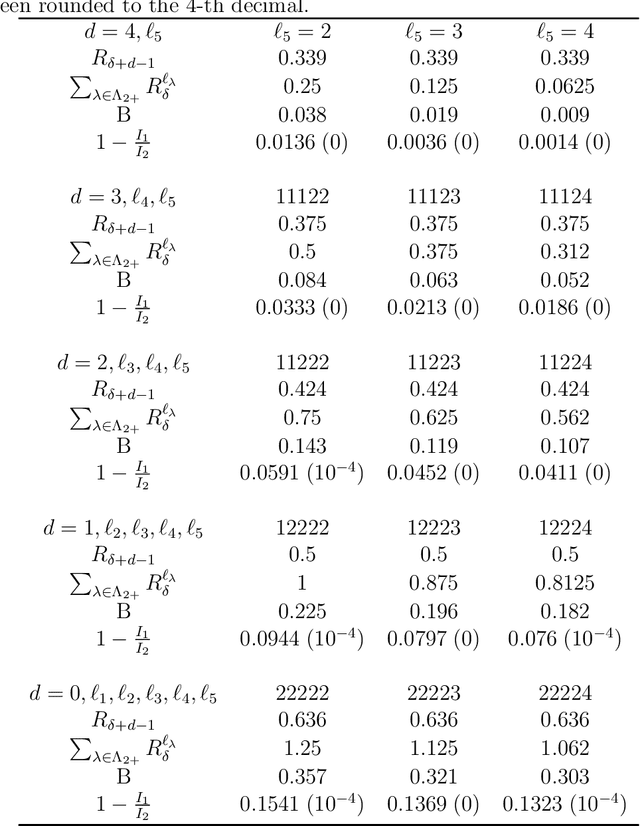

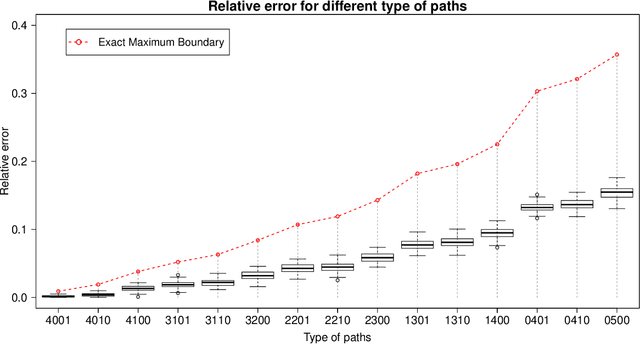

Abstract: Many graphical Gaussian selection methods in a Bayesian framework use the G-Wishart as the conjugate prior on the precision matrix. The Bayes factor to compare a model governed by a graph G and a model governed by the neighboring graph G-e, derived from G by deleting an edge e, is a function of the ratios of prior and posterior normalizing constants of the G-Wishart for G and G-e. While more recent methods avoid the computation of the posterior ratio, computing the ratio of prior normalizing constants, (2) below, has remained a computational stumbling block. In this paper, we propose an explicit analytic approximation to (2) which is equal to the ratio of two Gamma functions evaluated at (delta+d)/2 and (delta+d+1)/2 respectively, where delta is the shape parameter of the G-Wishart and d is the number of paths of length two between the endpoints of e. This approximation allows us to avoid Monte Carlo methods, is computationally inexpensive, and is scalable to high-dimensional problems. We show that the ratio of the approximation to the true value is always between zero and one, so one cannot incur wild errors. In the particular case where the paths between the endpoints of e are disjoint, we show that the approximation is very good. When the paths between these two endpoints are not disjoint, we give a sufficient condition for the approximation to be good. Numerical results show that the ratio of the approximation to the true value of the prior ratio is always between 0.55 and 1 and very often close to 1. We compare the results obtained with a model search using our approximation and a search using the double Metropolis-Hastings algorithm to compute the prior ratio. The results are extremely close.
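As a rough illustration of the two quantities this abstract names, the sketch below counts d as the number of common neighbors of the endpoints of e (each shared neighbor gives exactly one path of length two) and evaluates the Gamma ratio at (delta+d)/2 and (delta+d+1)/2 in log space. The function names, the adjacency-set representation, and the toy graph are assumptions made for illustration. The abstract does not state any constant prefactor or which of G and G-e sits in the numerator, so the code computes only the bare Gamma ratio and should not be read as the paper's full formula.

```python
import math


def count_two_paths(adj, u, v):
    """Number of paths of length two between u and v.

    Each such path passes through one shared neighbor, so this is the
    size of the intersection of the two neighborhoods.
    `adj` maps each node to the set of its neighbors (hypothetical layout).
    """
    return len((adj[u] & adj[v]) - {u, v})


def log_gamma_ratio(delta, d):
    """log of Gamma((delta + d) / 2) / Gamma((delta + d + 1) / 2).

    Working with lgamma avoids overflow when delta + d is large.
    Any constant factor from the paper is NOT included here.
    """
    return math.lgamma((delta + d) / 2) - math.lgamma((delta + d + 1) / 2)


# Toy undirected graph: edge e = (0, 1), with nodes 2 and 3 adjacent to both.
adj = {
    0: {1, 2, 3},
    1: {0, 2, 3},
    2: {0, 1},
    3: {0, 1},
}

d = count_two_paths(adj, 0, 1)
ratio = math.exp(log_gamma_ratio(3.0, d))
print(d, ratio)
```

Because the computation needs only a neighborhood intersection and two log-Gamma evaluations per edge, its cost does not depend on Monte Carlo sampling, which is consistent with the abstract's claim that the approximation is inexpensive.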
