Reza Akhavian

BIM-Discrepancy-Driven Active Sensing for Risk-Aware UAV-UGV Navigation

Nov 18, 2025

Abstract:This paper presents a BIM-discrepancy-driven active sensing framework for cooperative navigation between unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) in dynamic construction environments. Traditional navigation approaches rely on static Building Information Modeling (BIM) priors or limited onboard perception. In contrast, our framework continuously fuses real-time LiDAR data from aerial and ground robots with BIM priors to maintain an evolving 2D occupancy map. We quantify navigation safety through a unified corridor-risk metric integrating occupancy uncertainty, BIM-map discrepancy, and clearance. When risk exceeds safety thresholds, the UAV autonomously re-scans affected regions to reduce uncertainty and enable safe replanning. Validation in PX4-Gazebo simulation with Robotec GPU LiDAR demonstrates that risk-triggered re-scanning reduces mean corridor risk by 58% and map entropy by 43% compared to static BIM navigation, while maintaining clearance margins above 0.4 m. Compared to frontier-based exploration, our approach achieves similar uncertainty reduction in half the mission time. These results demonstrate that integrating BIM priors with risk-adaptive aerial sensing enables scalable, uncertainty-aware autonomy for construction robotics.
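As a rough illustration of how a corridor-risk score of this kind could trigger re-scanning, the sketch below combines the three ingredients named in the abstract (occupancy uncertainty, BIM-map discrepancy, and clearance) into one number per corridor. The linear weighting, the weights themselves, the 0.5 threshold, the use of 0.4 m as the clearance scale, and all function names are assumptions made for illustration; the abstract does not give the paper's actual formulation.

```python
import math


def cell_entropy(p):
    """Shannon entropy (in bits) of a single occupancy probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def corridor_risk(cells, w_unc=0.4, w_disc=0.3, w_clear=0.3, safe_clearance=0.4):
    """Mean risk over corridor cells.

    cells: list of (p_occupied, bim_occupied, clearance_m) tuples.
    The weights and the linear form are hypothetical, not the paper's metric.
    """
    if not cells:
        return 0.0
    total = 0.0
    for p, bim_occupied, clearance in cells:
        uncertainty = cell_entropy(p)                          # in [0, 1]
        discrepancy = abs(p - (1.0 if bim_occupied else 0.0))  # in [0, 1]
        # Zero once the clearance reaches the assumed safe margin.
        clearance_term = max(0.0, 1.0 - clearance / safe_clearance)
        total += w_unc * uncertainty + w_disc * discrepancy + w_clear * clearance_term
    return total / len(cells)


def needs_rescan(cells, threshold=0.5):
    """True when the corridor risk exceeds the (assumed) safety threshold."""
    return corridor_risk(cells) > threshold
```

Under these assumptions, a corridor of well-observed free cells with ample clearance scores zero, while a corridor of unknown cells (p = 0.5) with no clearance scores high and would request a UAV re-scan.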

Bootstrapped LLM Semantics for Context-Aware Path Planning

Nov 15, 2025

Abstract:Prompting robots with natural language (NL) has largely been studied as what task to execute (goal selection, skill sequencing) rather than how to execute that task safely and efficiently in semantically rich, human-centric spaces. We address this gap with a framework that turns a large language model (LLM) into a stochastic semantic sensor whose outputs modulate a classical planner. Given a prompt and a semantic map, we draw multiple LLM "danger" judgments and apply a Bayesian bootstrap to approximate a posterior over per-class risk. Using statistics from the posterior, we create a potential cost to formulate a path planning problem. Across simulated environments and a BIM-backed digital twin, our method adapts how the robot moves in response to explicit prompts and implicit contextual information. We present qualitative and quantitative results.
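To make the Bayesian bootstrap step concrete, here is a minimal sketch for a single semantic class. It assumes the repeated LLM "danger" judgments have already been collected as numbers in [0, 1]; the function names, the mean-plus-spread cost, and the `kappa` parameter are hypothetical and are not taken from the paper.

```python
import random


def bayesian_bootstrap_means(samples, draws=1000, seed=0):
    """Approximate the posterior of the mean danger score for one class.

    Each draw reweights the observed samples with Dirichlet(1, ..., 1)
    weights, generated here by normalizing Exp(1) variates.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(draws):
        raw = [rng.expovariate(1.0) for _ in samples]
        norm = sum(raw)
        means.append(sum(w * x for w, x in zip(raw, samples)) / norm)
    return means


def risk_cost(samples, kappa=1.0, **kwargs):
    """Hypothetical per-class cost: posterior mean plus kappa posterior std devs."""
    post = bayesian_bootstrap_means(samples, **kwargs)
    mu = sum(post) / len(post)
    var = sum((m - mu) ** 2 for m in post) / len(post)
    return mu + kappa * var ** 0.5
```

A planner could then scale the potential around each object class by its `risk_cost`, so that classes with high or inconsistent danger judgments are given a wider berth.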

Bayesian BIM-Guided Construction Robot Navigation with NLP Safety Prompts in Dynamic Environments

Jan 29, 2025

Abstract:Construction robotics increasingly relies on natural language processing for task execution, creating a need for robust methods to interpret commands in complex, dynamic environments. While existing research primarily focuses on what tasks robots should perform, less attention has been paid to how these tasks should be executed safely and efficiently. This paper presents a novel probabilistic framework that uses sentiment analysis from natural language commands to dynamically adjust robot navigation policies in construction environments. The framework leverages Building Information Modeling (BIM) data and natural language prompts to create adaptive navigation strategies that account for varying levels of environmental risk and uncertainty. We introduce an object-aware path planning approach that combines exponential potential fields with a grid-based representation of the environment, where the potential fields are dynamically adjusted based on the semantic analysis of user prompts. The framework employs Bayesian inference to consolidate multiple information sources: the static data from BIM, the semantic content of natural language commands, and the implied safety constraints from user prompts. We demonstrate our approach through experiments comparing three scenarios: baseline shortest-path planning, safety-oriented navigation, and risk-aware routing. Results show that our method successfully adapts path planning based on natural language sentiment, achieving a 50% improvement in minimum distance to obstacles when safety is prioritized, while maintaining reasonable path lengths. Scenarios with contrasting prompts, such as "dangerous" and "safe", demonstrate the framework's ability to modify paths. This approach provides a flexible foundation for integrating human knowledge and safety considerations into construction robot navigation.
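The combination of exponential potential fields with a grid can be sketched as follows. This is an illustrative assumption of one common form, amplitude * exp(-decay * distance) summed over obstacle cells. The function name, parameters, and the idea of raising `amplitude` for a "dangerous" prompt and lowering it for a "safe" one are hypothetical stand-ins for the paper's prompt-driven adjustment.

```python
import math


def potential_grid(width, height, obstacles, amplitude=1.0, decay=1.0):
    """Return grid[y][x] holding the summed exponential potential of all obstacles.

    obstacles: list of (x, y) grid cells occupied by objects.
    amplitude: field strength; a prompt-derived factor could scale this.
    decay: how quickly the potential falls off with Euclidean distance.
    """
    grid = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for ox, oy in obstacles:
                distance = math.hypot(x - ox, y - oy)
                grid[y][x] += amplitude * math.exp(-decay * distance)
    return grid


# Example: the same obstacle under a cautious and a relaxed setting.
cautious = potential_grid(5, 5, [(2, 2)], amplitude=2.0)
relaxed = potential_grid(5, 5, [(2, 2)], amplitude=0.5)
```

A grid planner can add these values to its per-step cost, so a larger amplitude pushes the cheapest path farther from the object while a smaller one allows closer passes.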

BIM-based Safe and Trustworthy Robot Pathfinding using Scalable MHA* Algorithms and Natural Language Processing

Nov 22, 2024

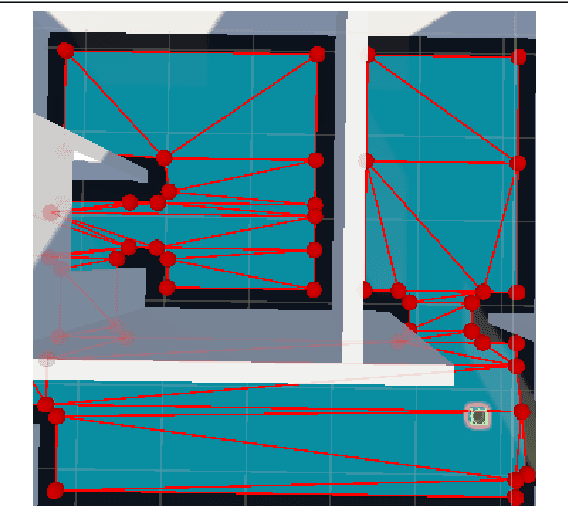

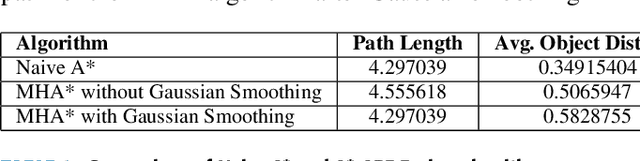

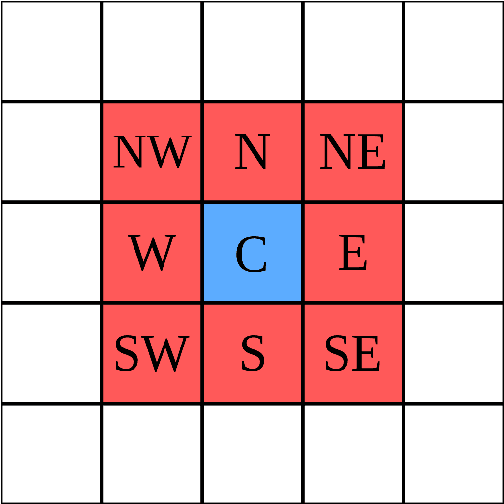

Abstract:Construction robots have gained significant traction in recent years in research and development. However, the application of industrial robots has unique challenges. Dynamic environments, domain-specific tasks, and complex localization and mapping are significant obstacles in their development. In construction job sites, moving objects and complex machinery can make pathfinding a difficult task due to the possibility of object collisions. Existing methods such as simultaneous localization and mapping are viable solutions to this problem; however, due to the precision and data quality required by the sensors and the processing of the information, they can be very computationally expensive. We propose using spatial and semantic information in building information modeling (BIM) to develop domain-specific pathfinding strategies. In this work, we integrate a multi-heuristic A* (MHA*) algorithm using artificial potential fields (APFs) from the BIM spatial information and process textual information from the BIM using large language models (LLMs) to adjust the algorithm for dynamic object avoidance. We show increased robot object proximity by 80% while maintaining similar path lengths.

Adaptive Robot Perception in Construction Environments using 4D BIM

Sep 20, 2024

Abstract:Human Activity Recognition (HAR) is a pivotal component of robot perception for physical Human Robot Interaction (pHRI) tasks. In construction robotics, it is vital that robots have an accurate and robust perception of worker activities. This enhanced perception is the foundation of trustworthy and safe Human-Robot Collaboration (HRC) in an industrial setting. Many developed HAR algorithms lack the robustness and adaptability to ensure seamless HRC. Recent works have employed multi-modal approaches to increase feature considerations. This paper further expands previous research to include 4D building information modeling (BIM) schedule data. We created a pipeline that transforms high-level BIM schedule activities into a set of low-level tasks in real-time. The framework then utilizes this subset as a tool to restrict the solution space that the HAR algorithm can predict activities from. By limiting this subspace through 4D BIM schedule data, the algorithm has a higher chance of predicting the true possible activities from a smaller pool of possibilities in a localized setting as compared to calculating all global possibilities at every point. Results indicate that the proposed approach achieves higher confidence predictions over the base model without leveraging the BIM data.
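One simple way to picture the solution-space restriction is to mask a classifier's output to the activities the schedule currently allows and renormalize. The sketch below does that; the function name, the fallback behaviour, and the activity labels are hypothetical, and the paper's pipeline may restrict predictions differently.

```python
def restrict_to_schedule(probs, allowed):
    """Keep only schedule-permitted activities and renormalize their probabilities.

    probs: dict mapping activity label -> probability from a HAR model.
    allowed: set of labels derived (by assumption) from the 4D BIM schedule.
    Falls back to the unrestricted distribution if no allowed label has mass.
    """
    masked = {label: p for label, p in probs.items() if label in allowed}
    total = sum(masked.values())
    if total == 0.0:
        return dict(probs)
    return {label: p / total for label, p in masked.items()}


# Example with made-up labels: painting is not scheduled in this zone today.
har_output = {"drilling": 0.3, "hammering": 0.3, "painting": 0.4}
restricted = restrict_to_schedule(har_output, {"drilling", "hammering"})
```

In this example the unrestricted model would pick "painting" at 0.4, whereas the restricted distribution splits the mass between the two scheduled activities, each with higher confidence than before.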

Ergonomic Optimization in Worker-Robot Bimanual Object Handover: Implementing REBA Using Reinforcement Learning in Virtual Reality

Mar 18, 2024

Abstract:Robots can serve as safety catalysts on construction job sites by taking over hazardous and repetitive tasks while alleviating the risks associated with existing manual workflows. Research on the safety of physical human-robot interaction (pHRI) is traditionally focused on addressing the risks associated with potential collisions. However, it is equally important to ensure that the workflows involving a collaborative robot are inherently safe, even though they may not result in an accident. For example, pHRI may require the human counterpart to use non-ergonomic body postures to conform to the robot hardware and physical configurations. Frequent and long-term exposure to such situations may result in chronic health issues. Safety and ergonomics assessment measures can be understood by robots if they are presented in algorithmic fashions so optimization for body postures is attainable. While frameworks such as Rapid Entire Body Assessment (REBA) have been an industry standard for many decades, they lack a rigorous mathematical structure, which poses challenges in using them immediately for pHRI safety optimization purposes. Furthermore, learnable approaches have limited robustness outside of their training data, reducing generalizability. In this paper, we propose a novel framework that approaches optimization through Reinforcement Learning, ensuring precise, online ergonomic scores as compared to approximations, while being able to generalize and tune the regimen to any human and any task. To ensure practicality, the training is done in virtual reality utilizing Inverse Kinematics to simulate human movement mechanics. Experimental findings are compared to ergonomically naive object handover heuristics and indicate promising results where the developed framework can find the optimal object handover coordinates in pHRI contexts for manual material handling exemplary situations.

Expanding Frozen Vision-Language Models without Retraining: Towards Improved Robot Perception

Aug 31, 2023

Abstract:Vision-language models (VLMs) have shown powerful capabilities in visual question answering and reasoning tasks by combining visual representations with the abstract skill set large language models (LLMs) learn during pretraining. Vision, while the most popular modality to augment LLMs with, is only one representation of a scene. In human-robot interaction scenarios, robot perception requires accurate scene understanding by the robot. In this paper, we define and demonstrate a method of aligning the embedding spaces of different modalities (in this case, inertial measurement unit (IMU) data) to the vision embedding space through a combination of supervised and contrastive training, enabling the VLM to understand and reason about these additional modalities without retraining. We opt to give the model IMU embeddings directly over using a separate human activity recognition model that feeds directly into the prompt to allow for any nonlinear interactions between the query, image, and IMU signal that would be lost by mapping the IMU data to a discrete activity label. Further, we demonstrate our methodology's efficacy through experiments involving human activity recognition using IMU data and visual inputs. Our results show that using multiple modalities as input improves the VLM's scene understanding and enhances its overall performance in various tasks, thus paving the way for more versatile and capable language models in multi-modal contexts.

Trust in Construction AI-Powered Collaborative Robots: A Qualitative Empirical Analysis

Aug 28, 2023

Abstract:Construction technology researchers and forward-thinking companies are experimenting with collaborative robots (aka cobots), powered by artificial intelligence (AI), to explore various automation scenarios as part of the digital transformation of the industry. Intelligent cobots are expected to be the dominant type of robots in the future of work in construction. However, the black-box nature of AI-powered cobots and unknown technical and psychological aspects of introducing them to job sites are precursors to trust challenges. By analyzing the results of semi-structured interviews with construction practitioners using grounded theory, this paper investigates the characteristics of trustworthy AI-powered cobots in construction. The study found that while the key trust factors identified in a systematic literature review -- conducted previously by the authors -- resonated with the field experts and end users, other factors such as financial considerations and the uncertainty associated with change were also significant barriers against trusting AI-powered cobots in construction.

Robust Activity Recognition for Adaptive Worker-Robot Interaction using Transfer Learning

Aug 28, 2023

Abstract:Human activity recognition (HAR) using machine learning has shown tremendous promise in detecting construction workers' activities. HAR has many applications in human-robot interaction research to enable robots' understanding of human counterparts' activities. However, many existing HAR approaches lack robustness, generalizability, and adaptability. This paper proposes a transfer learning methodology for activity recognition of construction workers that requires orders of magnitude less data and compute time for comparable or better classification accuracy. The developed algorithm transfers features from a model pre-trained by the original authors and fine-tunes them for the downstream task of activity recognition in construction. The model was pre-trained on Kinetics-400, a large-scale video-based human activity recognition dataset with 400 distinct classes. The model was fine-tuned and tested using videos captured from manual material handling (MMH) activities found on YouTube. Results indicate that the fine-tuned model can recognize distinct MMH tasks in a robust and adaptive manner which is crucial for the widespread deployment of collaborative robots in construction.

Assessing Trust in Construction AI-Powered Collaborative Robots using Structural Equation Modeling

Aug 28, 2023

Abstract:This study aimed to investigate the key technical and psychological factors that impact the architecture, engineering, and construction (AEC) professionals' trust in collaborative robots (cobots) powered by artificial intelligence (AI). The study employed a nationwide survey of 600 AEC industry practitioners to gather in-depth responses and valuable insights into the future opportunities for promoting the adoption, cultivation, and training of a skilled workforce to leverage this technology effectively. A Structural Equation Modeling (SEM) analysis revealed that safety and reliability are significant factors for the adoption of AI-powered cobots in construction. Fear of being replaced resulting from the use of cobots can have a substantial effect on the mental health of the affected workers. A lower error rate in jobs involving cobots, safety measurements, and security of data collected by cobots from jobsites significantly impact reliability, while the transparency of cobots' inner workings can benefit accuracy, robustness, security, privacy, and communication, and results in higher levels of automation, all of which were demonstrated to contribute to trust. The study's findings provide critical insights into the perceptions and experiences of AEC professionals towards adoption of cobots in construction and help project teams determine the adoption approach that aligns with the company's goals and workers' welfare.
