Rene Gröbner

Characterizing Stereotypical Bias from Privacy-preserving Pre-Training

Jun 30, 2024

Abstract: Differential Privacy (DP) can be applied to raw text by exploiting the spatial arrangement of words in an embedding space. We investigate the implications of such text privatization on Language Models (LMs) and their tendency towards stereotypical associations. Since previous studies documented that linguistic proficiency correlates with stereotypical bias, one could assume that techniques for text privatization, which are known to degrade language modeling capabilities, would cancel out undesirable biases. By testing BERT models trained on texts containing biased statements primed with varying degrees of privacy, our study reveals that while stereotypical bias generally diminishes when privacy is tightened, text privatization does not uniformly equate to diminishing bias across all social domains. This highlights the need for careful diagnosis of bias in LMs that undergo text privatization.

Documentation Practices of Artificial Intelligence

Jun 26, 2024

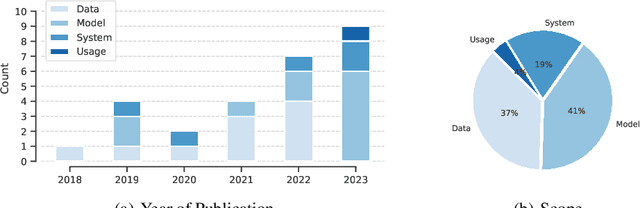

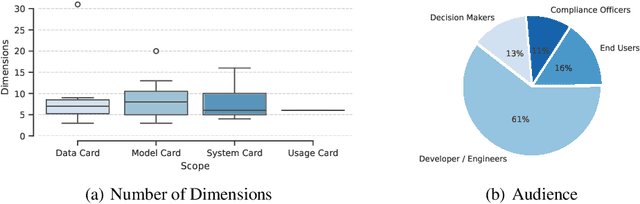

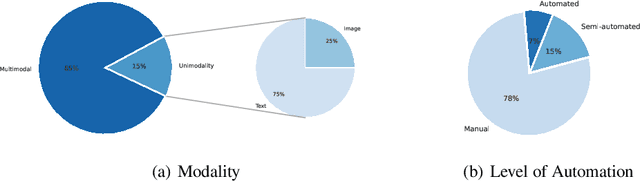

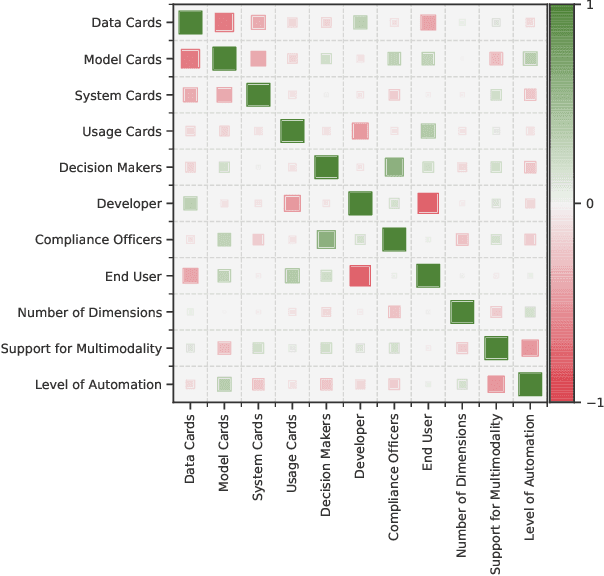

Abstract: Artificial Intelligence (AI) faces persistent challenges in terms of transparency and accountability, which requires rigorous documentation. Through a literature review on documentation practices, we provide an overview of prevailing trends, persistent issues, and the multifaceted interplay of factors influencing the documentation. Our examination of key characteristics such as scope, target audiences, support for multimodality, and level of automation highlights a dynamic evolution in documentation practices, underscored by a shift towards a more holistic, engaging, and automated documentation.
