Ratna Khatri

OCTANE -- Optimal Control for Tensor-based Autoencoder Network Emergence: Explicit Case

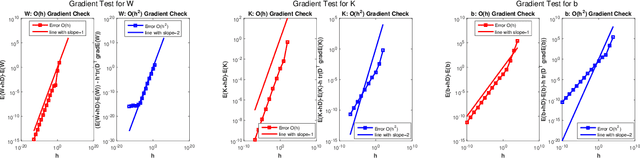

Sep 09, 2025

Abstract: This paper presents a novel, mathematically rigorous framework for autoencoder-type deep neural networks that combines optimal control theory and low-rank tensor methods to yield memory-efficient training and automated architecture discovery. The learning task is formulated as an optimization problem constrained by differential equations representing the encoder and decoder components of the network, and the corresponding optimality conditions are derived via a Lagrangian approach. Efficient memory compression is enabled by approximating differential equation solutions on low-rank tensor manifolds using an adaptive explicit integration scheme. These concepts are combined to form OCTANE (Optimal Control for Tensor-based Autoencoder Network Emergence) -- a unified training framework that yields compact autoencoder architectures, reduces memory usage, and enables effective learning, even with limited training data. The framework's utility is illustrated with applications to image denoising and deblurring tasks, and recommendations regarding the governing hyperparameters are provided.
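For orientation, the Lagrangian approach mentioned in the abstract can be sketched on a generic ODE-constrained learning problem. The symbols below ($Y$, $\theta$, $f$, $\Phi$, $P$, $T$) are illustrative assumptions, not the paper's notation, and the sketch uses a single ODE where the paper works with separate encoder and decoder equations and low-rank tensors.

$$
\min_{\theta}\ \Phi\big(Y(T)\big)
\quad \text{subject to} \quad
\dot{Y}(t) = f\big(Y(t), \theta(t)\big), \quad Y(0) = Y_0 .
$$

Introducing an adjoint variable $P$ and the Lagrangian

$$
\mathcal{L}(Y, \theta, P) = \Phi\big(Y(T)\big) - \int_0^T \big\langle P(t),\ \dot{Y}(t) - f\big(Y(t), \theta(t)\big) \big\rangle \, dt ,
$$

and setting its variations with respect to $P$, $Y$, and $\theta$ to zero gives the state equation, the adjoint equation, and the gradient with respect to the parameters:

$$
\dot{Y} = f(Y, \theta), \qquad
-\dot{P} = \big(\partial_Y f(Y, \theta)\big)^{\top} P, \quad P(T) = \nabla \Phi\big(Y(T)\big), \qquad
\nabla_{\theta} \mathcal{L} = \big(\partial_{\theta} f(Y, \theta)\big)^{\top} P .
$$

In this generic setting the adjoint equation is solved backward in time, which plays the role of backpropagation once the ODE is discretized into layers.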

Fractional Deep Neural Network via Constrained Optimization

Apr 01, 2020

Abstract: This paper introduces a novel algorithmic framework for a deep neural network (DNN) which, in a mathematically rigorous manner, allows us to incorporate history (or memory) into the network -- it ensures all layers are connected to one another. This DNN, called Fractional-DNN, can be viewed as a time-discretization of a fractional-in-time nonlinear ordinary differential equation (ODE). The learning problem is then a minimization problem with that fractional ODE as the constraint. We emphasize that the analogy between existing DNNs and ODEs with the standard time derivative is well known by now. The focus of our work is the Fractional-DNN. Using the Lagrangian approach, we provide a derivation of the backward propagation and the design equations. We test our network on several datasets for classification problems. Fractional-DNN offers various advantages over existing DNNs. The key benefits are a significant improvement to the vanishing gradient issue due to the memory effect, and better handling of nonsmooth data due to the network's ability to approximate nonsmooth functions.
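To make the "all layers are connected" idea concrete, here is a minimal sketch of forward propagation through a time-discretized fractional ODE. It assumes a Caputo derivative of order `gamma` in (0, 1] discretized with the standard L1 scheme and an explicit step; the abstract does not state which discretization the paper uses. The names `l1_weights`, `fractional_forward`, and the layer function `f` are hypothetical.

```python
import math


def l1_weights(gamma, n):
    """L1-scheme weights: a_0 = 1, a_m = (m+1)^(1-gamma) - m^(1-gamma) for m >= 1."""
    return [1.0] + [
        (m + 1) ** (1.0 - gamma) - m ** (1.0 - gamma) for m in range(1, n)
    ]


def fractional_forward(u0, f, gamma, tau, num_layers):
    """Propagate the state u0 through num_layers layers (assumed explicit L1 scheme).

    Update rule:
        u_{j+1} = u_j - sum_{k=0}^{j-1} a_{j-k} (u_{k+1} - u_k)
                  + tau^gamma * Gamma(2 - gamma) * f(u_j, j)
    The sum is the memory term: every earlier layer feeds into layer j+1.
    """
    a = l1_weights(gamma, num_layers)
    scale = tau ** gamma * math.gamma(2.0 - gamma)
    states = [list(u0)]
    for j in range(num_layers):
        u_j = states[j]
        # Accumulate the weighted increments of all previous layers.
        history = [0.0] * len(u_j)
        for k in range(j):
            w = a[j - k]
            for i in range(len(u_j)):
                history[i] += w * (states[k + 1][i] - states[k][i])
        f_j = f(u_j, j)
        states.append(
            [u_j[i] - history[i] + scale * f_j[i] for i in range(len(u_j))]
        )
    return states


if __name__ == "__main__":
    # Toy layer function (an assumption): elementwise tanh with fixed weights.
    layer = lambda u, j: [math.tanh(0.5 * x + 0.1) for x in u]
    for state in fractional_forward([1.0, -0.5], layer, 0.5, 0.1, 4):
        print(state)
```

With `gamma = 1.0` the history weights vanish and the update reduces to the familiar residual step `u_{j+1} = u_j + tau * f(u_j)`, which is the standard DNN-ODE analogy the abstract refers to. For `gamma < 1` the history term is nonzero, so each layer receives contributions from all earlier ones.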

Bilevel Optimization, Deep Learning and Fractional Laplacian Regularization with Applications in Tomography

Jul 22, 2019

Abstract: In this work we consider a generalized bilevel optimization framework for solving inverse problems. We introduce the fractional Laplacian as a regularizer to improve the reconstruction quality, and compare it with total variation regularization. We emphasize that the key advantage of using the fractional Laplacian as a regularizer is that it leads to a linear operator, as opposed to total variation regularization, which results in a nonlinear degenerate operator. Inspired by residual neural networks, to learn the optimal strength of regularization and the exponent of the fractional Laplacian, we develop a dedicated bilevel optimization neural network with a variable depth for a general regularized inverse problem. We also draw some parallels between an activation function in a neural network and regularization. We illustrate how to incorporate various regularizer choices into our proposed network. As an example, we consider tomographic reconstruction as a model problem and show an improvement in reconstruction quality, especially for limited data, via fractional Laplacian regularization. We successfully learn the regularization strength and the fractional exponent via our proposed bilevel optimization neural network. We observe that fractional Laplacian regularization outperforms total variation regularization. This is especially encouraging, and important, in the case of limited and noisy data.
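The linear-versus-nonlinear contrast can be seen from the first-order optimality conditions of a generic regularized inverse problem. The formulation below is a sketch under assumptions: the forward operator $K$, data $f$, regularization strength $\lambda$, and exponent $s$ are illustrative symbols, and the quadratic form of the fractional regularizer is assumed rather than taken from the abstract.

$$
\min_{u}\ \tfrac{1}{2}\,\|Ku - f\|^2 + \tfrac{\lambda}{2}\,\big\|(-\Delta)^{s/2} u\big\|^2
\quad \Longrightarrow \quad
K^{*}(Ku - f) + \lambda\,(-\Delta)^{s} u = 0 ,
$$

which is linear in $u$. For total variation, the (formal) condition is

$$
\min_{u}\ \tfrac{1}{2}\,\|Ku - f\|^2 + \lambda \int |\nabla u| \, dx
\quad \Longrightarrow \quad
K^{*}(Ku - f) - \lambda\, \nabla \cdot \left( \frac{\nabla u}{|\nabla u|} \right) = 0 ,
$$

which is nonlinear in $u$ and degenerates where $\nabla u = 0$.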
