Ranwa Al Mallah

A Comparative Evaluation of Teacher-Guided Reinforcement Learning Techniques for Autonomous Cyber Operations

Aug 20, 2025

Abstract: Autonomous Cyber Operations (ACO) rely on Reinforcement Learning (RL) to train agents to make effective decisions in the cybersecurity domain. However, existing ACO applications require agents to learn from scratch, leading to slow convergence and poor early-stage performance. While teacher-guided techniques have demonstrated promise in other domains, they have not yet been applied to ACO. In this study, we implement four distinct teacher-guided techniques in the simulated CybORG environment and conduct a comparative evaluation. Our results demonstrate that teacher integration can significantly improve training efficiency in terms of early policy performance and convergence speed, highlighting its potential benefits for autonomous cybersecurity.

Blockchain-based Monitoring for Poison Attack Detection in Decentralized Federated Learning

Sep 30, 2022

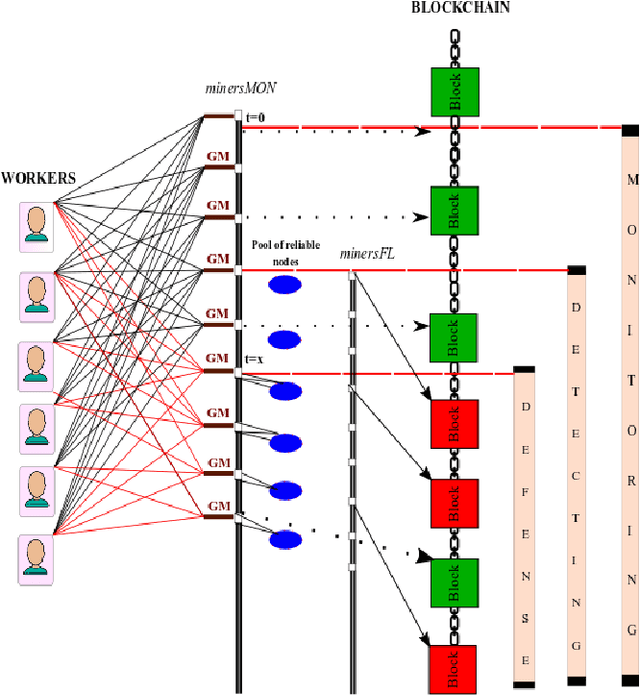

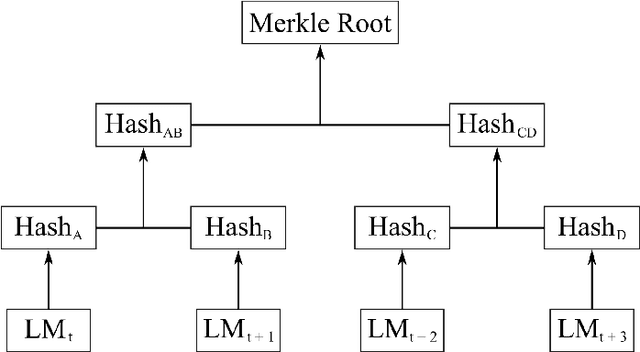

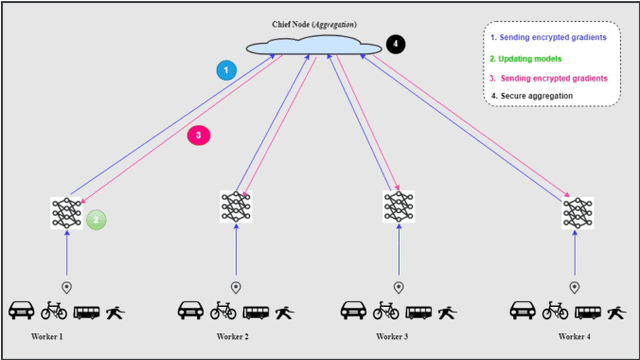

Abstract: Federated Learning (FL) is a machine learning technique that addresses privacy challenges related to access rights over local datasets by training a model across nodes that hold their data samples locally. To achieve decentralized federated learning, blockchain-based FL was proposed as a distributed FL architecture. In decentralized FL, the chief is eliminated from the learning process, and workers collaborate with each other to train the global model. Decentralized FL applications need to account for the additional delay incurred by blockchain-based FL deployments. In this setting, we investigate the end-to-end learning completion latency of a realistic decentralized FL process protected against targeted and untargeted poisoning attacks. We propose a technique that decouples the monitoring phase from the detection phase in defenses that monitor worker behavior to counter poisoning attacks in a decentralized federated learning deployment. We demonstrate that our proposed blockchain-based monitoring improved network scalability, robustness, and time efficiency. Parallelizing operations minimizes latency across the end-to-end communication, computation, and consensus delays incurred during the FL and blockchain operations.

eFedDNN: Ensemble based Federated Deep Neural Networks for Trajectory Mode Inference

May 11, 2022

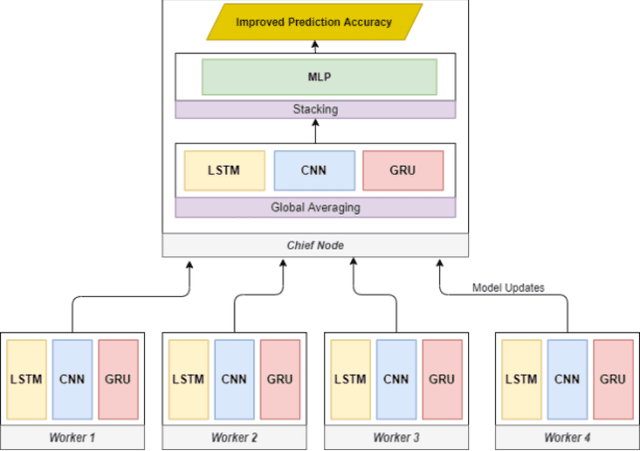

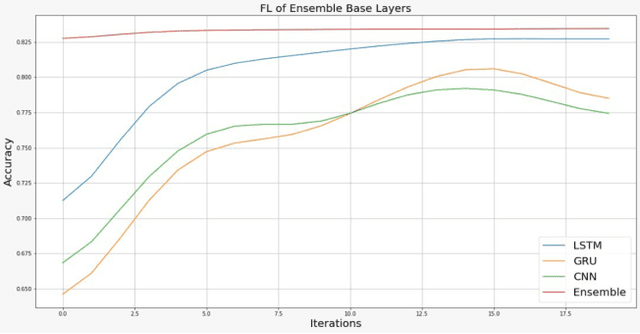

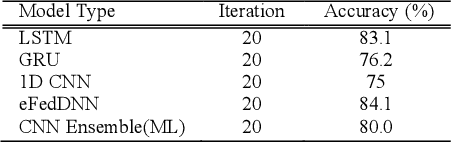

Abstract: As the most significant data source in smart mobility systems, GPS trajectories can help identify user travel mode. However, these GPS datasets may contain users' private information (e.g., home location), preventing many users from sharing their private information with a third party. Hence, identifying travel modes while protecting users' privacy is a significant issue. To address this challenge, we use federated learning (FL), a privacy-preserving machine learning technique that aims at collaboratively training a robust global model by accessing users' locally trained models but not their raw data. Specifically, we designed a novel ensemble-based Federated Deep Neural Network (eFedDNN). The ensemble method combines the outputs of the different models learned via FL by the users and shows an accuracy that surpasses comparable models reported in the literature. Extensive experimental studies on a real-world open-access dataset from Montreal demonstrate that the proposed inference model can achieve accurate identification of users' mode of travel without compromising privacy.
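The abstract does not say how the ensemble combines model outputs. As a minimal sketch of one common option, assuming soft voting (averaging per-class probabilities), the example below uses hypothetical names (`soft_vote`, `predict_mode`) and made-up travel-mode labels and scores; it is not the eFedDNN implementation.

```python
from typing import List, Sequence


def soft_vote(prob_rows: Sequence[Sequence[float]]) -> List[float]:
    """Average per-class probabilities across models (soft voting, an assumption)."""
    if not prob_rows:
        raise ValueError("need at least one model output")
    n_classes = len(prob_rows[0])
    if any(len(row) != n_classes for row in prob_rows):
        raise ValueError("all models must score the same classes")
    return [
        sum(row[c] for row in prob_rows) / len(prob_rows)
        for c in range(n_classes)
    ]


def predict_mode(prob_rows: Sequence[Sequence[float]], labels: Sequence[str]) -> str:
    """Return the label with the highest averaged probability."""
    avg = soft_vote(prob_rows)
    best = max(range(len(avg)), key=avg.__getitem__)
    return labels[best]


# Hypothetical labels and model outputs, for illustration only.
labels = ["walk", "bike", "car"]
outputs = [
    [0.6, 0.3, 0.1],  # model A
    [0.2, 0.5, 0.3],  # model B
    [0.5, 0.4, 0.1],  # model C
]
predicted = predict_mode(outputs, labels)
```

Averaging probabilities rather than taking a majority vote lets a confident model outweigh two uncertain ones; other combination rules would fit the same interface.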

On the Initial Behavior Monitoring Issues in Federated Learning

Sep 11, 2021

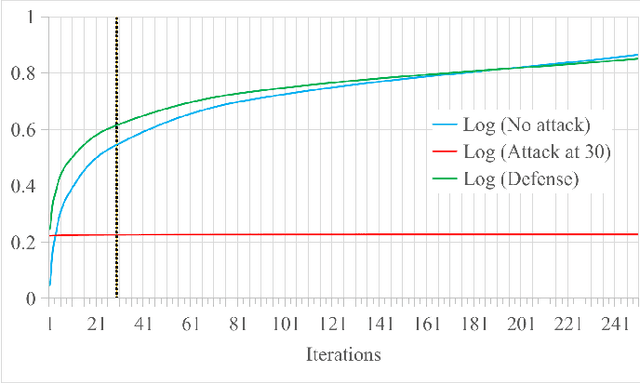

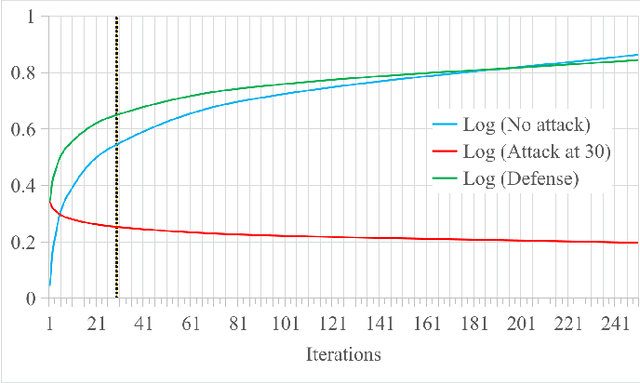

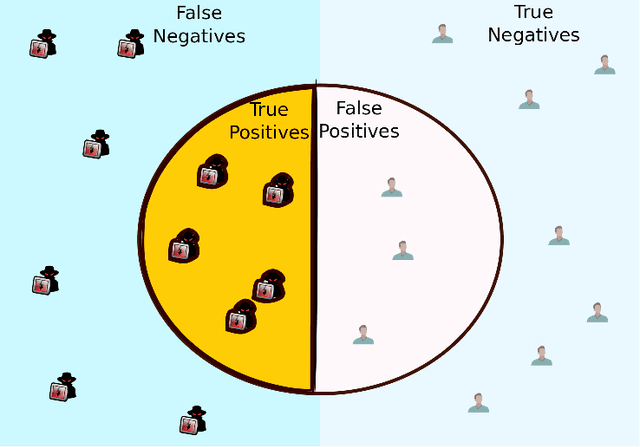

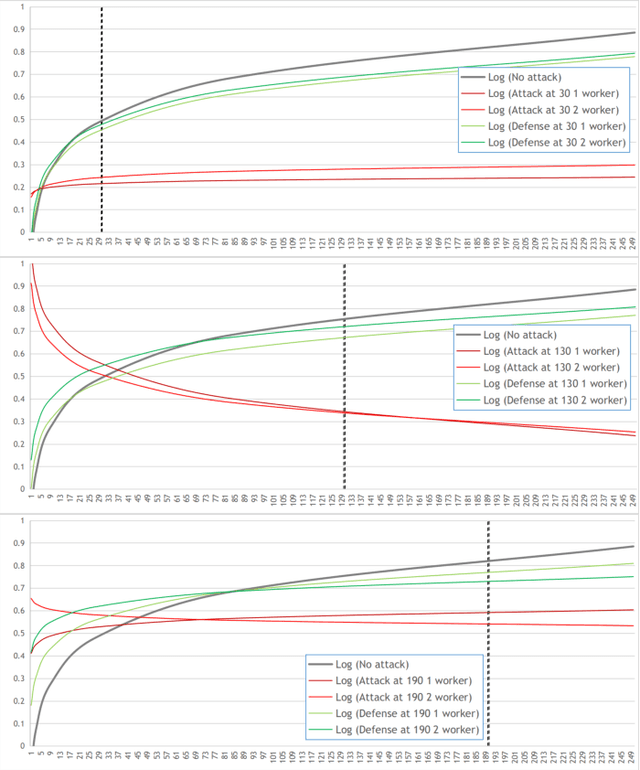

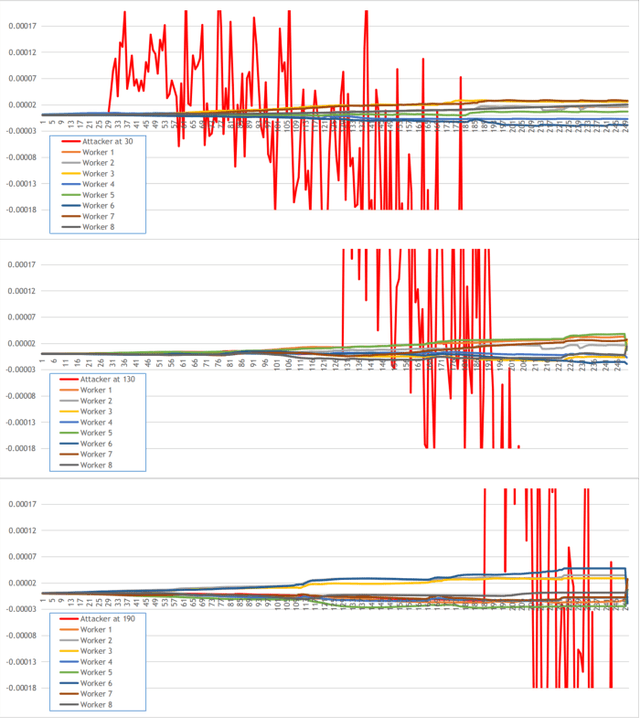

Abstract: In Federated Learning (FL), a group of workers participate to build a global model under the coordination of one node, the chief. Regarding the cybersecurity of FL, some attacks aim at injecting fabricated local model updates into the system. Some defenses are based on malicious worker detection and behavioral pattern analysis. In this context, without timely and dynamic monitoring methods, the chief cannot detect and remove the malicious or unreliable workers from the system. Our work emphasizes the urgency of preparing the federated learning process for monitoring and, eventually, behavioral pattern analysis. We study the information inside the learning process in the early stages of training, propose a monitoring process, and evaluate the monitoring period required. The aim is to analyse at what time it is appropriate to start the detection algorithm in order to remove the malicious or unreliable workers from the system and optimise the defense mechanism deployment. We tested our strategy on a behavioral pattern analysis defense applied to the FL process of different benchmark systems for text and image classification. Our results show that the monitoring process lowers false positives and false negatives and consequently increases system efficiency by enabling the distributed learning system to achieve better performance in the early stage of training.

Cybersecurity Threats in Connected and Automated Vehicles based Federated Learning Systems

Feb 26, 2021

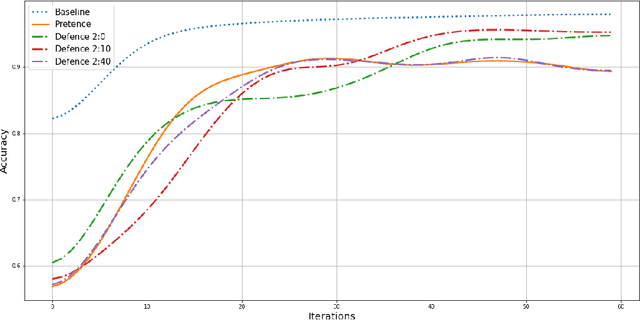

Abstract: Federated learning (FL) is a machine learning technique that aims at training an algorithm across decentralized entities that keep their local data private. Wireless mobile networks allow users to communicate with other fixed or mobile users. The road traffic network represents an infrastructure-based configuration of a wireless mobile network where the Connected and Automated Vehicles (CAV) represent the communicating entities. Applying FL in a wireless mobile network setting gives rise to a new threat in the mobile environment that is very different from those in traditional fixed networks. The threat is due to the intrinsic characteristics of the wireless medium and is caused by the characteristics of vehicular networks such as high node mobility and rapidly changing topology. Most cyber defense techniques depend on highly reliable and connected networks. This paper explores falsified information attacks, which target the FL process that is ongoing at the RSU. We identified a number of attack strategies conducted by malicious CAVs to disrupt the training of the global model in vehicular networks. We show that the attacks were able to increase the convergence time and decrease the accuracy of the model. We demonstrate that our attacks bypass FL defense strategies in their primary form and highlight the need for novel poisoning resilience defense mechanisms in the wireless mobile setting of future road networks.

Untargeted Poisoning Attack Detection in Federated Learning via Behavior Attestation

Jan 28, 2021

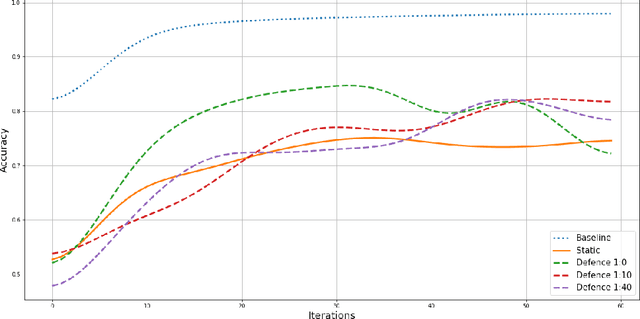

Abstract: Federated Learning (FL) is a paradigm in Machine Learning (ML) that addresses data privacy, security, access rights and access to heterogeneous information issues by training a global model using distributed nodes. Despite its advantages, there is an increased potential for cyberattacks on FL-based ML techniques that can undermine the benefits. Model-poisoning attacks on FL target the availability of the model. The adversarial objective is to disrupt the training. We propose attestedFL, a defense mechanism that monitors the training of individual nodes through state persistence in order to detect a malicious worker. A fine-grained assessment of the history of the worker permits the evaluation of its behavior over time and results in innovative detection strategies. We present three lines of defense that aim at assessing whether the worker is reliable by observing whether the node is really training and advancing towards a goal. Our defense exposes an attacker's malicious behavior and removes unreliable nodes from the aggregation process so that the FL process converges faster. Through extensive evaluations and against various adversarial settings, attestedFL increased the accuracy of the model by 12% to 58% under different scenarios such as attacks performed at different stages of convergence, attackers colluding, and continuous attacks.
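To illustrate only the final step the abstract mentions, removing unreliable nodes from aggregation, here is a minimal sketch. It assumes the set of unreliable workers has already been produced by some detector and that updates are combined with an unweighted mean; the function name `aggregate_reliable` and the sample data are hypothetical, and none of attestedFL's detection logic is shown.

```python
from typing import Dict, List, Set


def aggregate_reliable(
    updates: Dict[str, List[float]], unreliable: Set[str]
) -> List[float]:
    """Average worker updates, skipping workers flagged as unreliable.

    Assumption: an unweighted element-wise mean stands in for the real
    aggregation rule, which the abstract does not specify.
    """
    kept = [u for worker, u in updates.items() if worker not in unreliable]
    if not kept:
        raise ValueError("no reliable workers left to aggregate")
    dim = len(kept[0])
    if any(len(u) != dim for u in kept):
        raise ValueError("all updates must have the same length")
    return [sum(u[i] for u in kept) / len(kept) for i in range(dim)]


# Hypothetical updates: w3 sends an outlier and has been flagged upstream.
updates = {
    "w1": [1.0, 2.0],
    "w2": [3.0, 4.0],
    "w3": [100.0, -100.0],
}
global_update = aggregate_reliable(updates, unreliable={"w3"})
```

Keeping detection and aggregation separate means the same aggregation step can be reused with any detector that outputs a set of worker identifiers.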

Prediction of Traffic Flow via Connected Vehicles

Jul 10, 2020

Abstract: We propose a Short-term Traffic flow Prediction (STP) framework so that transportation authorities can take early action to control flow and prevent congestion. We anticipate flow at future time frames on a target road segment based on historical flow data and innovative features such as real-time feeds and trajectory data provided by Connected Vehicles (CV) technology. Because existing approaches do not adapt to variation in traffic, we show how this novel approach enables advanced modelling by integrating into the flow forecast the impact of the various events that CVs realistically encounter on segments along their trajectory. We solve the STP problem with a Deep Neural Network (DNN) in a multitask learning setting augmented by input from CV. Results show that our approach, namely MTL-CV, with an average Root-Mean-Square Error (RMSE) of 0.052, outperforms state-of-the-art ARIMA time series (RMSE of 0.255) and baseline classifiers (RMSE of 0.122). Single-task learning with an Artificial Neural Network (ANN) also performed worse than MTL-CV, with an RMSE of 0.113. MTL-CV learned historical similarities between segments, in contrast to using direct historical trends in the measure, because trends may not exist in the measure but do in the similarities.
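For readers unfamiliar with the metric used in these comparisons, RMSE is the square root of the mean squared difference between observed and predicted values, so lower is better. A minimal sketch, with a hypothetical function name and toy inputs rather than the paper's data:

```python
import math
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    squared_errors = ((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(sum(squared_errors) / len(actual))


# Toy values for illustration only; every prediction is off by exactly 1.
error = rmse([0.0, 0.0], [1.0, 1.0])
```

Because the errors are squared before averaging, a few large misses raise RMSE more than many small ones.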
