David Lopez

Blockchain-based Monitoring for Poison Attack Detection in Decentralized Federated Learning

Sep 30, 2022

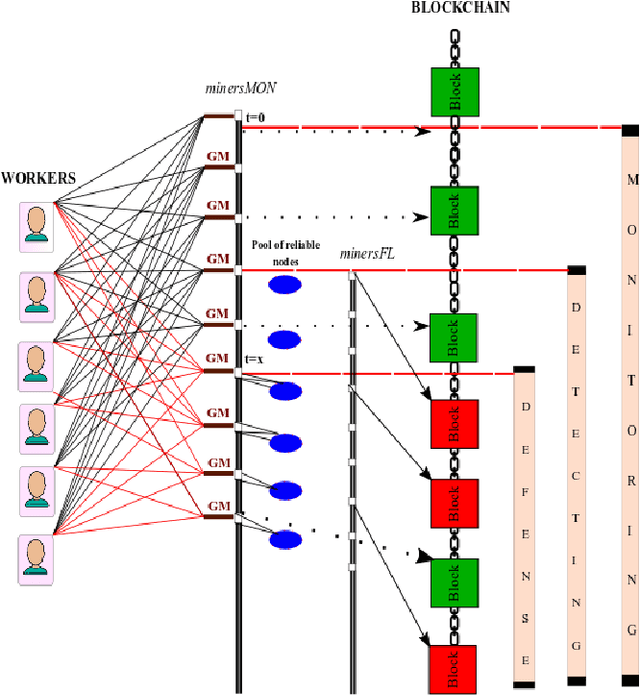

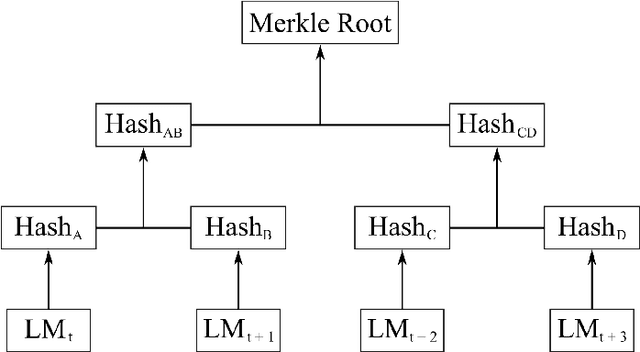

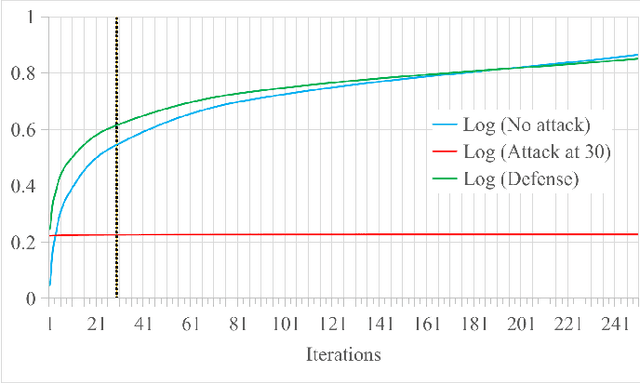

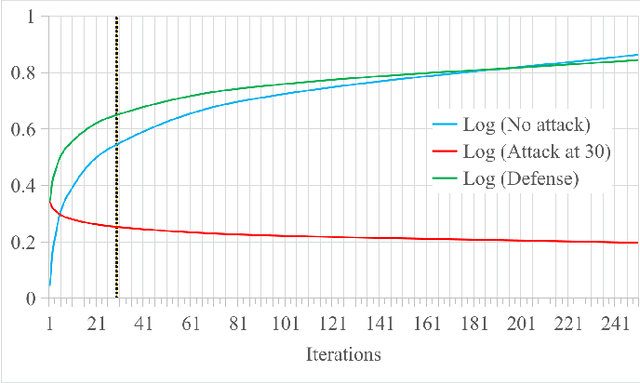

Abstract: Federated Learning (FL) is a machine learning technique that addresses privacy challenges around access rights to local datasets by training a model across nodes that hold their data samples locally. Blockchain-based FL was proposed as a distributed architecture for achieving decentralized federated learning. In decentralized FL, the chief is eliminated from the learning process and workers collaborate with each other to train the global model. Decentralized FL applications need to account for the additional delay incurred by blockchain-based FL deployments. In this setting, we investigate the end-to-end learning completion latency of a realistic decentralized FL process protected against targeted and untargeted poisoning attacks. For defenses that monitor the behavior of the workers in a decentralized FL deployment, we propose a technique that decouples the monitoring phase from the detection phase. We demonstrate that the proposed blockchain-based monitoring improves network scalability, robustness, and time efficiency. Parallelizing operations minimizes the end-to-end latency made up of the communication, computation, and consensus delays incurred during the FL and blockchain operations.

Untargeted Poisoning Attack Detection in Federated Learning via Behavior Attestation

Jan 28, 2021

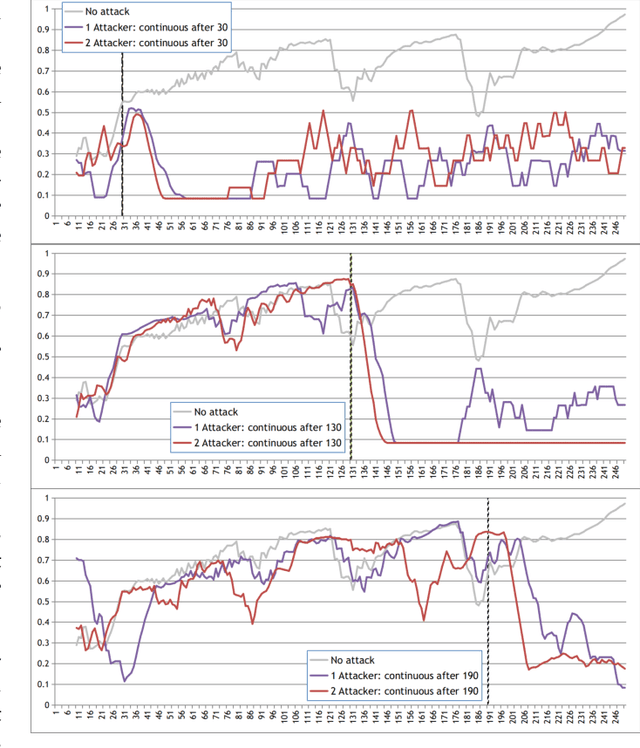

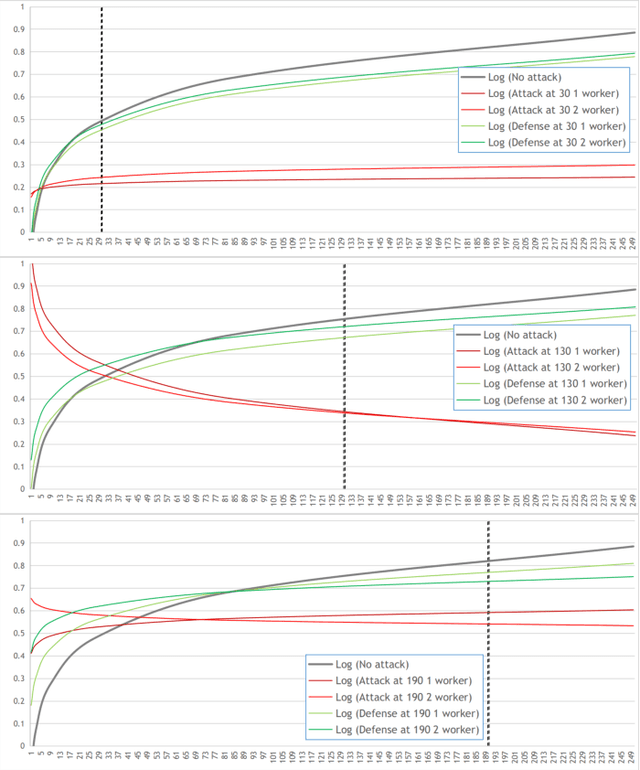

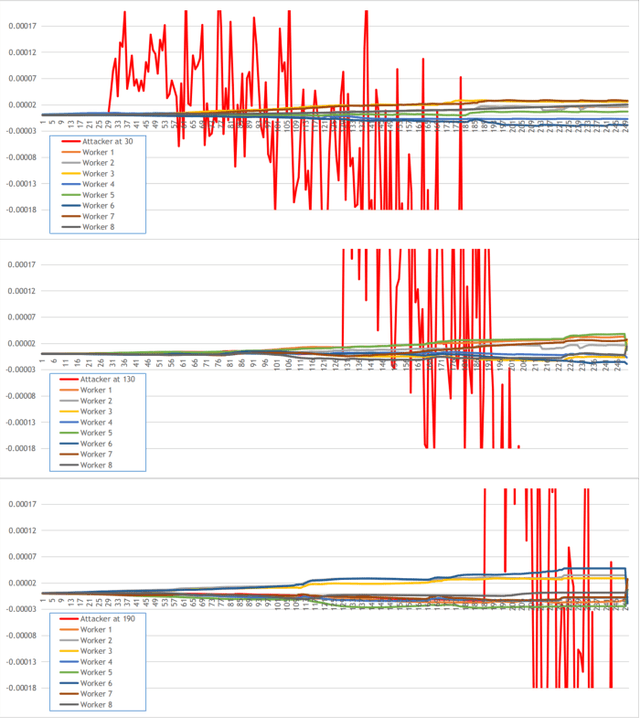

Abstract: Federated Learning (FL) is a paradigm in Machine Learning (ML) that addresses issues of data privacy, security, access rights, and access to heterogeneous information by training a global model using distributed nodes. Despite its advantages, there is an increased potential for cyberattacks on FL-based ML techniques that can undermine the benefits. Model-poisoning attacks on FL target the availability of the model. The adversarial objective is to disrupt the training. We propose attestedFL, a defense mechanism that monitors the training of individual nodes through state persistence in order to detect a malicious worker. A fine-grained assessment of the history of the worker permits the evaluation of its behavior over time and results in innovative detection strategies. We present three lines of defense that aim at assessing whether the worker is reliable by observing whether the node is really training and advancing towards a goal. Our defense exposes an attacker's malicious behavior and removes unreliable nodes from the aggregation process so that the FL process converges faster. Through extensive evaluations and against various adversarial settings, attestedFL increased the accuracy of the model by 12% to 58% under different scenarios such as attacks performed at different stages of convergence, attackers colluding, and continuous attacks.
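The idea of keeping a per-worker history and dropping unreliable nodes before aggregation can be illustrated with a minimal sketch. This is not the attestedFL implementation, and it does not reproduce the paper's three lines of defense. The names `is_reliable` and `aggregate`, the error-history representation, and the `window` and `tolerance` parameters are all assumptions made for illustration: a worker is treated as "really training" if its recorded error has not risen over the last few rounds, and only such workers are averaged.

```python
from typing import Dict, List


def is_reliable(error_history: List[float], window: int = 3,
                tolerance: float = 0.0) -> bool:
    """Hypothetical reliability check (an assumption, not the paper's rule).

    The worker counts as training if its error over the last `window`
    rounds has not increased by more than `tolerance`.
    """
    if len(error_history) < window:
        return True  # too little history to judge; keep the worker for now
    recent = error_history[-window:]
    return recent[-1] - recent[0] <= tolerance


def aggregate(updates: Dict[str, List[float]],
              histories: Dict[str, List[float]]) -> List[float]:
    """Plain average of the weight vectors of workers that pass the check."""
    kept = [weights for name, weights in updates.items()
            if is_reliable(histories.get(name, []))]
    if not kept:
        raise ValueError("no reliable workers to aggregate")
    dim = len(kept[0])
    return [sum(w[i] for w in kept) / len(kept) for i in range(dim)]


# Illustrative data: worker "m" shows a rising error and is excluded.
updates = {"a": [1.0, 2.0], "b": [3.0, 4.0], "m": [100.0, -100.0]}
histories = {"a": [0.9, 0.7, 0.5], "b": [0.8, 0.6, 0.6], "m": [0.5, 0.9, 1.4]}
global_weights = aggregate(updates, histories)
```

In this toy run only workers `a` and `b` contribute to `global_weights`. A real deployment would need a more careful criterion, since a single trend test like this one is easy to evade.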
