Rana Hammad Raza

A Robust Deep Networks based Multi-Object MultiCamera Tracking System for City Scale Traffic

May 01, 2025

Abstract:Vision sensors are becoming more important in Intelligent Transportation Systems (ITS) for traffic monitoring, management, and optimization as the number of network cameras continues to rise. However, manual object tracking and matching across multiple non-overlapping cameras pose significant challenges in city-scale urban traffic scenarios. These challenges include handling diverse vehicle attributes, occlusions, illumination variations, shadows, and varying video resolutions. To address these issues, we propose an efficient and cost-effective deep learning-based framework for Multi-Object Multi-Camera Tracking (MO-MCT). The proposed framework utilizes Mask R-CNN for object detection and employs Non-Maximum Suppression (NMS) to select target objects from overlapping detections. Transfer learning is employed for re-identification, enabling the association and generation of vehicle tracklets across multiple cameras. Moreover, we leverage appropriate loss functions and distance measures to handle occlusion, illumination, and shadow challenges. The final solution identification module performs feature extraction using ResNet-152 coupled with Deep SORT based vehicle tracking. The proposed framework is evaluated on the 5th AI City Challenge dataset (Track 3), comprising 46 camera feeds. Among these 46 camera streams, 40 are used for model training and validation, while the remaining six are utilized for model testing. The proposed framework achieves competitive performance with an IDF1 score of 0.8289, and precision and recall scores of 0.9026 and 0.8527 respectively, demonstrating its effectiveness in robust and accurate vehicle tracking.
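The NMS step mentioned in the abstract can be illustrated with a minimal greedy sketch. The abstract does not give the paper's NMS settings, so the box format `(x1, y1, x2, y2)`, the function names `iou` and `nms`, and the 0.5 IoU threshold are assumptions made here for illustration only.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if
    it does not overlap an already kept box by more than the threshold.
    Returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes on one vehicle, one elsewhere.
detections = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
confidences = [0.9, 0.8, 0.7]
kept = nms(detections, confidences)  # indices [0, 2]
```

The lower-scoring duplicate at index 1 is suppressed because its IoU with the top-scoring box (81/119) exceeds the assumed threshold.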

Fine-Grained Vehicle Classification in Urban Traffic Scenes using Deep Learning

Nov 17, 2021

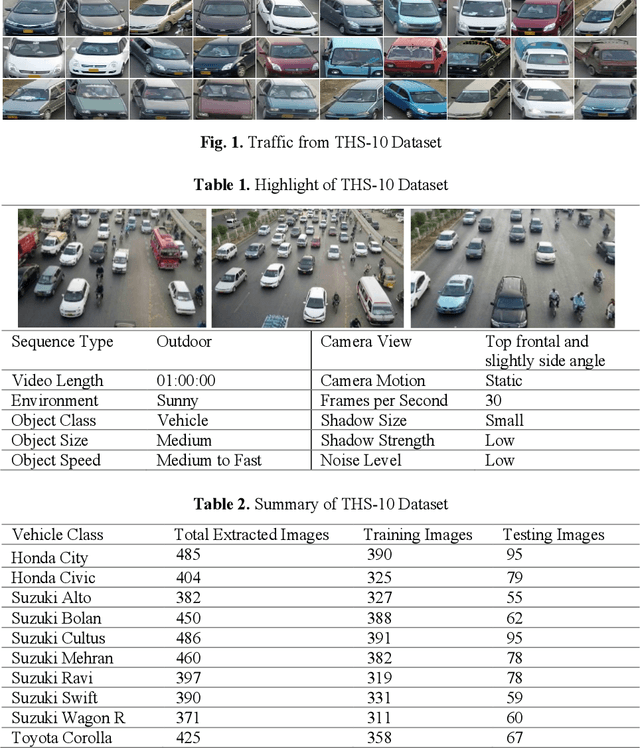

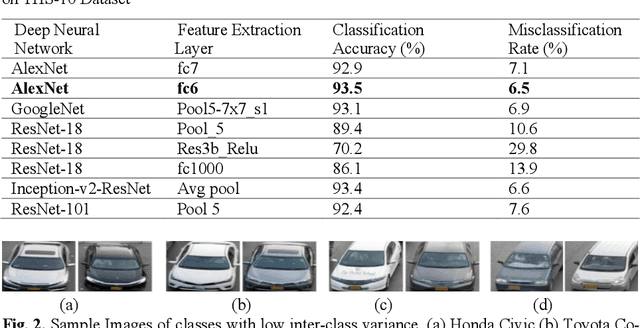

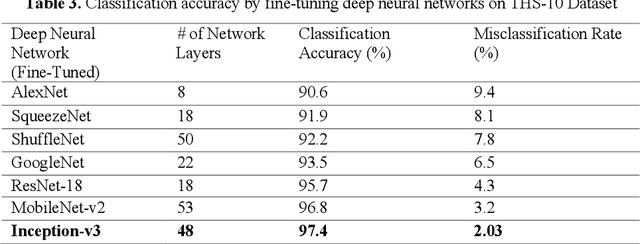

Abstract:Increasingly dense traffic is becoming a challenge in our local settings, creating the need for a better traffic monitoring and management system. Fine-grained vehicle classification is a more challenging task than coarse vehicle classification, so a robust approach for vehicle detection and classification into fine-grained categories is required. Existing Vehicle Make and Model Recognition (VMMR) systems have been developed for synchronized and controlled traffic conditions. Robust VMMR in complex, urban, heterogeneous, and unsynchronized traffic conditions remains an open research area. In this paper, vehicle detection and fine-grained classification are addressed using deep learning. To perform fine-grained classification with the related complexities, a local dataset, THS-10, having high intra-class and low inter-class variation, is exclusively prepared. The dataset consists of 4250 vehicle images of 10 vehicle models, i.e., Honda City, Honda Civic, Suzuki Alto, Suzuki Bolan, Suzuki Cultus, Suzuki Mehran, Suzuki Ravi, Suzuki Swift, Suzuki Wagon R, and Toyota Corolla. This dataset is available online. Two approaches have been explored and analyzed for classification of vehicles, i.e., fine-tuning and feature extraction from deep neural networks. A comparative study is performed, and it is demonstrated that simpler approaches can produce good results in the local environment when dealing with complex issues such as dense occlusion and lane departures, thereby reducing computational load and time. For example, fine-tuning Inception-v3 produced the highest accuracy of 97.4% with the lowest misclassification rate of 2.08%. Fine-tuning MobileNet-v2 and ResNet-18 produced 96.8% and 95.7% accuracies, respectively. Extracting features from the fc6 layer of AlexNet produced an accuracy of 93.5% with a misclassification rate of 6.5%.
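As a rough illustration of how accuracy and misclassification figures of this kind can be computed, the sketch below scores a list of predictions against ground-truth labels. The abstract does not define its misclassification rate, so the definition used here (one minus accuracy), the function name `evaluate`, and the example predictions are all assumptions; only the vehicle model names come from the abstract.

```python
from collections import Counter
from typing import Dict, List, Tuple


def evaluate(y_true: List[str], y_pred: List[str]
             ) -> Tuple[float, float, Dict[Tuple[str, str], int]]:
    """Return (accuracy, misclassification rate, confusion counts).

    Misclassification rate is assumed to be 1 - accuracy. The confusion
    counts map (true label, predicted label) to the number of wrong
    predictions, which shows which fine-grained classes get mixed up.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and equally long")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    accuracy = correct / len(y_true)
    confusions = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return accuracy, 1.0 - accuracy, dict(confusions)


# Hypothetical predictions for four test images.
truth = ["Honda City", "Honda Civic", "Suzuki Alto", "Suzuki Alto"]
predicted = ["Honda City", "Honda City", "Suzuki Alto", "Suzuki Alto"]
acc, err, confusions = evaluate(truth, predicted)
```

In this made-up example one of four images is wrong, and the confusion counts record that a Honda Civic was predicted as a Honda City.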

Effectiveness of State-of-the-Art Super Resolution Algorithms in Surveillance Environment

Jul 08, 2021

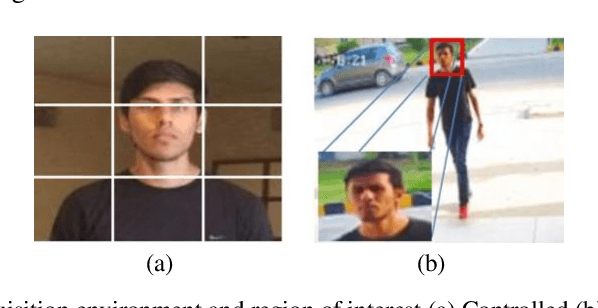

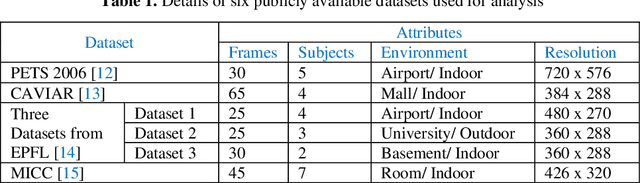

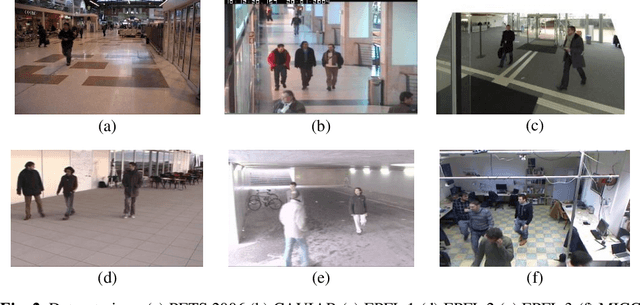

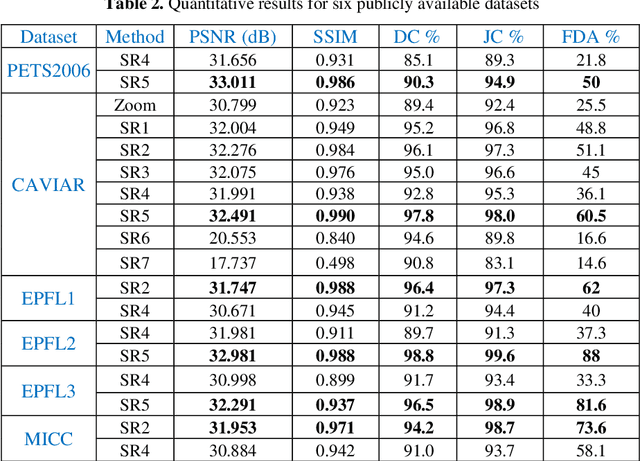

Abstract:Image Super Resolution (SR) finds applications in areas where images need to be closely inspected by the observer to extract enhanced information. One such focused application is an offline forensic analysis of surveillance feeds. Due to the limitations of camera hardware, camera pose, limited bandwidth, varying illumination conditions, and occlusions, the quality of the surveillance feed is significantly degraded at times, thereby compromising monitoring of behavior, activities, and other sporadic information in the scene. For the proposed research work, we have inspected the effectiveness of four conventional yet effective SR algorithms and three deep learning-based SR algorithms to seek the finest method that executes well in a surveillance environment with limited training data options. These algorithms generate an enhanced resolution output image from a single low-resolution (LR) input image. For performance analysis, a subset of 220 images from six surveillance datasets has been used, consisting of individuals with varying distances from the camera, changing illumination conditions, and complex backgrounds. The performance of these algorithms has been evaluated and compared using both qualitative and quantitative metrics. These SR algorithms have also been compared based on face detection accuracy. By analyzing and comparing the performance of all the algorithms, a Convolutional Neural Network (CNN) based SR technique using an external dictionary proved to be best by achieving robust face detection accuracy and scoring optimal quantitative metric results under different surveillance conditions. This is because the CNN layers progressively learn more complex features using an external dictionary.
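The abstract does not name the quantitative metrics it used. Peak signal-to-noise ratio (PSNR) is a common choice for comparing a super-resolved image against a reference, so the following minimal sketch assumes it purely for illustration; the function name `psnr`, the nested-list image representation, and the 8-bit peak value of 255 are likewise assumptions.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[Sequence[float]],
         estimate: Sequence[Sequence[float]],
         max_value: float = 255.0) -> float:
    """PSNR in dB between two equally sized grayscale images.

    Images are given as rows of pixel intensities. Identical images have
    zero error, for which PSNR is reported as infinity.
    """
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    if len(ref) != len(est) or not ref:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - e) ** 2 for r, e in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 patches: the estimate is off by one grey level everywhere.
reference_patch = [[10, 20], [30, 40]]
estimated_patch = [[11, 21], [31, 41]]
score = psnr(reference_patch, estimated_patch)
```

A higher PSNR means the estimate is closer to the reference in a pixel-wise sense, which is not necessarily the same as better perceptual quality or better face detection accuracy.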

Performance Evaluation of Advanced Deep Learning Architectures for Offline Handwritten Character Recognition

Mar 15, 2020

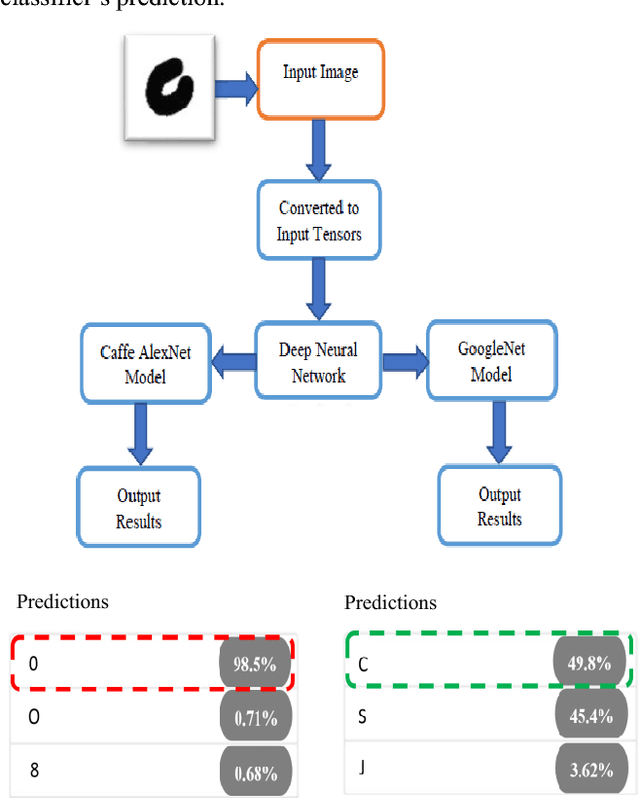

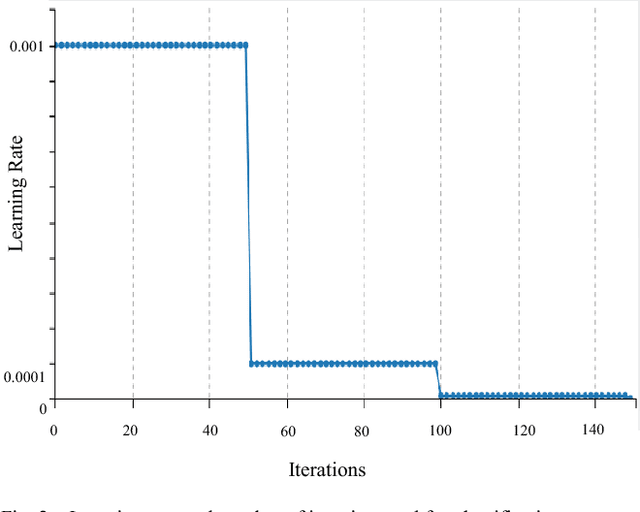

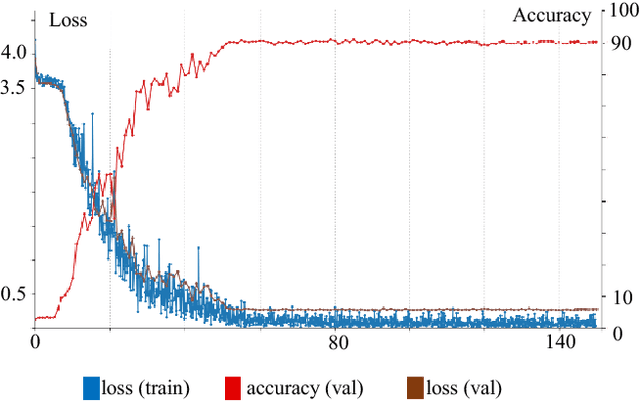

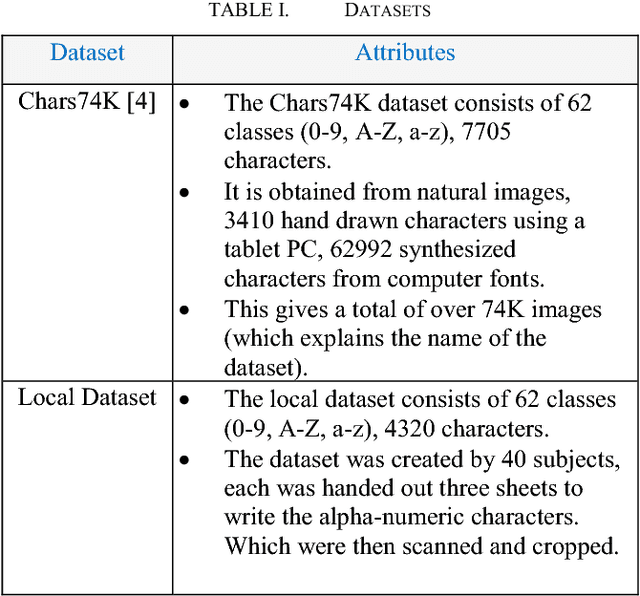

Abstract:This paper presents a comparison and performance evaluation of handwritten character recognition for robust and precise classification of different handwritten characters. The system utilizes an advanced multilayer deep neural network that collects features from raw pixel values. The hidden layers stack deep hierarchies of non-linear features, since learning complex features with conventional neural networks is very challenging. Two state-of-the-art deep learning architectures were used, namely the Caffe AlexNet and GoogleNet models in NVIDIA DIGITS. The frameworks were trained and tested on two different datasets to incorporate diversity and complexity. One is the publicly available Chars74K dataset, comprising 7705 characters and containing upper- and lowercase English letters along with numerical digits. The other dataset, created locally, consists of 4320 characters in 62 classes and was created by 40 subjects. It also contains upper- and lowercase English letters along with numerical digits. The overall dataset is divided in the ratio of 80% for training and 20% for testing. The time required for the training phase is approximately 90 minutes. For validation, the results obtained were compared with the ground truth. The accuracy achieved was 77.77% with AlexNet and 88.89% with GoogleNet. The higher accuracy of GoogleNet is due to its unique combination of inception modules, each including pooling, convolutions at various scales, and concatenation procedures.
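The 80/20 division described above can be sketched as a shuffled index split. The abstract does not say how samples were assigned to each part, so the random shuffle, the fixed seed, and the function name `split_indices` are assumptions; the sample count of 4320 is the size of the local dataset and is used only as an example.

```python
import random
from typing import List, Tuple


def split_indices(n_samples: int, train_percent: int = 80,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle sample indices and split them into train and test parts.

    Integer arithmetic keeps the split sizes exact, and the seed makes
    the shuffle repeatable.
    """
    if n_samples <= 0 or not 0 < train_percent < 100:
        raise ValueError("need n_samples > 0 and 0 < train_percent < 100")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = n_samples * train_percent // 100
    return indices[:n_train], indices[n_train:]


# Example with the size of the local dataset (4320 characters).
train_idx, test_idx = split_indices(4320)
```

With 4320 samples this gives 3456 training and 864 test indices. A per-class (stratified) split may be preferable when classes are small, but the abstract does not indicate whether one was used.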

Automatic Lesion Detection System (ALDS) for Skin Cancer Classification Using SVM and Neural Classifiers

Mar 13, 2020

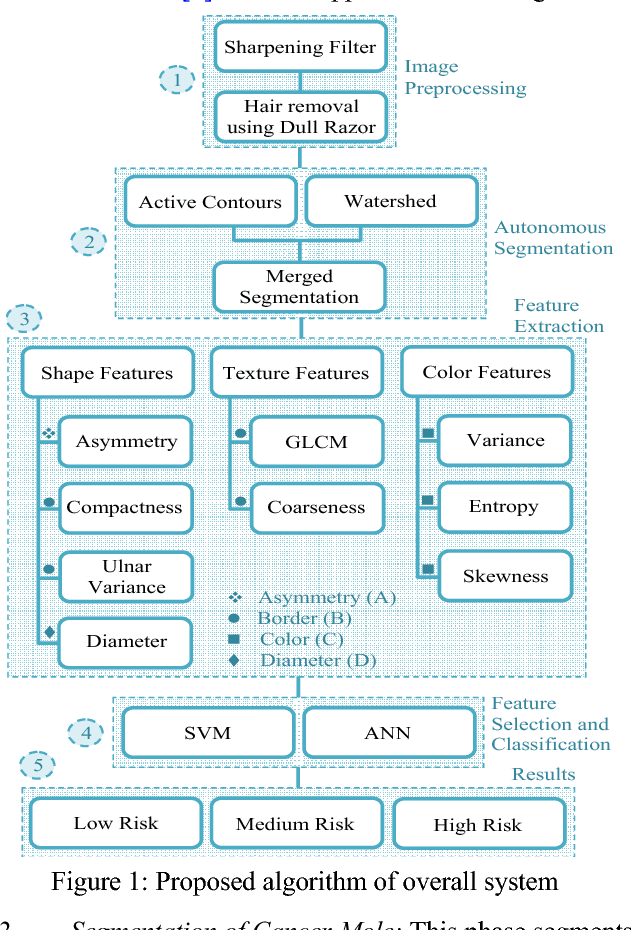

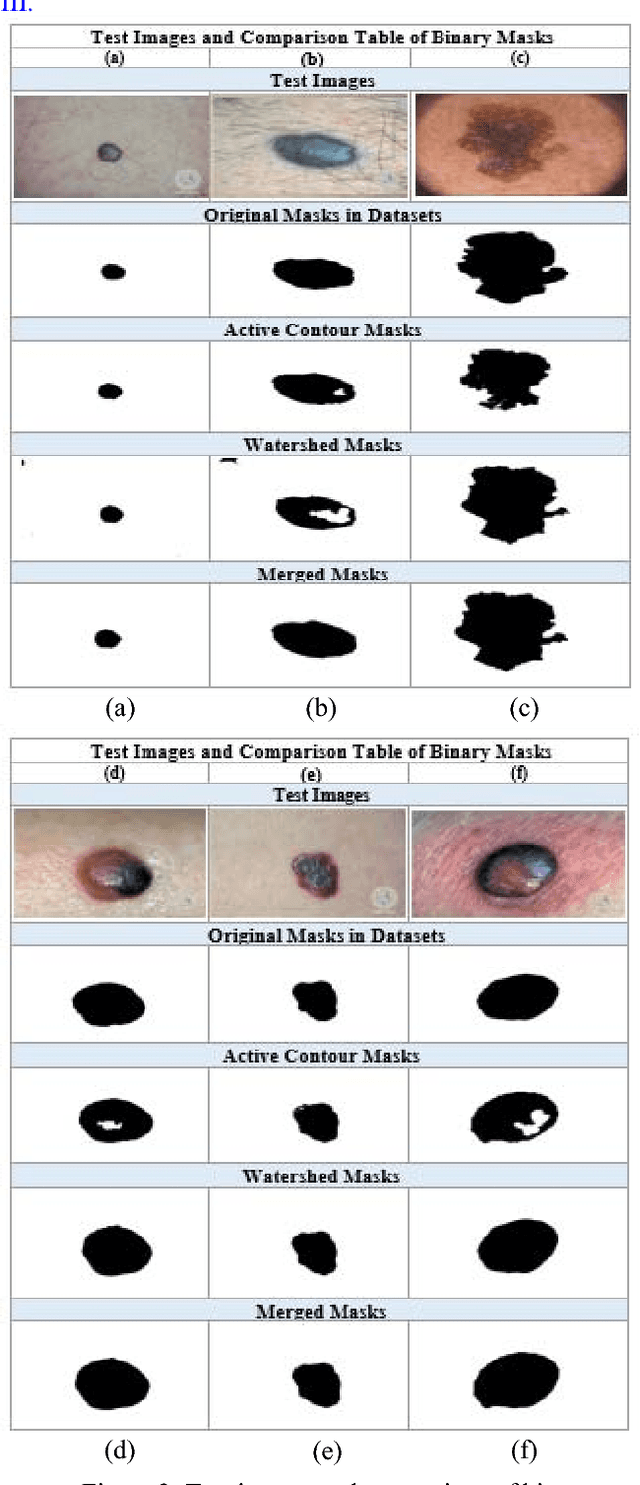

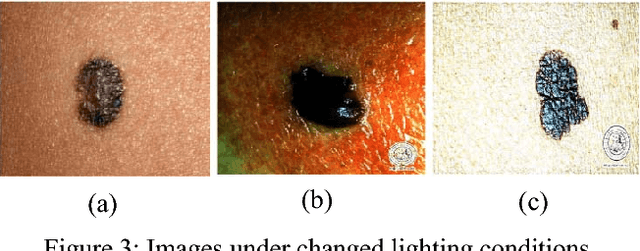

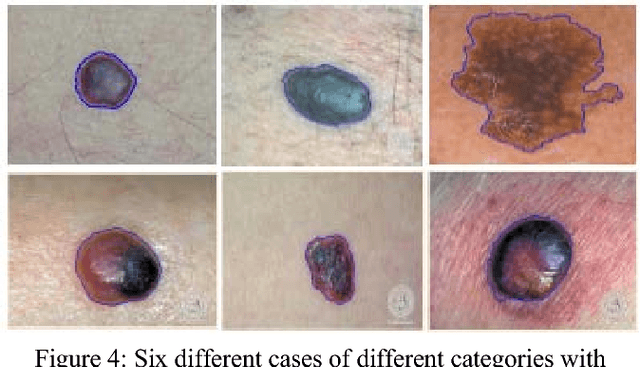

Abstract:Technology-aided platforms provide reliable tools in almost every field these days. Supported by computational power, these tools are significant for applications that need sensitive and precise data analysis. One such important application in the medical field is the Automatic Lesion Detection System (ALDS) for skin cancer classification. Computer-aided diagnosis helps physicians and dermatologists obtain a second opinion for proper analysis and treatment of skin cancer. Precise segmentation of the cancerous mole along with the surrounding area is essential for proper analysis and diagnosis. This paper focuses on the development of an improved ALDS framework based on a probabilistic approach that first uses a merged mask from active contours and watershed to segment the mole, and then applies SVM and neural classifiers to classify the segmented mole. After lesion segmentation, the selected features are classified to ascertain whether the case under consideration is melanoma or non-melanoma. The approach is tested on varying datasets, and a comparative analysis is performed that reflects the effectiveness of the proposed system.
