Ramin Hasibi

A Pipeline of Augmentation and Sequence Embedding for Classification of Imbalanced Network Traffic

Feb 26, 2025

Abstract: Network Traffic Classification (NTC) is one of the most important tasks in network management. The imbalanced nature of classes on the internet presents a critical challenge in classification tasks. For example, some classes of applications, such as HTTP, are much more prevalent than others. As a result, machine learning classification models do not perform well on the classes with less data. To address this problem, we propose a pipeline to balance the dataset and classify it using a robust and accurate embedding technique. First, we generate artificial data using Long Short-Term Memory (LSTM) networks and Kernel Density Estimation (KDE). Next, we propose replacing one-hot encoding for categorical features with a novel embedding framework based on the "Flow as a Sentence" perspective, which we name FS-Embedding. This framework treats the source and destination ports, along with the packet's direction, as one word in a flow, then trains an embedding vector space based on these new features through the learning classification task. Finally, we compare our pipeline with the training of a Convolutional Recurrent Neural Network (CRNN) and Transformers, both with imbalanced and sampled datasets, as well as with the one-hot encoding approach. We demonstrate that the proposed augmentation pipeline, combined with FS-Embedding, increases convergence speed and leads to a significant reduction in the number of model parameters, all while maintaining the same performance in terms of accuracy.
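The "Flow as a Sentence" idea can be illustrated with a minimal sketch. It assumes a hypothetical packet record with `src_port`, `dst_port`, and `direction` fields and a hypothetical `src_dst_direction` word format; neither is taken from the paper. The embedding table here is only randomly initialized, whereas the abstract describes learning it through the classification task, so the training step is omitted.

```python
import random

def flow_to_words(packets):
    """Turn each packet into one 'word' built from ports and direction (assumed format)."""
    return [f"{p['src_port']}_{p['dst_port']}_{p['direction']}" for p in packets]

def build_vocab(flows):
    """Assign an integer index to every distinct word; index 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for flow in flows:
        for word in flow_to_words(flow):
            vocab.setdefault(word, len(vocab))
    return vocab

def init_embeddings(vocab_size, dim, seed=0):
    """Randomly initialized lookup table; a real model would train these vectors."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]

def embed_flow(flow, vocab, table):
    """Replace each word of a flow by its embedding vector."""
    return [table[vocab.get(word, 0)] for word in flow_to_words(flow)]

# Hypothetical example flows, for illustration only.
flows = [
    [{"src_port": 51000, "dst_port": 443, "direction": "out"},
     {"src_port": 443, "dst_port": 51000, "direction": "in"}],
    [{"src_port": 51001, "dst_port": 53, "direction": "out"}],
]
vocab = build_vocab(flows)
table = init_embeddings(len(vocab), dim=4)
embedded = embed_flow(flows[0], vocab, table)
```

Each word is represented by a dense vector of length `dim` rather than a one-hot vector as long as the vocabulary, which gives an intuition for why an embedding can reduce the number of input-layer parameters.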

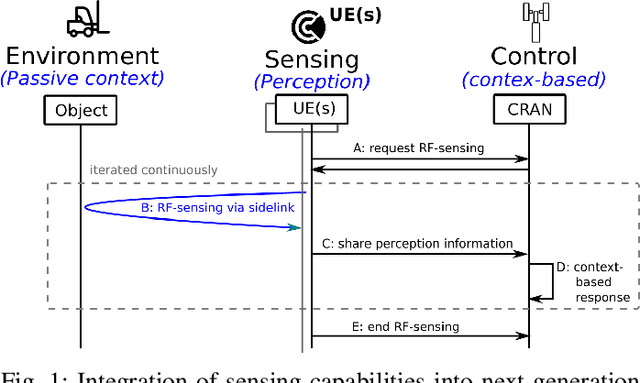

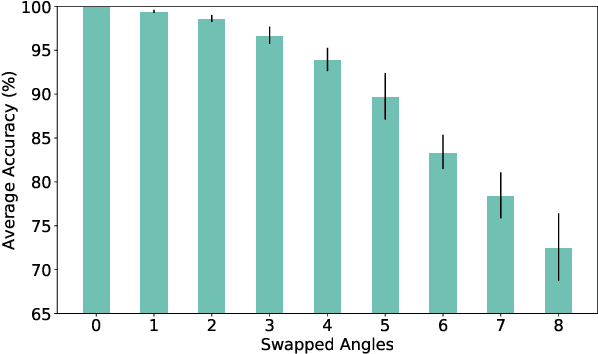

Integrating Sensing and Communication in Cellular Networks via NR Sidelink

Sep 15, 2021

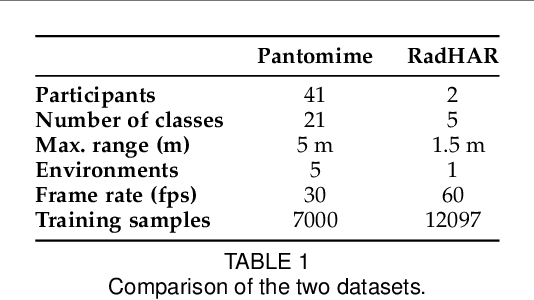

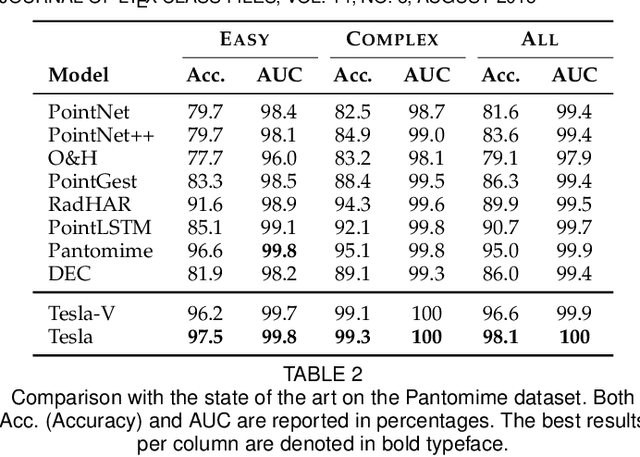

Abstract: RF sensing, the analysis and interpretation of movement- or environment-induced patterns in received electromagnetic signals, has been actively investigated for more than a decade. Since electromagnetic signals, through cellular communication systems, are omnipresent, RF sensing has the potential to become a universal sensing mechanism with applications in smart home, retail, localization, gesture recognition, intrusion detection, etc. Specifically, existing cellular network installations might be dual-used for both communication and sensing. Such convergence of communication and sensing is envisioned for future communication networks. We propose the use of NR-sidelink direct device-to-device communication to achieve device-initiated, flexible sensing capabilities in beyond-5G cellular communication systems. In this article, we specifically investigate a common issue related to sidelink-based RF sensing, which is its angle and rotation dependence. In particular, we discuss transformations of mmWave point-cloud data which achieve rotational invariance, as well as distributed processing based on such rotationally invariant inputs at angle- and distance-diverse devices. To process the distributed data, we propose a graph-based encoder to capture spatio-temporal features of the data and propose four approaches for multi-angle learning. The approaches are compared on a newly recorded and openly available dataset comprising 15 subjects performing 21 gestures, recorded from 8 angles.
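To make the notion of rotational invariance concrete, here is a small sketch of one generic rotation-invariant description of a point cloud: the sorted distances of the points to their centroid. This is an illustrative choice, not necessarily one of the transformations discussed in the article, and the function names are hypothetical.

```python
import math

def centroid(points):
    """Mean position of a list of 3D points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))

def radial_features(points):
    """Sorted point-to-centroid distances; unchanged by any rotation of the cloud."""
    c = centroid(points)
    return sorted(math.dist(p, c) for p in points)

def rotate_z(points, angle):
    """Rotate every point by `angle` radians around the z-axis."""
    ca, sa = math.cos(angle), math.sin(angle)
    return [(ca * x - sa * y, sa * x + ca * y, z) for x, y, z in points]

# Hypothetical point cloud, for illustration only.
cloud = [(1.0, 0.0, 0.5), (0.0, 2.0, 0.1), (-1.5, 0.5, 0.0), (0.3, -0.7, 1.2)]
rotated = rotate_z(cloud, 1.234)

original_features = radial_features(cloud)
rotated_features = radial_features(rotated)
```

Because a rotation moves the centroid together with the points, the two feature lists agree up to floating-point error, so a model fed these features would see the same input from either sensing angle.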

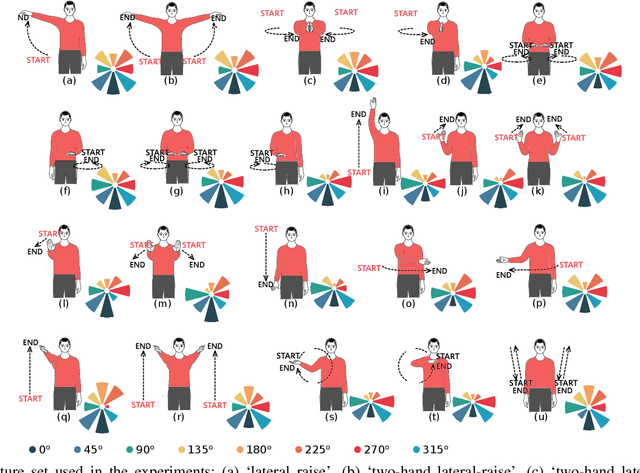

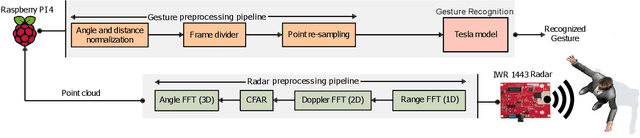

Tesla-Rapture: A Lightweight Gesture Recognition System from mmWave Radar Point Clouds

Sep 14, 2021

Abstract: We present Tesla-Rapture, a gesture recognition interface for point clouds generated by mmWave radars. State-of-the-art gesture recognition models are either too resource-consuming or not sufficiently accurate for integration into real-life scenarios using wearable or constrained equipment such as IoT devices (e.g., Raspberry Pi), XR hardware (e.g., HoloLens), or smartphones. To tackle this issue, we developed Tesla, a Message Passing Neural Network (MPNN) graph convolution approach for mmWave radar point clouds. The model outperforms the state of the art on two datasets in terms of accuracy while reducing the computational complexity and, hence, the execution time. In particular, the approach is able to predict a gesture almost 8 times faster than the most accurate competitor. Our performance evaluation in different scenarios (environments, angles, distances) shows that Tesla generalizes well and improves the accuracy by up to 20% in challenging scenarios such as a through-wall setting and sensing at extreme angles. Utilizing Tesla, we develop Tesla-Rapture, a real-time implementation using a mmWave radar on a Raspberry Pi 4, and evaluate its accuracy and time complexity. We also publish the source code, the trained models, and the implementation of the model for embedded devices.
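For readers unfamiliar with message passing on point clouds, the following sketch shows one generic, parameter-free message passing step over a k-nearest-neighbor graph. It is not the Tesla architecture: the message (feature difference), the max aggregation, and the additive update are assumptions chosen for illustration, and a real MPNN would apply learned transformations at each stage.

```python
import math

def knn_graph(points, k):
    """For each point, list the indices of its k nearest neighbors (excluding itself)."""
    neighbors = []
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        neighbors.append(others[:k])
    return neighbors

def message_passing_step(features, neighbors):
    """One step: message = neighbor - own feature, aggregate = element-wise max,
    update = own feature + aggregated message."""
    updated = []
    for i, nbrs in enumerate(neighbors):
        own = features[i]
        messages = [[fj - fi for fi, fj in zip(own, features[j])] for j in nbrs]
        aggregated = [max(column) for column in zip(*messages)]
        updated.append([fi + a for fi, a in zip(own, aggregated)])
    return updated

# Hypothetical point cloud; the coordinates double as initial node features.
points = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (5, 5, 5)]
neighbors = knn_graph(points, k=2)
updated = message_passing_step([list(p) for p in points], neighbors)
```

Stacking several such steps lets each point's feature depend on progressively larger neighborhoods, which is the general mechanism graph convolutions rely on.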

Predicting gene expression from network topology using graph neural networks

May 08, 2020

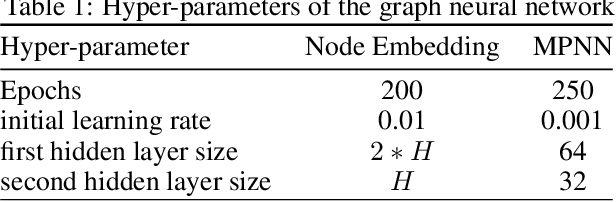

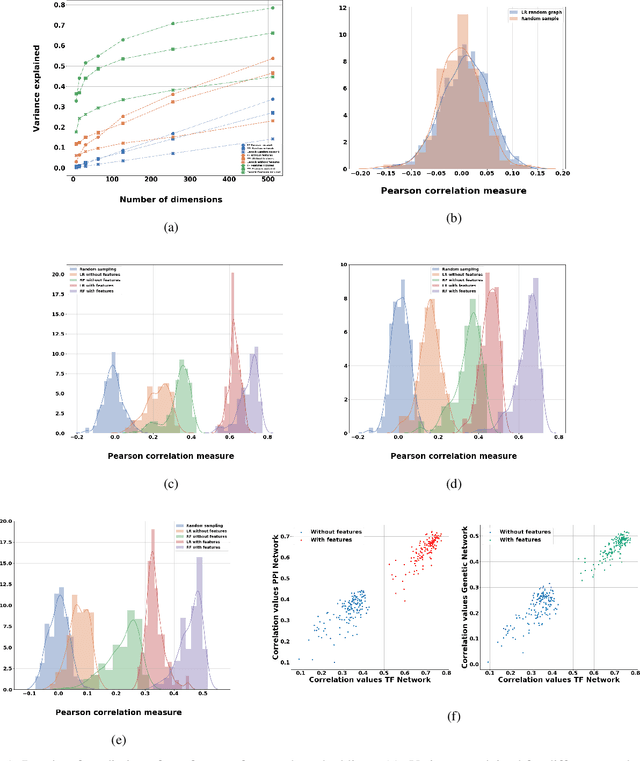

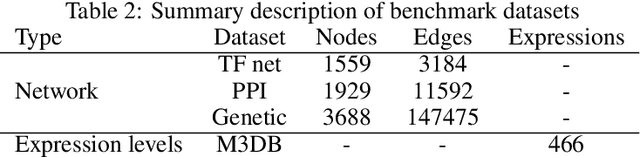

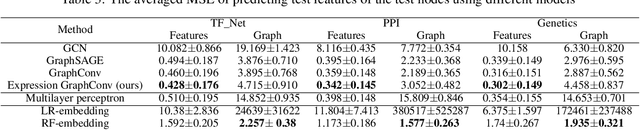

Abstract: Motivation: It is known that the structure of transcription and protein interaction networks is informative of their biological function at multiple scales. However, thus far it has not been possible to systematically connect network topology to gene expression in a quantitative way. Results: We investigated whether there is a relationship between interaction networks and gene expression values by using a graph convolutional auto-encoder and two end-to-end learning approaches for three interaction networks and hundreds of experimental conditions in the model organism *E. coli*. Graph neural networks (GNNs) use a message passing framework to learn an embedding of a graph in a continuous space, either using network topology alone or including additional node features. We found that graph embeddings trained on transcription and protein-protein interaction (PPI) networks can explain more than 50 and 40 percent, respectively, of the variance in gene expression data, thus confirming the relationship between network structure and gene expression value. Additionally, for the task of predicting gene expression values using GNNs, with and without additional expression training data, we found that the message passing scheme of GNNs obtains the lowest mean squared error among the tested models, both in prediction of unseen test values and in an auto-encoder scheme for reconstruction of the feature matrix of expression values.

Augmentation Scheme for Dealing with Imbalanced Network Traffic Classification Using Deep Learning

Jan 01, 2019

Abstract: One of the most important tasks in network management is identifying different types of traffic flows. As a result, a type of management service called Network Traffic Classifier (NTC) has been introduced. One type of NTC that has gained huge attention in recent years applies deep learning on packets in order to classify flows. The Internet is an imbalanced environment, i.e., some classes of applications, such as HTTP, are far more populated than others. Additionally, one of the challenges in deep learning methods is that they do not perform well in imbalanced environments in terms of evaluation metrics such as precision, recall, and $\mathrm{F_1}$ measure. In order to solve this problem, we recommend the use of augmentation methods to balance the dataset. In this paper, we propose a novel data augmentation approach based on the use of Long Short-Term Memory (LSTM) networks for generating traffic flow patterns and Kernel Density Estimation (KDE) for replicating the numerical features of each class. First, we use the LSTM network to learn and generate the sequence of packets in a flow for classes with less population. Then, we complete the features of the sequence by generating random values based on the distribution of each feature, which is estimated using KDE. Finally, we compare the training of a Convolutional Recurrent Neural Network (CRNN) on large-scale imbalanced, sampled, and augmented datasets. The contribution of our augmentation scheme is then evaluated on all of the datasets through measurements of precision, recall, and $\mathrm{F_1}$ measure for every class of application. The results demonstrate that our scheme is well suited for network traffic flow datasets and improves the performance of deep learning algorithms on the above-mentioned metrics.
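The KDE step can be sketched as follows, assuming a Gaussian kernel and Silverman's rule-of-thumb bandwidth; the abstract does not state which kernel or bandwidth the paper uses, and the function names and sample values are hypothetical. Drawing from a Gaussian KDE amounts to picking an observed value at random and adding Gaussian noise with the bandwidth as standard deviation. The LSTM sequence generation step is not shown.

```python
import random
import statistics

def silverman_bandwidth(values):
    """Rule-of-thumb bandwidth for a Gaussian kernel: 1.06 * sigma * n^(-1/5)."""
    n = len(values)
    return 1.06 * statistics.stdev(values) * n ** (-1 / 5)

def sample_kde(values, n_samples, seed=0):
    """Draw samples from a Gaussian KDE fitted to `values`:
    choose an observed value uniformly, then perturb it with N(0, h^2) noise."""
    rng = random.Random(seed)
    h = silverman_bandwidth(values)
    return [rng.choice(values) + rng.gauss(0.0, h) for _ in range(n_samples)]

# Hypothetical packet sizes (bytes) observed for one minority class.
packet_sizes = [60.0, 64.0, 72.0, 1400.0, 1460.0, 1500.0]
synthetic = sample_kde(packet_sizes, 100)
```

In practice the sampled values would likely need post-processing, for example clipping to the valid range of the feature, before being attached to a generated packet sequence.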
