Rami N. Khushaba

The University of Sydney

Radar-based Materials Classification Using Deep Wavelet Scattering Transform: A Comparison of Centimeter vs. Millimeter Wave Units

Feb 08, 2022

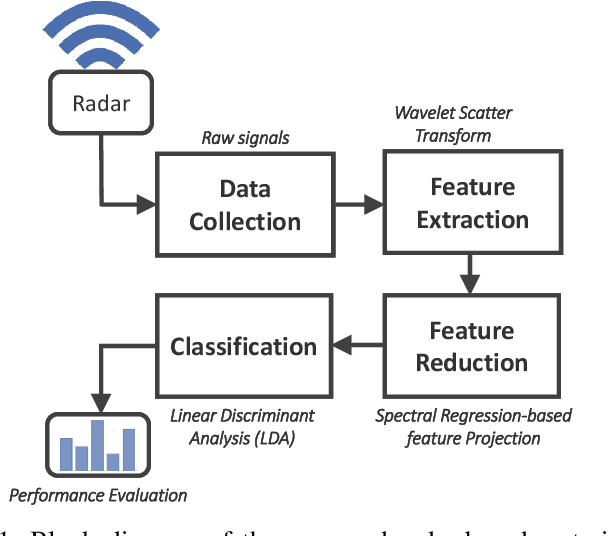

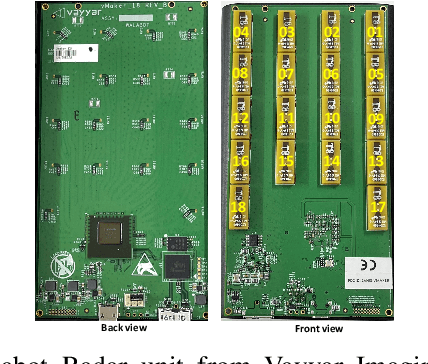

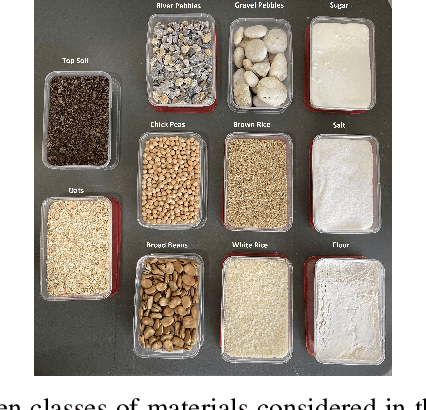

Abstract: Radar-based materials detection has received significant attention in recent years for its potential inclusion in consumer and industrial applications such as object recognition for grasping and manufacturing quality assurance and control. Several radar-based studies have addressed material classification under controlled settings with specific material properties and shapes. Recent literature has challenged these earlier findings, claiming that earlier solutions do not scale easily to industrial applications because of a variety of real-world issues. Published experiments on the impact of these factors on the robustness of traditional radar-based features have already demonstrated that deep neural networks can mitigate this impact to some extent and produce a viable solution. However, previous studies did not investigate the usefulness of lower-frequency radar units, specifically those below 10 GHz, compared with higher-frequency units operating around and above 60 GHz. This research considers two Vayyar Imaging radar units with different frequency ranges: the Walabot-3D (6.3-8 GHz) cm-wave unit and the IMAGEVK-74 (62-69 GHz) mm-wave imaging unit. A comparison is presented on the applicability of each unit to material classification. This work extends previous efforts by applying the deep wavelet scattering transform to identify different materials from the reflected signals. In the wavelet scattering feature extractor, data is propagated through a series of wavelet transforms, nonlinearities, and averaging to produce low-variance representations of the reflected radar signals. This work is unique in its comparison of radar units and algorithms for material classification, and it includes real-time demonstrations that show strong performance by both units, with increased robustness offered by the cm-wave radar unit.
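The abstract describes the scattering feature extractor only as a cascade of wavelet transforms, nonlinearities, and averaging. The following is a minimal NumPy sketch of a generic two-layer 1-D scattering transform, assuming Gabor (Morlet-like) band-pass filters with one filter per octave, a Gaussian low-pass for averaging, and subsampling by 8. These settings and the function names `gabor_bank` and `scattering_1d` are hypothetical; the abstract does not specify the authors' filter bank or implementation.

```python
import numpy as np


def gabor_bank(n, num_scales, xi0=0.25):
    """Frequency-domain Gabor (Morlet-like) band-pass filters, one per octave.

    Filter j is centred at xi0 / 2**j cycles/sample and its width halves with j,
    so all filters share the same relative bandwidth (constant-Q).
    Hypothetical parameter choices, not taken from the paper.
    """
    freqs = np.fft.fftfreq(n)
    bank = []
    for j in range(num_scales):
        xi = xi0 / 2 ** j
        sigma = xi / 2.0
        bank.append(np.exp(-((freqs - xi) ** 2) / (2.0 * sigma ** 2)))
    return np.array(bank)


def scattering_1d(x, num_scales=4, subsample=8, xi0=0.25):
    """Two-layer scattering: wavelet transform -> modulus -> low-pass averaging."""
    x = np.asarray(x, dtype=float)
    n = x.size
    psi_hat = gabor_bank(n, num_scales, xi0)
    # Gaussian low-pass whose passband sits below the coarsest wavelet.
    sigma_phi = xi0 / 2 ** num_scales / 2.0
    phi_hat = np.exp(-(np.fft.fftfreq(n) ** 2) / (2.0 * sigma_phi ** 2))

    def wavelet_modulus(u, j):
        # Circular band-pass filtering followed by the modulus non-linearity.
        return np.abs(np.fft.ifft(np.fft.fft(u) * psi_hat[j]))

    def average(u):
        # Low-pass averaging then subsampling gives a locally shift-invariant output.
        return np.real(np.fft.ifft(np.fft.fft(u) * phi_hat))[::subsample]

    paths = [average(x)]                                # order 0
    first = [wavelet_modulus(x, j) for j in range(num_scales)]
    paths += [average(u1) for u1 in first]              # order 1
    for j1, u1 in enumerate(first):                     # order 2, coarser scales only
        for j2 in range(j1 + 1, num_scales):
            paths.append(average(wavelet_modulus(u1, j2)))
    return np.vstack(paths)                             # (num_paths, n // subsample)
```

Applied to a 1-D reflected signal of length 256, this sketch returns 1 + 4 + 6 = 11 paths, each with 32 coarse time positions. Flattening or time-averaging the rows gives a fixed-length feature vector that a downstream classifier can consume. The averaging step is what makes the representation low-variance: small shifts of the input barely change the output.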

* 6 pages, 8 figures; accepted in IEEE Robotics and Automation Letters, January 2022; associated video: https://www.youtube.com/watch?v=Mfohzvf7iuA

An Efficient Mixture of Deep and Machine Learning Models for COVID-19 and Tuberculosis Detection Using X-Ray Images in Resource Limited Settings

Jul 16, 2020

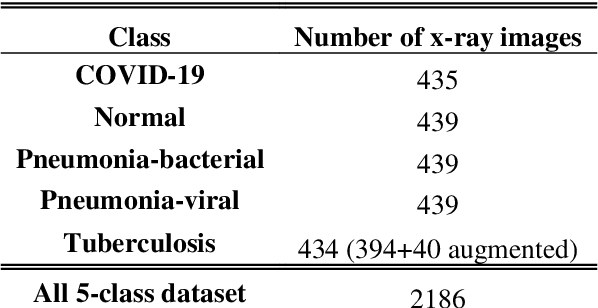

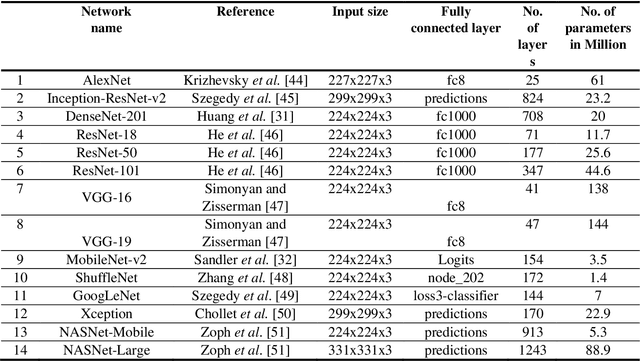

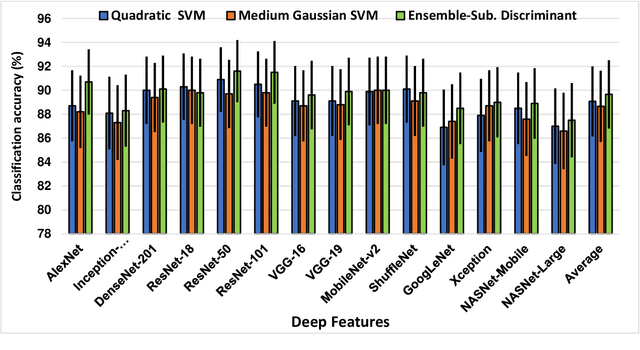

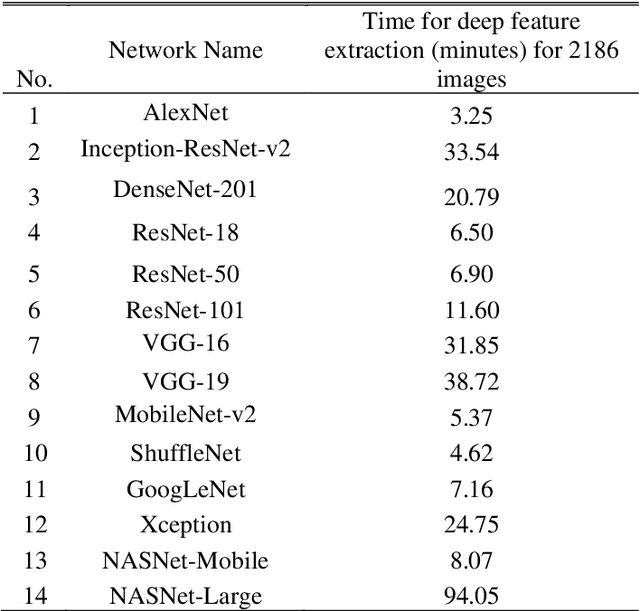

Abstract: Clinicians on the front line need to assess quickly whether a patient with symptoms indeed has COVID-19. The difficulty of this task is exacerbated in low-resource settings that may not have access to biotechnology tests. Furthermore, tuberculosis (TB) remains a major health problem in several low- and middle-income countries, and its common symptoms, including fever, cough, and tiredness, are similar to those of COVID-19. To help in the detection of COVID-19, we propose extracting deep features (DF) from chest X-ray images, a technology available in most hospitals, and subsequently classifying them with machine learning methods that do not require large computational resources. We compiled a five-class dataset of chest X-ray images including a balanced number of COVID-19, viral pneumonia, bacterial pneumonia, TB, and healthy cases. We compared the performance of pipelines combining 14 individual state-of-the-art pre-trained deep networks for DF extraction with traditional machine learning classifiers. A pipeline consisting of ResNet-50 for DF computation and an ensemble of subspace discriminant classifiers was the best performer in the five-class problem, achieving a detection accuracy of 91.6 ± 2.6% (accuracy ± 95% confidence interval). Furthermore, the same pipeline achieved accuracies of 98.6 ± 1.4% and 99.9 ± 0.5% in the simpler three-class problem (COVID-19, TB, and healthy cases) and two-class problem (COVID-19 and healthy images), respectively. The pipeline was computationally efficient, requiring just 0.19 seconds to extract DF per X-ray image and 2 minutes to train a traditional classifier with more than 2000 images on a CPU machine. The results suggest the potential benefits of using our pipeline for the detection of COVID-19, particularly in resource-limited settings, since it runs with limited computational resources.
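The best-performing pipeline feeds deep features into an ensemble of subspace discriminant classifiers. The sketch below shows one common reading of that classifier, the random subspace method with linear discriminant analysis (LDA) base learners combined by majority vote, written in plain NumPy. The deep features are assumed to be precomputed (for example, the 2048-dimensional globally pooled activations of an ImageNet-pretrained ResNet-50; the abstract does not say which layer was used). The class name `SubspaceLDAEnsemble`, the ensemble size, the subspace dimension, and the ridge term are hypothetical and are not the authors' settings.

```python
import numpy as np


class SubspaceLDAEnsemble:
    """Random-subspace ensemble of LDA classifiers with majority voting (a sketch)."""

    def __init__(self, n_learners=30, subspace_dim=20, ridge=1e-3, seed=0):
        self.n_learners = n_learners      # hypothetical default
        self.subspace_dim = subspace_dim  # features drawn per learner (hypothetical)
        self.ridge = ridge                # covariance regulariser (hypothetical)
        self.rng = np.random.default_rng(seed)

    def _fit_lda(self, X, y):
        # Class means plus a pooled (shared) covariance, ridge-regularised.
        means = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        centred = X - means[np.searchsorted(self.classes_, y)]
        cov = centred.T @ centred / max(len(X) - len(self.classes_), 1)
        cov += self.ridge * np.eye(X.shape[1])
        W = np.linalg.solve(cov, means.T)  # columns are Sigma^-1 mu_c
        priors = np.array([np.mean(y == c) for c in self.classes_])
        # Linear discriminant: x^T Sigma^-1 mu_c - 0.5 mu_c^T Sigma^-1 mu_c + log pi_c
        b = -0.5 * np.sum(means.T * W, axis=0) + np.log(priors)
        return W, b

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        d = X.shape[1]
        k = min(self.subspace_dim, d)
        self.members_ = []
        for _ in range(self.n_learners):
            idx = self.rng.choice(d, size=k, replace=False)  # random feature subset
            self.members_.append((idx, *self._fit_lda(X[:, idx], y)))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        votes = np.zeros((len(X), len(self.classes_)), dtype=int)
        for idx, W, b in self.members_:
            scores = X[:, idx] @ W + b
            votes[np.arange(len(X)), scores.argmax(axis=1)] += 1
        return self.classes_[votes.argmax(axis=1)]
```

In this sketch each learner only estimates a small k×k covariance and solves one k×k linear system, so training stays cheap on a CPU even with thousands of feature vectors. Drawing random feature subsets also keeps each covariance estimate well conditioned when the deep-feature dimension is large relative to the number of images per class.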
