Ramesh K. Sitaraman

Towards Environmentally Equitable AI

Dec 21, 2024

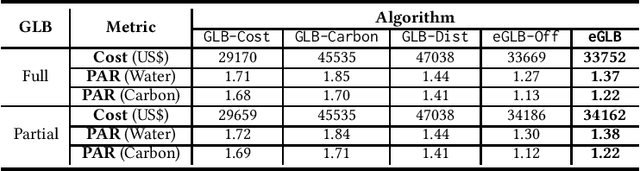

Abstract: The skyrocketing demand for artificial intelligence (AI) has created an enormous appetite for globally deployed power-hungry servers. As a result, the environmental footprint of AI systems has come under increasing scrutiny. More crucially, the current way that we exploit AI workloads' flexibility and manage AI systems can lead to wildly different environmental impacts across locations, increasingly raising environmental inequity concerns and creating unintended sociotechnical consequences. In this paper, we advocate environmental equity as a priority for the management of future AI systems, advancing the boundaries of existing resource management for sustainable AI and also adding a unique dimension to AI fairness. Concretely, we uncover the potential of equity-aware geographical load balancing to fairly re-distribute the environmental cost across different regions, followed by algorithmic challenges. We conclude by discussing a few future directions to exploit the full potential of system management approaches to mitigate AI's environmental inequity.
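To make the idea of equity-aware geographical load balancing concrete, the following is a minimal sketch and not the paper's formulation. It assumes each region has a hypothetical per-unit environmental intensity (`intensity`) and a capacity (`capacity`), and it takes "equity" to mean minimizing the largest regional cost `intensity[i] * load[i]`, which is only one possible equity objective. All function and parameter names are illustrative.

```python
from typing import List


def min_total_allocation(demand: float, intensity: List[float],
                         capacity: List[float]) -> List[float]:
    """Baseline: fill the regions with the lowest intensity first.

    This minimizes the total environmental cost but can concentrate
    the cost in a few regions.
    """
    load = [0.0] * len(intensity)
    remaining = demand
    for i in sorted(range(len(intensity)), key=lambda i: intensity[i]):
        load[i] = min(capacity[i], remaining)
        remaining -= load[i]
    return load


def min_max_allocation(demand: float, intensity: List[float],
                       capacity: List[float], iters: int = 100) -> List[float]:
    """Equity-aware variant (assumed objective): minimize the largest
    regional cost intensity[i] * load[i].

    Bisects on a cost level t; at level t, region i can carry at most
    min(capacity[i], t / intensity[i]) without exceeding cost t.
    Assumes demand <= sum(capacity) and all intensities are positive.
    """
    lo, hi = 0.0, max(c * k for c, k in zip(intensity, capacity))
    for _ in range(iters):
        mid = (lo + hi) / 2
        served = sum(min(k, mid / c) for c, k in zip(intensity, capacity))
        if served >= demand:
            hi = mid  # level mid is feasible, try a lower one
        else:
            lo = mid  # level mid cannot serve the demand
    return [min(k, hi / c) for c, k in zip(intensity, capacity)]


def regional_costs(load: List[float], intensity: List[float]) -> List[float]:
    """Environmental cost borne by each region."""
    return [c * x for c, x in zip(intensity, load)]


if __name__ == "__main__":
    intensity = [1.0, 2.0, 4.0]      # hypothetical cost per unit of load
    capacity = [100.0, 100.0, 100.0]  # hypothetical regional capacities
    demand = 120.0
    for name, fn in [("min-total", min_total_allocation),
                     ("min-max", min_max_allocation)]:
        load = fn(demand, intensity, capacity)
        costs = regional_costs(load, intensity)
        print(name, [round(x, 1) for x in load],
              "max cost:", round(max(costs), 1),
              "total cost:", round(sum(costs), 1))
```

In this toy setting the min-max allocation lowers the worst regional cost at the price of a higher total cost, which illustrates the kind of trade-off an equity-aware scheduler has to manage.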

BONES: Near-Optimal Neural-Enhanced Video Streaming

Oct 15, 2023

Abstract: Accessing high-quality video content can be challenging due to insufficient and unstable network bandwidth. Recent advances in neural enhancement have shown promising results in improving the quality of degraded videos through deep learning. Neural-Enhanced Streaming (NES) incorporates this new approach into video streaming, allowing users to download low-quality video segments and then enhance them to obtain high-quality content without violating the playback of the video stream. We introduce BONES, an NES control algorithm that jointly manages the network and computational resources to maximize the quality of experience (QoE) of the user. BONES formulates NES as a Lyapunov optimization problem and solves it in an online manner with near-optimal performance, making it the first NES algorithm to provide a theoretical performance guarantee. Our comprehensive experimental results indicate that BONES increases QoE by 4% to 13% over state-of-the-art algorithms, demonstrating its potential to enhance the video streaming experience for users. Our code and data will be released to the public.
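As a rough illustration of how a Lyapunov-style online controller makes per-segment decisions, the sketch below uses a generic drift-plus-penalty rule in the style of earlier buffer-based bitrate algorithms. It is not the BONES algorithm: the `Option` fields, the scoring rule, and the parameters `V` and `gamma` are assumptions chosen for illustration. Each option stands for a hypothetical combination of download quality and enhancement method.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Option:
    name: str
    utility: float  # hypothetical perceived quality after any enhancement
    cost: float     # hypothetical resource cost, e.g. download size in megabits


def choose(options: List[Option], buffer_level: float,
           V: float, gamma: float = 0.0) -> Optional[Option]:
    """Pick the option with the best drift-plus-penalty score.

    score = (V * (utility + gamma) - buffer_level) / cost

    V trades utility against buffer stability; buffer_level acts as the
    queue backlog. Returns None when every score is negative, meaning the
    buffer is full enough that waiting is preferable to fetching.
    """
    def score(o: Option) -> float:
        return (V * (o.utility + gamma) - buffer_level) / o.cost

    best = max(options, key=score)
    return best if score(best) >= 0 else None


if __name__ == "__main__":
    options = [Option("low", utility=1.0, cost=1.0),
               Option("high", utility=3.0, cost=4.0)]
    for q in (0.0, 5.0, 35.0):
        picked = choose(options, buffer_level=q, V=10.0)
        print(q, picked.name if picked else "wait")
```

With these toy numbers the rule picks the cheap option when the buffer is nearly empty, the high-utility option once the buffer has some slack, and pauses when the buffer is very full. That is the qualitative behavior such controllers aim for.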

CU-Net: Efficient Point Cloud Color Upsampling Network

Sep 12, 2022

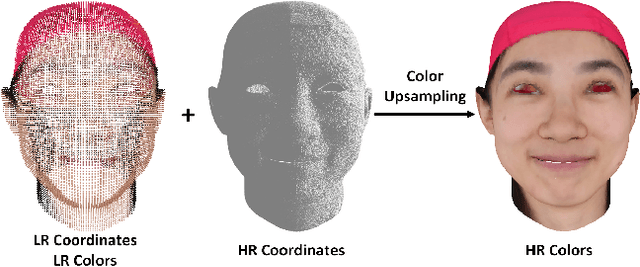

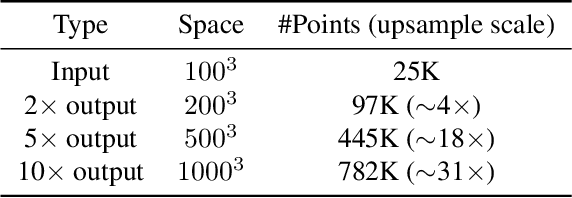

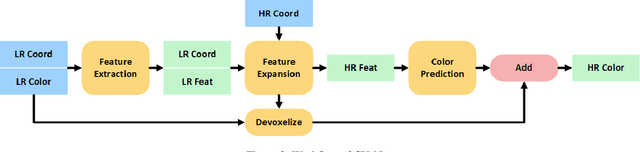

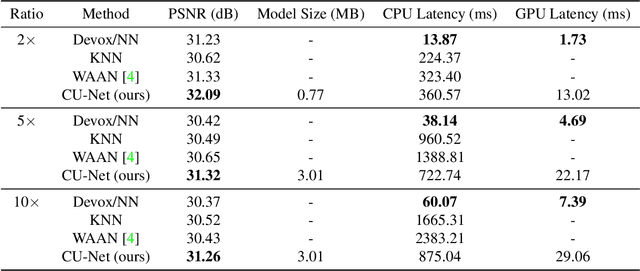

Abstract: Point cloud upsampling is necessary for Augmented Reality, Virtual Reality, and telepresence scenarios. Although the geometry upsampling is well studied to densify point cloud coordinates, the upsampling of colors has been largely overlooked. In this paper, we propose CU-Net, the first deep-learning point cloud color upsampling model. Leveraging a feature extractor based on sparse convolution and a color prediction module based on neural implicit function, CU-Net achieves linear time and space complexity. Therefore, CU-Net is theoretically guaranteed to be more efficient than most existing methods with quadratic complexity. Experimental results demonstrate that CU-Net can colorize a photo-realistic point cloud with nearly a million points in real time, while having better visual quality than baselines. Besides, CU-Net can adapt to an arbitrary upsampling ratio and unseen objects. Our source code will be released to the public soon.