Raj Patel

The AI Black-Scholes: Finance-Informed Neural Network

Dec 15, 2024

Abstract: In the realm of option pricing, existing models are typically classified into principle-driven methods, such as solving the partial differential equations (PDEs) that the pricing function satisfies, and data-driven approaches, such as machine learning (ML) techniques that parameterize the pricing function directly. While principle-driven models offer a rigorous theoretical framework, they often rely on unrealistic assumptions, such as asset processes adhering to fixed stochastic differential equations (SDEs). Moreover, they can become computationally intensive, particularly in high-dimensional settings where analytical solutions are not available and numerical solutions are needed. In contrast, data-driven models excel at capturing market data trends, but they often lack alignment with core financial principles, raising concerns about interpretability and predictive accuracy, especially when dealing with limited or biased datasets. This work proposes a hybrid approach to address these limitations by integrating the strengths of both principle-driven and data-driven methodologies. Our framework combines the theoretical rigor and interpretability of PDE-based models with the adaptability of machine learning techniques, yielding a more versatile methodology for pricing a broad spectrum of options. We validate our approach under two volatility models, constant volatility (Black-Scholes) and stochastic volatility (Heston), demonstrating that our proposed framework, Finance-Informed Neural Network (FINN), not only enhances predictive accuracy but also maintains adherence to core financial principles. FINN presents a promising tool for practitioners, offering robust performance across a variety of market conditions.
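To make the "principle-driven" side concrete, the sketch below evaluates the standard closed-form Black-Scholes price of a European call and checks numerically that it satisfies the Black-Scholes PDE. It illustrates the kind of constraint a pricing function is expected to obey; it is not FINN's training procedure, which the abstract does not describe. The function names and finite-difference step sizes are assumptions chosen for illustration.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(S, K, r, sigma, tau):
    """Closed-form Black-Scholes price of a European call.

    S: spot price, K: strike, r: risk-free rate,
    sigma: constant volatility, tau: time to maturity in years.
    """
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * tau) / (sigma * math.sqrt(tau))
    d2 = d1 - sigma * math.sqrt(tau)
    return S * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d2)


def bs_pde_residual(S, K, r, sigma, tau, h_s=1e-2, h_tau=1e-4):
    """Residual of V_t + 0.5*sigma^2*S^2*V_SS + r*S*V_S - r*V = 0.

    Derivatives are approximated by central finite differences.
    Because tau = T - t, the calendar-time derivative is V_t = -dV/dtau.
    The step sizes h_s and h_tau are illustrative assumptions.
    """
    v = bs_call(S, K, r, sigma, tau)
    v_up = bs_call(S + h_s, K, r, sigma, tau)
    v_dn = bs_call(S - h_s, K, r, sigma, tau)
    dv_ds = (v_up - v_dn) / (2.0 * h_s)
    d2v_ds2 = (v_up - 2.0 * v + v_dn) / h_s ** 2
    dv_dtau = (bs_call(S, K, r, sigma, tau + h_tau)
               - bs_call(S, K, r, sigma, tau - h_tau)) / (2.0 * h_tau)
    return -dv_dtau + 0.5 * sigma ** 2 * S ** 2 * d2v_ds2 + r * S * dv_ds - r * v


price = bs_call(100.0, 100.0, 0.05, 0.2, 1.0)
residual = bs_pde_residual(100.0, 100.0, 0.05, 0.2, 1.0)
```

A residual close to zero indicates that the pricing function is consistent with the PDE at that point. A hybrid method could plausibly penalize such a residual alongside a data-fit term, but that reading is an assumption rather than something stated in the abstract.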

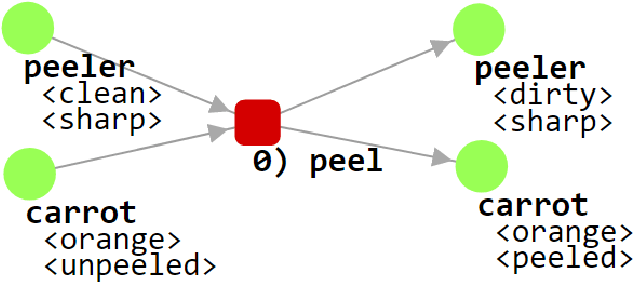

FOON Creation and Traversal for Recipe Generation

Oct 13, 2022

Abstract: Task completion by robots is still far from being completely dependable and usable. One way a robot may decipher the information given to it and accomplish tasks is by utilizing a FOON, which stands for functional object-oriented network. The network first needs to be created by having a human create action nodes, as well as input and output nodes, in a .txt file. Once the network is sizeable, it can be traversed in a variety of ways, such as choosing steps via iterative deepening search and taking the first valid option seen. Another mechanism is heuristics, such as choosing steps based on the highest success rate or the lowest number of input ingredients. Via any of these methods, a program can traverse the network given an output product and derive the series of steps that need to be taken to produce that output.
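The traversal described above can be sketched as a backward search from the desired output product. The code below is a minimal illustration under stated assumptions: the `FunctionalUnit` class, the toy tea-making units, the `kitchen` set of available items, and the heuristic helpers are all hypothetical and are not taken from the paper or from any FOON file format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionalUnit:
    """Hypothetical stand-in for one action node with its input and output objects."""
    action: str
    inputs: tuple
    outputs: tuple
    success_rate: float = 1.0


def build_plan(goal, units, kitchen, choose, depth_limit):
    """Depth-limited backward search; returns an ordered list of units, or None."""
    if goal in kitchen:          # already available, nothing to do
        return []
    if depth_limit == 0:         # out of depth budget
        return None
    candidates = [u for u in units if goal in u.outputs]
    for unit in choose(candidates):      # heuristic decides the try order
        plan = []
        for item in unit.inputs:
            sub = build_plan(item, units, kitchen, choose, depth_limit - 1)
            if sub is None:
                break                    # this unit cannot be satisfied
            plan += [step for step in sub if step not in plan]
        else:
            return plan + [unit]         # first valid option wins
    return None


def iterative_deepening(goal, units, kitchen, choose, max_depth=10):
    """Retry the depth-limited search with a growing depth limit."""
    for depth in range(1, max_depth + 1):
        plan = build_plan(goal, units, kitchen, choose, depth)
        if plan is not None:
            return plan
    return None


def first_seen(candidates):
    return candidates


def by_success_rate(candidates):
    return sorted(candidates, key=lambda u: -u.success_rate)


def by_fewest_inputs(candidates):
    return sorted(candidates, key=lambda u: len(u.inputs))


# Toy network (assumed data, for illustration only).
units = [
    FunctionalUnit("microwave", ("water", "tea bag"), ("tea",), 0.5),
    FunctionalUnit("boil", ("water",), ("hot water",), 0.9),
    FunctionalUnit("steep", ("hot water", "tea bag"), ("tea",), 0.8),
]
kitchen = {"water", "tea bag"}

shallow_plan = iterative_deepening("tea", units, kitchen, first_seen)
reliable_plan = build_plan("tea", units, kitchen, by_success_rate, depth_limit=5)
```

In this toy setup, iterative deepening with the first-seen rule settles on the shallowest recipe, while ordering candidates by success rate prefers the two-step recipe that boils the water first.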

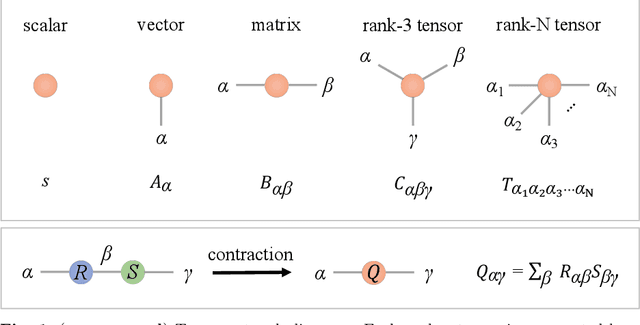

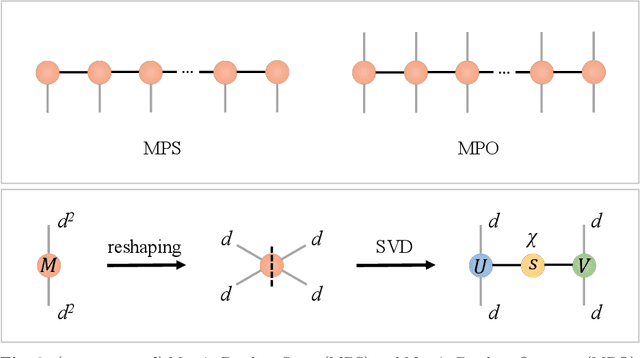

Quantum-Inspired Tensor Neural Networks for Partial Differential Equations

Aug 10, 2022

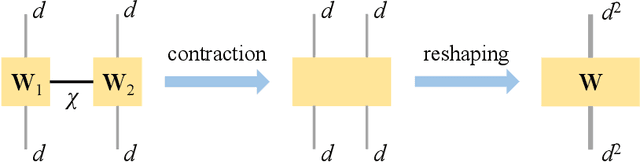

Abstract: Partial Differential Equations (PDEs) are used to model a variety of dynamical systems in science and engineering. Recent advances in deep learning have enabled us to solve them in higher dimensions by addressing the curse of dimensionality in new ways. However, deep learning methods are constrained by training time and memory. To tackle these shortcomings, we implement Tensor Neural Networks (TNN), a quantum-inspired neural network architecture that leverages Tensor Network ideas to improve upon deep learning approaches. We demonstrate that TNN provide significant parameter savings while attaining the same accuracy as the classical Dense Neural Network (DNN). In addition, we show that TNN can be trained faster than DNN for the same accuracy. We benchmark TNN by applying them to solve parabolic PDEs, specifically the Black-Scholes-Barenblatt equation, widely used in financial pricing theory, empirically showing the advantages of TNN over DNN. Further examples, such as the Hamilton-Jacobi-Bellman equation, are also discussed.
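The parameter savings mentioned above can be illustrated with simple counting. The sketch below compares a dense weight matrix with a generic two-core tensor-network factorization of the same layer. The abstract does not specify the paper's architecture, so the two-core layout, the bond dimension `chi`, and the function names are assumptions made for illustration.

```python
def dense_param_count(n1, n2, m1, m2):
    """Weights in a dense layer mapping n1*n2 inputs to m1*m2 outputs."""
    return (n1 * n2) * (m1 * m2)


def two_core_param_count(n1, n2, m1, m2, chi):
    """Weights in an assumed two-core factorization of the same layer.

    Core A has shape (n1, m1, chi) and core B has shape (chi, n2, m2);
    contracting the shared bond index of size chi yields an
    (n1*n2) x (m1*m2) weight matrix.
    """
    return n1 * m1 * chi + chi * n2 * m2


# A 64 -> 64 layer, viewed as (8 x 8) -> (8 x 8), with bond dimension 4.
dense = dense_param_count(8, 8, 8, 8)
factored = two_core_param_count(8, 8, 8, 8, chi=4)
savings_ratio = dense / factored
```

A smaller bond dimension gives fewer parameters but a more restricted family of weight matrices, so the accuracy achieved at a given `chi` has to be checked empirically.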

Estimator Vectors: OOV Word Embeddings based on Subword and Context Clue Estimates

Oct 18, 2019

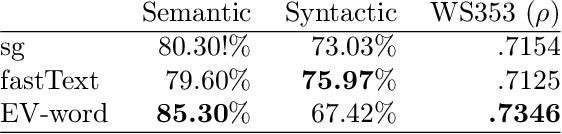

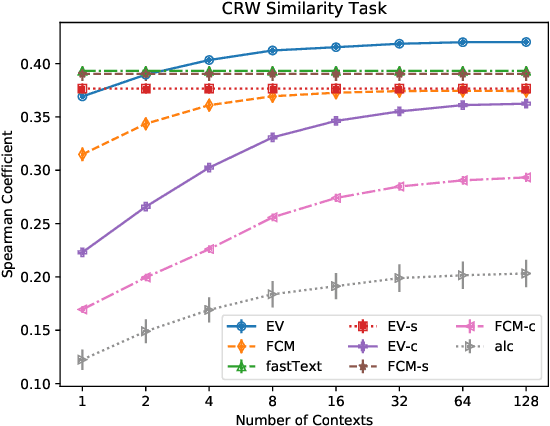

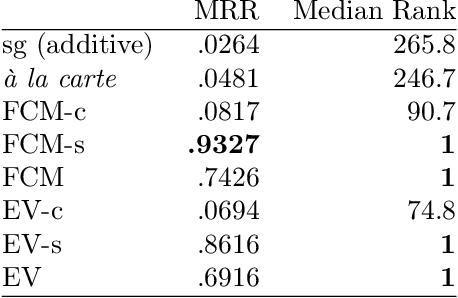

Abstract: Semantic representations of words have been successfully extracted from unlabeled corpora using neural network models like word2vec. These representations are generally high quality and computationally inexpensive to train, making them popular. However, these approaches generally fail to approximate out-of-vocabulary (OOV) words, a task humans can do quite easily using word roots and context clues. This paper proposes a neural network model that jointly learns high-quality word representations, subword representations, and context clue representations. Learning all three types of representations together enhances the learning of each, leading to enriched word vectors along with strong estimates for OOV words, obtained by combining the corresponding context clue and subword embeddings. Our model, called Estimator Vectors (EV), learns strong word embeddings and is competitive with state-of-the-art methods for OOV estimation.
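The idea of estimating an OOV word from subword and context clue embeddings can be sketched as follows. The abstract does not say how the two estimates are combined, so the plain averaging used here, the character trigram scheme, and the toy vectors are all assumptions made for illustration, not the EV model itself.

```python
def char_ngrams(word, n=3):
    """Character n-grams of a word padded with boundary markers."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def mean_vector(vectors, dim):
    """Element-wise mean of a list of vectors; zero vector if the list is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def estimate_oov(word, context, subword_vecs, context_vecs, dim):
    """Estimate a vector for an unseen word (assumed combination rule: average).

    subword_vecs maps character n-grams to vectors, and context_vecs maps
    surrounding words to vectors; unknown keys are skipped.
    """
    sub = mean_vector(
        [subword_vecs[g] for g in char_ngrams(word) if g in subword_vecs], dim
    )
    ctx = mean_vector(
        [context_vecs[w] for w in context if w in context_vecs], dim
    )
    return [(s + c) / 2.0 for s, c in zip(sub, ctx)]


# Toy embeddings (assumed data, for illustration only).
subword_vecs = {"<ca": [1.0, 0.0], "at>": [0.0, 1.0]}
context_vecs = {"pet": [1.0, 1.0]}
estimate = estimate_oov("cat", ["the", "pet"], subword_vecs, context_vecs, dim=2)
```

In a trained model the subword and context vectors would be learned jointly with the word vectors, as the abstract describes; here they are fixed by hand only to show the combination step.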
