Rahul Sankar

Free Form Medical Visual Question Answering in Radiology

Jan 23, 2024

Abstract: Visual Question Answering (VQA) in the medical domain presents a unique, interdisciplinary challenge, combining fields such as Computer Vision, Natural Language Processing, and Knowledge Representation. Despite its importance, research in medical VQA has been scant, only gaining momentum since 2018. Addressing this gap, our research delves into the effective representation of radiology images and the joint learning of multimodal representations, surpassing existing methods. We innovatively augment the SLAKE dataset, enabling our model to respond to a more diverse array of questions, not limited to the immediate content of radiology or pathology images. Our model achieves a top-1 accuracy of 79.55% with a less complex architecture, demonstrating comparable performance to current state-of-the-art models. This research not only advances medical VQA but also opens avenues for practical applications in diagnostic settings.

MobileCaps: A Lightweight Model for Screening and Severity Analysis of COVID-19 Chest X-Ray Images

Aug 19, 2021

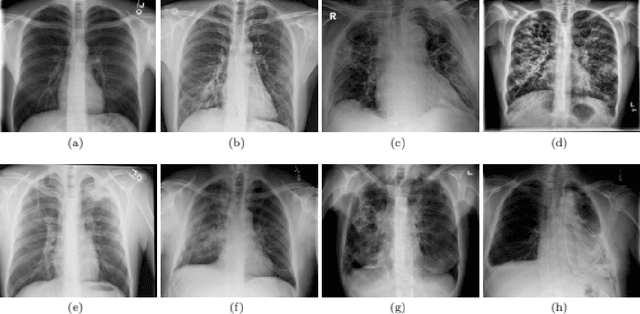

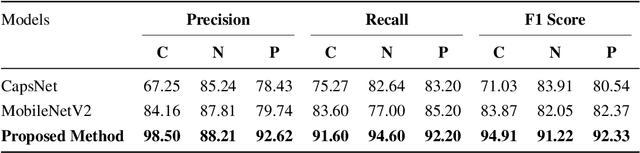

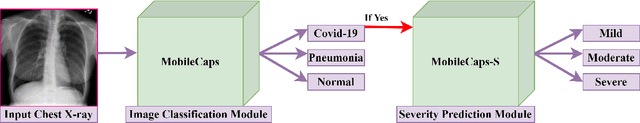

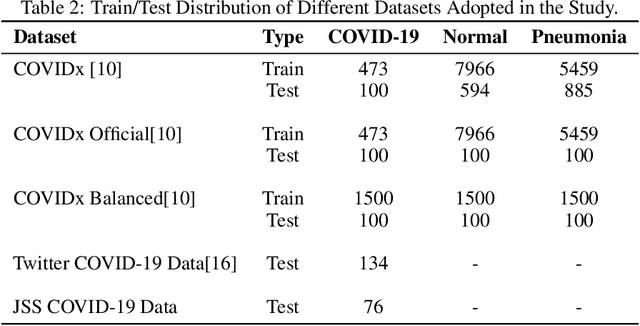

Abstract: The world is going through a challenging phase due to the disastrous effect of the COVID-19 pandemic on the healthcare system and the economy. The rate of spread, post-COVID-19 symptoms, and the emergence of new strains of COVID-19 have disrupted healthcare systems across the globe. As a result, accurately screening COVID-19 cases has become a top priority. Since the virus infects the respiratory system, Chest X-Ray (CXR) is an imaging modality that is adopted extensively for initial screening. We have performed a comprehensive study that uses CXR images to identify COVID-19 cases and recognized the need for a more generalizable model. We use the MobileNetV2 architecture as the feature extractor and integrate it into Capsule Networks to construct a fully automated and lightweight model termed MobileCaps. MobileCaps is trained and evaluated on a publicly available dataset with model ensembling and Bayesian optimization strategies to efficiently distinguish CXR images of patients with COVID-19 from non-COVID-19 pneumonia and healthy cases. The proposed model is further evaluated on two additional RT-PCR confirmed datasets to demonstrate its generalizability. We also introduce MobileCaps-S and use it to perform severity assessment of COVID-19 CXR images based on the Radiographic Assessment of Lung Edema (RALE) scoring technique. Our classification model achieved an overall recall of 91.60, 94.60, 92.20, and a precision of 98.50, 88.21, 92.62 for COVID-19, non-COVID-19 pneumonia, and healthy cases, respectively. Further, the severity assessment model attained an R$^2$ coefficient of 70.51. Because the proposed models have fewer trainable parameters than the state-of-the-art models reported in the literature, we believe our models will go a long way in aiding healthcare systems in the battle against the pandemic.
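For readers less familiar with the metrics quoted in the abstract above, the following is a minimal sketch of how per-class precision and recall and the R$^2$ coefficient are conventionally computed. It is not the authors' evaluation code; the function names and the toy confusion matrix are hypothetical, chosen only for illustration, and the numbers are not taken from the paper.

```python
from typing import List, Sequence, Tuple


def per_class_precision_recall(
    confusion: Sequence[Sequence[int]],
) -> Tuple[List[float], List[float]]:
    """Return (precision, recall) per class from a square confusion matrix.

    confusion[i][j] counts samples whose true class is i and whose
    predicted class is j.
    """
    n = len(confusion)
    precision, recall = [], []
    for k in range(n):
        true_pos = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision.append(true_pos / predicted_k if predicted_k else 0.0)
        recall.append(true_pos / actual_k if actual_k else 0.0)
    return precision, recall


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical toy data (rows: true class, columns: predicted class),
# ordered as COVID-19, non-COVID-19 pneumonia, healthy.
toy_confusion = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
toy_precision, toy_recall = per_class_precision_recall(toy_confusion)
```

In this convention, recall for a class is the fraction of its true cases that the model finds, while precision is the fraction of predictions for that class that are correct. An R$^2$ value is often reported as a percentage, which may be how the 70.51 figure should be read, though the abstract does not say so explicitly.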
