Quentin Paletta

SkyGPT: Probabilistic Short-term Solar Forecasting Using Synthetic Sky Videos from Physics-constrained VideoGPT

Jun 20, 2023

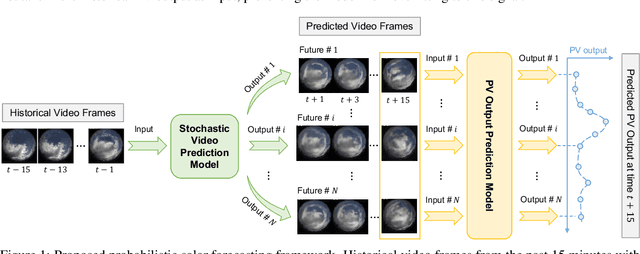

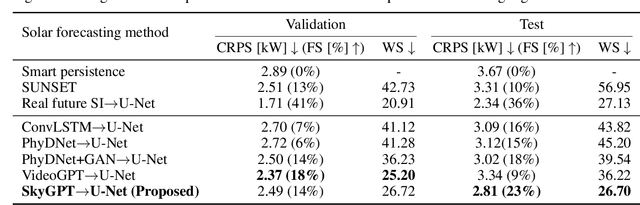

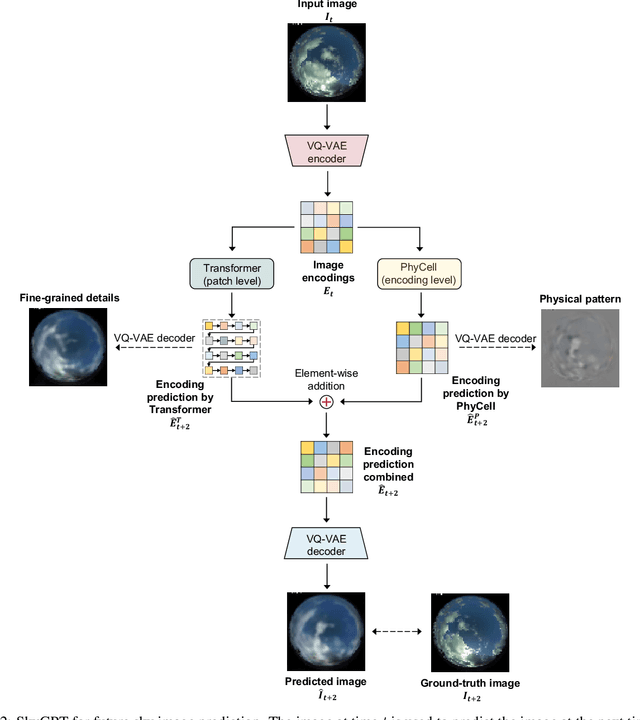

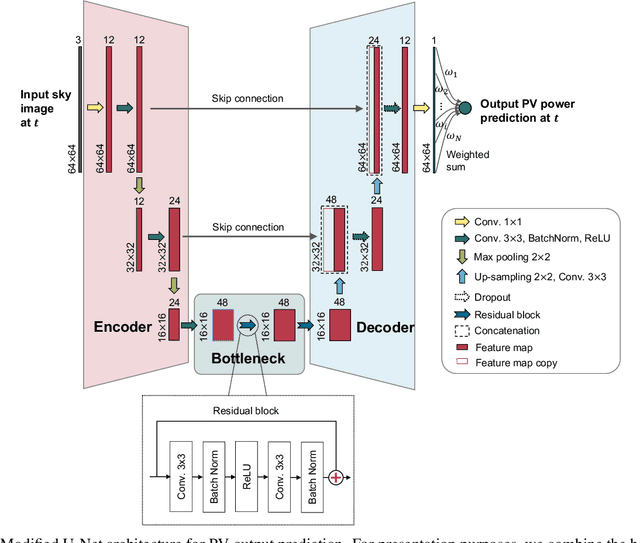

Abstract: In recent years, deep learning-based solar forecasting using all-sky images has emerged as a promising approach for alleviating uncertainty in PV power generation. However, the stochastic nature of cloud movement remains a major challenge for accurate and reliable solar forecasting. With the recent advances in generative artificial intelligence, the synthesis of visually plausible yet diversified sky videos has potential for aiding in forecasts. In this study, we introduce SkyGPT, a physics-informed stochastic video prediction model that is able to generate multiple possible future images of the sky with diverse cloud motion patterns, by using past sky image sequences as input. Extensive experiments and comparison with benchmark video prediction models demonstrate the effectiveness of the proposed model in capturing cloud dynamics and generating future sky images with high realism and diversity. Furthermore, we feed the generated future sky images from the video prediction models for 15-minute-ahead probabilistic solar forecasting for a 30-kW roof-top PV system, and compare it with an end-to-end deep learning baseline model SUNSET and a smart persistence model. Better PV output prediction reliability and sharpness are observed by using the predicted sky images generated with SkyGPT compared with other benchmark models, achieving a continuous ranked probability score (CRPS) of 2.81 (13% better than SUNSET and 23% better than smart persistence) and a Winkler score of 26.70 for the test set. Although an arbitrary number of futures can be generated from a historical sky image sequence, the results suggest that 10 future scenarios is a good choice that balances probabilistic solar forecasting performance and computational cost.
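The two probabilistic scores quoted in this abstract can be illustrated with a minimal sketch. It assumes the standard sample-based CRPS estimator for an ensemble of scenarios and the standard Winkler score for a central prediction interval; the abstract does not state which estimator or interval level was used, so the function names, the `alpha` default, and the example values are hypothetical.

```python
import numpy as np

def crps_ensemble(members, observation):
    """Sample-based CRPS estimate: E|X - y| - 0.5 * E|X - X'|.

    `members` holds the ensemble of forecast values (for example, one PV
    output prediction per generated future); lower is better.
    """
    members = np.asarray(members, dtype=float)
    # Mean absolute error of the members against the observation.
    term_obs = np.mean(np.abs(members - observation))
    # Mean absolute difference between all pairs of members (spread).
    term_spread = np.mean(np.abs(members[:, None] - members[None, :]))
    return term_obs - 0.5 * term_spread

def winkler_score(lower, upper, observation, alpha=0.1):
    """Winkler score of a (1 - alpha) prediction interval [lower, upper].

    The interval width is penalised, plus 2/alpha times the miss distance
    when the observation falls outside the interval; lower is better.
    The alpha value is an assumption, not taken from the abstract.
    """
    width = upper - lower
    if observation < lower:
        return width + (2.0 / alpha) * (lower - observation)
    if observation > upper:
        return width + (2.0 / alpha) * (observation - upper)
    return width

# Hypothetical example: 10 scenario forecasts (kW) and one measurement.
scenarios = np.array([12.1, 13.4, 11.8, 14.0, 12.9, 13.1, 12.5, 13.8, 12.2, 13.0])
measured = 12.7
score = crps_ensemble(scenarios, measured)
interval = winkler_score(np.quantile(scenarios, 0.05), np.quantile(scenarios, 0.95), measured)
```

With a single ensemble member the CRPS estimate reduces to the absolute error, which is why it is often used to compare probabilistic and deterministic forecasts on the same scale.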

Open-Source Ground-based Sky Image Datasets for Very Short-term Solar Forecasting, Cloud Analysis and Modeling: A Comprehensive Survey

Dec 01, 2022

Abstract: Sky-image-based solar forecasting using deep learning has been recognized as a promising approach in reducing the uncertainty in solar power generation. However, one of the biggest challenges is the lack of massive and diversified sky image samples. In this study, we present a comprehensive survey of open-source ground-based sky image datasets for very short-term solar forecasting (i.e., forecasting horizon less than 30 minutes), as well as related research areas which can potentially help improve solar forecasting methods, including cloud segmentation, cloud classification and cloud motion prediction. We first identify 72 open-source sky image datasets that satisfy the needs of machine/deep learning. Then a database of information about various aspects of the identified datasets is constructed. To evaluate each surveyed dataset, we further develop a multi-criteria ranking system based on 8 dimensions of the datasets which could have important impacts on usage of the data. Finally, we provide insights on the usage of these datasets for different applications. We hope this paper can provide an overview for researchers who are looking for datasets for very short-term solar forecasting and related areas.

Sky-image-based solar forecasting using deep learning with multi-location data: training models locally, globally or via transfer learning?

Nov 03, 2022

Abstract: Solar forecasting from ground-based sky images using deep learning models has shown great promise in reducing the uncertainty in solar power generation. One of the biggest challenges for training deep learning models is the availability of labeled datasets. With more and more sky image datasets open sourced in recent years, the development of accurate and reliable solar forecasting methods has seen a huge growth in potential. In this study, we explore three different training strategies for deep-learning-based solar forecasting models by leveraging three heterogeneous datasets collected around the world with drastically different climate patterns. Specifically, we compare the performance of models trained individually based on local datasets (local models) and models trained jointly based on the fusion of multiple datasets from different locations (global models), and we further examine the knowledge transfer from pre-trained solar forecasting models to a new dataset of interest (transfer learning models). The results suggest that the local models work well when deployed locally, but significant errors are observed for the scale of the prediction when applied offsite. The global model can adapt well to individual locations, while the possible increase in training efforts needs to be taken into account. Pre-training models on a large and diversified source dataset and transferring to a local target dataset generally achieves superior performance over the other two training strategies. Transfer learning brings the most benefits when there are limited local data. With 80% less training data, it can achieve 1% improvement over the local baseline model trained using the entire dataset. Therefore, we call on the solar forecasting community to contribute to a global dataset containing a massive amount of imagery and displaying diversified samples with a range of sky conditions.

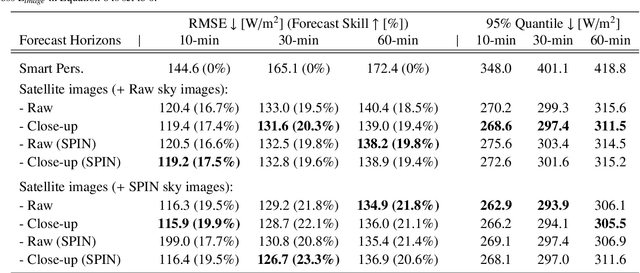

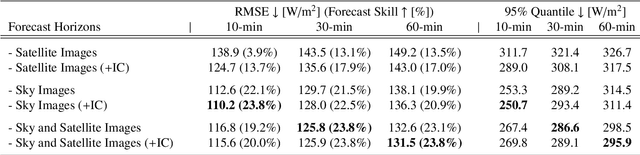

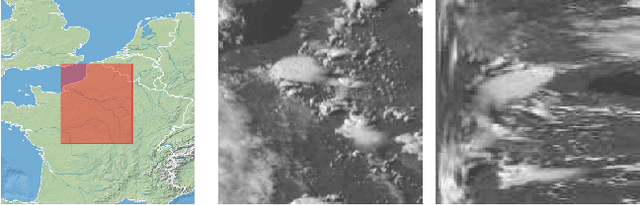

Omnivision forecasting: combining satellite observations with sky images for improved intra-hour solar energy predictions

Jun 07, 2022

Abstract: Integration of intermittent renewable energy sources into electric grids in large proportions is challenging. A well-established approach aimed at addressing this difficulty involves the anticipation of the upcoming energy supply variability to adapt the response of the grid. In solar energy, short-term changes in electricity production caused by occluding clouds can be predicted at different time scales from all-sky cameras (up to 30-min ahead) and satellite observations (up to 6h ahead). In this study, we integrate these two complementary points of view on the cloud cover in a single machine learning framework to improve intra-hour (up to 60-min ahead) irradiance forecasting. Both deterministic and probabilistic predictions are evaluated in different weather conditions (clear-sky, cloudy, overcast) and with different input configurations (sky images, satellite observations and/or past irradiance values). Our results show that the hybrid model benefits predictions in clear-sky conditions and improves longer-term forecasting. This study lays the groundwork for future novel approaches of combining sky images and satellite observations in a single learning framework to advance solar nowcasting.

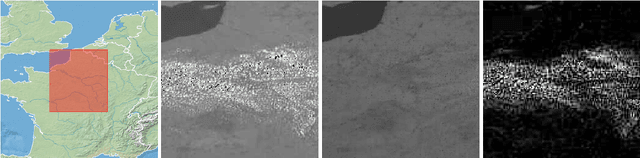

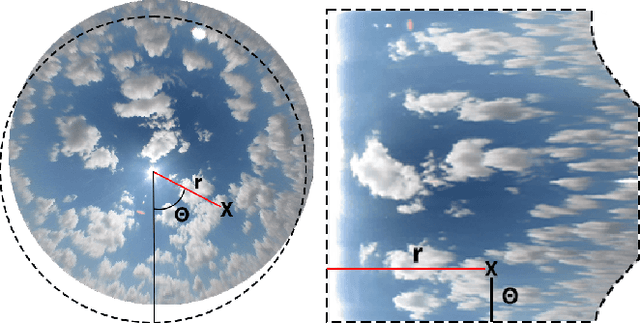

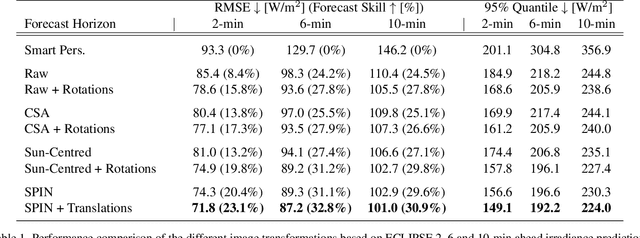

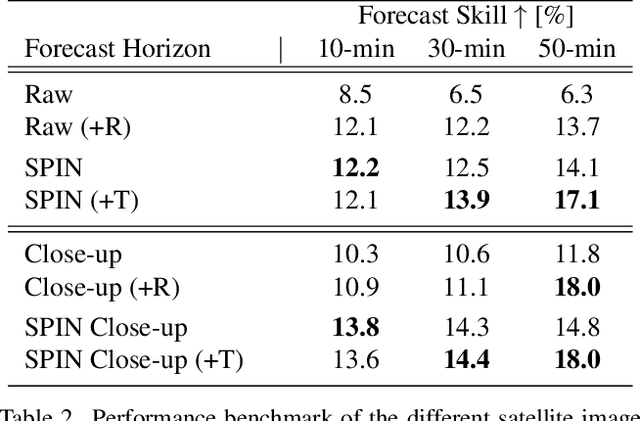

SPIN: Simplifying Polar Invariance for Neural networks Application to vision-based irradiance forecasting

Nov 29, 2021

Abstract: Translational invariance induced by pooling operations is an inherent property of convolutional neural networks, which facilitates numerous computer vision tasks such as classification. Yet, to handle rotationally invariant tasks, convolutional architectures require specific rotationally invariant layers or extensive data augmentation to learn from diverse rotated versions of a given spatial configuration. Unwrapping the image into its polar coordinates provides a more explicit representation to train a convolutional architecture as the rotational invariance becomes translational, hence the visually distinct but otherwise equivalent rotated versions of a given scene can be learnt from a single image. We show with two common vision-based solar irradiance forecasting challenges (i.e. using ground-taken sky images or satellite images), that this preprocessing step significantly improves prediction results by standardising the scene representation, while decreasing training time by a factor of 4 compared to augmenting data with rotations. In addition, this transformation magnifies the area surrounding the centre of the rotation, leading to more accurate short-term irradiance predictions.
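The idea that a rotation becomes a translation after polar unwrapping can be sketched as follows. This is a minimal illustration with nearest-neighbour sampling about the image centre, not the preprocessing used in the paper; the function name `polar_unwrap` and its parameters are hypothetical.

```python
import numpy as np

def polar_unwrap(image, n_radii, n_angles):
    """Resample a 2D image onto a (radius, angle) grid around its centre.

    Rows of the output index the radius and columns index the angle, so a
    rotation of the input about its centre appears as a circular shift of
    the output columns. Nearest-neighbour sampling is an assumption made
    to keep the sketch short.
    """
    image = np.asarray(image)
    height, width = image.shape[:2]
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    max_radius = min(cy, cx)

    radii = np.linspace(0.0, max_radius, n_radii)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    rr, aa = np.meshgrid(radii, angles, indexing="ij")

    # Cartesian coordinates of every (radius, angle) sample.
    ys = np.clip(np.rint(cy + rr * np.sin(aa)).astype(int), 0, height - 1)
    xs = np.clip(np.rint(cx + rr * np.cos(aa)).astype(int), 0, width - 1)
    return image[ys, xs]

# A 90-degree rotation of the scene becomes a one-column shift when the
# angular axis is sampled every 90 degrees.
scene = np.arange(81).reshape(9, 9)
unwrapped = polar_unwrap(scene, n_radii=5, n_angles=4)
unwrapped_rotated = polar_unwrap(np.rot90(scene), n_radii=5, n_angles=4)
same_up_to_shift = np.array_equal(unwrapped_rotated, np.roll(unwrapped, -1, axis=1))
```

Because every radius gets the same number of angular samples, pixels near the centre are oversampled relative to the rim, which is one way to see the magnification of the central area mentioned in the abstract.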

ECLIPSE : Envisioning Cloud Induced Perturbations in Solar Energy

Apr 26, 2021

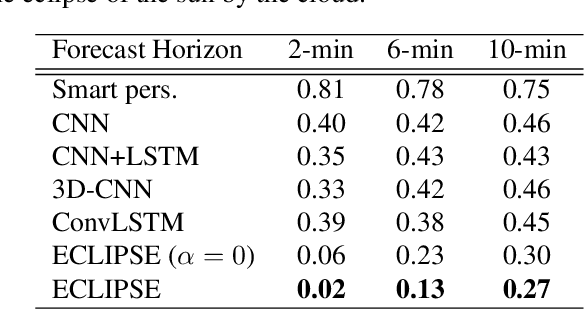

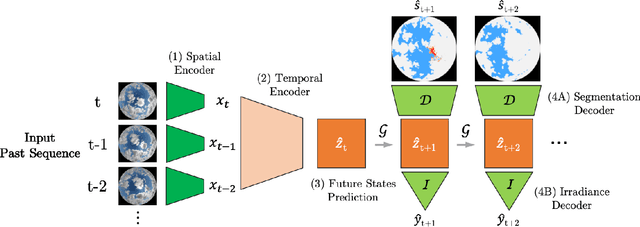

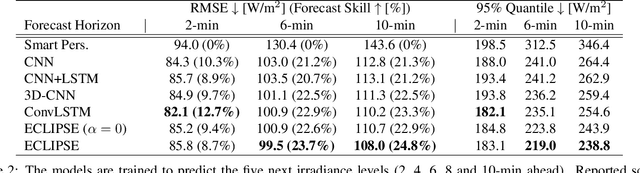

Abstract: Efficient integration of solar energy into the electricity mix depends on a reliable anticipation of its intermittency. A promising approach to forecast the temporal variability of solar irradiance resulting from the cloud cover dynamics is based on the analysis of sequences of ground-taken sky images. Despite encouraging results, a recurrent limitation of current Deep Learning approaches lies in the ubiquitous tendency of reacting to past observations rather than actively anticipating future events. This leads to a systematic temporal lag and little ability to predict sudden events. To address this challenge, we introduce ECLIPSE, a spatio-temporal neural network architecture that models cloud motion from sky images to predict both future segmented images and corresponding irradiance levels. We show that ECLIPSE anticipates critical events and considerably reduces temporal delay while generating visually realistic futures.

Benchmarking of Deep Learning Irradiance Forecasting Models from Sky Images -- an in-depth Analysis

Feb 02, 2021

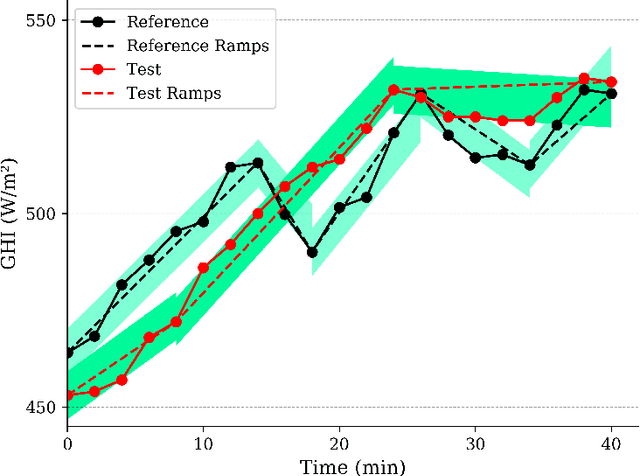

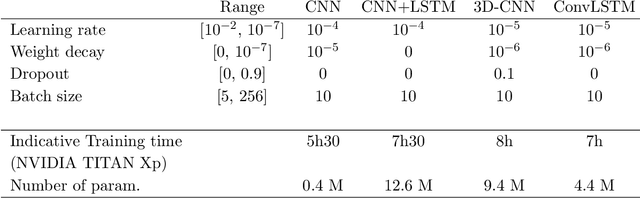

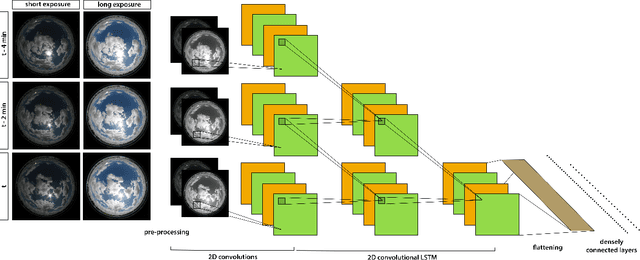

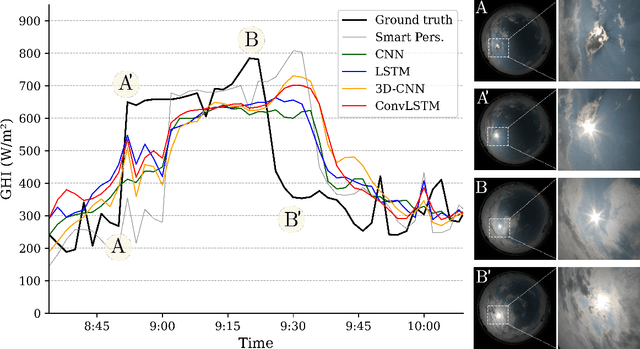

Abstract: A number of industrial applications, such as smart grids, power plant operation, hybrid system management or energy trading, could benefit from improved short-term solar forecasting, addressing the intermittent energy production from solar panels. However, current approaches to modelling the cloud cover dynamics from sky images still lack precision regarding the spatial configuration of clouds, their temporal dynamics and physical interactions with solar radiation. Benefiting from a growing number of large datasets, data driven methods are being developed to address these limitations with promising results. In this study, we compare four commonly used Deep Learning architectures trained to forecast solar irradiance from sequences of hemispherical sky images and exogenous variables. To assess the relative performance of each model, we used the Forecast Skill metric based on the smart persistence model, as well as ramp and time distortion metrics. The results show that encoding spatiotemporal aspects of the sequence of sky images greatly improved the predictions with 10 min ahead Forecast Skill reaching 20.4% on the test year. However, based on the experimental data, we conclude that, with a common setup, Deep Learning models tend to behave just as a 'very smart persistence model', temporally aligned with the persistence model while mitigating its most penalising errors. Thus, despite being captured by the sky cameras, models often miss fundamental events causing large irradiance changes such as clouds obscuring the sun. We hope that our work will contribute to a shift of this approach to irradiance forecasting, from reactive to anticipatory.

A Temporally Consistent Image-based Sun Tracking Algorithm for Solar Energy Forecasting Applications

Dec 02, 2020

Abstract: Improving irradiance forecasting is critical to further increase the share of solar in the energy mix. On a short time scale, fish-eye cameras on the ground are used to capture cloud displacements causing the local variability of the electricity production. As most of the solar radiation comes directly from the Sun, current forecasting approaches use its position in the image as a reference to interpret the cloud cover dynamics. However, existing Sun tracking methods rely on external data and a calibration of the camera, which requires access to the device. To address these limitations, this study introduces an image-based Sun tracking algorithm to localise the Sun in the image when it is visible and interpolate its daily trajectory from past observations. We validate the method on a set of sky images collected over a year at SIRTA's lab. Experimental results show that the proposed method provides robust, smooth Sun trajectories with a mean absolute error below 1% of the image size.

Convolutional Neural Networks applied to sky images for short-term solar irradiance forecasting

May 22, 2020

Abstract: Despite the advances in the field of solar energy, improvements of solar forecasting techniques, addressing the intermittent electricity production, remain essential for securing its future integration into a wider energy supply. A promising approach to anticipate irradiance changes consists of modeling the cloud cover dynamics from ground-taken or satellite images. This work presents preliminary results on the application of deep Convolutional Neural Networks for 2 to 20 min irradiance forecasting using hemispherical sky images and exogenous variables. We evaluate the models on a set of irradiance measurements and corresponding sky images collected in Palaiseau (France) over 8 months with a temporal resolution of 2 min. To outline the learning of neural networks in the context of short-term irradiance forecasting, we implemented visualisation techniques revealing the types of patterns recognised by trained algorithms in sky images. In addition, we show that training models with past samples of the same day improves their forecast skill, relative to the smart persistence model based on the Mean Square Error, by around 10% on a 10 min ahead prediction. These results emphasise the benefit of integrating previous same-day data in short-term forecasting. This, in turn, can be achieved through model fine tuning or using recurrent units to facilitate the extraction of relevant temporal features from past data.
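The forecast skill mentioned in this abstract can be sketched as a relative error reduction against a reference forecast. The snippet assumes the common definitions: smart persistence carries the current clear-sky index forward to the forecast time, and skill is one minus the ratio of the model's Mean Square Error to the reference's. The abstract does not spell out either formula, so both functions and all example values are hypothetical.

```python
import numpy as np

def smart_persistence(irradiance_now, clear_sky_now, clear_sky_future):
    """Reference forecast that keeps the clear-sky index constant.

    Assumed definition: the ratio of measured to clear-sky irradiance at
    the current time is applied to the clear-sky irradiance at the
    forecast time.
    """
    clear_sky_index = np.asarray(irradiance_now, dtype=float) / np.asarray(clear_sky_now, dtype=float)
    return clear_sky_index * np.asarray(clear_sky_future, dtype=float)

def forecast_skill(y_true, y_model, y_reference):
    """Skill of a model relative to a reference, based on the MSE.

    Returns 1 - MSE(model) / MSE(reference): positive when the model beats
    the reference, zero when they tie, negative when it is worse.
    """
    y_true = np.asarray(y_true, dtype=float)
    mse_model = np.mean((y_true - np.asarray(y_model, dtype=float)) ** 2)
    mse_reference = np.mean((y_true - np.asarray(y_reference, dtype=float)) ** 2)
    return 1.0 - mse_model / mse_reference

# Hypothetical irradiance values in W/m^2.
measured = np.array([100.0, 200.0, 300.0])
reference = np.array([110.0, 190.0, 320.0])
model = np.array([105.0, 195.0, 310.0])
skill = forecast_skill(measured, model, reference)
```

Some papers compute the same ratio with the Root Mean Square Error instead, which gives a different number for the same forecasts, so the error metric should be stated alongside any reported skill.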
