Quentin Mérigot

Properties of Wasserstein Gradient Flows for the Sliced-Wasserstein Distance

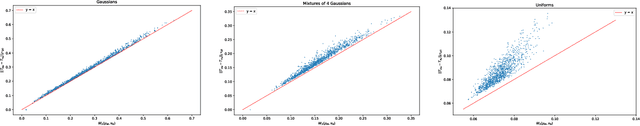

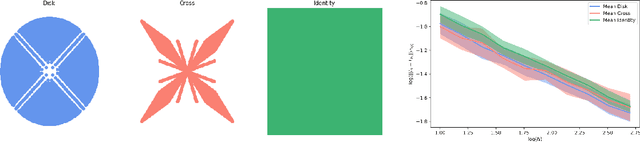

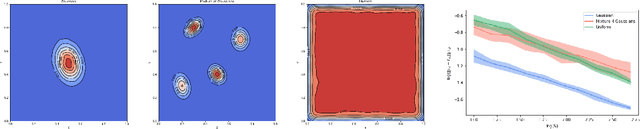

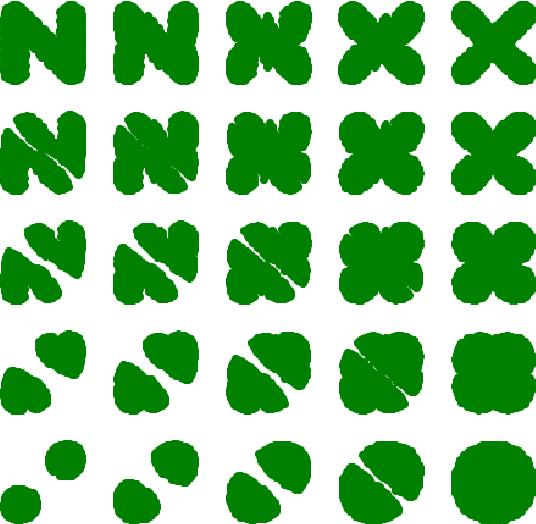

Feb 10, 2025

Abstract: In this paper, we investigate the properties of the Sliced Wasserstein Distance (SW) when employed as an objective functional. The SW metric has gained significant interest in the optimal transport and machine learning literature, due to its ability to capture intricate geometric properties of probability distributions while remaining computationally tractable, making it a valuable tool for various applications, including generative modeling and domain adaptation. Our study aims to provide a rigorous analysis of the critical points arising from the optimization of the SW objective. By computing explicit perturbations, we establish that stable critical points of SW cannot concentrate on segments. This stability analysis is crucial for understanding the behaviour of optimization algorithms for models trained using the SW objective. Furthermore, we investigate the properties of the SW objective, shedding light on the existence and convergence behavior of critical points. We illustrate our theoretical results through numerical experiments.

Quantitative stability of optimal transport maps and linearization of the 2-Wasserstein space

Oct 14, 2019

Abstract: This work studies an explicit embedding of the set of probability measures into a Hilbert space, defined using optimal transport maps from a reference probability density. This embedding linearizes to some extent the 2-Wasserstein space, and enables the direct use of generic supervised and unsupervised learning algorithms on measure data. Our main result is that the embedding is (bi-)Hölder continuous when the reference density is uniform over a convex set, and can be equivalently phrased as a dimension-independent Hölder-stability result for optimal transport maps.
