Alex Delalande

LMO, DATASHAPE

Nearly Tight Convergence Bounds for Semi-discrete Entropic Optimal Transport

Oct 25, 2021

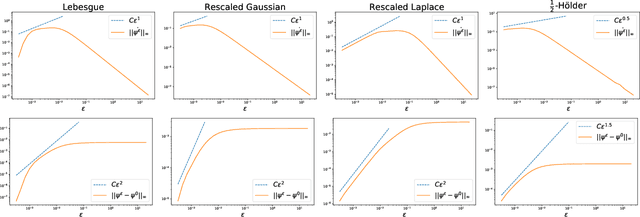

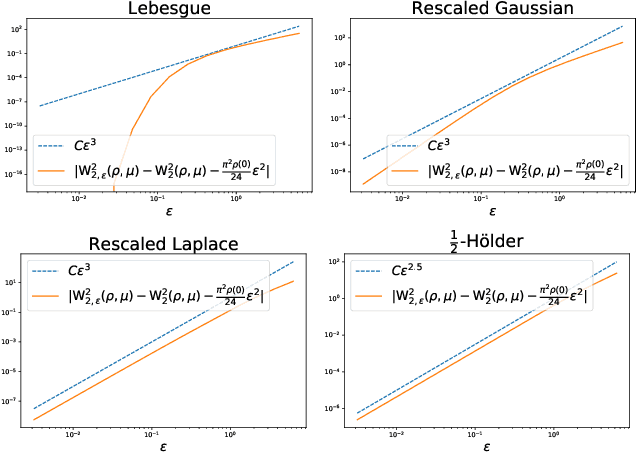

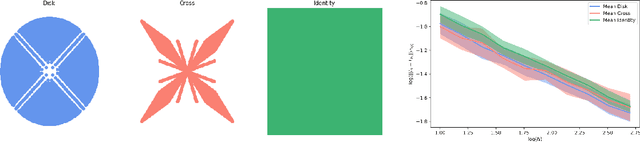

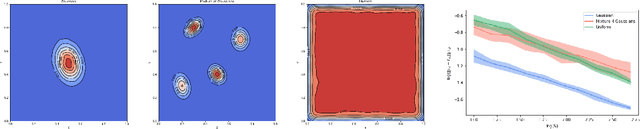

Abstract: We derive nearly tight and non-asymptotic convergence bounds for solutions of entropic semi-discrete optimal transport. These bounds quantify the stability of the dual solutions of the regularized problem (sometimes called Sinkhorn potentials) with respect to the regularization parameter, for which we ensure a better than Lipschitz dependence. Such facts may be a first step towards a mathematical justification of annealing or $\varepsilon$-scaling heuristics for the numerical resolution of regularized semi-discrete optimal transport. Our results also entail a non-asymptotic and tight expansion of the difference between the entropic and the unregularized costs.

Quantitative stability of optimal transport maps and linearization of the 2-Wasserstein space

Oct 14, 2019

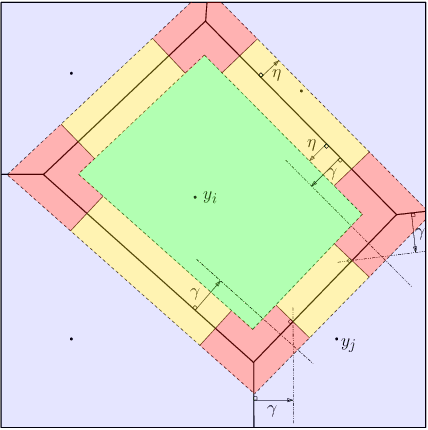

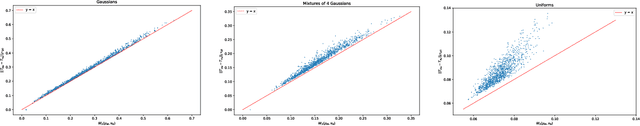

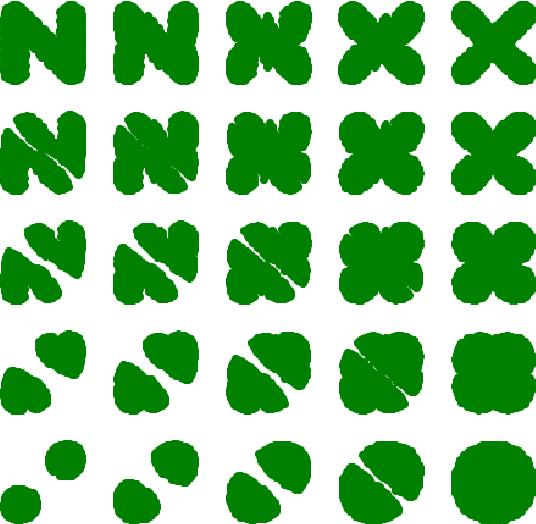

Abstract: This work studies an explicit embedding of the set of probability measures into a Hilbert space, defined using optimal transport maps from a reference probability density. This embedding linearizes to some extent the 2-Wasserstein space, and enables the direct use of generic supervised and unsupervised learning algorithms on measure data. Our main result is that the embedding is (bi-)Hölder continuous when the reference density is uniform over a convex set, and can be equivalently phrased as a dimension-independent Hölder-stability result for optimal transport maps.
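As a rough illustration of the embedding described in this abstract, the sketch below treats only the one-dimensional case, which is an assumption made here for simplicity and not the general setting of the paper. With a uniform reference density on [0, 1], the optimal transport map to a 1D measure is its quantile function, so a measure can be embedded as a vector of quantiles, and in 1D the L^2 distance between embeddings coincides with the 2-Wasserstein distance. The function names `embed_1d` and `embedding_distance` are hypothetical and chosen for this example.

```python
import numpy as np


def embed_1d(samples, n_grid=1000):
    """Quantile-function embedding of a 1D empirical measure.

    Assumption: the reference density is uniform on [0, 1], in which case
    the optimal transport map to a 1D measure is its quantile function.
    """
    # Midpoints of a regular grid on (0, 1), used to discretize L^2([0, 1]).
    t = (np.arange(n_grid) + 0.5) / n_grid
    return np.quantile(np.asarray(samples, dtype=float), t)


def embedding_distance(u, v):
    """Discretized L^2([0, 1]) distance between two embedded measures."""
    return float(np.sqrt(np.mean((u - v) ** 2)))


rng = np.random.default_rng(0)
x = rng.normal(size=500)
y = x + 3.0  # the same empirical measure translated by 3

# Translating a measure shifts its quantile function by the same amount,
# so the embedding distance should match the size of the translation.
d = embedding_distance(embed_1d(x), embed_1d(y))
print(d)
```

Once measures are represented as fixed-length vectors in this way, generic tools such as PCA or k-means can be applied to them directly, which is the kind of use the abstract alludes to. In higher dimension the transport maps have no closed form and must be computed numerically.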
