Qiude Zhang

Revisiting Monocular 3D Object Detection from Scene-Level Depth Retargeting to Instance-Level Spatial Refinement

Dec 26, 2024

Abstract: Monocular 3D object detection is challenging due to the lack of accurate depth. Existing depth-assisted solutions still perform poorly, and the reason is widely attributed to the unsatisfactory accuracy of monocular depth estimation models. In this paper, we revisit monocular 3D object detection from the depth perspective and identify an additional issue: the limited 3D structure-aware capability of existing depth representations (e.g., depth one-hot encoding or depth distribution). To address this issue, we propose a novel depth-adapted monocular 3D object detection network, termed RD3D, that mainly comprises a Scene-Level Depth Retargeting (SDR) module and an Instance-Level Spatial Refinement (ISR) module. The former incorporates scene-level perception of 3D structures, retargeting traditional depth representations to a new formulation, the Depth Thickness Field. The latter refines the voxel spatial representation with the guidance of instances, eliminating the ambiguity of 3D occupancy and thus improving detection accuracy. Extensive experiments on the KITTI and Waymo datasets demonstrate the method's superiority over existing state-of-the-art (SoTA) methods and its universality when equipped with different depth estimation models. The code will be available.

A Plug-and-Play Untrained Neural Network for Full Waveform Inversion in Reconstructing Sound Speed Images of Ultrasound Computed Tomography

Jun 14, 2024

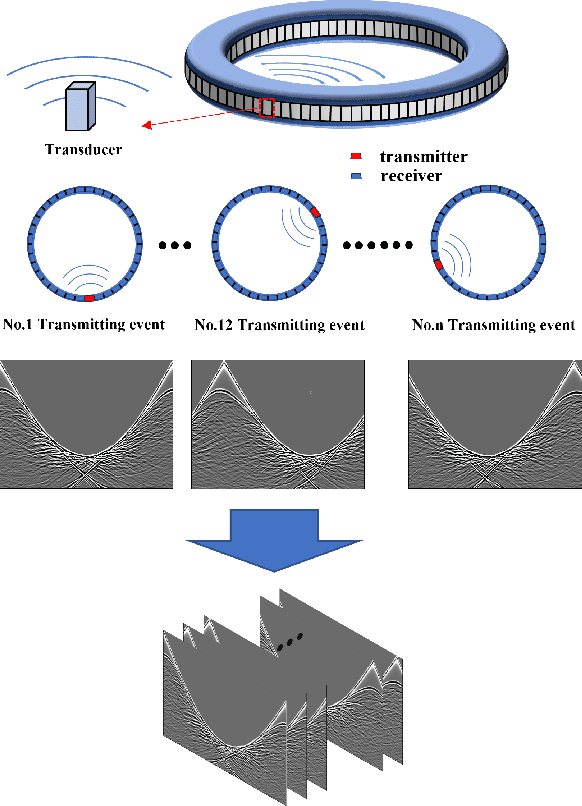

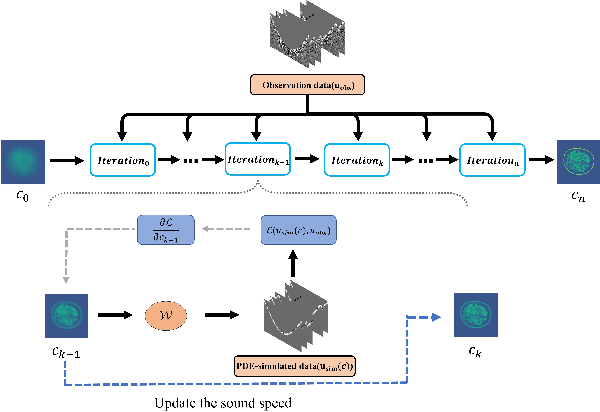

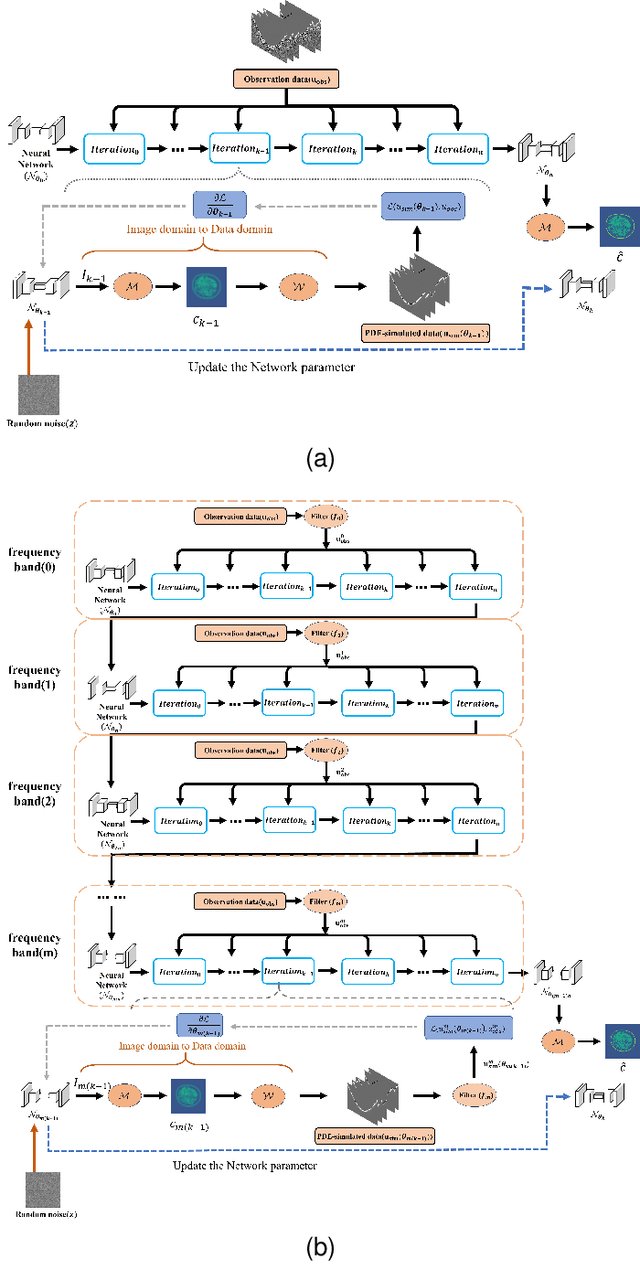

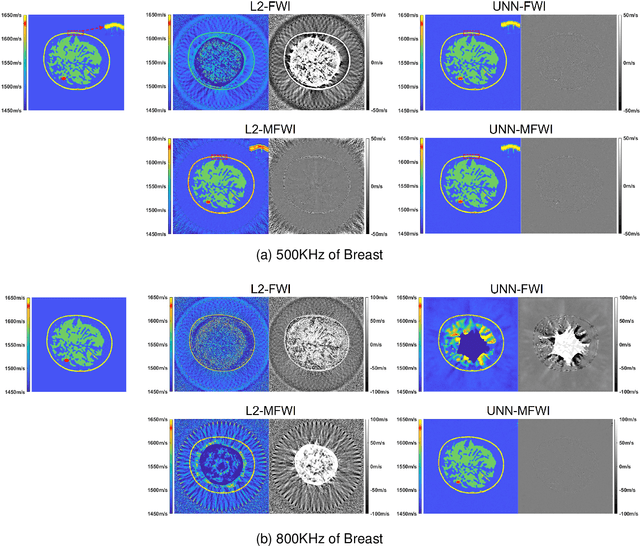

Abstract: Ultrasound computed tomography (USCT) is an emerging technology that can provide multiple quantitative parametric images of human tissue, such as sound speed and attenuation images, which distinguishes it from conventional B-mode (reflection) ultrasound imaging. Full waveform inversion (FWI) is regarded as the technique with the greatest potential for reconstructing high-resolution sound speed images in USCT. However, traditional FWI for sound speed image reconstruction is highly sensitive to the initial model because of its strong non-convex nonlinearity, resulting in poor performance when ultrasound signals are at high frequencies. This limitation significantly restricts the application of FWI in USCT imaging. In this paper, we propose an untrained neural network (UNN) that can be integrated into the traditional iteration-based FWI framework as an implicit regularization prior. This integration allows for seamless deployment as a plug-and-play module within existing FWI algorithms or their variants. Notably, the proposed UNN method can be trained in an unsupervised fashion, a vital aspect in medical imaging, where ground truth data is often unavailable. Evaluations on a numerical simulation and a breast phantom experiment demonstrate that the proposed UNN improves the robustness of image reconstruction, reduces image artifacts, and achieves high image contrast. To the best of our knowledge, this study represents the first attempt to propose an implicit UNN for FWI in reconstructing sound speed images for USCT.
