Qinyun Tang

Balancing Value Underestimation and Overestimation with Realistic Actor-Critic

Nov 10, 2021

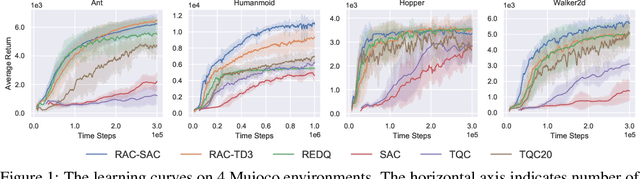

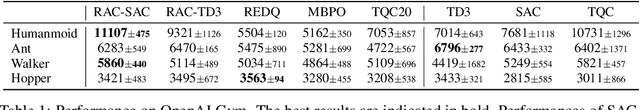

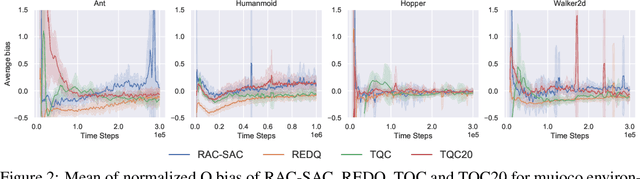

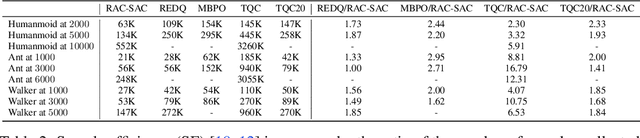

Abstract: Model-free deep reinforcement learning (RL) has been successfully applied to challenging continuous control domains. However, poor sample efficiency prevents these methods from being widely used in real-world domains. We address this problem by proposing a novel model-free algorithm, Realistic Actor-Critic (RAC), which aims to balance the trade-off between value underestimation and overestimation by learning a family of policies conditioned on different confidence bounds of the Q-function. We construct uncertainty punished Q-learning (UPQ), which uses the uncertainty from an ensemble of multiple critics to control the estimation bias of the Q-function, so that the Q-functions shift smoothly from lower- to higher-confidence bounds. Guided by these critics, RAC employs Universal Value Function Approximators (UVFA) to simultaneously learn many optimistic and pessimistic policies with the same neural network. Optimistic policies generate effective exploratory behaviors, while pessimistic policies reduce the risk of value overestimation to ensure stable updates of policies and Q-functions. The proposed method can be incorporated into any off-policy actor-critic RL algorithm. Our method achieves 10x sample efficiency and a 25\% performance improvement compared to SAC on the most challenging Humanoid environment, obtaining an episode reward of $11107\pm 475$ at $10^6$ time steps. The source code is available at https://github.com/ihuhuhu/RAC.
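To illustrate how an ensemble's disagreement might move a value estimate between confidence bounds, here is a minimal sketch. It is one plausible reading of the abstract, not the authors' implementation: the mean-minus-scaled-standard-deviation form, the function name `uncertainty_punished_value`, and the parameter `beta` are all assumptions introduced for this example.

```python
import numpy as np


def uncertainty_punished_value(q_ensemble, beta):
    """Combine ensemble Q-estimates into one value (hypothetical helper).

    q_ensemble: array of shape (n_critics, batch) with each critic's estimate.
    beta: assumed trade-off coefficient; positive values penalize
          disagreement (pessimistic), negative values reward it (optimistic).
    """
    q = np.asarray(q_ensemble, dtype=float)
    mean = q.mean(axis=0)   # ensemble average per sample
    std = q.std(axis=0)     # ensemble disagreement per sample (population std)
    return mean - beta * std


# Two critics, two state-action pairs: the critics disagree on the first
# pair and agree on the second.
q_values = np.array([[1.0, 4.0],
                     [3.0, 4.0]])

pessimistic = uncertainty_punished_value(q_values, beta=1.0)
optimistic = uncertainty_punished_value(q_values, beta=-1.0)
```

In this sketch, varying `beta` shifts the estimate only where the critics disagree; where they agree, the optimistic and pessimistic values coincide. A policy conditioned on `beta`, in the spirit of UVFA, could then cover the whole range with a single network.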
