Qingju Liu

Content and Style Aware Audio-Driven Facial Animation

Aug 14, 2024

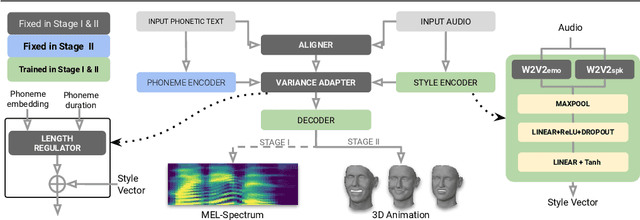

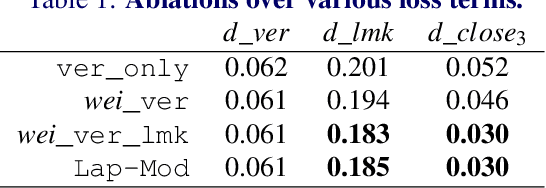

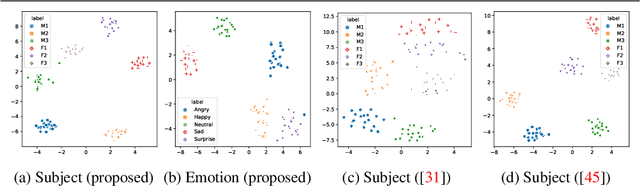

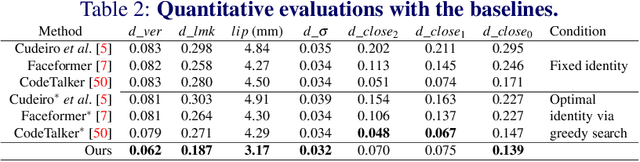

Abstract: Audio-driven 3D facial animation has several virtual-human applications in content creation and editing. While several existing methods provide solutions for speech-driven animation, precise control over the content (what) and style (how) of the final performance remains challenging. We propose a novel approach that takes as input an audio clip and the corresponding text, and extracts temporally aligned content and disentangled style representations to provide control over 3D facial animation. Our method is trained in two stages, evolving from audio-prominent styles (how it sounds) to visual-prominent styles (how it looks). In stage I, we leverage a high-resource audio dataset to learn styles that control speech generation in a self-supervised learning framework; in stage II, we fine-tune this model with low-resource audio/3D mesh pairs to control 3D vertex generation. We employ a non-autoregressive seq2seq formulation to model sentence-level dependencies and improve mouth articulation. Our method is flexible: the style of a reference audio and the content of a source audio can be combined to enable audio style transfer. Similarly, the content can be modified, e.g. by muting or swapping words, which enables style-preserving content editing.

An Intelligent Bed Sensor System for Non-Contact Respiratory Rate Monitoring

Mar 25, 2021

Abstract: We present an IoT-based intelligent bed sensor system that collects and analyses respiration-associated signals for unobtrusive monitoring in homes, hospitals and care units. The system uses a contactless device comprising four load sensors mounted under the bed and one data processing unit (data logger). Various machine learning methods are applied to the data streamed from the data logger to detect the respiratory rate (RR). We have implemented Support Vector Machine (SVM) and Neural Network (NN)-based pattern recognition methods, each combined with either peak detection or the Hilbert transform for robust RR calculation. Experimental results show that our methods can effectively extract RR from the data collected by the contactless bed sensors. The proposed methods are robust to outliers and noise caused by body movements. The monitoring system provides a flexible and scalable way to monitor sleep, movement and weight continuously and remotely using the embedded sensors.
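As a rough illustration of the peak-detection route to RR mentioned in the abstract, the sketch below sums four load-sensor channels, removes the slow baseline, and converts the mean peak-to-peak interval into breaths per minute. It is not the authors' pipeline: the SVM/NN pattern recognition stage is omitted, and the function names, window lengths, sampling rate and minimum breath spacing are all assumptions chosen for the example.

```python
import math


def moving_average(x, win):
    """Centered moving average; the window is truncated at the edges."""
    half = win // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def respiratory_rate_bpm(channels, fs, min_breath_s=1.5):
    """Estimate breaths per minute from load-sensor channels sampled at fs Hz.

    Returns None when fewer than two breath peaks are found.
    All thresholds here are illustrative assumptions, not values from the paper.
    """
    # Sum the channels into a single total-load signal.
    total = [sum(samples) for samples in zip(*channels)]
    # Remove the slow baseline (body weight, drift) with a ~10 s moving average.
    baseline = moving_average(total, int(10 * fs) | 1)
    detrended = [t - b for t, b in zip(total, baseline)]
    # Light ~0.5 s smoothing to suppress sensor noise.
    smooth = moving_average(detrended, int(0.5 * fs) | 1)
    # Keep positive local maxima that are at least min_breath_s apart.
    min_gap = int(min_breath_s * fs)
    peaks = []
    for i in range(1, len(smooth) - 1):
        is_peak = smooth[i] >= smooth[i - 1] and smooth[i] > smooth[i + 1]
        if smooth[i] > 0 and is_peak:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    if len(peaks) < 2:
        return None
    # Mean peak-to-peak interval in seconds, converted to breaths per minute.
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fs
    return 60.0 / mean_interval_s


def synthetic_channels(rate_bpm, fs, seconds, n_channels=4):
    """Hypothetical test signal: constant load plus a small breathing sinusoid."""
    f = rate_bpm / 60.0
    n = int(seconds * fs)
    one = [20.0 + 0.05 * math.sin(2 * math.pi * f * i / fs) for i in range(n)]
    return [list(one) for _ in range(n_channels)]
```

Calling `respiratory_rate_bpm(synthetic_channels(15, 20, 60), 20)` on the synthetic signal should recover a rate close to the 15 breaths per minute used to generate it. Real recordings would need the movement-artefact handling the abstract describes, which this sketch does not attempt.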
