Puntawat Ponglertnapakorn

Where Is The Ball: 3D Ball Trajectory Estimation From 2D Monocular Tracking

Jun 06, 2025Abstract:We present a method for 3D ball trajectory estimation from a 2D tracking sequence. To overcome the ambiguity in 3D from 2D estimation, we design an LSTM-based pipeline that utilizes a novel canonical 3D representation that is independent of the camera's location to handle arbitrary views and a series of intermediate representations that encourage crucial invariance and reprojection consistency. We evaluated our method on four synthetic and three real datasets and conducted extensive ablation studies on our design choices. Despite training solely on simulated data, our method achieves state-of-the-art performance and can generalize to real-world scenarios with multiple trajectories, opening up a range of applications in sport analysis and virtual replay. Please visit our page: https://where-is-the-ball.github.io.

DiFaReli: Diffusion Face Relighting

Apr 21, 2023

Abstract:We present a novel approach to single-view face relighting in the wild. Handling non-diffuse effects, such as global illumination or cast shadows, has long been a challenge in face relighting. Prior work often assumes Lambertian surfaces, simplified lighting models or involves estimating 3D shape, albedo, or a shadow map. This estimation, however, is error-prone and requires many training examples with lighting ground truth to generalize well. Our work bypasses the need for accurate estimation of intrinsic components and can be trained solely on 2D images without any light stage data, multi-view images, or lighting ground truth. Our key idea is to leverage a conditional diffusion implicit model (DDIM) for decoding a disentangled light encoding along with other encodings related to 3D shape and facial identity inferred from off-the-shelf estimators. We also propose a novel conditioning technique that eases the modeling of the complex interaction between light and geometry by using a rendered shading reference to spatially modulate the DDIM. We achieve state-of-the-art performance on standard benchmark Multi-PIE and can photorealistically relight in-the-wild images. Please visit our page: https://diffusion-face-relighting.github.io

Revealing Preference in Popular Music Through Familiarity and Brain Response

Jan 30, 2021

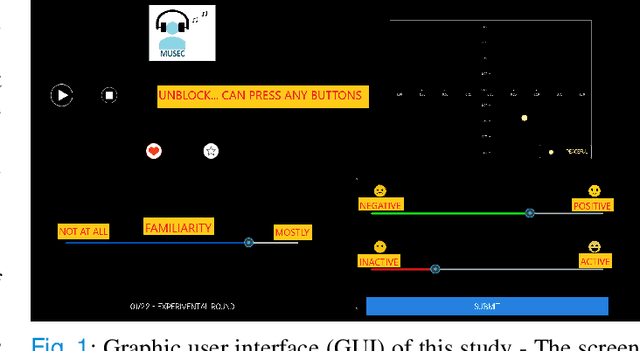

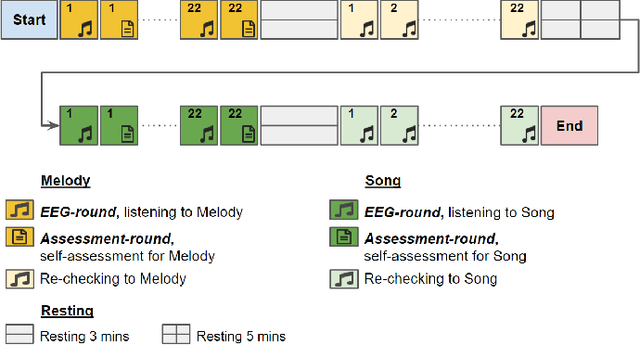

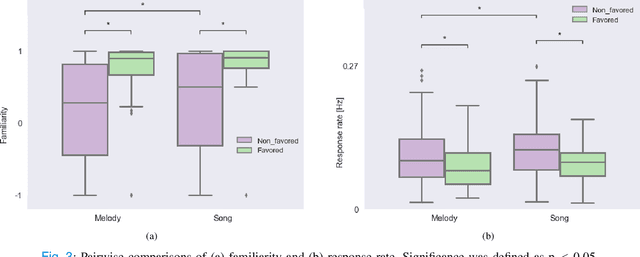

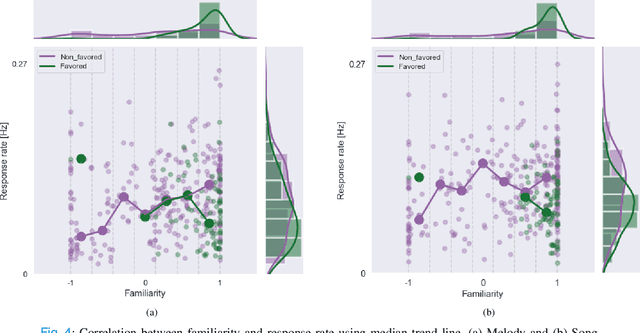

Abstract:Music preference was reported as a factor, which could elicit innermost music emotion, entailing accurate ground-truth data and music therapy efficiency. This study executes statistical analysis to investigate the distinction of music preference through familiarity scores, response times (response rates), and brain response (EEG). Twenty participants did self-assessment after listening to two types of popular music's chorus section: music without lyrics (Melody) and music with lyrics (Song). We then conduct a music preference classification using a support vector machine (SVM) with the familiarity scores, the response rates, and EEG as the feature vectors. The statistical analysis and SVM's F1-score of EEG are congruent, which is the brain's right side outperformed its left side in classification performance. Finally, these behavioral and brain studies support that preference, familiarity, and response rates can contribute to the music emotion experiment's design to understand music, emotion, and listener. Not only to the music industry, the biomedical, and healthcare industry can also exploit this experiment to collect data from patients to improve the efficiency of healing by music.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge