Revealing Preference in Popular Music Through Familiarity and Brain Response


Jan 30, 2021

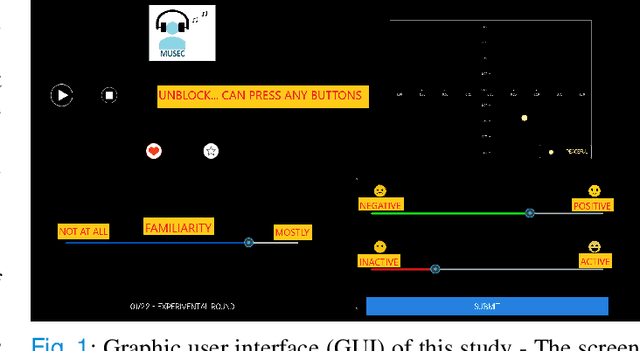

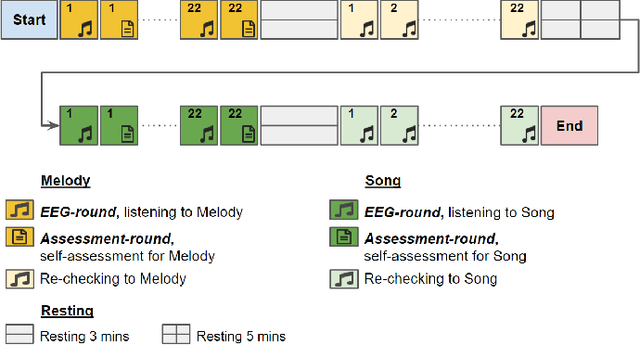

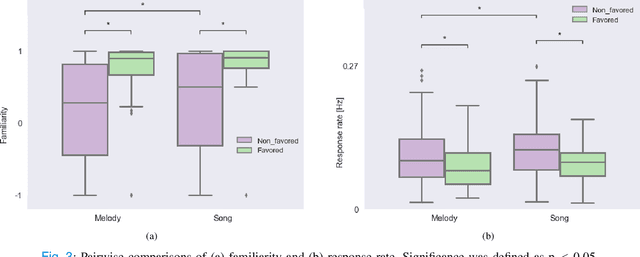

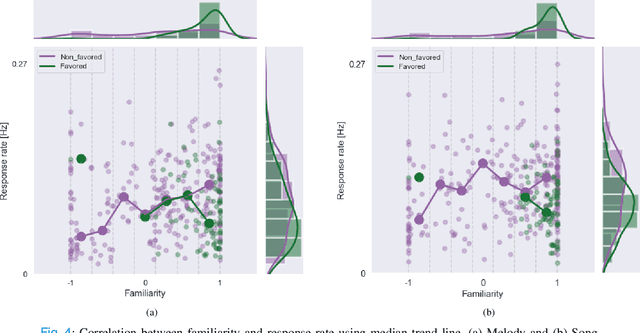

Music preference has been reported as a factor that can elicit a listener's innermost musical emotions, which matters both for collecting accurate ground-truth data and for the efficiency of music therapy. This study uses statistical analysis to investigate differences in music preference through familiarity scores, response times (response rates), and brain responses (EEG). Twenty participants completed self-assessments after listening to the chorus sections of popular music in two versions: music without lyrics (Melody) and music with lyrics (Song). We then performed music preference classification using a support vector machine (SVM), with the familiarity scores, the response rates, and EEG as feature vectors. The statistical analysis and the SVM F1-scores on EEG agree: features from the right side of the brain outperformed those from the left side in classification performance. Finally, these behavioral and brain studies support the view that preference, familiarity, and response rates can inform the design of music emotion experiments aimed at understanding music, emotion, and listeners. Beyond the music industry, the biomedical and healthcare industries could also use this experimental design to collect data from patients and improve the efficiency of healing through music.
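The classification step can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' pipeline: the abstract does not give the SVM kernel, solver, feature scaling, or validation scheme, so the code assumes a linear SVM trained with the Pegasos sub-gradient method, z-score scaling fitted on each training fold, and k-fold cross-validation with F1 computed on pooled predictions. Labels are +1 (preferred) and -1 (not preferred). All function names and the synthetic demo data are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def fit_standardizer(X: Matrix) -> Tuple[List[float], List[float]]:
    """Per-feature mean and population std (a std of 0 is replaced by 1)."""
    n = len(X)
    means = [sum(col) / n for col in zip(*X)]
    stds = []
    for col, m in zip(zip(*X), means):
        var = sum((v - m) ** 2 for v in col) / n
        stds.append(var ** 0.5 or 1.0)
    return means, stds


def apply_standardizer(X: Matrix, means: List[float], stds: List[float]) -> Matrix:
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


def train_linear_svm(X: Matrix, y: List[int], lam: float = 0.01,
                     epochs: int = 50, seed: int = 0) -> List[float]:
    """Pegasos: stochastic sub-gradient descent on the L2-regularized hinge loss.

    A constant 1.0 feature is appended so the last weight acts as the bias
    (the bias is regularized too, which is a simplification).
    """
    rng = random.Random(seed)
    Xb = [list(row) + [1.0] for row in X]
    w = [0.0] * len(Xb[0])
    order = list(range(len(Xb)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            violates = y[i] * dot(w, Xb[i]) < 1.0  # margin checked with the current w
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink: regularization step
            if violates:  # hinge-loss step toward y * x
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def predict(w: List[float], X: Matrix) -> List[int]:
    return [1 if dot(w, list(row) + [1.0]) >= 0.0 else -1 for row in X]


def f1_score(y_true: List[int], y_pred: List[int], positive: int = 1) -> float:
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # convention: no true positives gives F1 = 0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cross_val_f1(X: Matrix, y: List[int], k: int = 5, seed: int = 0) -> float:
    """k-fold CV; scaling is fitted on each training fold only."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    y_true: List[int] = []
    y_pred: List[int] = []
    for f in range(k):
        test = idx[f::k]
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        means, stds = fit_standardizer([X[i] for i in train])
        Xtr = apply_standardizer([X[i] for i in train], means, stds)
        Xte = apply_standardizer([X[i] for i in test], means, stds)
        w = train_linear_svm(Xtr, [y[i] for i in train], seed=seed)
        y_pred += predict(w, Xte)
        y_true += [y[i] for i in test]
    return f1_score(y_true, y_pred)


if __name__ == "__main__":
    # Synthetic, illustrative trials only (NOT the study's data). Each row is
    # [familiarity score, response time in s, hypothetical EEG feature].
    rng = random.Random(42)
    X, y = [], []
    for _ in range(40):
        label = rng.choice([1, -1])
        X.append([3 + label + rng.gauss(0, 0.8),
                  2.0 - 0.3 * label + rng.gauss(0, 0.5),
                  0.5 * label + rng.gauss(0, 1.0)])
        y.append(label)
    print("F1, all features:", round(cross_val_f1(X, y), 3))
    print("F1, EEG column only:", round(cross_val_f1([[r[2]] for r in X], y), 3))
```

Passing only a subset of columns to `cross_val_f1` (for example, channels from one hemisphere) is one way to compare feature sets in the spirit of the left/right comparison above; which channels belong to each side depends on the EEG montage, which the abstract does not specify.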
