Priyadarshi Patnaik

Facial asymmetry: A Computer Vision based behaviometric index for assessment during a face-to-face interview

Oct 30, 2023

Abstract: Choosing the right person for the right job makes the personnel interview process a cognitively demanding task. Psychometric tests, followed by an interview, have often been used to aid the process, although such mechanisms have their limitations. While psychometric tests suffer from faking or socially desirable responding, the outcome of an interview depends on how the interviewers analyze the responses. We propose the use of behaviometry as an assistive tool to facilitate an objective assessment of the interviewee without increasing the cognitive load of the interviewer. Behaviometry, a relatively unexplored field of study in the selection process, utilizes inimitable behavioral characteristics such as facial expressions, vocalization patterns, pupillary reactions, proximal behavior, and body language. The method analyzes thin slices of behavior and provides unbiased information about the interviewee. The current study proposes the methodology behind this tool to capture facial expressions, in terms of facial asymmetry and micro-expressions. As a test case, hemi-facial composites were compared using a structural similarity index to develop a progressive time graph of facial asymmetry. A frame-by-frame analysis was performed on three YouTube video samples, where structural similarity index (SSID) scores of 75% or more showed behavioral congruence. The research utilizes open-source computer vision algorithms and libraries (python-opencv and dlib) to formulate the procedure for the analysis of facial asymmetry.
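The frame-by-frame asymmetry scoring described in the abstract can be illustrated with a minimal sketch. This is not the authors' pipeline: it assumes each frame is already a cropped grayscale face with the facial midline at the center column (the paper uses python-opencv and dlib for that step), it builds left-left and right-right composites by mirroring each half, and it replaces the usual windowed structural similarity with a single-window (global) version so that only NumPy is needed. The function names and the use of 0.75 as a cut-off (taken from the 75% figure in the abstract) are illustrative assumptions.

```python
import numpy as np


def global_ssim(a, b, data_range=255.0):
    """Single-window SSIM over two whole images (a simplification of
    the usual sliding-window SSIM; assumed here for illustration)."""
    a = a.astype(np.float64)
    b = b.astype(np.float64)
    c1 = (0.01 * data_range) ** 2  # standard SSIM stabilizing constants
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den


def hemifacial_composites(face):
    """Build left-left and right-right composites from a 2D grayscale
    face crop whose midline is assumed to lie at the center column."""
    w = face.shape[1]
    half = w // 2
    left = face[:, :half]
    right = face[:, w - half:]
    left_left = np.hstack([left, left[:, ::-1]])       # left half + its mirror
    right_right = np.hstack([right[:, ::-1], right])   # mirrored right + right half
    return left_left, right_right


def symmetry_score(face):
    """Similarity between the two composites; 1.0 means perfectly symmetric."""
    left_left, right_right = hemifacial_composites(face)
    return global_ssim(left_left, right_right)


def symmetry_series(frames, threshold=0.75):
    """Score every frame and flag those at or above the threshold
    (0.75 mirrors the 75% figure quoted in the abstract)."""
    scores = [symmetry_score(f) for f in frames]
    flags = [s >= threshold for s in scores]
    return scores, flags
```

Plotting the returned scores against frame index would give a progressive time graph of the kind the abstract mentions; in practice the face crop and midline would come from a landmark detector rather than being assumed.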

The Indian Spontaneous Expression Database for Emotion Recognition

Jun 16, 2016

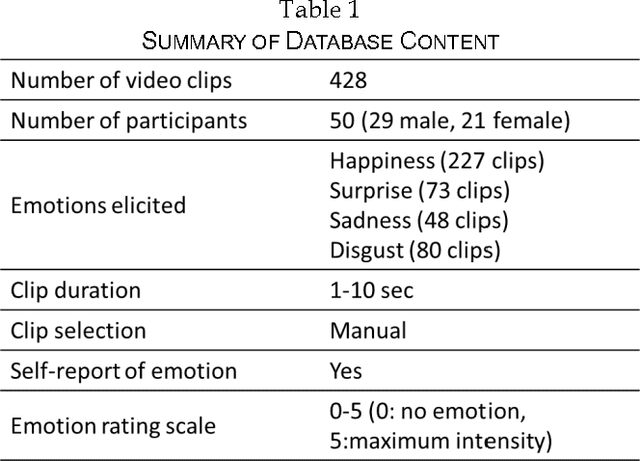

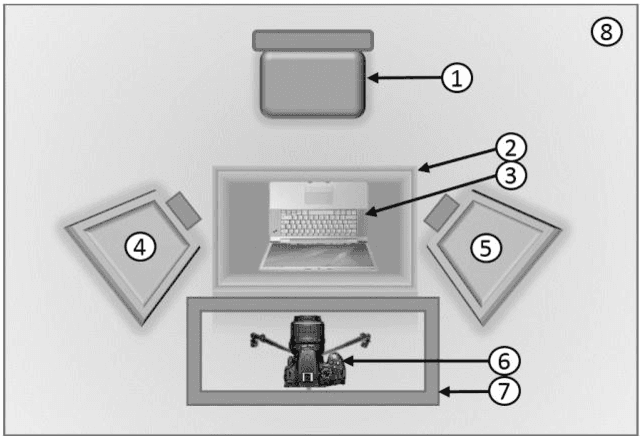

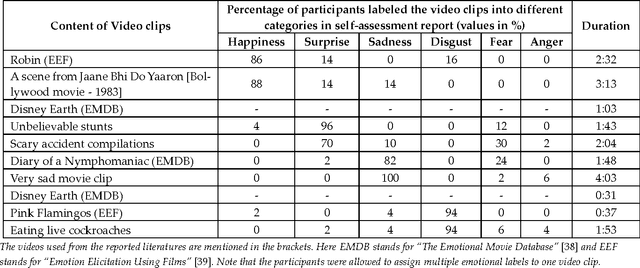

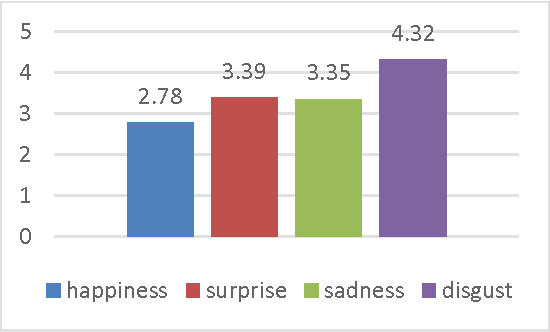

Abstract: Automatic recognition of spontaneous facial expressions is a major challenge in the field of affective computing. Head rotation, face pose, illumination variation, occlusion, etc. are attributes that increase the complexity of recognizing spontaneous expressions in practical applications. Effective recognition of expressions depends significantly on the quality of the database used. Most well-known facial expression databases consist of posed expressions. However, there is currently a huge demand for spontaneous expression databases for the practical implementation of facial expression recognition algorithms. In this paper, we propose and establish a new facial expression database containing spontaneous expressions of both male and female participants of Indian origin. The database consists of 428 segmented video clips of the spontaneous facial expressions of 50 participants. In our experiment, emotions were induced in the participants using emotional videos, and their self-ratings were simultaneously collected for each experienced emotion. Facial expression clips were annotated carefully by four trained decoders, and the annotations were further validated against the nature of the stimuli used and the self-reports of emotions. An extensive analysis was carried out on the database using several machine learning algorithms, and the results are provided for future reference. Such a spontaneous database will help in the development and validation of algorithms for recognition of spontaneous expressions.
