Pritam Dash

SpecGuard: Specification Aware Recovery for Robotic Autonomous Vehicles from Physical Attacks

Aug 27, 2024

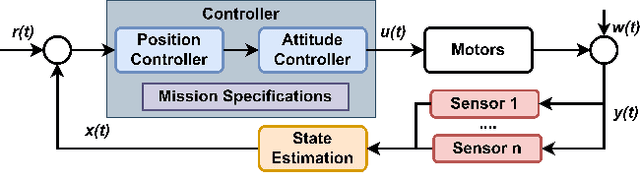

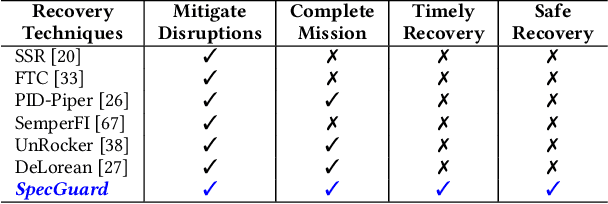

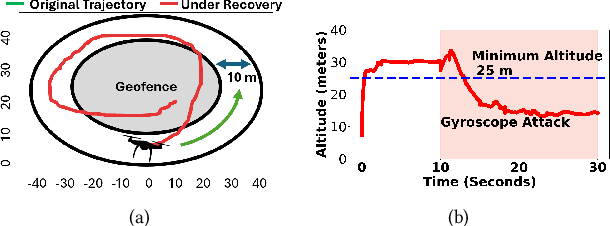

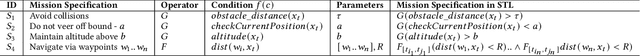

Abstract: Robotic Autonomous Vehicles (RAVs) rely on their sensors for perception, and follow strict mission specifications (e.g., altitude, speed, and geofence constraints) for safe and timely operations. Physical attacks can corrupt the RAVs' sensors, resulting in mission failures. Recovering RAVs from such attacks demands robust control techniques that maintain compliance with mission specifications even under attack, to ensure the RAV's safety and timely operation. We propose SpecGuard, a technique that complies with mission specifications and performs safe recovery of RAVs. There are two innovations in SpecGuard. First, it introduces an approach to incorporate mission specifications and learn a recovery control policy using Deep Reinforcement Learning (Deep-RL). We design a compliance-based reward structure that reflects the RAV's complex dynamics and enables SpecGuard to satisfy multiple mission specifications simultaneously. Second, SpecGuard incorporates state reconstruction, a technique that minimizes attack-induced sensor perturbations. This reconstruction enables effective adversarial training and optimization of the recovery control policy for robustness under attacks. We evaluate SpecGuard on both virtual and real RAVs, and find that it achieves a 92% recovery success rate under attacks on different sensors, without any crashes or stalls. SpecGuard achieves 2X higher recovery success than prior work, and incurs about 15% performance overhead on real RAVs.
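To make the idea of a compliance-based reward concrete, here is a minimal sketch in Python. It is not SpecGuard's actual reward structure, which the abstract does not spell out. The `State` and `MissionSpec` types, the circular geofence centred at the origin, and the choice to score a state by its least-satisfied specification are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class State:
    altitude: float  # metres
    speed: float     # metres per second
    x: float         # horizontal position, metres
    y: float


@dataclass
class MissionSpec:
    # Hypothetical upper bounds; a real mission may also have lower bounds.
    max_altitude: float
    max_speed: float
    geofence_radius: float  # assumed circular geofence centred at the origin


def margin(limit: float, value: float) -> float:
    """Normalised slack: positive inside the limit, negative once violated."""
    return (limit - value) / limit


def compliance_reward(state: State, spec: MissionSpec) -> float:
    """Score a state by its least-satisfied specification, clipped to [-1, 1]."""
    distance = (state.x ** 2 + state.y ** 2) ** 0.5
    margins = [
        margin(spec.max_altitude, state.altitude),
        margin(spec.max_speed, state.speed),
        margin(spec.geofence_radius, distance),
    ]
    # Taking the minimum means no specification can be traded off for another.
    return max(-1.0, min(1.0, min(margins)))
```

Using the minimum margin is one possible way to push a policy toward satisfying all specifications at once; a weighted sum would be another, at the cost of letting a large slack in one specification mask a violation of another.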

Replay-based Recovery for Autonomous Robotic Vehicles from Sensor Deception Attacks

Sep 17, 2022

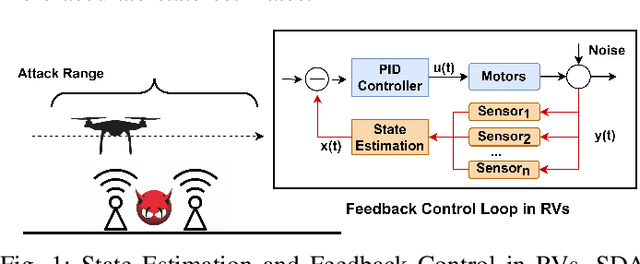

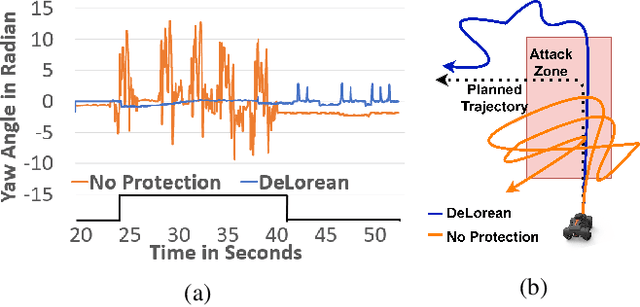

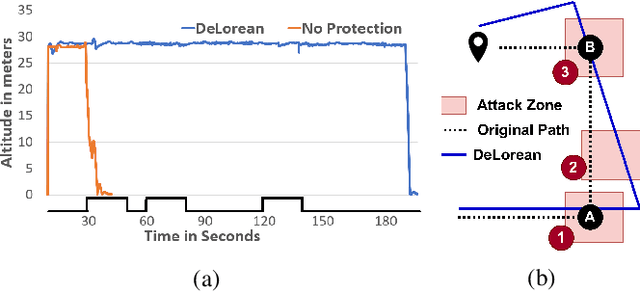

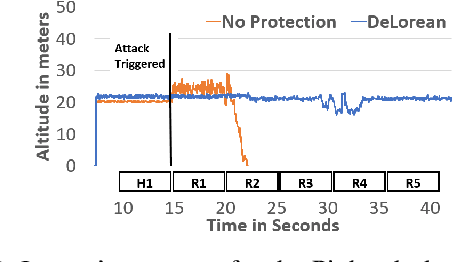

Abstract: Sensors are crucial for autonomous operation in robotic vehicles (RVs). Physical attacks on sensors, such as sensor tampering or spoofing, can feed erroneous values to RVs through physical channels, resulting in mission failures. In this paper, we present DeLorean, a comprehensive diagnosis and recovery framework for securing autonomous RVs from physical attacks. We consider a strong form of physical attack called sensor deception attacks (SDAs), in which the adversary targets multiple sensors of different types simultaneously (possibly all sensors). Under SDAs, DeLorean inspects the attack-induced errors, identifies the targeted sensors, and prevents the erroneous sensor inputs from being used in the RV's feedback control loop. DeLorean replays historic state information in the feedback control loop and recovers the RV from attacks. Our evaluation on four real and two simulated RVs shows that DeLorean can recover RVs from different attacks and ensure mission success in 94% of the cases (on average), without any crashes. DeLorean incurs low performance, memory, and battery overheads.
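The replay step described above can be illustrated with a small Python sketch. This is not DeLorean's implementation: the `ReplayBuffer` class, its method names, and the dictionary-of-sensor-readings representation are hypothetical, and the set of attacked sensors is assumed to come from a separate diagnosis step that is not shown.

```python
from collections import deque


class ReplayBuffer:
    """Keeps recent trusted sensor states for replay during an attack."""

    def __init__(self, size: int):
        # Bounded history: the oldest entry is dropped once `size` is reached.
        self.history = deque(maxlen=size)

    def record(self, state: dict) -> None:
        """Store a copy of a state that was judged attack-free."""
        self.history.append(dict(state))

    def recover(self, measured: dict, attacked: set) -> dict:
        """Swap readings of attacked sensors for the last trusted values."""
        if not self.history:
            return dict(measured)  # nothing to replay yet
        last = self.history[-1]
        return {
            name: last.get(name, value) if name in attacked else value
            for name, value in measured.items()
        }
```

For example, if `{"gps": 1.0, "gyro": 0.1}` was recorded before the attack and the GPS is later flagged, `recover({"gps": 99.0, "gyro": 0.2}, {"gps"})` passes the live gyroscope reading through and replaces the GPS reading with the recorded one. A real system would likely also need to propagate the replayed state forward in time rather than hold it constant.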

Turning Your Strength against You: Detecting and Mitigating Robust and Universal Adversarial Patch Attack

Aug 11, 2021

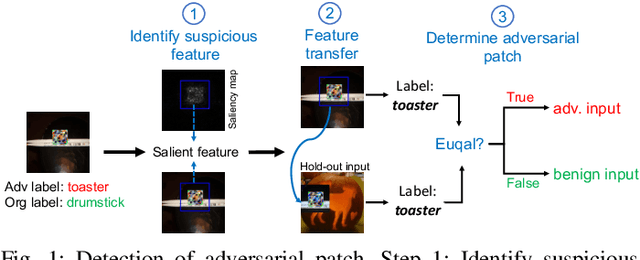

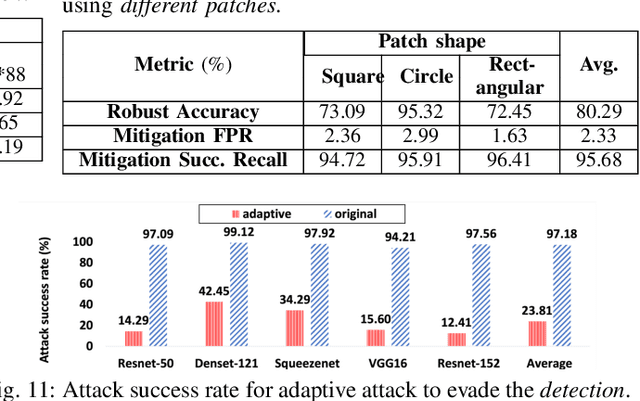

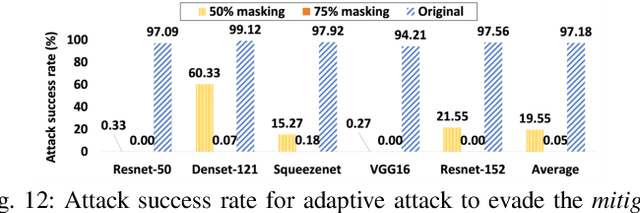

Abstract: Adversarial patch attacks against image classification deep neural networks (DNNs), in which the attacker can inject arbitrary distortions within a bounded region of an image, can generate adversarial perturbations that are robust (i.e., remain adversarial in the physical world) and universal (i.e., remain adversarial on any input). It is thus important to detect and mitigate such attacks to ensure the security of DNNs. This work proposes Jujutsu, a technique to detect and mitigate robust and universal adversarial patch attacks. Jujutsu leverages the universal property of the patch attack for detection. It uses an explainable AI technique to identify suspicious features that are potentially malicious, and verifies their maliciousness by transplanting the suspicious features to new images. An adversarial patch continues to exhibit the malicious behavior on the new images and thus can be detected based on prediction consistency. Jujutsu leverages the localized nature of the patch attack for mitigation, by randomly masking the suspicious features to "remove" adversarial perturbations. However, the network might fail to classify the images because some of the contents are removed (masked). Therefore, Jujutsu uses image inpainting to synthesize alternative contents for the masked pixels, which can reconstruct the "clean" image for correct prediction. We evaluate Jujutsu on five DNNs and two datasets, and show that Jujutsu achieves superior performance and significantly outperforms existing techniques. Jujutsu can further defend against several variants of the basic attack, including 1) physical-world attacks; 2) attacks that target diverse classes; 3) attacks that use patches of different shapes; and 4) adaptive attacks.
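The detection idea (transplant the suspicious region onto other images and check whether the prediction follows it) can be sketched in a few lines of Python. This is a toy illustration rather than Jujutsu's implementation: images are plain nested lists, the suspicious region `box` is assumed to be given (in the paper it comes from an explainable AI technique), and the function names, the hold-out set, and the `threshold` parameter are hypothetical.

```python
def transplant(source, target, box):
    """Copy the region `box` = (top, left, height, width) from source onto target."""
    top, left, height, width = box
    out = [row[:] for row in target]  # leave the target image untouched
    for r in range(top, top + height):
        for c in range(left, left + width):
            out[r][c] = source[r][c]
    return out


def is_adversarial(classify, image, box, holdout, threshold=0.5):
    """Flag `image` if its suspicious region drags other images to its label."""
    label = classify(image)
    # Only hold-out images that are normally classified differently are informative.
    candidates = [other for other in holdout if classify(other) != label]
    if not candidates:
        return False
    hits = sum(classify(transplant(image, other, box)) == label
               for other in candidates)
    return hits / len(candidates) >= threshold
```

A benign region rarely changes the label of an unrelated image, while a universal patch is expected to do so consistently, which is why agreement across the hold-out images is used as the signal here.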
