Prerit Terway

Fast Design Space Exploration of Nonlinear Systems: Part II

Apr 08, 2021

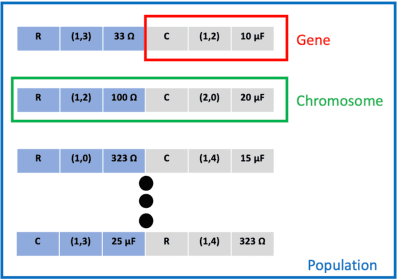

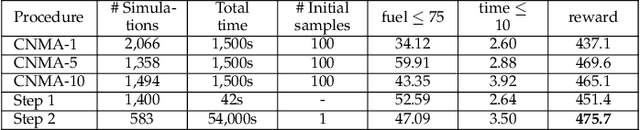

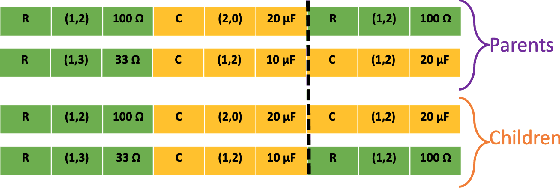

Abstract: Nonlinear system design is often a multi-objective optimization problem involving a search for a design that satisfies a number of predefined constraints. The design space is typically very large since it includes all possible system architectures with different combinations of components composing each architecture. In this article, we address nonlinear system design space exploration through a two-step approach encapsulated in a framework called Fast Design Space Exploration of Nonlinear Systems (ASSENT). In the first step, we use a genetic algorithm to search either over system architectures with discrete choices for component values, or over component values alone for a fixed architecture. This step yields a coarse design since the system may or may not meet the target specifications. In the second step, we use an inverse design to search over a continuous space and fine-tune the component values with the goal of improving the value of the objective function. We use a neural network to model the system response. The neural network is converted into a mixed-integer linear program for active learning to sample component values efficiently. We illustrate the efficacy of ASSENT on problems ranging from nonlinear system design to design of electrical circuits. Experimental results show that ASSENT matches or improves on the objective function value obtained by various other optimization techniques for nonlinear system design, with improvements of up to 54%. We improve sample efficiency by 6-10x compared to reinforcement learning based synthesis of electrical circuits.
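The first step described in this abstract, a genetic algorithm over discrete component choices for a fixed architecture, can be sketched roughly as follows. This is a minimal illustration, not the ASSENT implementation: the function name `genetic_search`, the selection and crossover scheme, the hyperparameters, and the toy objective are all assumptions made for the example.

```python
import random

def genetic_search(choices, objective, pop_size=20, generations=30,
                   mutation_rate=0.2, seed=0):
    """Minimize `objective` over designs that pick one value per component.

    `choices[i]` lists the discrete values allowed for component i.
    All names and defaults here are hypothetical, chosen for illustration.
    """
    rng = random.Random(seed)
    # Each individual is a list holding one discrete value per component.
    population = [[rng.choice(opts) for opts in choices]
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=objective)
        parents = population[: pop_size // 2]  # keep the better half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Mutation: occasionally resample a gene from its allowed values.
            child = [rng.choice(opts) if rng.random() < mutation_rate else gene
                     for gene, opts in zip(child, choices)]
            children.append(child)
        population = parents + children
    return min(population, key=objective)

# Toy stand-in for a simulator: squared distance to a made-up target design.
choices = [[1, 2, 5, 10]] * 3

def toy_objective(design):
    return sum((v - t) ** 2 for v, t in zip(design, (5, 2, 10)))

best = genetic_search(choices, toy_objective)
print(best, toy_objective(best))
```

In the setting the abstract describes, the objective would come from a system simulation, and the search could be stopped before the specifications are met, leaving the fine-tuning to the continuous second step.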

TUTOR: Training Neural Networks Using Decision Rules as Model Priors

Oct 13, 2020

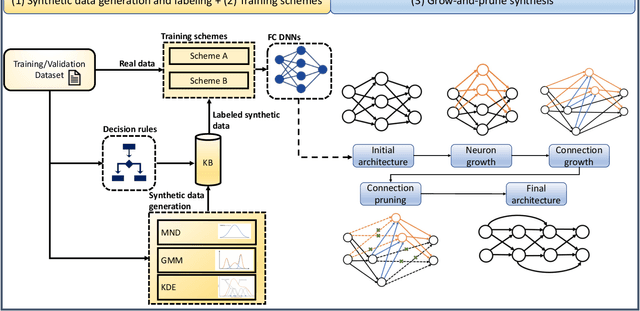

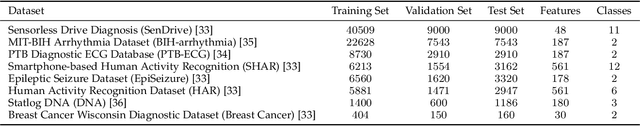

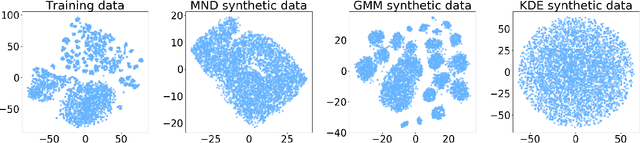

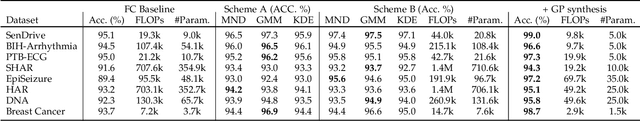

Abstract: The human brain has the ability to carry out new tasks with limited experience. It utilizes prior learning experiences to adapt the solution strategy to new domains. On the other hand, deep neural networks (DNNs) generally need large amounts of data and computational resources for training. However, this requirement is not met in many settings. To address these challenges, we propose the TUTOR DNN synthesis framework. TUTOR targets non-image datasets. It synthesizes accurate DNN models with limited available data and reduced memory and computational requirements. It consists of three sequential steps: (1) drawing synthetic data from the same probability distribution as the training data and labeling the synthetic data based on a set of rules extracted from the real dataset, (2) using two training schemes that combine synthetic data and training data to learn DNN weights, and (3) employing a grow-and-prune synthesis paradigm to learn both the weights and the architecture of the DNN to reduce model size while ensuring its accuracy. We show that, in comparison with fully-connected DNNs, TUTOR on average reduces the need for data by 6.0x (geometric mean), improves accuracy by 3.6%, and reduces the number of parameters (floating-point operations) by 4.7x (4.3x) (geometric mean). Thus, TUTOR is a less data-hungry, accurate, and efficient DNN synthesis framework.
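Step (1) of this abstract, sampling synthetic inputs from a distribution fitted to the real data and labeling them with rules learned from that data, might look roughly like the sketch below. The abstract does not say which density estimator or rule learner TUTOR uses, so both choices here are assumptions: independent per-feature Gaussians stand in for the data distribution, and a single-threshold decision stump stands in for the rule set. The names `fit_stump` and `synthesize` and the tiny dataset are hypothetical.

```python
import random
import statistics

def fit_stump(X, y):
    """Return (feature, threshold, label_below, label_above) with fewest errors.

    A one-rule classifier for binary labels 0/1, used here as a stand-in
    for the rule set extracted from the real data.
    """
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for lo, hi in ((0, 1), (1, 0)):
                errors = sum((lo if row[f] <= t else hi) != label
                             for row, label in zip(X, y))
                if best is None or errors < best[0]:
                    best = (errors, f, t, lo, hi)
    return best[1:]

def synthesize(X, y, n, seed=0):
    """Draw n synthetic rows and label them with the learned rule."""
    rng = random.Random(seed)
    cols = list(zip(*X))
    # Assumed density model: one independent Gaussian per feature.
    mus = [statistics.mean(c) for c in cols]
    sigmas = [statistics.stdev(c) for c in cols]
    f, t, lo, hi = fit_stump(X, y)
    X_syn = [[rng.gauss(m, s) for m, s in zip(mus, sigmas)] for _ in range(n)]
    y_syn = [lo if row[f] <= t else hi for row in X_syn]
    return X_syn, y_syn

# Tiny made-up dataset: feature 0 separates the two classes.
X = [[0.1, 1.0], [0.2, 0.8], [0.9, 1.1], [1.0, 0.9]]
y = [0, 0, 1, 1]
X_syn, y_syn = synthesize(X, y, n=50)
```

The synthetic pairs could then be combined with the real training data when learning the DNN weights, which is what step (2) of the abstract describes.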

DISPATCH: Design Space Exploration of Cyber-Physical Systems

Sep 25, 2020

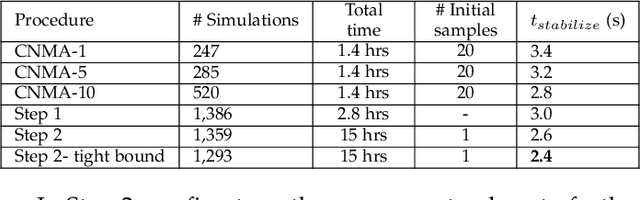

Abstract: Design of cyber-physical systems (CPSs) is a challenging task that involves searching over a large search space of various CPS configurations and possible values of components composing the system. Hence, there is a need for sample-efficient CPS design space exploration to select the system architecture and component values that meet the target system requirements. We address this challenge by formulating CPS design as a multi-objective optimization problem and propose DISPATCH, a two-step methodology for sample-efficient search over the design space. First, we use a genetic algorithm to search over discrete choices of system component values, either for joint architecture search and component selection or for component selection alone. We terminate the algorithm even before the system requirements are met, thus yielding a coarse design. In the second step, we use an inverse design to search over a continuous space to fine-tune the component values and meet the diverse set of system requirements. We use a neural network as a surrogate function for the inverse design of the system. The neural network, converted into a mixed-integer linear program, is used for active learning to sample component values efficiently in a continuous search space. We illustrate the efficacy of DISPATCH on electrical circuit benchmarks: two-stage and three-stage transimpedance amplifiers. Simulation results show that the proposed methodology improves sample efficiency by 5-14x compared to a prior synthesis method that relies on reinforcement learning. It also synthesizes circuits with the best performance (highest bandwidth/lowest area) compared to designs synthesized using reinforcement learning, Bayesian optimization, or humans.
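The abstract does not spell out how the neural network is converted into a mixed-integer linear program. One standard encoding, given here only as an illustration and assuming ReLU activations with known pre-activation bounds $L < 0 < U$ (the symbols $a$, $y$, $z$, $L$, $U$ are introduced for this sketch), represents each neuron $y = \max(0, a)$ with $a = w^\top x + b$ using one binary variable $z$:

$$
\begin{aligned}
y &\ge a, & y &\ge 0, \\
y &\le a - L\,(1 - z), & y &\le U z, \\
z &\in \{0, 1\}.
\end{aligned}
$$

When $z = 1$ the constraints force $y = a$ with $a \ge 0$, and when $z = 0$ they force $y = 0$ with $a \le 0$, so the piecewise-linear network is captured exactly by linear constraints over continuous and binary variables.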
