Pratool Bharti

An Edge Map based Ensemble Solution to Detect Water Level in Stream

Jan 16, 2022

Abstract: Flooding is one of the most dangerous weather events today. Between 2015 and 2019, flooding caused on average more than 130 deaths per year in the USA alone. The devastating nature of floods necessitates continuous monitoring of water levels in rivers and streams to detect incoming floods. In this work, we have designed and implemented an efficient vision-based ensemble solution to continuously detect the water level in a creek. Our solution adapts a template matching algorithm to find the region of interest by leveraging edge maps, and combines two parallel approaches to identify the water level. The first approach fits a linear regression model to the edge map to identify the water line, while the second uses a split sliding window to compute the sum of squared differences in pixel intensities to find the water surface. We evaluated the proposed system on 4,306 images collected between October 3 and December 18, 2019, at a rate of one image every 10 minutes. The system exhibited a low error rate, achieving an MAE of 4.8, a MAPE of 3.1%, and an R² of 0.92. We believe the proposed solution is highly practical: it is pervasive and accurate, does not require installing any additional infrastructure in the water body, and can easily be adapted to other locations.
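
The abstract names two water-line estimators without giving their details. The Python sketch below is one plausible reading, with hypothetical function names (`fit_water_line`, `water_surface_row`): the first fits an ordinary least-squares line y = m*x + b through edge-pixel coordinates, and the second slides a window, split into an upper and a lower half, down one pixel column and picks the row where the sum of squared differences between the two halves is largest. How the paper builds its edge maps, pairs pixels, or combines the two estimates is not stated, so treat this as an assumption-laden illustration.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical helpers; the paper's exact formulation is not given in the abstract.


def fit_water_line(edge_points: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = m * x + b through (x, y) edge pixels."""
    n = len(edge_points)
    sx = sum(x for x, _ in edge_points)
    sy = sum(y for _, y in edge_points)
    sxx = sum(x * x for x, _ in edge_points)
    sxy = sum(x * y for x, y in edge_points)
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("need edge points with at least two distinct x values")
    m = (n * sxy - sx * sy) / denom
    b = (sy - m * sx) / n
    return m, b


def water_surface_row(column: Sequence[float], half: int) -> Optional[int]:
    """Return the row r where a window split at r shows the largest SSD.

    The upper half covers rows [r - half, r) and the lower half rows [r, r + half);
    pixel i of the upper half is compared with pixel i of the lower half.
    Returns None if the column is shorter than one full window.
    """
    best_row: Optional[int] = None
    best_score = -1.0
    for r in range(half, len(column) - half + 1):
        upper = column[r - half:r]
        lower = column[r:r + half]
        score = sum((u - l) ** 2 for u, l in zip(upper, lower))
        if score > best_score:
            best_row, best_score = r, score
    return best_row


if __name__ == "__main__":
    # Edge pixels lying on y = 2x + 1 recover slope 2 and intercept 1.
    print(fit_water_line([(0, 1), (1, 3), (2, 5), (3, 7)]))  # → (2.0, 1.0)
    # Bright bank pixels (200) above darker water pixels (50): boundary at row 10.
    print(water_surface_row([200] * 10 + [50] * 10, half=3))  # → 10
```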

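For reference, the three evaluation metrics quoted above can be computed as in the following minimal Python sketch. It uses the standard definitions (MAE = mean |y - ŷ|, MAPE = 100 * mean |(y - ŷ) / y|, R² = 1 - SS_res / SS_tot); the function names are illustrative, and MAPE assumes no true value is zero.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, in the units of the measurements."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    truth, pred = [10, 20, 30, 40], [12, 18, 33, 40]  # toy values
    print(round(mae(truth, pred), 6), round(mape(truth, pred), 6),
          round(r_squared(truth, pred), 6))  # → 1.75 10.0 0.966
```
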
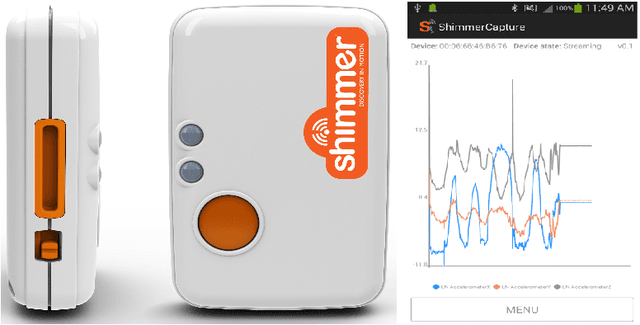

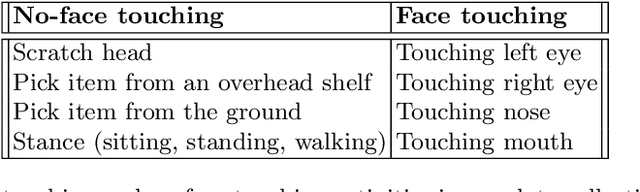

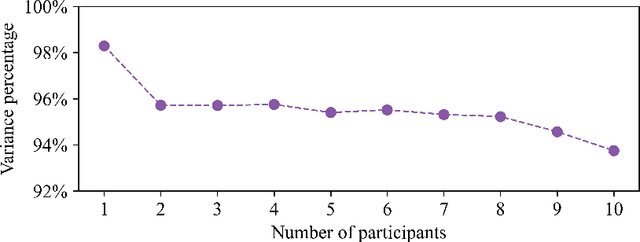

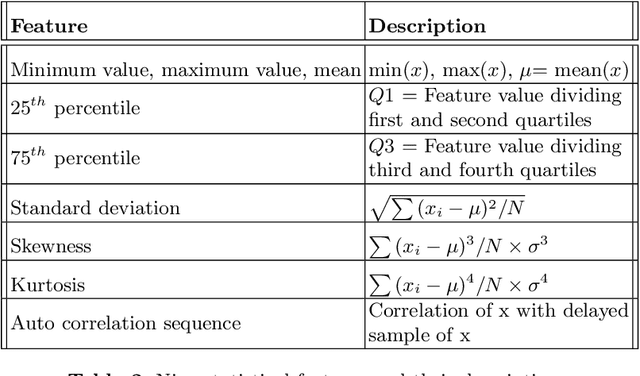

CovidAlert -- A Wristwatch-based System to Alert Users from Face Touching

Nov 30, 2021

Abstract: Worldwide, 219 million people have been infected and 4.5 million have lost their lives in the ongoing Covid-19 pandemic. Until vaccines became widely available, precautions and safety measures such as wearing masks, physical distancing, and avoiding face touching were among the primary means of curbing the spread of the virus. Face touching is a compulsive human behavior that cannot be prevented without continuous conscious effort, and even then it cannot be entirely avoided. To address this problem, we have designed a smartwatch-based solution, CovidAlert, that uses a Random Forest model trained on accelerometer and gyroscope data from the smartwatch to detect hand transitions to the face and send a quick haptic alert to the user. CovidAlert is highly energy efficient because it employs the STA/LTA algorithm as a gatekeeper that curtails use of the Random Forest model on the watch when the user is inactive. The overall accuracy of our system is 88.4%, with low false-negative and false-positive rates. We also demonstrated the system's viability by implementing it on a commercial Fossil Gen 5 smartwatch.
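
STA/LTA (short-term average over long-term average) is a classic event trigger: it compares recent signal energy with a longer background average and fires when the ratio crosses a threshold. Below is a minimal Python sketch of using it as a gatekeeper in front of a classifier, under assumptions not taken from the paper: the input is a single motion-magnitude stream, energy is the squared sample, both averages use trailing windows ending at the current sample, and the window lengths and threshold are placeholders. The function names are hypothetical.

```python
from typing import List, Sequence


def sta_lta_ratios(signal: Sequence[float], sta_len: int, lta_len: int) -> List[float]:
    """STA/LTA ratio for every trailing window of lta_len samples."""
    if not 0 < sta_len <= lta_len:
        raise ValueError("require 0 < sta_len <= lta_len")
    energy = [x * x for x in signal]
    ratios = []
    for end in range(lta_len, len(energy) + 1):  # end is exclusive
        sta = sum(energy[end - sta_len:end]) / sta_len
        lta = sum(energy[end - lta_len:end]) / lta_len
        # The STA window lies inside the LTA window, so lta == 0 implies sta == 0.
        ratios.append(sta / lta if lta > 0 else 0.0)
    return ratios


def should_run_classifier(signal: Sequence[float], sta_len: int = 5,
                          lta_len: int = 50, threshold: float = 3.0) -> bool:
    """Gatekeeper: wake the (more expensive) classifier only on a motion burst."""
    return any(r >= threshold for r in sta_lta_ratios(signal, sta_len, lta_len))


if __name__ == "__main__":
    idle = [0.1] * 60               # steady, low-motion stream
    burst = [0.1] * 50 + [2.0] * 5  # sudden arm movement at the end
    print(should_run_classifier(idle), should_run_classifier(burst))  # → False True
```

Summing each window directly costs O(window) per sample; an on-device version would more likely keep running sums, but the direct form is kept here for clarity.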

An Explainable-AI approach for Diagnosis of COVID-19 using MALDI-ToF Mass Spectrometry

Sep 28, 2021

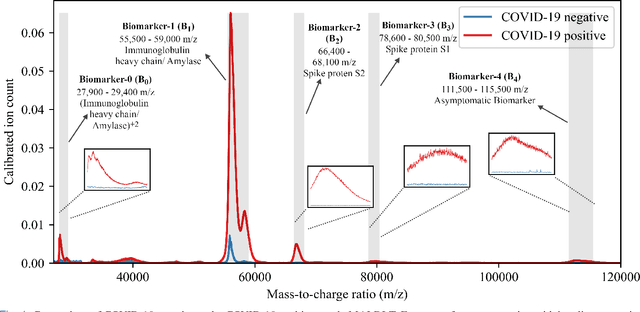

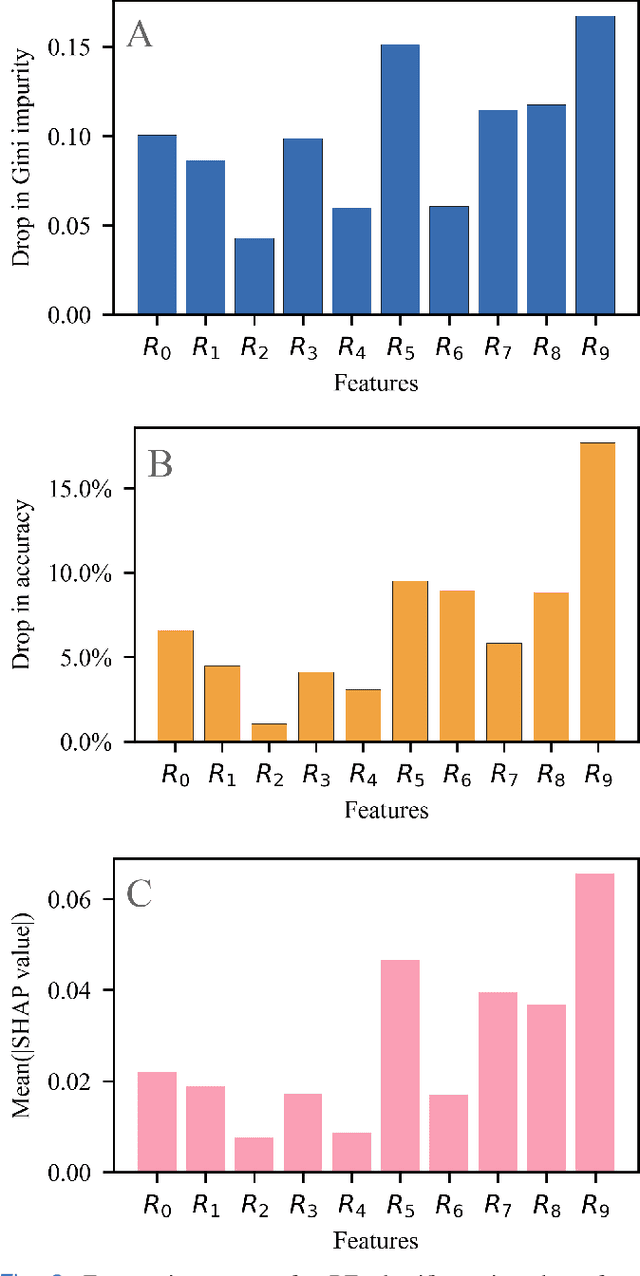

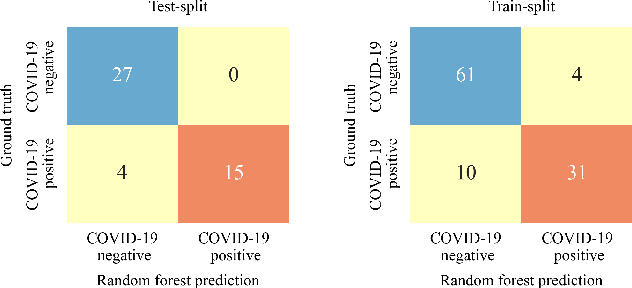

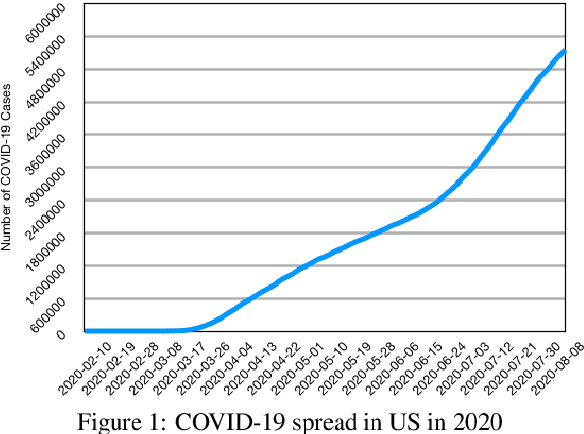

Abstract: The novel severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) caused a global pandemic that has taken more than 4.5 million lives and severely affected the global economy. To curb the spread of the virus, accurate, cost-effective, and rapid testing of large populations is critically important for identifying, isolating, and treating infected people. Current testing methods commonly rely on PCR (polymerase chain reaction) based equipment, which is limited in throughput, cost-effectiveness, and procedural simplicity. This creates a compelling need for additional coronavirus disease 2019 (COVID-19) testing mechanisms that are highly sensitive, rapid, trustworthy, and convenient for the public to use. We propose a COVID-19 testing method that applies artificial intelligence (AI) techniques to MALDI-ToF (matrix-assisted laser desorption/ionization time-of-flight) data extracted from 152 human gargle samples (60 COVID-19 positive and 92 COVID-19 negative). Our approach leverages explainable-AI (X-AI) methods to explain the decision rules behind the predictive algorithm on both a local (per-sample) and a global (all-samples) basis, making the AI model more trustworthy. Finally, we evaluated the proposed method using a 70%-30% train-test split and achieved a training accuracy of 86.79% and a testing accuracy of 91.30%.
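
The abstract reports a 70%-30% train-test split but not how the split was drawn. As an illustration only, the Python sketch below performs a stratified split (each class divided separately, rounding the test share up) on 60 positive and 92 negative labels; stratification, the rounding rule, and the seed are assumptions, and `stratified_split` is a hypothetical helper.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int], test_frac: float = 0.3,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices into train/test, keeping each class's proportion."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train: List[int] = []
    test: List[int] = []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        n_test = math.ceil(test_frac * len(idxs))  # round the test share up
        test.extend(idxs[:n_test])
        train.extend(idxs[n_test:])
    return sorted(train), sorted(test)


if __name__ == "__main__":
    labels = [1] * 60 + [0] * 92  # 1 = COVID-19 positive, 0 = negative
    train, test = stratified_split(labels)
    print(len(train), len(test))  # → 106 46
    print(sum(labels[i] for i in test))  # positives in the test set → 18
```

Under these assumed rounding rules the test set holds 46 samples, so a testing accuracy of 91.30% would correspond to 42 correct predictions (42/46 ≈ 0.9130), and a training accuracy of 86.79% to 92 of 106 (≈ 0.8679).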

Assessing COVID-19 Impacts on College Students via Automated Processing of Free-form Text

Dec 17, 2020

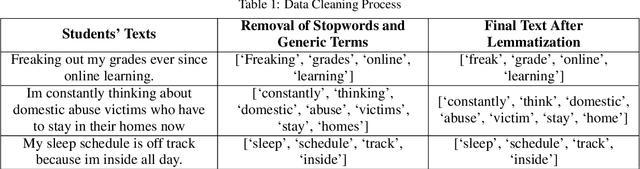

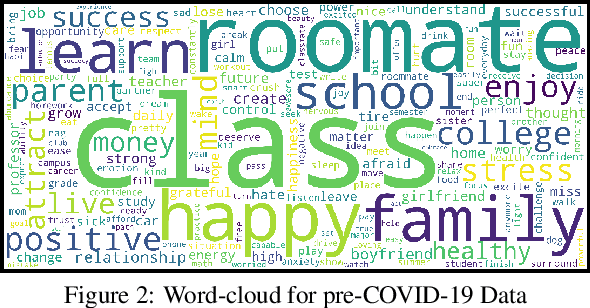

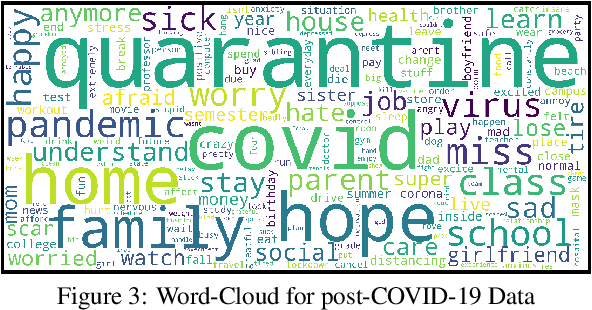

Abstract: In this paper, we report experimental results on assessing the impact of COVID-19 on college students by processing free-form texts that they generated. By free-form texts, we mean textual entries posted by college students (enrolled in a four-year US college) via an app specifically designed to assess and improve their mental health. Using a dataset of more than 9,000 textual entries from 1,451 students collected over four months (split between pre- and post-COVID-19) and established NLP techniques, we (a) assess how the topics of most interest to students change between pre- and post-COVID-19, and (b) assess the sentiments that students exhibit in each topic before and after COVID-19. Our analysis reveals that topics like Education became noticeably less important to students post-COVID-19, while Health trended much more strongly. We also found that, across all topics, negative sentiment among students was much higher post-COVID-19 than pre-COVID-19. We expect our study to have an impact on policy-makers in higher education across several spectra, including college administrators, teachers, parents, and mental health counselors.
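
The abstract does not name the specific NLP models used. Assuming each entry has already been tagged with a period, a topic, and a sentiment label by some upstream topic model and sentiment classifier (both hypothetical here), comparisons (a) and (b) reduce to simple counting, as in this Python sketch. The function name and label strings are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Entry = Tuple[str, str, str]  # (period, topic, sentiment); labels are assumed


def topic_sentiment_summary(
    entries: Iterable[Entry],
) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Per (period, topic): the topic's share of that period's entries and its negative fraction."""
    period_totals: Counter = Counter()
    topic_counts: Counter = Counter()
    negative_counts: Counter = Counter()
    for period, topic, sentiment in entries:
        period_totals[period] += 1
        topic_counts[(period, topic)] += 1
        if sentiment == "negative":
            negative_counts[(period, topic)] += 1
    return {
        key: {
            "share": n / period_totals[key[0]],    # (a) topic prominence in the period
            "negative": negative_counts[key] / n,  # (b) negative sentiment within the topic
        }
        for key, n in topic_counts.items()
    }


if __name__ == "__main__":
    toy = [  # toy labels, not data from the study
        ("pre", "Education", "positive"), ("pre", "Education", "negative"),
        ("pre", "Health", "positive"), ("pre", "Health", "neutral"),
        ("post", "Education", "negative"), ("post", "Health", "negative"),
        ("post", "Health", "negative"), ("post", "Health", "positive"),
    ]
    for key, stats in sorted(topic_sentiment_summary(toy).items()):
        print(key, stats)
```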

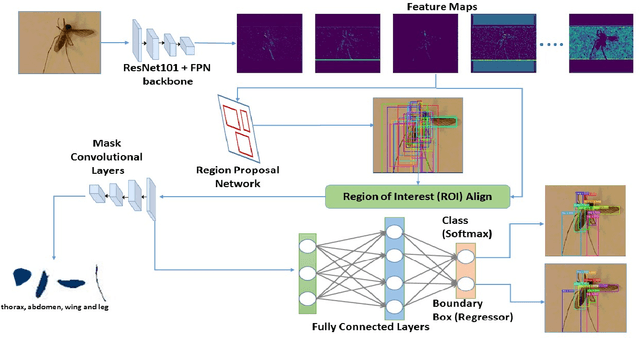

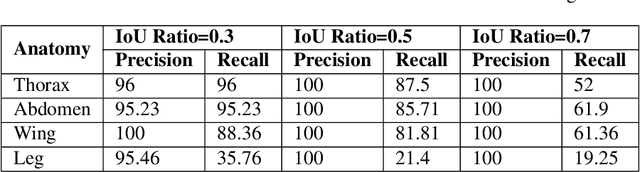

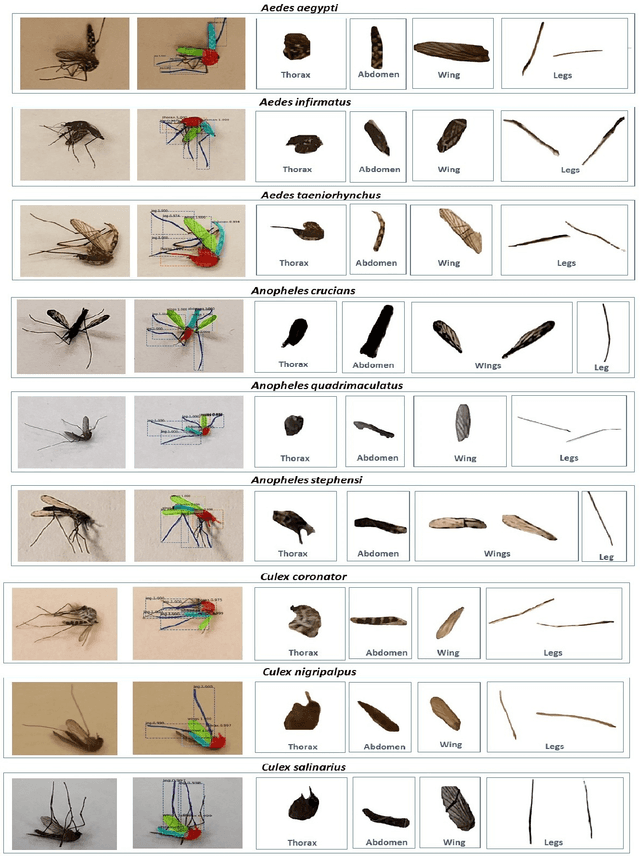

A Framework based on Deep Neural Networks to Extract Anatomy of Mosquitoes from Images

Jul 29, 2020

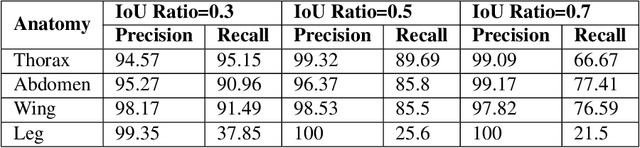

Abstract: We design a framework based on Mask Region-based Convolutional Neural Network (Mask R-CNN) to automatically detect and separately extract the anatomical components of mosquitoes (thorax, wings, abdomen, and legs) from images. Our training dataset consisted of 1,500 smartphone images of nine mosquito species trapped in Florida. In the proposed technique, the first step is to detect anatomical components within a mosquito image. Then, we localize and classify the extracted anatomical components, while simultaneously adding a branch to the neural network architecture to segment the pixels containing only the anatomical components. Evaluation results are favorable. To evaluate generality, we test our architecture, trained only on mosquito images, on bumblebee images. The results are again favorable, particularly for extracting wings. The techniques in this paper have practical applications in public health, taxonomy, and citizen-science efforts.
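
Once a Mask R-CNN-style model has produced a binary mask for an anatomical component, separating that component from the image amounts to cropping to the mask's bounding box and blanking the remaining pixels. The Python sketch below shows only that post-processing step, on plain nested lists; it does not reproduce the network itself, and `extract_component` is a hypothetical name.

```python
from typing import List, Sequence


def extract_component(image: Sequence[Sequence[int]],
                      mask: Sequence[Sequence[bool]],
                      background: int = 0) -> List[List[int]]:
    """Crop image to the bounding box of mask, setting non-mask pixels to background."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return []  # the component was not detected
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [
        [image[r][c] if mask[r][c] else background for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]


if __name__ == "__main__":
    image = [[1, 2, 3, 4],
             [5, 6, 7, 8],
             [9, 10, 11, 12]]
    wing_mask = [[False, False, False, False],  # toy mask for one component
                 [False, True, True, False],
                 [False, False, True, False]]
    print(extract_component(image, wing_mask))  # → [[6, 7], [0, 11]]
```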

CNN-based Speed Detection Algorithm for Walking and Running using Wrist-worn Wearable Sensors

Jun 03, 2020

Abstract: In recent years, there has been a surge in ubiquitous technologies such as smartwatches and fitness trackers that can effortlessly track human physical activity. These devices have enabled ordinary citizens to track their physical fitness and have encouraged them to lead a healthy lifestyle. Among various exercises, walking and running are the most common in everyday life, whether during commuting, exercise, or household chores. If done at the right intensity, walking and running are sufficient to help individuals reach their fitness and weight-loss goals. It is therefore important to measure walking/running speed, both to estimate calories burned and to reduce the risk of soreness, injury, and burnout. Existing wearable technologies use a GPS sensor to measure speed, which is highly energy-inefficient and does not work well indoors. In this paper, we design, implement, and evaluate a convolutional neural network based algorithm that leverages accelerometer and gyroscope data from a wrist-worn device to detect speed with high precision. Data from 15 participants were collected while they walked/ran at different speeds on a treadmill. Our speed detection algorithm achieved a MAPE (Mean Absolute Percentage Error) of 4.2% and 9.8% using a 70-15-15 train-test-evaluation split and leave-one-out cross-validation, respectively.
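
With 15 participants, the "leave-one-out cross-validation" above is read here as leave-one-participant-out, so that no participant's data appears in both the training and test sets of a fold; that reading is an assumption. A Python sketch of generating such folds, with a hypothetical `(participant_id, sensor_window, speed)` record format:

```python
from collections import defaultdict
from typing import Any, Dict, Hashable, Iterator, List, Sequence, Tuple

Record = Tuple[Hashable, Any, float]  # (participant_id, sensor window, speed); hypothetical


def leave_one_participant_out(
    records: Sequence[Record],
) -> Iterator[Tuple[Hashable, List[Record], List[Record]]]:
    """Yield (held_out_id, train, test), holding out one participant per fold."""
    by_participant: Dict[Hashable, List[Record]] = defaultdict(list)
    for rec in records:
        by_participant[rec[0]].append(rec)
    ids = list(by_participant)  # first-seen order
    for held_out in ids:
        train = [rec for pid in ids if pid != held_out for rec in by_participant[pid]]
        yield held_out, train, by_participant[held_out]


if __name__ == "__main__":
    records = [(pid, f"window-{i}", 5.0 + pid) for pid in range(3) for i in range(2)]
    for held_out, train, test in leave_one_participant_out(records):
        print(held_out, len(train), len(test))  # each fold: 4 train, 2 test
```

A per-fold MAPE could then be computed on each held-out participant and averaged across folds.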

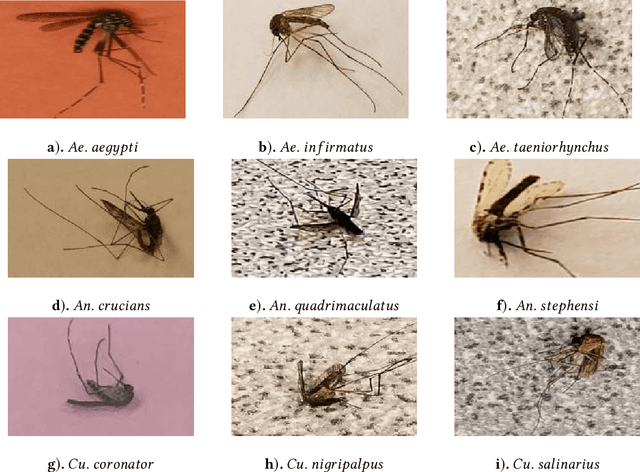

Automating the Surveillance of Mosquito Vectors from Trapped Specimens Using Computer Vision Techniques

May 25, 2020

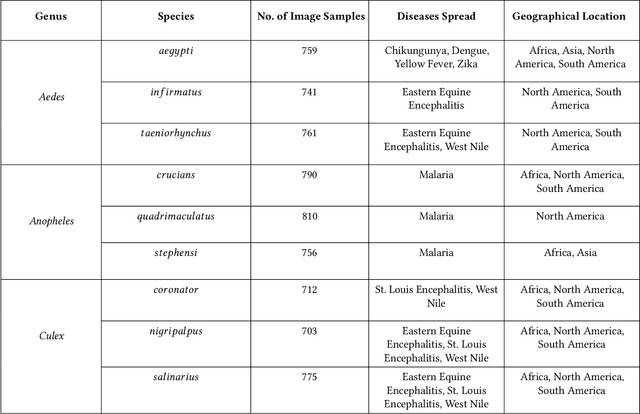

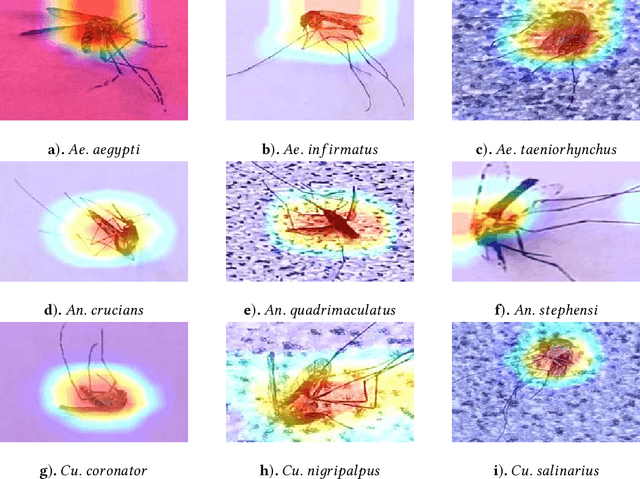

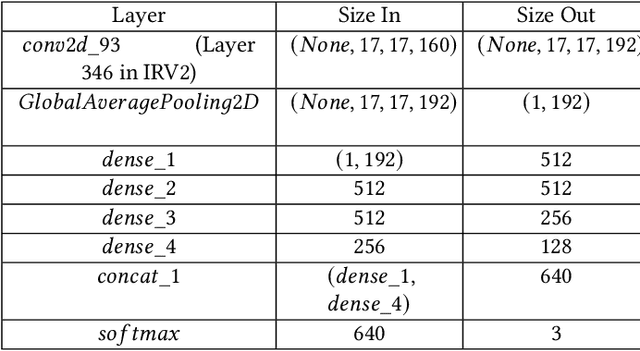

Abstract: Among all animals, mosquitoes are responsible for the most deaths worldwide. Interestingly, not all types of mosquitoes spread diseases; only a select few are competent to do so. In any disease outbreak, an important first step is surveillance of vectors (i.e., those mosquitoes capable of spreading diseases). To do this today, public health workers lay several mosquito traps in the area of interest, and hundreds of mosquitoes get trapped. From among these hundreds, taxonomists must identify only the vectors to gauge their density. This process is currently manual, requires complex expertise/training, and is based on visual inspection of each trapped specimen under a microscope. It is slow, stressful, and self-limiting. This paper presents an innovative solution to this problem. Our technique assumes the presence of an embedded camera (similar to those in smartphones) that can take pictures of trapped mosquitoes. The techniques proposed here then process these images to automatically classify the genus and species. Our CNN model based on Inception-ResNet V2 and transfer learning yielded an overall accuracy of 80% in classifying mosquitoes when trained on 25,867 images of 250 trapped mosquito vector specimens captured via many smartphone cameras. In particular, our model's accuracy in classifying Aedes aegypti and Anopheles stephensi mosquitoes (both deadly vectors) is among the highest. We present important lessons learned and the practical impact of our techniques toward the end of the paper.
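
An overall accuracy figure averages over all classes, so individual species can score well above or below it. A minimal Python sketch of computing both the overall accuracy and the per-species accuracy from true and predicted labels; `accuracy_report` is a hypothetical helper, and the labels in the example are toy values, not results from the paper.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def accuracy_report(y_true: Sequence[Hashable],
                    y_pred: Sequence[Hashable]) -> Tuple[float, Dict[Hashable, float]]:
    """Return overall accuracy and per-class accuracy (recall) for each true class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two equal-length, non-empty label sequences")
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    overall = sum(correct.values()) / len(y_true)
    per_class = {label: correct[label] / n for label, n in totals.items()}
    return overall, per_class


if __name__ == "__main__":
    A, S, O = "Aedes aegypti", "Anopheles stephensi", "other"
    y_true = [A] * 4 + [S] * 4 + [O] * 2
    y_pred = [A] * 4 + [S] * 3 + [A] + [A] * 2
    overall, per_class = accuracy_report(y_true, y_pred)
    print(overall, per_class)
    # → 0.7 {'Aedes aegypti': 1.0, 'Anopheles stephensi': 0.75, 'other': 0.0}
```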
