Pragya Gupta

Department of Mathematics, Indian Institute of Technology Kharagpur

Inter Observer Variability Assessment through Ordered Weighted Belief Divergence Measure in MAGDM Application to the Ensemble Classifier Feature Fusion

Sep 13, 2024

Abstract: Many multi-attribute group decision-making (MAGDM) methods have been introduced to obtain consensus results. However, most of these methodologies ignore the conflict among the experts' opinions and consider only equal or variable priorities for them. This study therefore proposes an Evidential MAGDM method that assesses the inter-observational variability and handles the uncertainty that emerges between the experts. The proposed framework makes four contributions. First, a basic probability assignment (BPA) generation method is introduced to consider the inherent characteristics of each alternative by computing the degree of belief. Second, an ordered weighted belief and plausibility measure is constructed to capture the overall intrinsic information of each alternative by assessing the inter-observational variability and addressing the conflicts emerging among the group of experts. Third, an ordered weighted belief divergence measure is constructed to acquire the weighted support for each group of experts and obtain the final preference relationship. Finally, an illustrative example of the proposed Evidential MAGDM framework is presented. Further, we analyze the interpretation of Evidential MAGDM in a real-world application: ensemble classifier feature fusion for diagnosing retinal disorders using optical coherence tomography images.
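For readers unfamiliar with the evidential terms in this abstract, the following minimal sketch shows how the standard Dempster-Shafer belief and plausibility of a hypothesis are computed from a BPA. It is not the paper's ordered weighted measure or its BPA generation method; the function names, the alternative labels `a1` and `a2`, and the mass values are all hypothetical and chosen only for illustration.

```python
from typing import Dict, FrozenSet

# A BPA maps each focal element (a subset of the frame) to its mass.
Mass = Dict[FrozenSet[str], float]


def belief(mass: Mass, hypothesis: FrozenSet[str]) -> float:
    """Sum the masses of all non-empty focal elements contained in the hypothesis."""
    return sum(m for focal, m in mass.items() if focal and focal <= hypothesis)


def plausibility(mass: Mass, hypothesis: FrozenSet[str]) -> float:
    """Sum the masses of all focal elements that intersect the hypothesis."""
    return sum(m for focal, m in mass.items() if focal & hypothesis)


# Hypothetical BPA over two alternatives (illustrative values, masses sum to 1).
bpa: Mass = {
    frozenset({"a1"}): 0.5,
    frozenset({"a2"}): 0.2,
    frozenset({"a1", "a2"}): 0.3,  # mass left on the whole frame (ignorance)
}

h = frozenset({"a1"})
bel = belief(bpa, h)
pl = plausibility(bpa, h)
print(f"Bel(a1) = {bel:.2f}, Pl(a1) = {pl:.2f}")
```

The interval between belief and plausibility reflects the mass that has not been committed to a single alternative, which is the kind of uncertainty the abstract says the framework handles.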

Multiscale Color Guided Attention Ensemble Classifier for Age-Related Macular Degeneration using Concurrent Fundus and Optical Coherence Tomography Images

Sep 01, 2024

Abstract: Automatic diagnosis techniques have evolved to identify age-related macular degeneration (AMD) by employing single-modality fundus images or optical coherence tomography (OCT). Fundus and OCT images are the most crucial imaging modalities used in the clinical setting to classify ocular diseases. Most deep learning-based techniques are built on a single imaging modality, which captures ocular disorders only to a limited extent and disregards the complementary information available in the other modality. This paper proposes a modality-specific multiscale color space embedding integrated with the attention mechanism based on transfer learning for classification (MCGAEc), which can efficiently extract modality-specific information at various scales using distinct color spaces. In this work, we first introduce the modality-specific multiscale color space encoder model, which produces diverse feature representations by integrating distinct color spaces at multiple scales into a unified framework. The features extracted by this encoder module are passed through the attention mechanism to obtain a global feature representation, which is combined with the previously extracted features and passed to a random forest classifier for the classification of AMD. To analyze the performance of the proposed MCGAEc method, a publicly available multi-modality dataset from Project Macula for AMD is used, and the method is compared with existing models.

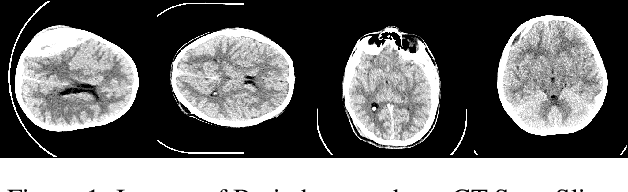

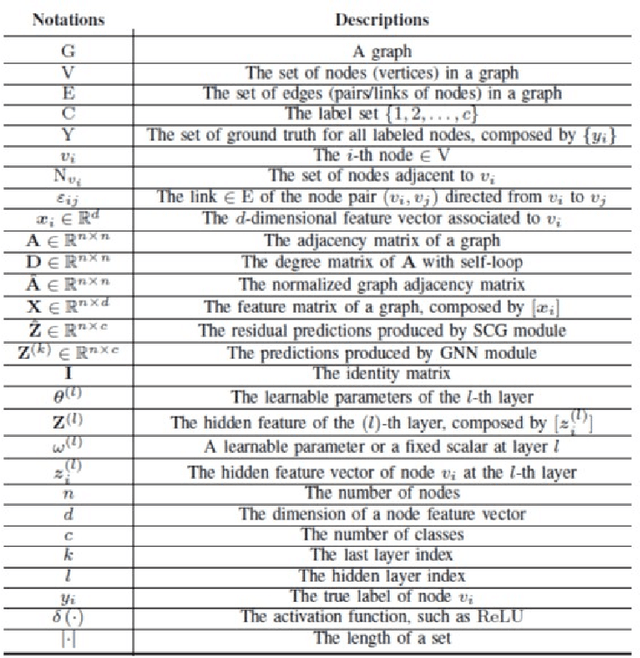

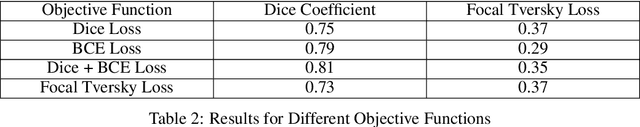

A Graphical Approach For Brain Haemorrhage Segmentation

Feb 14, 2022

Abstract: Haemorrhaging of the brain is the leading cause of death in people between the ages of 15 and 24 and the third leading cause of death in people older than that. Computed tomography (CT) is an imaging modality used to diagnose neurological emergencies, including stroke and traumatic brain injury. Recent advances in deep learning and image processing have used modalities such as CT scans to help automate the detection and segmentation of brain haemorrhages. In this paper, we propose a novel implementation of an architecture that combines traditional Convolutional Neural Networks (CNNs) with Graph Neural Networks (GNNs) to produce a holistic model for the task of brain haemorrhage segmentation. GNNs work on the principle of neighbourhood aggregation and thus provide a reliable estimate of the global structures present in images. GNNs also work with few layers and therefore require fewer parameters. With limited data, our implementation achieved a Dice coefficient of around 0.81.
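The Dice coefficient reported in this abstract measures the overlap between a predicted and a reference segmentation mask. The sketch below uses the standard definition, 2|A ∩ B| / (|A| + |B|), on flattened binary masks; the function name and the toy masks are hypothetical and are not taken from the paper or its data.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice overlap between two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Convention assumed here: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy masks (illustrative only): 1 marks a haemorrhage pixel, 0 is background.
pred_mask = [1, 1, 0, 0, 1]
true_mask = [1, 0, 0, 1, 1]

score = dice_coefficient(pred_mask, true_mask)
print(f"Dice = {score:.3f}")
```

A score of 1 means the two masks coincide exactly, and 0 means they share no foreground pixels.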
