Praboda Rajapaksha

Hate Speech and Offensive Language Detection using an Emotion-aware Shared Encoder

Feb 17, 2023

Abstract: The emergence of social media platforms has fundamentally altered how people communicate, and one result of this development is an increase in abusive content online. Automatically detecting this content is therefore essential for banning inappropriate information and reducing toxicity and violence on social media platforms. Existing work on hate speech and offensive language detection produces promising results with pre-trained transformer models, but it considers only abusive content features derived from annotated datasets. This paper presents a multi-task joint learning approach that incorporates external emotional features extracted from other corpora to cope with the imbalance and scarcity of labeled datasets. Our analysis uses two well-known Transformer-based models, BERT and mBERT, where the latter is used to address abusive content detection in multilingual scenarios. Our model jointly learns abusive content detection and emotional features by sharing representations through the transformer's shared encoder. This approach increases data efficiency, reduces overfitting via shared representations, and ensures fast learning by leveraging auxiliary information. Our findings demonstrate that emotional knowledge helps to identify hate speech and offensive language more reliably across datasets. Our multi-task hate speech detection model exhibited a 3% performance improvement over baseline models, but the improvement from multi-task models was not significant for the offensive language detection task. More interestingly, in both tasks, multi-task models exhibit fewer false positive errors than the single-task scenario.
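
The shared-encoder idea in this abstract can be illustrated with a toy sketch. It is not the paper's implementation: the small tanh layer stands in for BERT/mBERT, and the class name `SharedEncoderModel`, the function `joint_loss`, the `emotion_weight` parameter and the weighted-sum loss are all assumptions made for illustration. Because the emotional features come from a different corpus, each example is assumed to carry a label for only one of the two tasks.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, bias, x):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def init_layer(rng, n_out, n_in):
    """Random weights and zero biases for a dense layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

class SharedEncoderModel:
    """Toy stand-in: one shared encoder feeding two task-specific heads."""

    def __init__(self, n_features, n_hidden, n_abuse, n_emotion, seed=0):
        rng = random.Random(seed)
        self.encoder = init_layer(rng, n_hidden, n_features)    # shared by both tasks
        self.abuse_head = init_layer(rng, n_abuse, n_hidden)     # abusive-content task
        self.emotion_head = init_layer(rng, n_emotion, n_hidden) # auxiliary emotion task

    def encode(self, x):
        return [math.tanh(h) for h in linear(*self.encoder, x)]

    def forward(self, x):
        shared = self.encode(x)  # both heads read the same representation
        return (softmax(linear(*self.abuse_head, shared)),
                softmax(linear(*self.emotion_head, shared)))

def joint_loss(model, x, abuse_label=None, emotion_label=None, emotion_weight=0.5):
    """Cross-entropy summed over whichever task labels the example has.

    The weighted sum is an assumed combination rule, not taken from the paper.
    """
    abuse_probs, emotion_probs = model.forward(x)
    loss = 0.0
    if abuse_label is not None:
        loss += -math.log(abuse_probs[abuse_label])
    if emotion_label is not None:
        loss += emotion_weight * -math.log(emotion_probs[emotion_label])
    return loss

model = SharedEncoderModel(n_features=4, n_hidden=3, n_abuse=2, n_emotion=5)
features = [0.2, -0.1, 0.4, 0.0]
print(joint_loss(model, features, abuse_label=1))    # example from the abuse corpus
print(joint_loss(model, features, emotion_label=3))  # example from the emotion corpus
```

In a real setup, gradients from both losses would update the shared encoder, which is how the auxiliary emotion signal could influence the abusive content classifier.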

BERT-based Ensemble Approaches for Hate Speech Detection

Sep 15, 2022

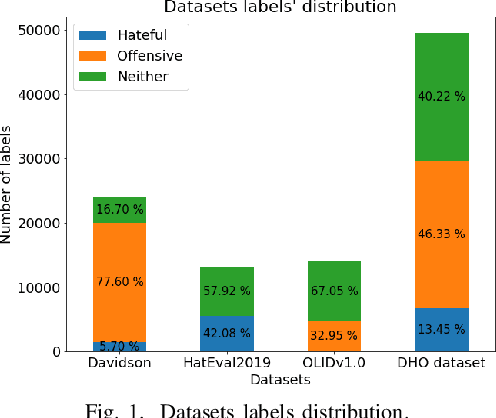

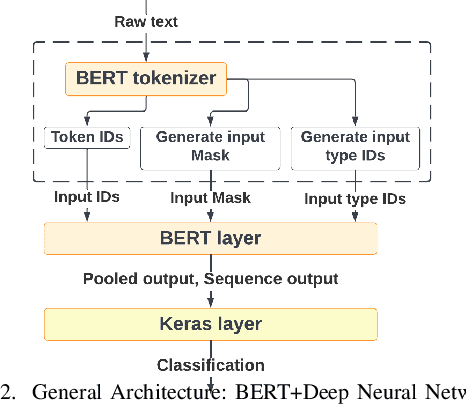

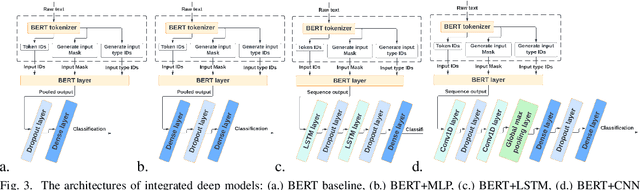

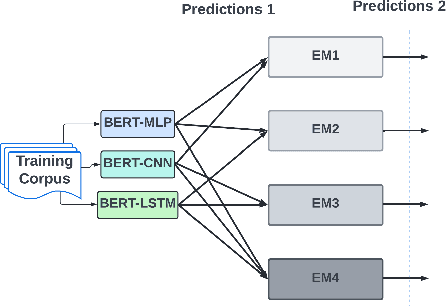

Abstract: With the freedom of communication provided by online social media, hate speech is increasingly generated. This leads to cyber conflicts affecting social life at the individual and national levels. As a result, hateful content classification is increasingly in demand for filtering hate content before it is sent to social networks. This paper focuses on classifying hate speech in social media using multiple deep models that are implemented by integrating recent transformer-based language models, such as BERT, with neural networks. To improve classification performance, we evaluated several ensemble techniques, including soft voting, maximum value, hard voting and stacking. We used three publicly available Twitter datasets (Davidson, HatEval2019, OLID) that were created to identify offensive language. We fused these datasets into a single dataset (the DHO dataset), which is more balanced across labels, to perform multi-label classification. Our experiments were conducted on the Davidson dataset and the DHO corpus. The latter gave the best overall results, especially for the macro F1 score, even though it required more resources (execution time and memory). The experiments showed good results, especially for the ensemble models, where stacking gave an F1 score of 97% on the Davidson dataset and aggregating ensembles gave 77% on the DHO dataset.
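
Three of the ensemble techniques named in this abstract (soft voting, hard voting and maximum value) can be sketched in a few lines. This is a minimal illustration rather than the paper's code: the function names, the tie-breaking rule and the example probabilities are assumptions, and stacking is left out because it needs a trained meta-classifier.

```python
from collections import Counter

def argmax(values):
    """Index of the largest value (first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def soft_vote(prob_lists):
    """Average the class probabilities across models, then pick the top class."""
    n_classes = len(prob_lists[0])
    avg = [sum(p[c] for p in prob_lists) / len(prob_lists)
           for c in range(n_classes)]
    return argmax(avg)

def hard_vote(prob_lists):
    """Each model votes for its top class; the majority wins.

    Ties go to the lowest class index (an assumed tie-breaking rule).
    """
    counts = Counter(argmax(p) for p in prob_lists)
    best = max(counts.values())
    return min(c for c, n in counts.items() if n == best)

def max_value_vote(prob_lists):
    """Pick the class holding the single highest probability from any model."""
    n_classes = len(prob_lists[0])
    peak = [max(p[c] for p in prob_lists) for c in range(n_classes)]
    return argmax(peak)

# Hypothetical outputs of three models for one tweet over three classes.
probs = [
    [0.55, 0.45, 0.00],
    [0.55, 0.45, 0.00],
    [0.05, 0.95, 0.00],
]
print(hard_vote(probs))       # 0: two of three models prefer class 0
print(soft_vote(probs))       # 1: the averaged probability favors class 1
print(max_value_vote(probs))  # 1: the most confident single prediction is class 1
```

The example is chosen so that the rules disagree: hard voting ignores how confident each model is, while soft voting and maximum value let one very confident model outweigh two lukewarm ones.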

Uncovering Flaming Events on News Media in Social Media

Sep 16, 2019

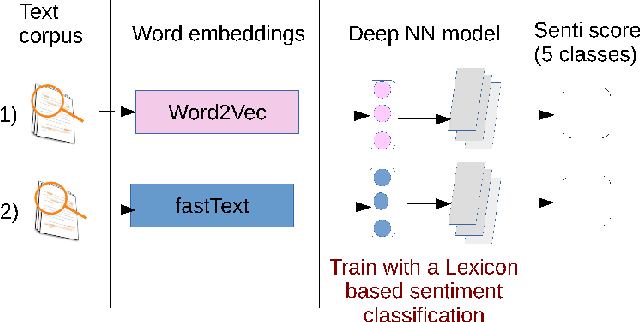

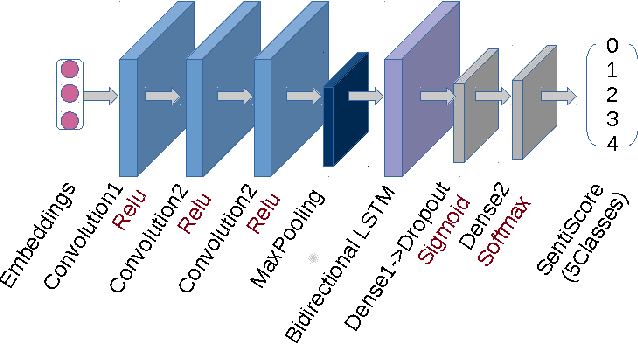

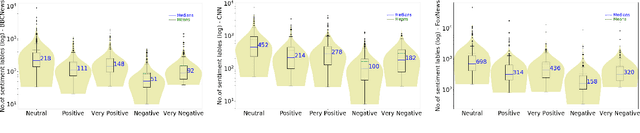

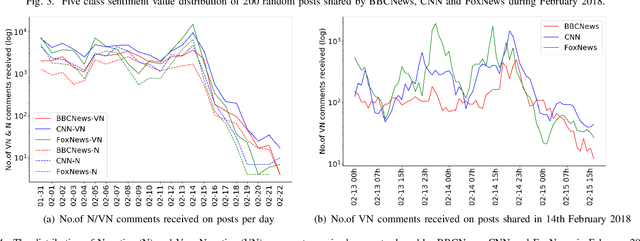

Abstract: Social networking sites (SNSs) facilitate the sharing of ideas and information through different types of feedback, including publishing posts, leaving comments and other types of reactions. However, some comments or feedback on SNSs are inconsiderate and offensive, and sometimes this type of feedback has a very negative effect on a target user. The phenomenon known as flaming goes hand in hand with this type of posting and can be triggered almost instantly on SNSs. Highly popular users such as celebrities, politicians and news media are the major victims of flaming behavior, so detecting these types of events will be useful and appreciated. Flaming events can be monitored and identified by analyzing the negative comments received on a post. Thus, the main objective of this study is to identify a way to detect flaming events on SNSs using a sentiment prediction method. We use a deep Neural Network (NN) model that can identify the sentiment of variable-length sentences and classifies the sentiment of SNS content (both comments and posts) to discover flaming events. Our deep NN model uses Word2Vec and FastText word embedding methods in its training to explore which method is more appropriate. The labeled dataset for training the deep NN is generated using an enhanced lexicon-based approach. Our deep NN model classifies the sentiment of a sentence into five classes: Very Positive, Positive, Neutral, Negative and Very Negative. To detect flaming incidents, we focus only on the comments classified into the Negative and Very Negative classes. As a use case, we explore the flaming phenomenon in the news media domain and therefore focus on news items posted by three popular news media on Facebook (BBCNews, CNN and FoxNews) to train and test the model.
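
The final step described in this abstract, focusing on comments classified as Negative or Very Negative, can be sketched as a simple filter over predicted labels. This is only an illustration under stated assumptions: the abstract does not give a decision rule, so the function names and the `min_share` and `min_comments` thresholds are hypothetical, and the labels are assumed to come from an already trained sentiment classifier.

```python
# The two classes the abstract singles out for flaming detection.
NEGATIVE_CLASSES = {"Negative", "Very Negative"}

def negative_share(comment_labels):
    """Fraction of a post's comments predicted Negative or Very Negative."""
    if not comment_labels:
        return 0.0
    negative = sum(1 for label in comment_labels if label in NEGATIVE_CLASSES)
    return negative / len(comment_labels)

def is_flaming_candidate(comment_labels, min_share=0.5, min_comments=3):
    """Flag a post when enough of its comments are negative.

    Both thresholds are assumed values for illustration, not from the paper.
    """
    return (len(comment_labels) >= min_comments
            and negative_share(comment_labels) >= min_share)

# Hypothetical predicted sentiment labels for the comments on two posts.
calm_post = ["Positive", "Neutral", "Very Positive", "Negative"]
heated_post = ["Very Negative", "Negative", "Neutral", "Negative"]

print(negative_share(calm_post), is_flaming_candidate(calm_post))      # 0.25 False
print(negative_share(heated_post), is_flaming_candidate(heated_post))  # 0.75 True
```

A minimum comment count is included so that a post with a single negative comment is not flagged; whether the study applies any such cutoff is not stated in the abstract.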
