Poppy Collis

Learning in Hybrid Active Inference Models

Sep 02, 2024

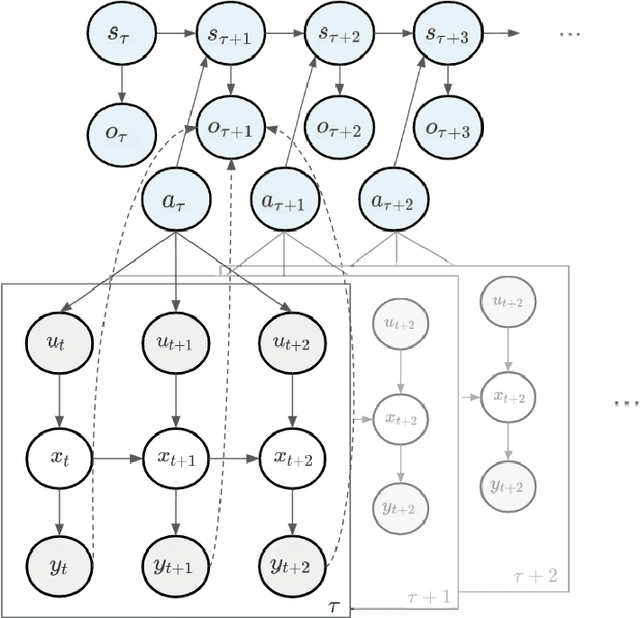

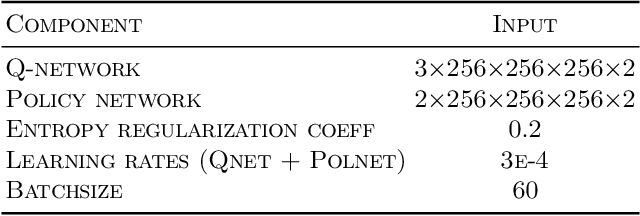

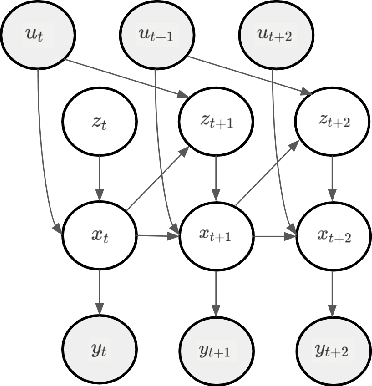

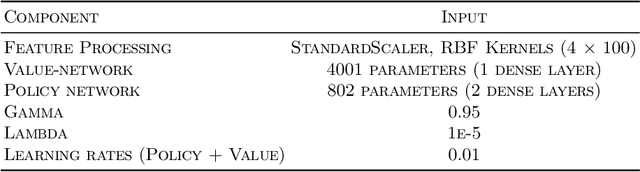

Abstract: An open problem in artificial intelligence is how systems can flexibly learn discrete abstractions that are useful for solving inherently continuous problems. Previous work in computational neuroscience has considered this functional integration of discrete and continuous variables during decision-making under the formalism of active inference (Parr, Friston & de Vries, 2017; Parr & Friston, 2018). However, that work focuses on the expressive physical implementation of categorical decisions and assumes that the hierarchical mixed generative model is known. As a consequence, it is unclear how this framework might be extended to learning. We therefore present a novel hierarchical hybrid active inference agent in which a high-level discrete active inference planner sits above a low-level continuous active inference controller. We make use of recent work in recurrent switching linear dynamical systems (rSLDS), which implement end-to-end learning of meaningful discrete representations via the piecewise linear decomposition of complex continuous dynamics (Linderman et al., 2016). The representations learned by the rSLDS inform the structure of the hybrid decision-making agent and allow us to (1) specify temporally-abstracted sub-goals in a method reminiscent of the options framework, (2) lift the exploration into discrete space, allowing us to exploit information-theoretic exploration bonuses, and (3) 'cache' the approximate solutions to low-level problems in the discrete planner. We apply our model to the sparse Continuous Mountain Car task, demonstrating fast system identification via enhanced exploration and successful planning through the delineation of abstract sub-goals.

Hybrid Recurrent Models Support Emergent Descriptions for Hierarchical Planning and Control

Aug 20, 2024

Abstract: An open problem in artificial intelligence is how systems can flexibly learn discrete abstractions that are useful for solving inherently continuous problems. Previous work has demonstrated that a class of hybrid state-space models known as recurrent switching linear dynamical systems (rSLDS) discovers meaningful behavioural units via the piecewise linear decomposition of complex continuous dynamics (Linderman et al., 2016). Furthermore, these systems model how the underlying continuous states drive the discrete mode switches. We propose that the rich representations formed by an rSLDS can provide useful abstractions for planning and control. We present a novel hierarchical model-based algorithm inspired by Active Inference in which a discrete MDP sits above a low-level linear-quadratic controller. The recurrent transition dynamics learned by the rSLDS allow us to (1) specify temporally-abstracted sub-goals in a method reminiscent of the options framework, (2) lift the exploration into discrete space, allowing us to exploit information-theoretic exploration bonuses, and (3) 'cache' the approximate solutions to low-level problems in the discrete planner. We successfully apply our model to the sparse Continuous Mountain Car task, demonstrating fast system identification via enhanced exploration and non-trivial planning through the delineation of abstract sub-goals.
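To make the rSLDS idea in the abstract above concrete, the following is a minimal sketch of the generative process only, not the authors' implementation and not a learning algorithm. It assumes a softmax over linear functions of the continuous state for the mode switch (the original rSLDS formulation uses a stick-breaking construction), and every name and parameter value here (`simulate_rslds`, `A`, `b`, `R`, `r`) is hypothetical and chosen purely for illustration.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - logits.max()
    e = np.exp(shifted)
    return e / e.sum()


def simulate_rslds(A, b, R, r, x0, steps, noise_std=0.0, seed=0):
    """Sample a trajectory from a toy recurrent switching linear system.

    At each step the discrete mode z is drawn from softmax(R @ x + r), so the
    continuous state drives the mode switch (the "recurrent" part). The state
    then follows that mode's linear dynamics: x <- A[z] @ x + b[z] + noise.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    xs, zs = [x.copy()], []
    for _ in range(steps):
        probs = softmax(R @ x + r)           # mode probabilities given x
        z = rng.choice(len(probs), p=probs)  # sample the discrete mode
        x = A[z] @ x + b[z] + noise_std * rng.standard_normal(x.shape)
        xs.append(x.copy())
        zs.append(int(z))
    return np.array(xs), np.array(zs)


# Hypothetical 1-D example with two modes: mode 0 is favoured when x < 0 and
# pushes the state to the right; mode 1 is favoured when x > 0 and pushes left.
A = np.array([[[0.9]], [[0.9]]])
b = np.array([[1.0], [-1.0]])
R = np.array([[-50.0], [50.0]])
r = np.array([0.0, 0.0])

xs, zs = simulate_rslds(A, b, R, r, x0=[-5.0], steps=20)
```

Here `xs` holds the continuous trajectory and `zs` the sequence of discrete modes; the region of state space in which each mode dominates is the kind of piecewise linear partition the abstract refers to.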

Understanding Tool Discovery and Tool Innovation Using Active Inference

Nov 07, 2023

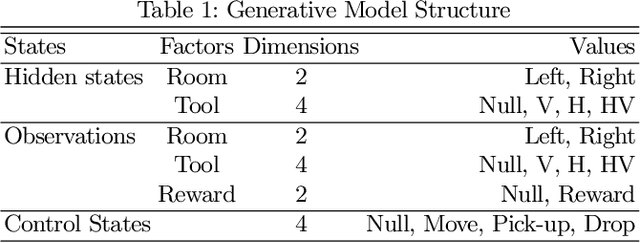

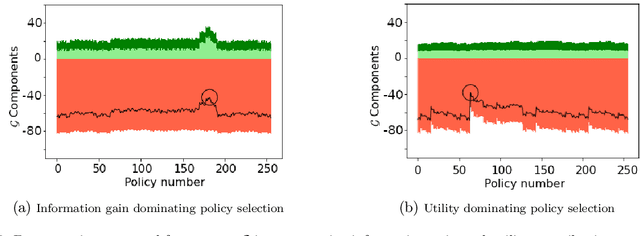

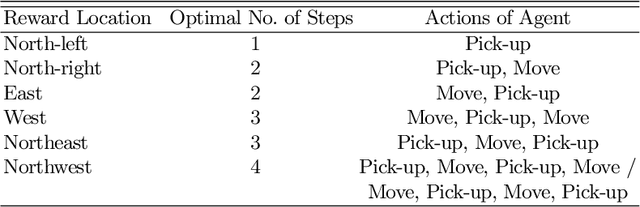

Abstract: The ability to invent new tools has been identified as an important facet of our ability as a species to solve problems in dynamic and novel environments. While the use of tools by artificial agents presents a challenging task and has been widely identified as a key goal in the field of autonomous robotics, far less research has tackled the invention of new tools by agents. In this paper, we (1) articulate the distinction between tool discovery and tool innovation by providing a minimal description of the two concepts under the formalism of active inference. We then (2) apply this description to construct a toy model of tool innovation by introducing the notion of tool affordances into the hidden states of the agent's probabilistic generative model. This particular state factorisation makes it possible not just to discover tools but to invent them through the offline induction of an appropriate tool property. We discuss the implications of these preliminary results and outline future directions of research.
