Pitchaporn Rewatbowornwong

LUSD: Localized Update Score Distillation for Text-Guided Image Editing

Mar 14, 2025

Abstract: While diffusion models show promising results in image editing given a target prompt, achieving both prompt fidelity and background preservation remains difficult. Recent works have introduced score distillation techniques that leverage the rich generative prior of text-to-image diffusion models to solve this task without additional fine-tuning. However, these methods often struggle with tasks such as object insertion. Our investigation of these failures reveals significant variations in gradient magnitude and spatial distribution, making hyperparameter tuning highly input-specific or unsuccessful. To address this, we propose two simple yet effective modifications: attention-based spatial regularization and gradient filtering-normalization, both aimed at reducing these variations during gradient updates. Experimental results show our method outperforms state-of-the-art score distillation techniques in prompt fidelity, improving successful edits while preserving the background. Users also preferred our method over state-of-the-art techniques across three metrics, and by 58-64% overall.

Zero-guidance Segmentation Using Zero Segment Labels

Mar 24, 2023

Abstract: CLIP has enabled new and exciting joint vision-language applications, one of which is open-vocabulary segmentation, which can locate any segment given an arbitrary text query. In our research, we ask whether it is possible to discover semantic segments without any user guidance in the form of text queries or predefined classes, and to label them automatically using natural language. We propose a novel problem, zero-guidance segmentation, and the first baseline that leverages two pre-trained generalist models, DINO and CLIP, to solve this problem without any fine-tuning or segmentation dataset. The general idea is to first segment an image into small over-segments, encode them into CLIP's visual-language space, translate them into text labels, and merge semantically similar segments together. The key challenge, however, is how to encode a visual segment into a segment-specific embedding that balances global and local context information, both useful for recognition. Our main contribution is a novel attention-masking technique that balances the two contexts by analyzing the attention layers inside CLIP. We also introduce several metrics for the evaluation of this new task. With CLIP's innate knowledge, our method can precisely locate the Mona Lisa painting among a museum crowd. Project page: https://zero-guide-seg.github.io/.
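The final step of the pipeline described above, merging semantically similar segments, can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `merge_similar_segments`, the cosine-similarity criterion, and the `threshold` value are assumptions chosen for illustration, and the toy vectors stand in for real CLIP segment embeddings.

```python
import numpy as np

def merge_similar_segments(embeddings, threshold=0.9):
    """Group segments whose embeddings have cosine similarity >= threshold.

    Hypothetical helper: returns one group id per segment, where the id is
    the smallest segment index in that group.
    """
    emb = np.asarray(embeddings, dtype=float)
    # Normalize rows so the dot product equals cosine similarity.
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sim = emb @ emb.T
    n = len(emb)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if sim[i, j] >= threshold:
                ri, rj = find(i), find(j)
                if ri != rj:
                    # Keep the smaller index as the root of the merged group.
                    parent[max(ri, rj)] = min(ri, rj)
    return [find(i) for i in range(n)]

# Toy embeddings: the first two point in nearly the same direction.
groups = merge_similar_segments([[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]])
print(groups)
```

Because merging is transitive here (union-find), two segments can end up in one group through a chain of similar neighbors even if they are not directly above the threshold; a stricter pairwise criterion would be an equally plausible design.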

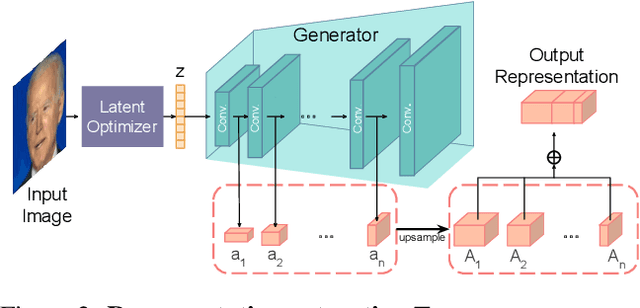

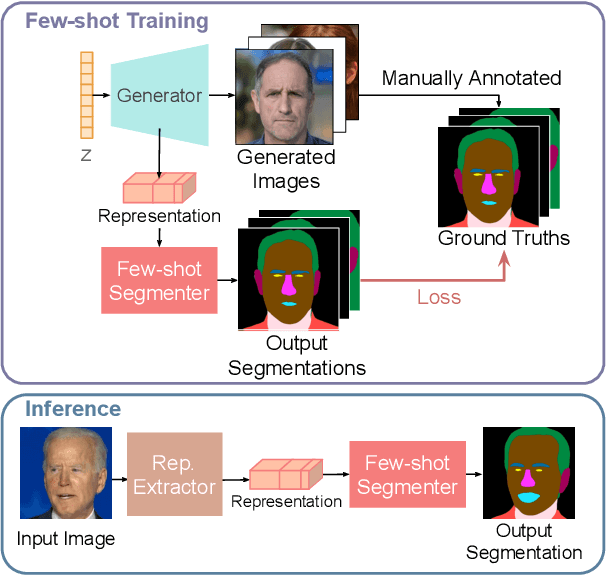

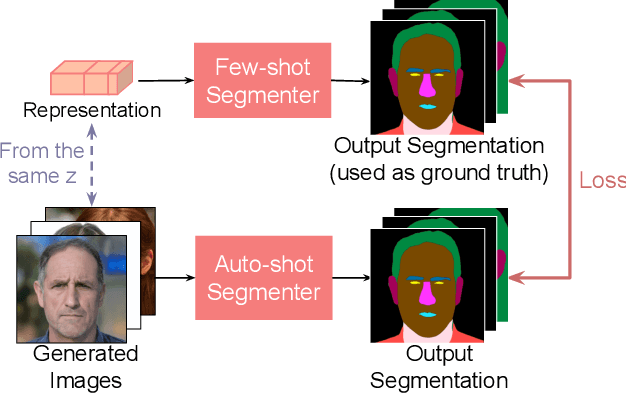

Repurposing GANs for One-shot Semantic Part Segmentation

Mar 24, 2021

Abstract: While GANs have shown success in realistic image generation, the idea of using GANs for other tasks unrelated to synthesis is underexplored. Do GANs learn meaningful structural parts of objects during their attempt to reproduce those objects? In this work, we test this hypothesis and propose a simple and effective approach based on GANs for semantic part segmentation that requires as few as one label example along with an unlabeled dataset. Our key idea is to leverage a trained GAN to extract a pixel-wise representation from the input image and use it as feature vectors for a segmentation network. Our experiments demonstrate that the GAN representation is "readily discriminative" and produces surprisingly good results that are comparable to those from supervised baselines trained with significantly more labels. We believe this novel repurposing of GANs underlies a new class of unsupervised representation learning that is applicable to many other tasks. More results are available at https://repurposegans.github.io/.
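The key idea above, using per-pixel feature vectors to drive segmentation from a single labeled example, can be sketched roughly as follows. Everything here is an assumption for illustration: the feature maps are plain arrays standing in for activations taken from a trained GAN, the nearest-neighbor upsampling and channel concatenation are one plausible way to form a pixel-wise representation, and a nearest-centroid classifier is swapped in for the segmentation network the abstract mentions.

```python
import numpy as np

def pixelwise_features(feature_maps, out_size):
    """Upsample each (C, h, w) map to out_size x out_size and stack channels."""
    upsampled = []
    for fmap in feature_maps:
        _, h, w = fmap.shape
        assert out_size % h == 0 and out_size % w == 0
        # Nearest-neighbor upsampling by repeating rows and columns.
        up = np.repeat(np.repeat(fmap, out_size // h, axis=1), out_size // w, axis=2)
        upsampled.append(up)
    return np.concatenate(upsampled, axis=0)  # shape (sum of C, H, W)

def fit_centroids(features, labels, num_classes):
    """Mean feature vector per class; every class must appear in labels."""
    flat = features.reshape(features.shape[0], -1).T  # (H*W, C)
    lab = labels.reshape(-1)
    return np.stack([flat[lab == k].mean(axis=0) for k in range(num_classes)])

def predict(features, centroids):
    """Assign each pixel to the class with the nearest centroid."""
    c, h, w = features.shape
    flat = features.reshape(c, -1).T
    dist = ((flat[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return dist.argmin(axis=1).reshape(h, w)

# Toy stand-ins for GAN activations at two resolutions.
coarse = np.array([[[0.0, 0.0], [1.0, 1.0]]])   # (1, 2, 2)
fine = np.zeros((2, 4, 4))                       # (2, 4, 4)
feats = pixelwise_features([coarse, fine], out_size=4)

# One labeled example: top half is part 0, bottom half is part 1.
labels = np.array([[0] * 4, [0] * 4, [1] * 4, [1] * 4])
centroids = fit_centroids(feats, labels, num_classes=2)
pred = predict(feats, centroids)
```

In practice the labeled image and the test images would be different; the same array is reused here only to keep the sketch short.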
