Pierre-Etienne Martin

MPI-EVA

Dataset Generation and Bonobo Classification from Weakly Labelled Videos

Sep 07, 2023

Abstract: This paper presents a bonobo detection and classification pipeline built from commonly used machine learning methods. The application is motivated by the need to test bonobos in their enclosure using touch-screen devices without human assistance. This work introduces a newly acquired dataset of bonobo recordings, generated semi-automatically. The recordings are weakly labelled and fed to a macaque detector in order to spatially detect the individual present in the video. Handcrafted features coupled with different classification algorithms, and deep-learning methods using a ResNet architecture, are investigated for bonobo identification. Performance is compared in terms of classification accuracy on the splits of the database using different data separation methods. We demonstrate the importance of data preparation and how a wrong data separation can lead to misleadingly good results. Finally, after a meaningful separation of the data, the best classification performance is obtained using a fine-tuned ResNet model and reaches 75% accuracy.
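To illustrate the point about data separation, here is a minimal sketch of a group-aware split. It assumes (the abstract does not say so) that the misleading results come from samples of the same recording landing in both the training and the test set. The function name `split_by_group` and the `(sample_id, group_id)` layout are hypothetical, not taken from the paper.

```python
import random
from collections import defaultdict


def split_by_group(samples, test_fraction=0.25, seed=0):
    """Split samples so that no group (e.g. one recording) spans both sets.

    samples: list of (sample_id, group_id) pairs. This layout is an
    assumption made for illustration only.
    """
    groups = defaultdict(list)
    for sample_id, group_id in samples:
        groups[group_id].append(sample_id)

    # Shuffle whole groups, not individual samples.
    group_ids = sorted(groups)
    random.Random(seed).shuffle(group_ids)

    n_test = max(1, round(len(group_ids) * test_fraction))
    test_groups = set(group_ids[:n_test])

    train = [s for g in group_ids if g not in test_groups for s in groups[g]]
    test = [s for g in group_ids if g in test_groups for s in groups[g]]
    return train, test, test_groups


# Hypothetical data: 4 recordings with 5 frames each.
samples = [(f"v{v}_f{f}", f"v{v}") for v in range(4) for f in range(5)]
train, test, test_groups = split_by_group(samples)
```

Splitting at the sample level instead would let near-identical neighbouring frames appear on both sides, which tends to inflate the measured accuracy.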

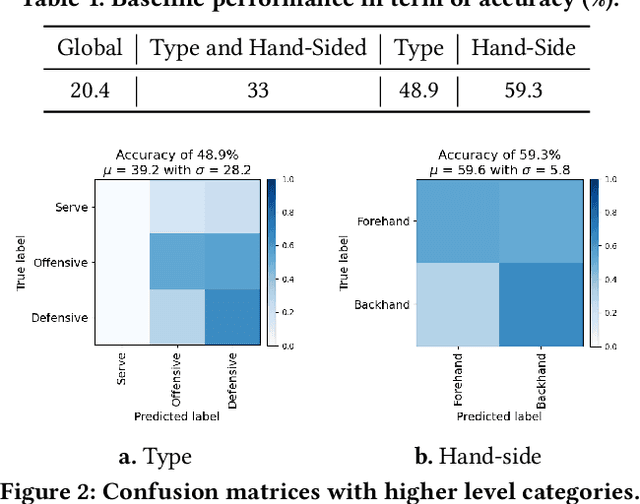

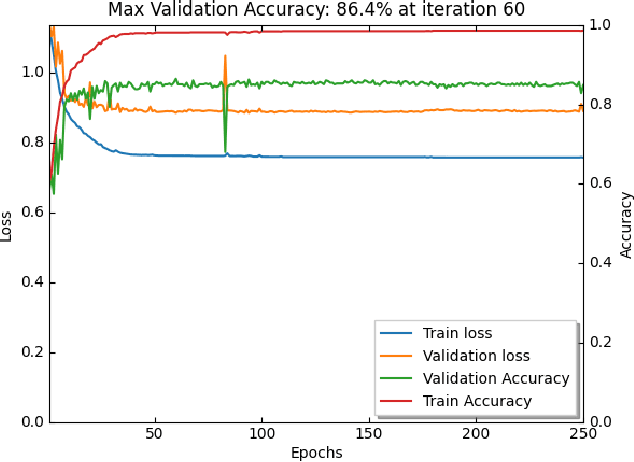

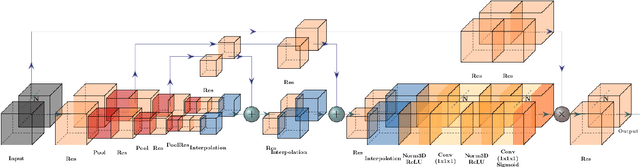

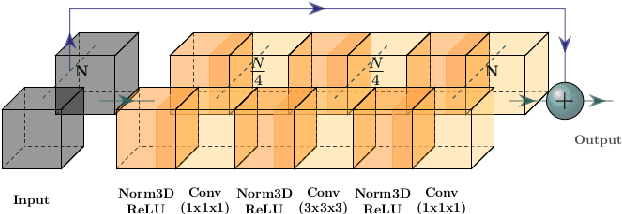

Baseline Method for the Sport Task of MediaEval 2022 with 3D CNNs using Attention Mechanisms

Feb 06, 2023

Abstract: This paper presents the baseline method proposed for the Sports Video task part of the MediaEval 2022 benchmark. This task proposes two subtasks: stroke classification from trimmed videos, and stroke detection from untrimmed videos. This baseline addresses both subtasks. We propose two types of 3D-CNN architectures to solve the two subtasks. Both 3D-CNNs use spatio-temporal convolutions and attention mechanisms. The architectures and the training process are tailored to the addressed subtask. This baseline method is shared publicly online to help the participants in their investigation and to ease some aspects of the task, such as video processing, training method, evaluation and submission routine. The baseline method reaches 86.4% accuracy with our v2 model for the classification subtask. For the detection subtask, the baseline reaches a mAP of 0.131 and an IoU of 0.515 with our v1 model.
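For readers unfamiliar with the IoU figure quoted above, the following is a minimal sketch of a temporal intersection over union between a predicted and an annotated stroke interval. It assumes half-open frame intervals `(start, end)`; the exact definition used by the benchmark evaluation may differ, and `temporal_iou` is a hypothetical helper name.

```python
def temporal_iou(pred, truth):
    """Intersection over union of two half-open frame intervals (start, end)."""
    intersection = max(0, min(pred[1], truth[1]) - max(pred[0], truth[0]))
    union = (pred[1] - pred[0]) + (truth[1] - truth[0]) - intersection
    return intersection / union if union > 0 else 0.0


# A prediction shifted by half the stroke length overlaps on 5 of 15 frames.
score = temporal_iou((0, 10), (5, 15))
```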

Fine-Grained Action Detection with RGB and Pose Information using Two Stream Convolutional Networks

Feb 06, 2023

Abstract: As participants in the MediaEval 2022 Sport Task, we propose a two-stream network approach for the classification and detection of table tennis strokes. Each stream is a succession of 3D Convolutional Neural Network (CNN) blocks using attention mechanisms. Each stream processes different 4D inputs. Our method utilizes raw RGB data and pose information computed with the MMPose toolbox. The pose information is treated as an image by applying the pose either on a black background or on the original RGB frame it has been computed from. The best performance is obtained by feeding raw RGB data to one stream and Pose + RGB (PRGB) information to the other stream, and applying late fusion on the features. The approaches were evaluated on the provided TTStroke-21 data sets. We report an improvement in stroke classification, reaching 87.3% accuracy, while the detection does not outperform the baseline but still reaches an IoU of 0.349 and a mAP of 0.110.

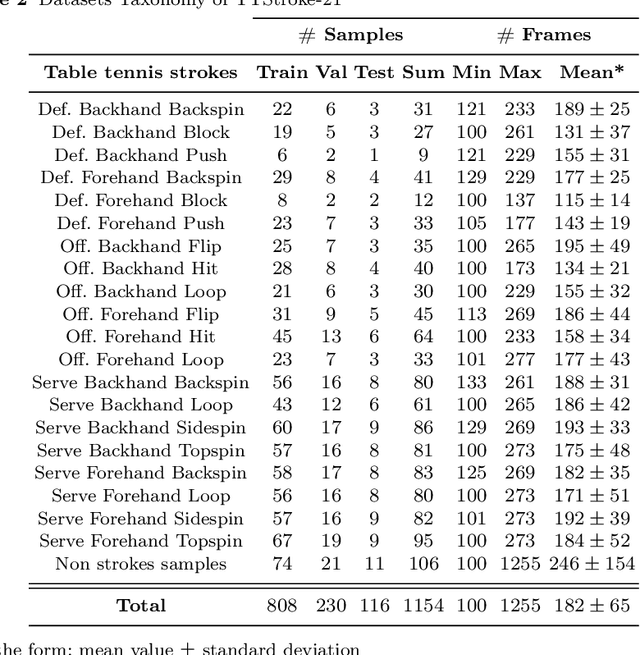

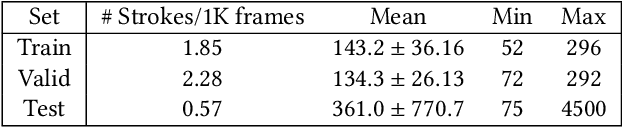

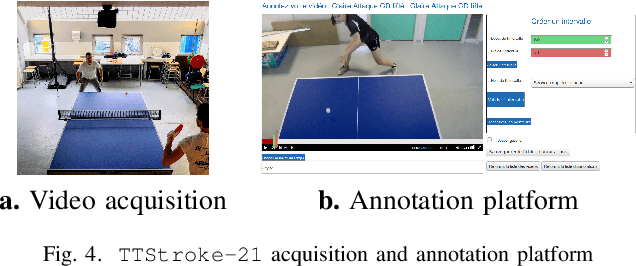

Sport Task: Fine Grained Action Detection and Classification of Table Tennis Strokes from Videos for MediaEval 2022

Jan 31, 2023

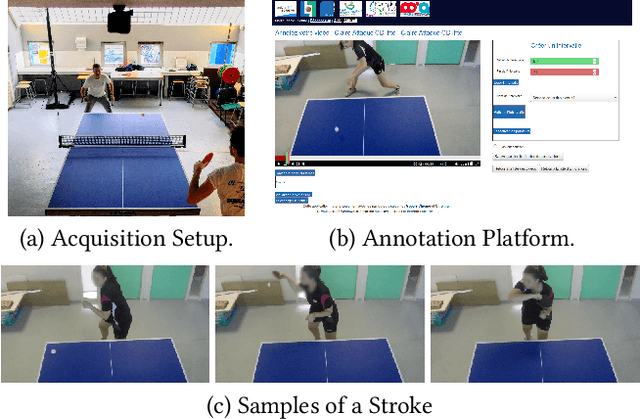

Abstract: Sports video analysis is a widespread research topic. Its applications are very diverse, such as event detection during a match, video summary, or fine-grained movement analysis of athletes. As part of the MediaEval 2022 benchmarking initiative, this task aims at detecting and classifying subtle movements from sport videos. We focus on recordings of table tennis matches. Conducted since 2019, this task provides a classification challenge from untrimmed videos recorded under natural conditions with known temporal boundaries for each stroke. Since 2021, the task also provides a stroke detection challenge from unannotated, untrimmed videos. This year, the training, validation, and test sets are enhanced to ensure that all strokes are represented in each dataset. The dataset is now similar to the one used in [1, 2]. This research is intended to build tools for coaches and athletes who want to further evaluate their sport performances.

3D Convolutional Networks for Action Recognition: Application to Sport Gesture Recognition

Apr 13, 2022

Abstract: 3D convolutional networks are a good means to perform tasks such as segmenting video into coherent spatio-temporal chunks and classifying them with regard to a target taxonomy. In this chapter we are interested in the classification of continuous video takes with repeatable actions, such as table tennis strokes. Filmed in a free, markerless ecological environment, these videos represent a challenge from both the segmentation and the classification points of view. 3D convnets are an efficient tool for solving these problems with window-based approaches.
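A window-based approach can be sketched as follows: the untrimmed video is cut into fixed-length temporal windows, and each window would then be passed to a classifier. The window length, the stride and the helper name `sliding_windows` are assumptions chosen for illustration; the chapter's actual settings are not given in the abstract.

```python
def sliding_windows(num_frames, window, stride):
    """Return half-open (start, end) frame ranges covering a video.

    Windows that would run past the last frame are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [(start, start + window)
            for start in range(0, num_frames - window + 1, stride)]


# Hypothetical example: a 10-frame clip, 4-frame windows, stride of 3.
windows = sliding_windows(num_frames=10, window=4, stride=3)
```

A stride smaller than the window length makes consecutive windows overlap, so one stroke is seen by several windows at the cost of more classifier calls.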

Spatio-Temporal CNN baseline method for the Sports Video Task of MediaEval 2021 benchmark

Dec 16, 2021

Abstract: This paper presents the baseline method proposed for the Sports Video task part of the MediaEval 2021 benchmark. This task proposes a stroke detection subtask and a stroke classification subtask. This baseline addresses both subtasks. The spatio-temporal CNN architecture and the training process of the model are tailored to the addressed subtask. The method is meant to help the participants solve the task and is not meant to reach state-of-the-art performance. Still, for the detection task, the baseline performs better than the other participants, which stresses the difficulty of such a task.

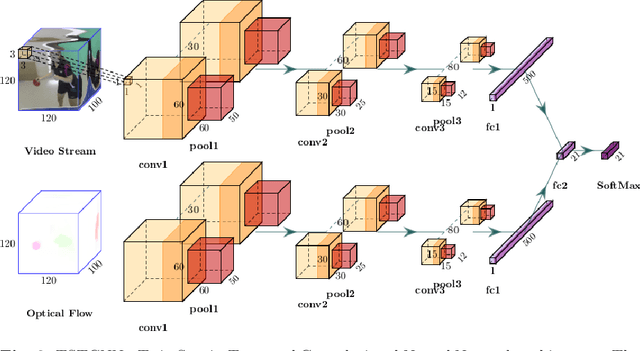

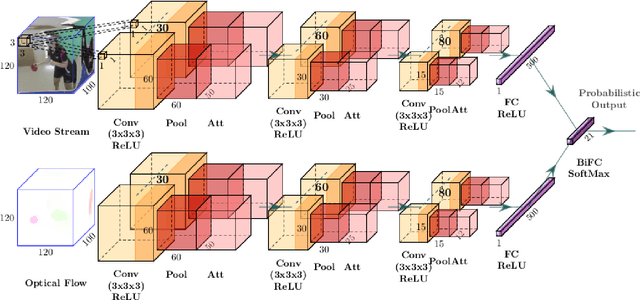

Two Stream Network for Stroke Detection in Table Tennis

Dec 16, 2021

Abstract: This paper presents a table tennis stroke detection method from videos. The method relies on a two-stream Convolutional Neural Network processing the RGB stream and its computed optical flow in parallel. The method has been developed as part of the MediaEval 2021 benchmark for the Sport task. Our contribution did not outperform the provided baseline on the test set but performed best among the participants with regard to the mAP metric.

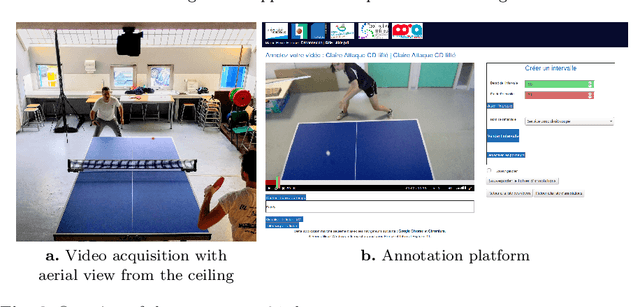

Sports Video: Fine-Grained Action Detection and Classification of Table Tennis Strokes from Videos for MediaEval 2021

Dec 16, 2021

Abstract: Sports video analysis is a prevalent research topic due to the variety of application areas, ranging from multimedia intelligent devices with user-tailored digests up to analysis of athletes' performance. The Sports Video task is part of the MediaEval 2021 benchmark. This task tackles fine-grained action detection and classification from videos. The focus is on recordings of table tennis games. Running since 2019, the task has offered a classification challenge from untrimmed video recorded in natural conditions with known temporal boundaries for each stroke. This year, the dataset is extended and offers, in addition, a detection challenge from untrimmed videos without annotations. This work aims at creating tools for sports coaches and players in order to analyze sports performance. Movement analysis and player profiling may be built upon such technology to enrich the training experience of athletes and improve their performance.

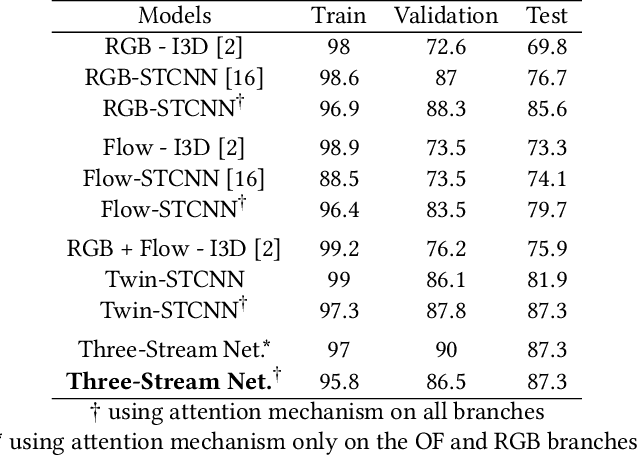

Three-Stream 3D/1D CNN for Fine-Grained Action Classification and Segmentation in Table Tennis

Sep 29, 2021

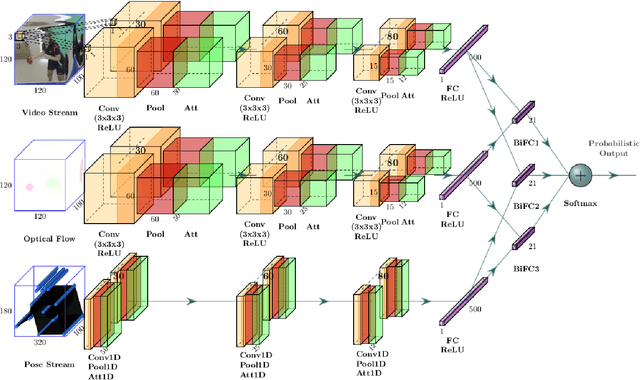

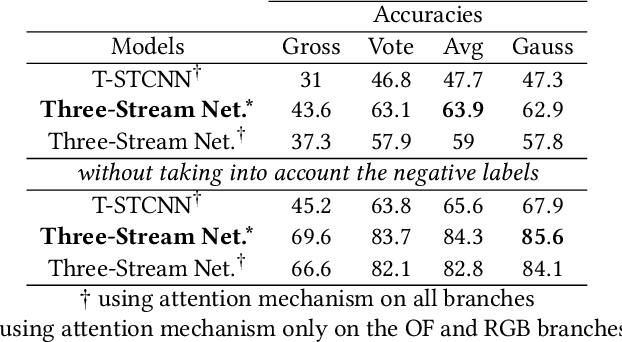

Abstract: This paper proposes a fusion method of modalities extracted from video through a three-stream network with spatio-temporal and temporal convolutions for fine-grained action classification in sport. It is applied to the TTStroke-21 dataset, which consists of untrimmed videos of table tennis games. The goal is to detect and classify table tennis strokes in the videos, the first step of a bigger scheme aiming at giving feedback to the players to improve their performance. The three modalities are raw RGB data, the computed optical flow and the estimated pose of the player. The network consists of three branches with attention blocks. Features are fused at the latest stage of the network using bilinear layers. Compared to previous approaches, the use of three modalities allows faster convergence and better performance on both tasks: classification of strokes with known temporal boundaries, and joint segmentation and classification. The pose is also further investigated in order to offer richer feedback to the athletes.
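As a rough illustration of what a bilinear layer computes, the sketch below fuses two feature vectors with the standard bilinear form, where output k is x1ᵀ · W_k · x2 + b_k. It covers only two inputs and uses plain Python lists; how the paper combines three branches is not described in the abstract, and the function name `bilinear` and the toy weights are assumptions.

```python
def bilinear(x1, x2, weight, bias):
    """Bilinear fusion: out[k] = sum_ij x1[i] * weight[k][i][j] * x2[j] + bias[k]."""
    return [
        sum(x1[i] * w_k[i][j] * x2[j]
            for i in range(len(x1))
            for j in range(len(x2))) + b_k
        for w_k, b_k in zip(weight, bias)
    ]


# Toy example with hypothetical values: one output unit, identity weight matrix.
fused = bilinear([1.0, 2.0], [3.0, 4.0], [[[1.0, 0.0], [0.0, 1.0]]], [0.5])
```

Unlike plain concatenation, every output depends on products of features from both inputs, which lets the layer model interactions between modalities.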

3D attention mechanism for fine-grained classification of table tennis strokes using a Twin Spatio-Temporal Convolutional Neural Networks

Nov 20, 2020

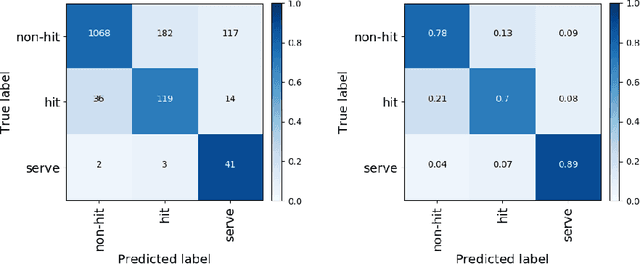

Abstract: The paper addresses the problem of recognizing actions in video with low inter-class variability, such as table tennis strokes. Two-stream "twin" convolutional neural networks are used with 3D convolutions on both RGB data and optical flow. Actions are recognized by classification of temporal windows. We introduce 3D attention modules and examine their impact on classification efficiency. In the context of the study of athletes' performance, a corpus of the particular actions of table tennis strokes is considered. The use of attention blocks in the network speeds up the training step and improves the classification scores by up to 5% with our twin model. We visualize the impact on the obtained features and notice a correlation between attention and player movements and position. We compare the scores of a state-of-the-art action classification method and the proposed approach with attention blocks on the corpus. The proposed model with attention blocks outperforms the previous model without them and our baseline.
