Piero Fraternali

Illegal Waste Detection in Remote Sensing Images: A Case Study

Feb 10, 2025

Abstract: Environmental crime currently represents the third largest criminal activity worldwide while threatening ecosystems as well as human health. Among the crimes related to this activity, improper waste management can nowadays be countered more easily thanks to the increasing availability and decreasing cost of Very-High-Resolution Remote Sensing images, which enable semi-automatic territory scanning in search of illegal landfills. This paper proposes a pipeline, developed in collaboration with professionals from a local environmental agency, for detecting candidate illegal dumping sites leveraging a classifier of Remote Sensing images. To identify the best configuration for such a classifier, an extensive set of experiments was conducted and the impact of diverse image characteristics and training settings was thoroughly analyzed. The local environmental agency was then involved in an experimental exercise where outputs from the developed classifier were integrated into the experts' everyday work, resulting in time savings with respect to manual photo-interpretation. The classifier was eventually run with valuable results on a location outside of the training area, highlighting potential for cross-border applicability of the proposed pipeline.

Time Series Analysis in Compressor-Based Machines: A Survey

Feb 27, 2024

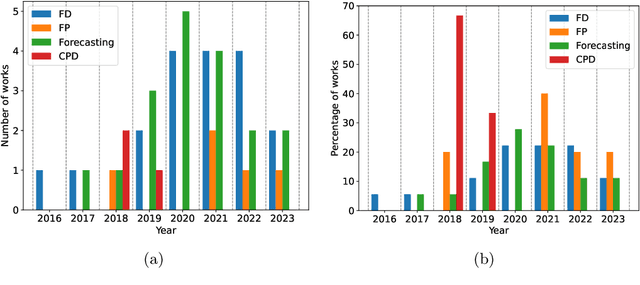

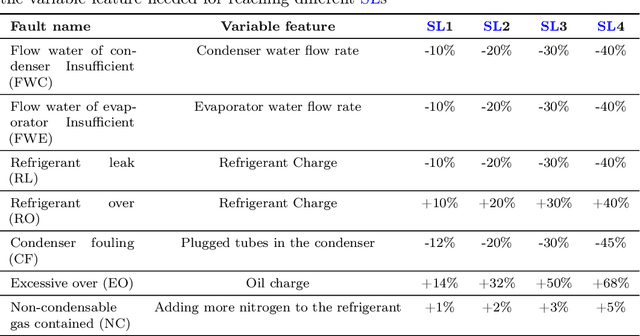

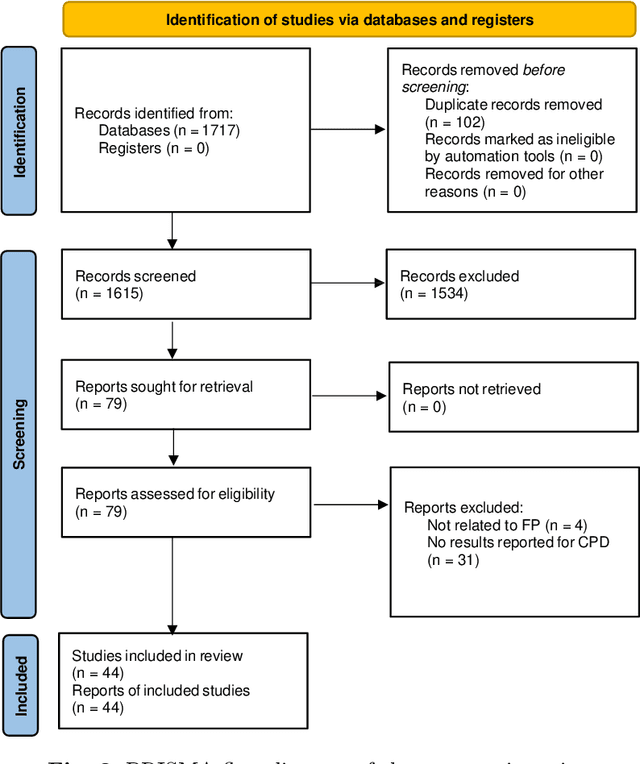

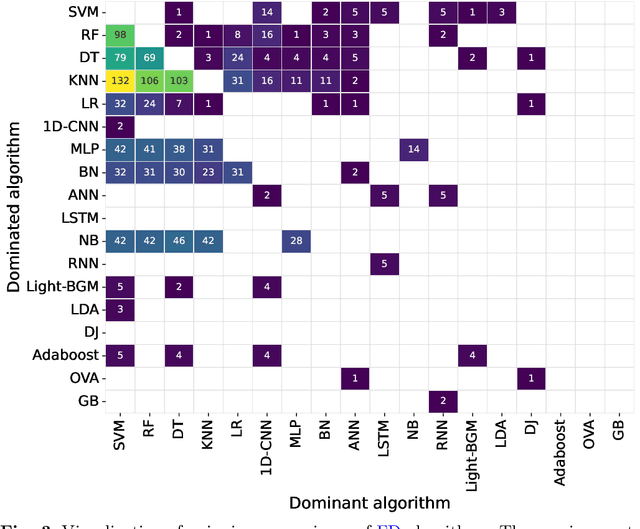

Abstract: In both industrial and residential contexts, compressor-based machines, such as refrigerators, HVAC systems, heat pumps and chillers, are essential to fulfil production and consumers' needs. The diffusion of sensors and IoT connectivity supports the development of monitoring systems able to detect and predict faults, identify behavioural shifts and forecast the operational status of machines and of their components. The focus of this paper is to survey the recent research on such tasks as Fault Detection, Fault Prediction, Forecasting and Change Point Detection applied to multivariate time series characterizing the operations of compressor-based machines. Specifically, Fault Detection detects and diagnoses faults, Fault Prediction predicts such occurrences, Forecasting anticipates the future value of characteristic variables of machines and Change Point Detection identifies significant variations in the behaviour of the appliances, such as a change in the working regime. We identify and classify the approaches to the above-mentioned tasks, compare the algorithms employed, highlight the gaps in the current state of the art and discuss the most promising future research directions in the field.

Predicting machine failures from multivariate time series: an industrial case study

Feb 27, 2024

Abstract: Non-neural Machine Learning (ML) and Deep Learning (DL) models are often used to predict system failures in the context of industrial maintenance. However, only a few studies jointly assess the effect of varying the amount of past data used to make a prediction and how far into the future the forecast extends. This study evaluates the impact of the size of the reading window and of the prediction window on the performance of models trained to forecast failures in three data sets concerning the operation of (1) an industrial wrapping machine working in discrete sessions, (2) an industrial blood refrigerator working continuously, and (3) a nitrogen generator working continuously. The problem is formulated as a binary classification task that assigns the positive label to the prediction window based on the probability of a failure occurring in that interval. Six algorithms (logistic regression, random forest, support vector machine, LSTM, ConvLSTM, and Transformers) are compared using multivariate telemetry time series. The results indicate that, in the considered scenarios, the size of the prediction window plays a crucial role. They also highlight the effectiveness of DL approaches at classifying data with diverse time-dependent patterns preceding a failure and the effectiveness of ML approaches at classifying similar and repetitive patterns preceding a failure.
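
The reading-window and prediction-window formulation described in this abstract can be illustrated with a minimal sketch. The function name `label_windows`, the toy data, and the rule that a sample is positive when at least one failure falls inside its prediction window are assumptions made for illustration; the labelling and preprocessing used in the paper may differ.

```python
from typing import List, Sequence, Tuple


def label_windows(
    readings: Sequence[Sequence[float]],
    failures: Sequence[int],
    reading_window: int,
    prediction_window: int,
) -> List[Tuple[List[Sequence[float]], int]]:
    """Slide over a multivariate series and build (past readings, label) pairs.

    Assumption: the label is 1 when at least one failure flag is set inside
    the prediction window that immediately follows the reading window.
    """
    samples = []
    last_start = len(readings) - reading_window - prediction_window
    for start in range(last_start + 1):
        split = start + reading_window
        past = list(readings[start:split])                # model input
        future = failures[split:split + prediction_window]  # interval to label
        samples.append((past, int(any(future))))
    return samples


# Toy series: one sensor value per time step, a single failure at step 4.
readings = [[0.1], [0.2], [0.3], [0.9], [1.5], [0.2]]
failures = [0, 0, 0, 0, 1, 0]
samples = label_windows(readings, failures, reading_window=2, prediction_window=2)
labels = [label for _, label in samples]
```

Varying `reading_window` and `prediction_window` in such a routine changes both the number of samples and the share of positive labels, which is the kind of effect the study sets out to measure.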

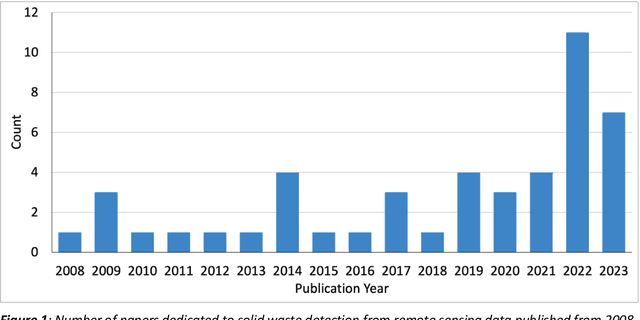

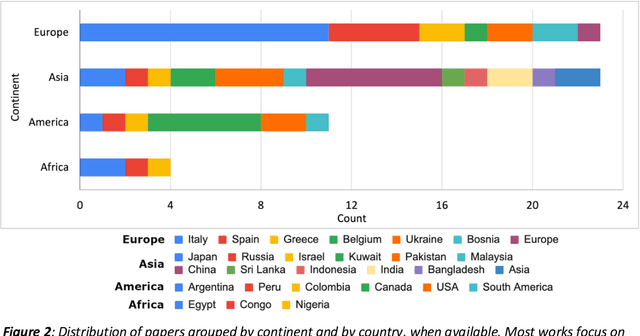

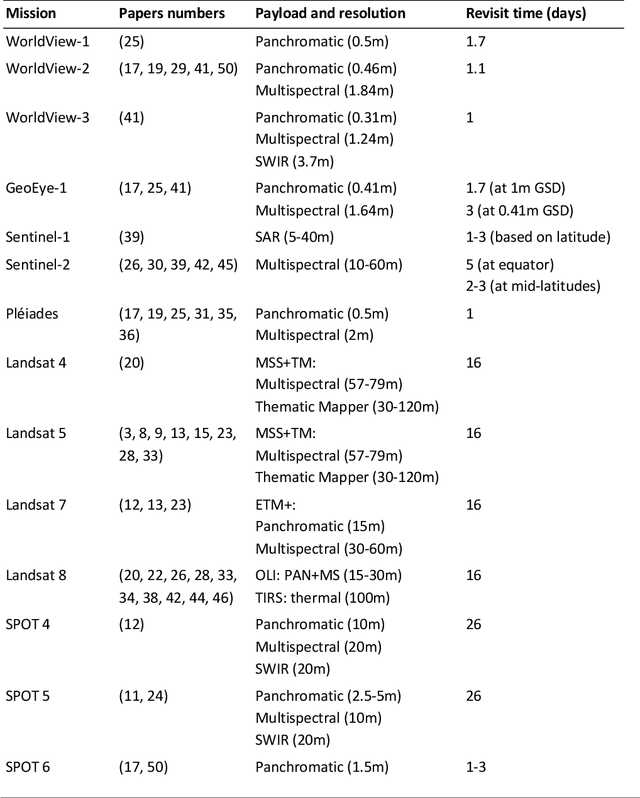

Solid Waste Detection in Remote Sensing Images: A Survey

Feb 14, 2024

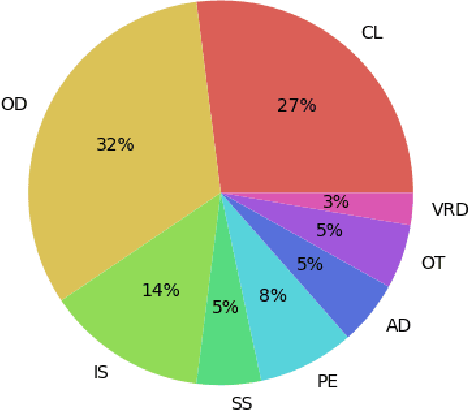

Abstract: The detection and characterization of illegal solid waste disposal sites are essential for environmental protection, particularly for mitigating pollution and health hazards. Improperly managed landfills contaminate soil and groundwater via rainwater infiltration, posing threats to both animals and humans. Traditional landfill identification approaches, such as on-site inspections, are time-consuming and expensive. Remote sensing is a cost-effective solution for the identification and monitoring of solid waste disposal sites that enables broad coverage and repeated acquisitions over time. Earth Observation (EO) satellites, equipped with an array of sensors and imaging capabilities, have been providing high-resolution data for several decades. Researchers have proposed specialized techniques that leverage remote sensing imagery to perform a range of tasks such as waste site detection, dumping site monitoring, and assessment of suitable locations for new landfills. This review aims to provide a detailed illustration of the most relevant proposals for the detection and monitoring of solid waste sites by describing and comparing the approaches, the implemented techniques, and the employed data. Furthermore, since the data sources are of the utmost importance for developing an effective solid waste detection model, a comprehensive overview of the satellites and publicly available data sets is presented. Finally, this paper identifies the open issues in the state of the art and discusses the relevant research directions for reducing the costs and improving the effectiveness of novel solid waste detection methods.

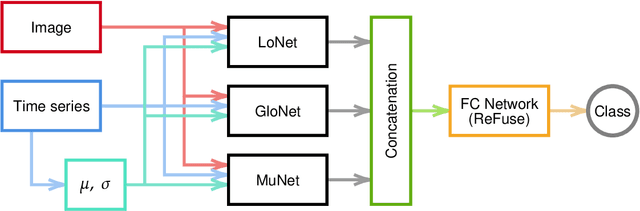

DeepGraviLens: a Multi-Modal Architecture for Classifying Gravitational Lensing Data

May 03, 2022

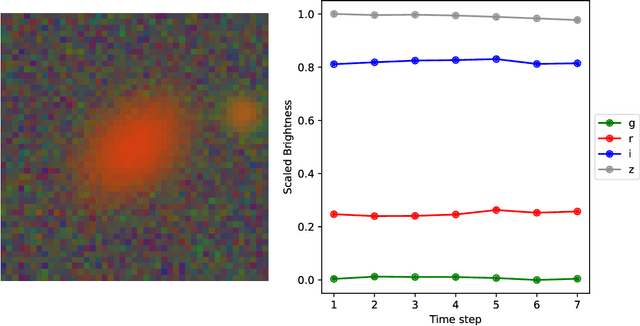

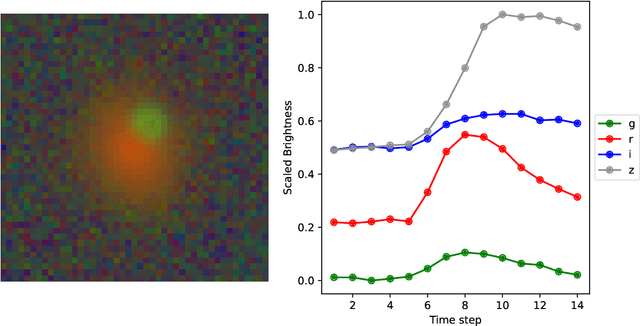

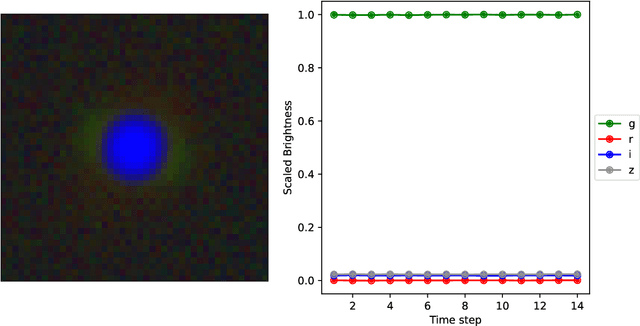

Abstract: Gravitational lensing is the relativistic effect generated by massive bodies, which bend the space-time surrounding them. It is a deeply investigated topic in astrophysics and allows validating theoretical relativistic results and studying faint astrophysical objects that would not be visible otherwise. In recent years Machine Learning methods have been applied to support the analysis of the gravitational lensing phenomena by detecting lensing effects in data sets consisting of images associated with brightness variation time series. However, the state-of-the-art approaches either consider only images and neglect time-series data or achieve relatively low accuracy on the most difficult data sets. This paper introduces DeepGraviLens, a novel multi-modal network that classifies spatio-temporal data belonging to one non-lensed system type and three lensed system types. It surpasses the current state-of-the-art accuracy results by $\approx$ 19% to $\approx$ 43%, depending on the considered data set. Such an improvement will enable the acceleration of the analysis of lensed objects in upcoming astrophysical surveys, which will exploit the petabytes of data collected, e.g., from the Vera C. Rubin Observatory.

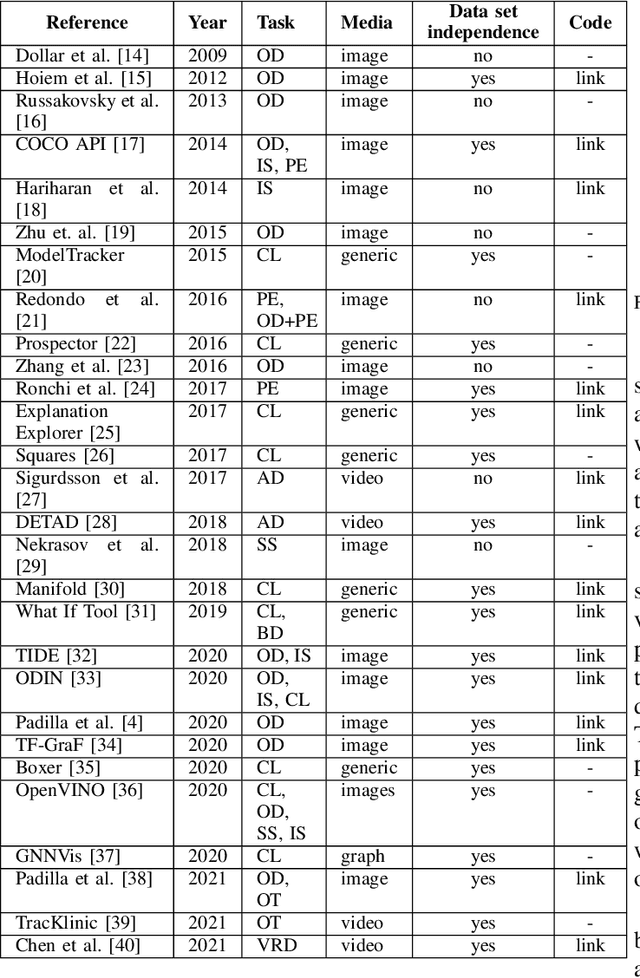

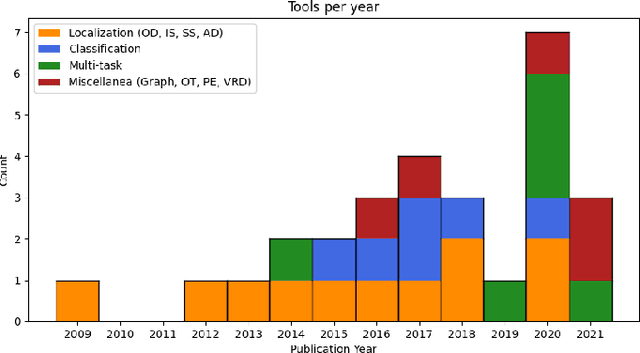

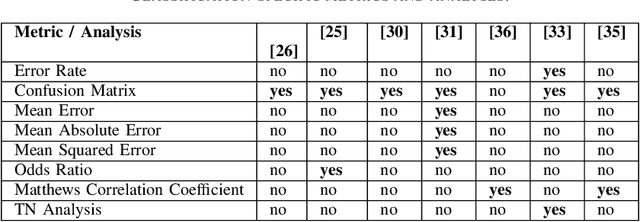

Black-box error diagnosis in deep neural networks: a survey of tools

Jan 17, 2022

Abstract: The application of Deep Neural Networks (DNNs) to a broad variety of tasks demands methods for coping with the complex and opaque nature of these architectures. The analysis of performance can be pursued in two ways. On the one hand, model interpretation techniques aim at "opening the box" to assess the relationship between the input, the inner layers, and the output. For example, saliency and attention models exploit knowledge of the architecture to capture the essential regions of the input that have the most impact on the inference process and output. On the other hand, models can be analysed as "black boxes", e.g., by associating the input samples with extra annotations that do not contribute to model training but can be exploited for characterizing the model response. Such performance-driven meta-annotations enable the detailed characterization of performance metrics and errors and help scientists identify the features of the input responsible for prediction failures and focus their model improvement efforts. This paper presents a structured survey of the tools that support the "black box" analysis of DNNs and discusses the gaps in the current proposals and the relevant future directions in this research field.

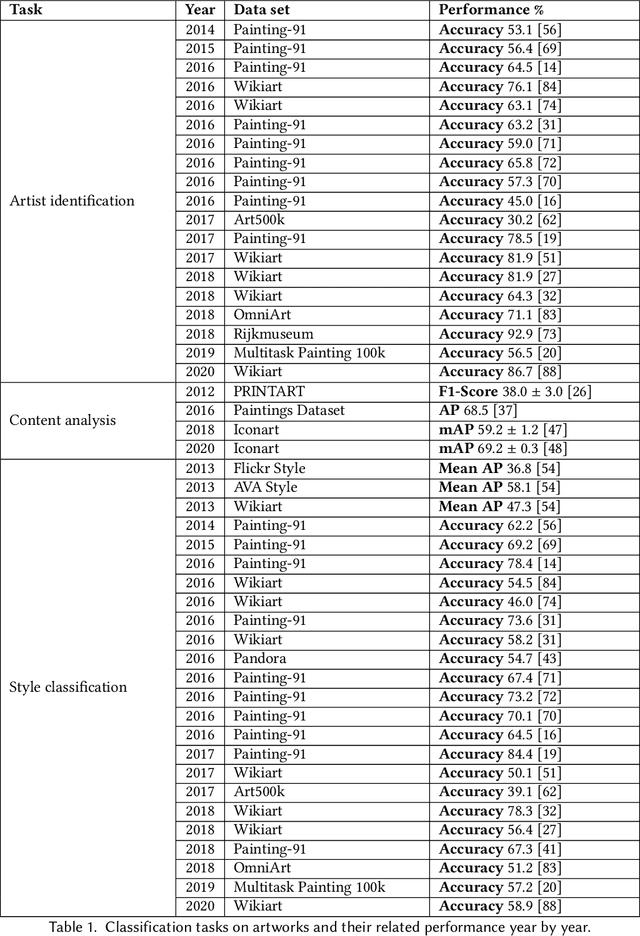

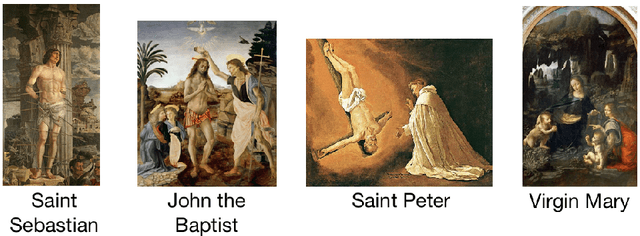

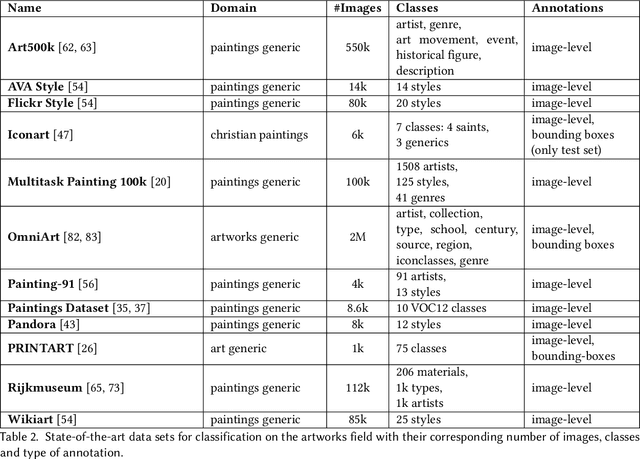

A Data Set and a Convolutional Model for Iconography Classification in Paintings

Oct 06, 2020

Abstract: Iconography in art is the discipline that studies the visual content of artworks to determine their motifs and themes and to characterize the way these are represented. It is a subject of active research for a variety of purposes, including the interpretation of meaning, the investigation of the origin and diffusion in time and space of representations, and the study of influences across artists and artworks. With the proliferation of digital archives of art images, the possibility arises of applying Computer Vision techniques to the analysis of art images at an unprecedented scale, which may support iconography research and education. In this paper we introduce a novel paintings data set for iconography classification and present the quantitative and qualitative results of applying a Convolutional Neural Network (CNN) classifier to the recognition of the iconography of artworks. The proposed classifier achieves good performance (71.17% Precision, 70.89% Recall, 70.25% F1-Score and 72.73% Average Precision) in the task of identifying saints in Christian religious paintings, a task made difficult by the presence of classes with very similar visual features. Qualitative analysis of the results shows that the CNN focuses on the traditional iconic motifs that characterize the representation of each saint and exploits such hints to attain correct identification. The ultimate goal of our work is to enable the automatic extraction, decomposition, and comparison of iconography elements to support iconographic studies and automatic artwork annotation.

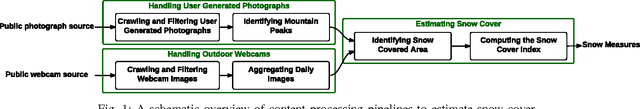

Estimating snow cover from publicly available images

Aug 05, 2015

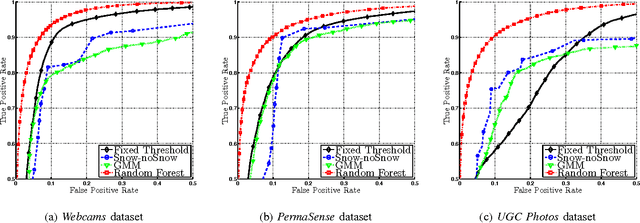

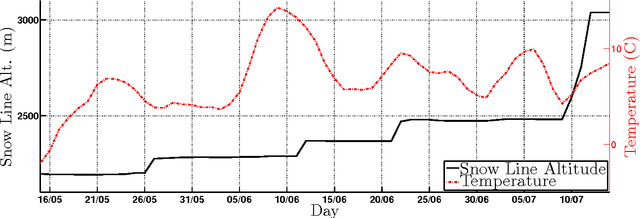

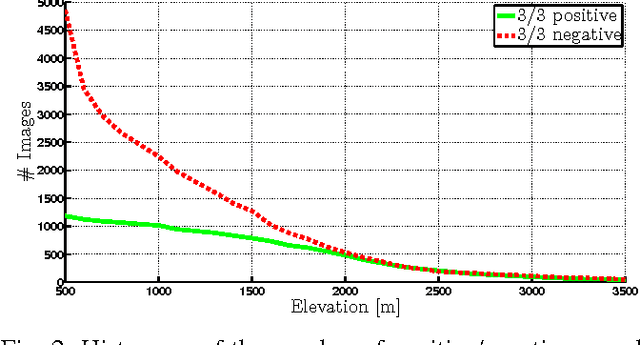

Abstract: In this paper we study the problem of estimating snow cover in mountainous regions, that is, the spatial extent of the earth surface covered by snow. We argue that publicly available visual content, in the form of user-generated photographs and image feeds from outdoor webcams, can both be leveraged as additional measurement sources, complementing existing ground, satellite and airborne sensor data. To this end, we describe two content acquisition and processing pipelines that are tailored to such sources, addressing the specific challenges posed by each of them, e.g., identifying the mountain peaks, filtering out images taken in bad weather conditions, and handling varying illumination conditions. The final outcome is summarized in a snow cover index, which indicates, for a specific mountain and day of the year, the fraction of visible area covered by snow, possibly at different elevations. We created a manually labelled dataset to assess the accuracy of the image snow-covered area estimation, achieving 90.0% precision at 91.1% recall. In addition, we show that seasonal trends related to air temperature are captured by the snow cover index.
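
As a rough illustration of the snow cover index mentioned in this abstract, the sketch below computes the fraction of visible mountain pixels that are classified as snow. The function name `snow_cover_index`, the two binary masks, and the toy values are hypothetical; how the paper derives the masks and aggregates the index per day or elevation is not shown here.

```python
from typing import Optional, Sequence


def snow_cover_index(
    snow_mask: Sequence[Sequence[int]],
    visible_mask: Sequence[Sequence[int]],
) -> Optional[float]:
    """Fraction of visible mountain pixels labelled as snow.

    Assumption: both masks are binary grids of the same shape, where 1 in
    `visible_mask` marks a pixel belonging to the visible mountain area and
    1 in `snow_mask` marks a pixel classified as snow.
    """
    visible = 0
    snowy = 0
    for snow_row, visible_row in zip(snow_mask, visible_mask):
        for is_snow, is_visible in zip(snow_row, visible_row):
            if is_visible:
                visible += 1
                if is_snow:
                    snowy += 1
    if visible == 0:
        return None  # no visible mountain area, index undefined
    return snowy / visible


# Toy 2x3 image: five visible pixels, three of them snow-covered.
visible_mask = [[0, 1, 1],
                [1, 1, 1]]
snow_mask = [[1, 1, 0],
             [0, 1, 1]]
index = snow_cover_index(snow_mask, visible_mask)
```

Pixels outside the visible area are ignored even when flagged as snow, so occlusions such as clouds or foreground objects do not inflate the index in this simplified version.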

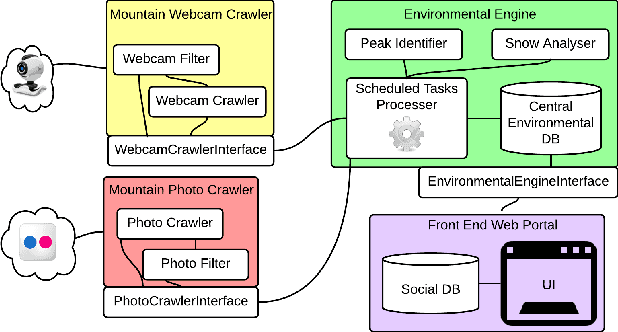

SnowWatch: Snow Monitoring through Acquisition and Analysis of User-Generated Content

Jul 31, 2015

Abstract: We present a system for complementing snow phenomena monitoring with virtual measurements extracted from public visual content. The proposed system integrates the automatic acquisition and analysis of photographs and webcam images depicting Alpine mountains. In particular, the technical demonstration consists of a web portal that interfaces the whole system with the public. It acts as an entertaining photo-sharing social web site while acquiring the visual content necessary for environmental monitoring.
