Philipp Weiß

Do Users Benefit From Interpretable Vision? A User Study, Baseline, And Dataset

Apr 25, 2022

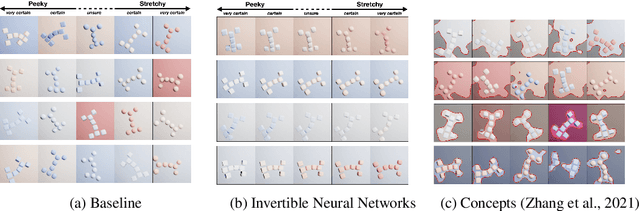

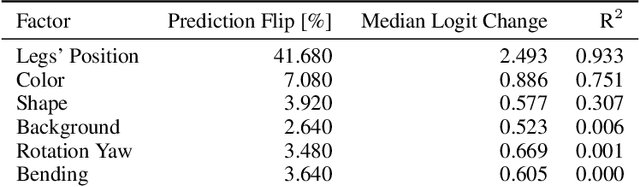

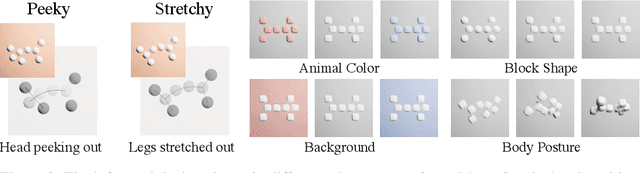

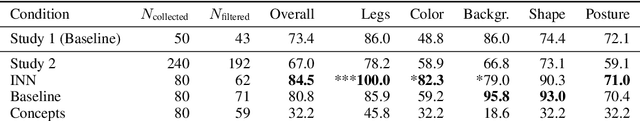

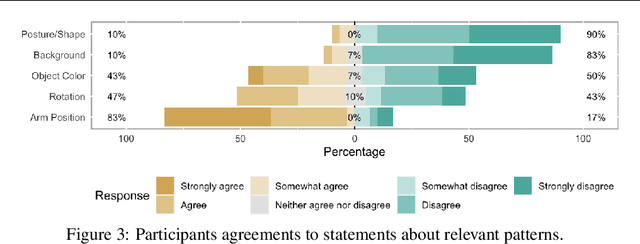

Abstract: A variety of methods exist to explain image classification models. However, whether they provide any benefit to users over simply comparing various inputs and the model's respective predictions remains unclear. We conducted a user study (N=240) to test how such a baseline explanation technique performs against concept-based and counterfactual explanations. To this end, we contribute a synthetic dataset generator capable of biasing individual attributes and quantifying their relevance to the model. In the study, we assess whether participants can identify the relevant set of attributes compared to the ground truth. Our results show that the baseline outperformed concept-based explanations. Counterfactual explanations from an invertible neural network performed similarly to the baseline. Still, they allowed users to identify some attributes more accurately. Our results highlight the importance of measuring how well users can reason about biases of a model, rather than solely relying on technical evaluations or proxy tasks. We open-source our study and dataset so they can serve as a blueprint for future studies. For code, see https://github.com/berleon/do_users_benefit_from_interpretable_vision

Two4Two: Evaluating Interpretable Machine Learning - A Synthetic Dataset For Controlled Experiments

May 06, 2021

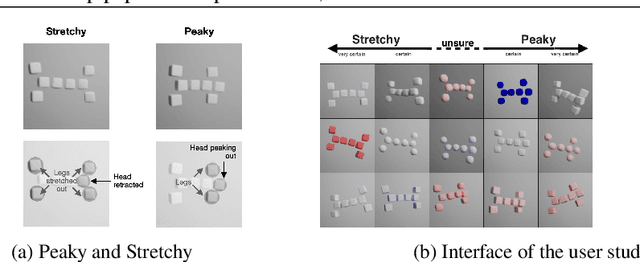

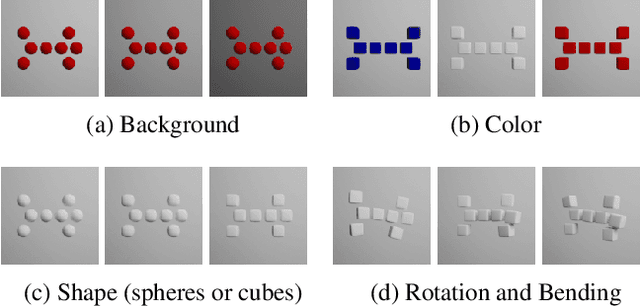

Abstract: A growing number of approaches exist to generate explanations for image classification. However, few of these approaches are subjected to human-subject evaluations, partly because it is challenging to design controlled experiments with natural image datasets, as they leave essential factors out of the researcher's control. With our approach, researchers can describe their desired dataset with only a few parameters. Based on these, our library generates synthetic image data of two 3D abstract animals. The resulting data is suitable for algorithmic as well as human-subject evaluations. Our user study results demonstrate that our method can create biases predictive enough for a classifier and subtle enough to be noticeable only to every second participant inspecting the data visually. Our approach significantly lowers the barrier for conducting human-subject evaluations, thereby facilitating more rigorous investigations into interpretable machine learning. For our library and datasets, see https://github.com/mschuessler/two4two/
