Philipp Teutsch

Slim multi-scale convolutional autoencoder-based reduced-order models for interpretable features of a complex dynamical system

Jan 06, 2025

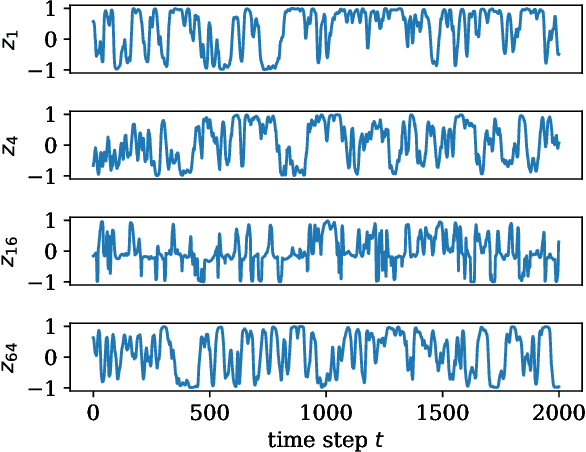

Abstract: In recent years, data-driven deep learning models have gained significant interest in the analysis of turbulent dynamical systems. Within the context of reduced-order models (ROMs), convolutional autoencoders (CAEs) pose a universally applicable alternative to conventional approaches. They can learn nonlinear transformations directly from data, without prior knowledge of the system. However, the features generated by such models lack interpretability. Thus, the resulting model is a black box that effectively reduces the complexity of the system but does not provide insights into the meaning of the latent features. To address this critical issue, we introduce a novel interpretable CAE approach for high-dimensional fluid flow data that maintains the reconstruction quality of conventional CAEs and allows for feature interpretation. Our method can be easily integrated into any existing CAE architecture with minor modifications of the training process. We compare our approach to Proper Orthogonal Decomposition (POD) and two existing methods for interpretable CAEs. We apply all methods to three different experimental turbulent Rayleigh-Bénard convection datasets with varying complexity. Our results show that the proposed method is lightweight, easy to train, and achieves relative reconstruction performance improvements of up to 6.4% over POD for 64 modes. The relative improvement increases to up to 229.8% as the number of modes decreases. Additionally, our method delivers interpretable features similar to those of POD and is significantly less resource-intensive than existing CAE approaches, using less than 2% of the parameters. These existing approaches either trade interpretability for reconstruction performance or only provide interpretability to a limited extent.
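For readers unfamiliar with the POD baseline mentioned in this abstract, the following is a minimal sketch of a POD reconstruction with a fixed number of modes, computed via the singular value decomposition. It is not the authors' code: the function names `pod_reconstruct` and `relative_error`, the snapshot layout (one flattened field per row), and the choice of a relative L2 error are assumptions made for illustration only.

```python
import numpy as np


def pod_reconstruct(snapshots, n_modes):
    """Reconstruct snapshots from their leading POD modes.

    snapshots: array of shape (n_samples, n_features), one flattened
               field per row (assumed layout, not taken from the paper).
    n_modes:   number of POD modes to keep.
    """
    mean = snapshots.mean(axis=0)
    fluct = snapshots - mean  # POD is applied to the fluctuations
    # Rows of vt are the orthonormal spatial modes, ordered by energy.
    _, _, vt = np.linalg.svd(fluct, full_matrices=False)
    modes = vt[:n_modes]
    coeffs = fluct @ modes.T  # project onto the retained modes
    return mean + coeffs @ modes


def relative_error(x, x_hat):
    """Relative L2 reconstruction error (hypothetical metric choice)."""
    return np.linalg.norm(x - x_hat) / np.linalg.norm(x)
```

Keeping more modes can only lower the reconstruction error of this linear projection, which is why comparisons against a CAE are usually reported for the same number of modes or latent features.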

Flipped Classroom: Effective Teaching for Time Series Forecasting

Oct 17, 2022

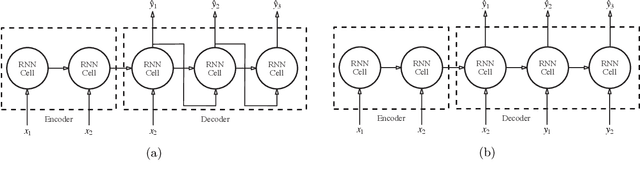

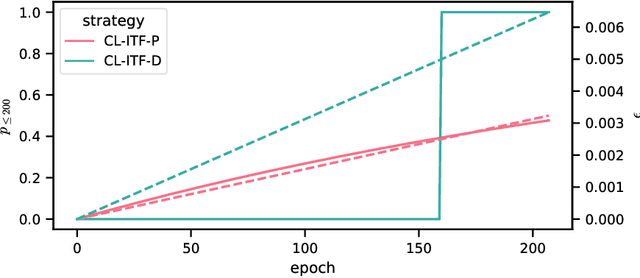

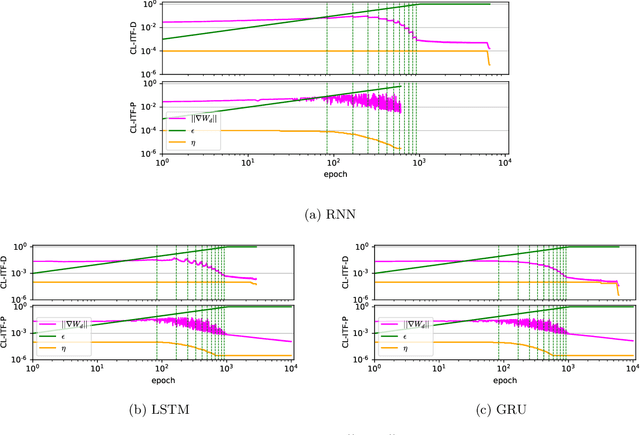

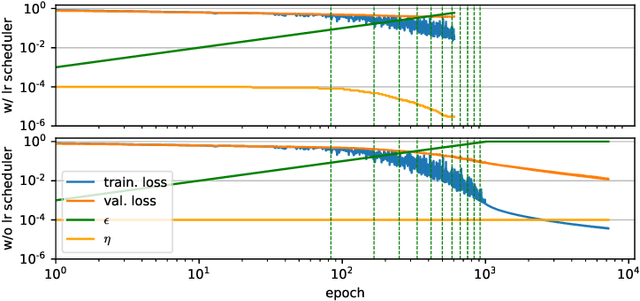

Abstract: Sequence-to-sequence models based on LSTM and GRU are a popular choice for forecasting time series data, reaching state-of-the-art performance. Training such models can be delicate, though. The two most common training strategies within this context are teacher forcing (TF) and free running (FR). TF can be used to help the model converge faster but may provoke an exposure bias issue due to a discrepancy between the training and inference phases. FR helps to avoid this but does not necessarily lead to better results, since it tends to make the training slow and unstable instead. Scheduled sampling was the first approach tackling these issues by taking the best of both worlds and combining them into a curriculum learning (CL) strategy. Although scheduled sampling seems to be a convincing alternative to FR and TF, we found that, even if parametrized carefully, scheduled sampling may lead to premature termination of the training when applied to time series forecasting. To mitigate the problems of the above approaches, we formalize CL strategies along the training scale as well as the training iteration scale. We propose several new curricula and systematically evaluate their performance in two experimental sets. For our experiments, we utilize six datasets generated from prominent chaotic systems. We found that the newly proposed increasing training scale curricula with a probabilistic iteration scale curriculum consistently outperform previous training strategies, yielding an NRMSE improvement of up to 81% over FR or TF training. For some datasets we additionally observe a reduced number of training iterations. We observed that all models trained with the new curricula yield higher prediction stability, allowing for longer prediction horizons.
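The difference between teacher forcing, free running, and a probabilistic mix of the two can be sketched in a few lines. The code below is an illustrative sketch, not the paper's implementation: `rollout`, `linear_decay`, and the one-step model `step_fn` are hypothetical names, and the linearly decaying schedule is only one simple schedule in the spirit of scheduled sampling, not one of the curricula proposed in the paper.

```python
import numpy as np


def rollout(step_fn, first_input, targets, tf_prob, rng):
    """Roll a one-step model forward over len(targets) steps.

    After each step, the next input is the ground truth with probability
    tf_prob (teacher forcing) and the model's own prediction otherwise
    (free running). tf_prob=1.0 is pure TF, tf_prob=0.0 is pure FR.
    """
    preds = []
    x = first_input
    for y_true in targets:
        y_pred = step_fn(x)
        preds.append(y_pred)
        # Probabilistic choice made per iteration step.
        x = y_true if rng.random() < tf_prob else y_pred
    return np.array(preds)


def linear_decay(epoch, n_epochs):
    """Teacher-forcing probability that falls linearly from 1 to 0
    over training (hypothetical schedule, for illustration only)."""
    return max(0.0, 1.0 - epoch / n_epochs)
```

In a training loop one would call `rollout(..., tf_prob=linear_decay(epoch, n_epochs), ...)` so that the model sees ground-truth inputs early on and increasingly its own predictions later; other curricula only change how `tf_prob` evolves.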

Direct data-driven forecast of local turbulent heat flux in Rayleigh-Bénard convection

Feb 26, 2022

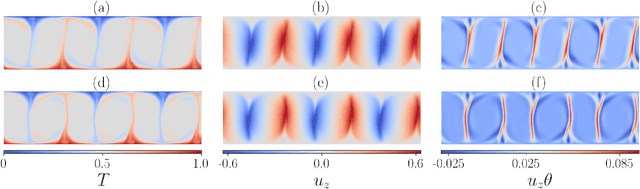

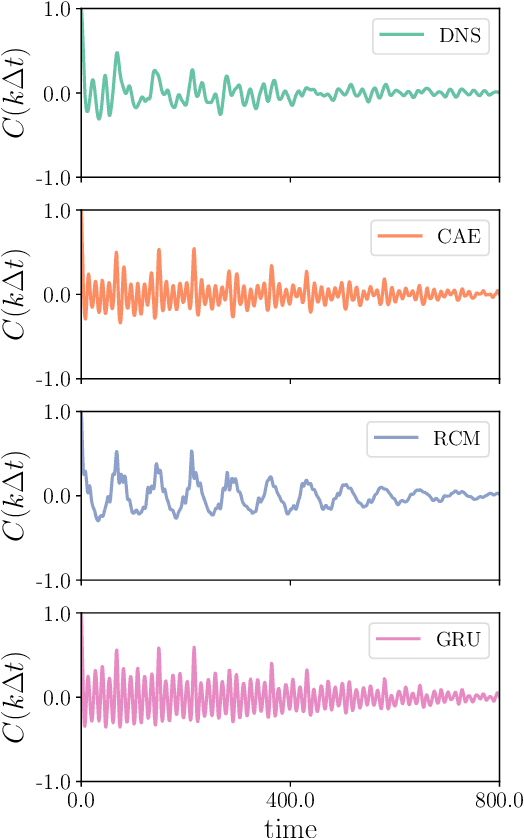

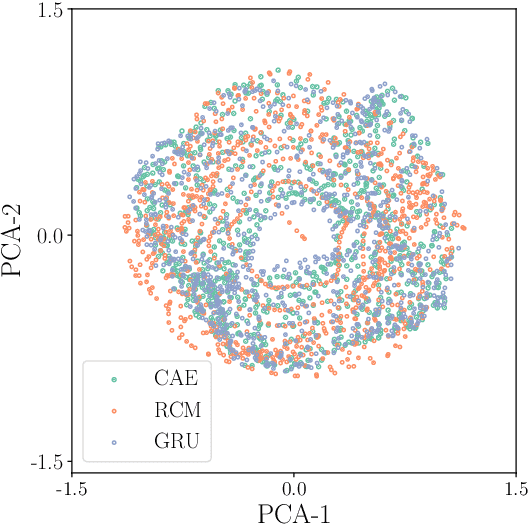

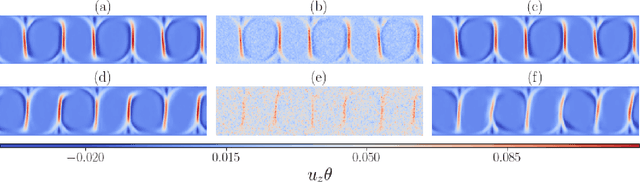

Abstract: A combined convolutional autoencoder-recurrent neural network machine learning model is presented to analyse and forecast the dynamics and low-order statistics of the local convective heat flux field in a two-dimensional turbulent Rayleigh-Bénard convection flow at Prandtl number ${\rm Pr}=7$ and Rayleigh number ${\rm Ra}=10^7$. Two recurrent neural networks are applied for the temporal advancement of flow data in the reduced latent data space: a reservoir computing model in the form of an echo state network, and a gated recurrent unit. Thereby, the present work exploits the modular combination of three different machine learning algorithms to build a fully data-driven and reduced model for the dynamics of the turbulent heat transfer in a complex thermally driven flow. The convolutional autoencoder with 12 hidden layers is able to reduce the dimensionality of the turbulence data to about 0.2% of their original size. Our results indicate a fairly good accuracy in the first- and second-order statistics of the convective heat flux. The algorithm is also able to reproduce the intermittent plume-mixing dynamics at the upper edges of the thermal boundary layers with some deviations. The same holds for the probability density function of the local convective heat flux, with differences in the far tails. Furthermore, we demonstrate the noise resilience of the framework, which suggests the present model might be applicable as a reduced dynamical model that delivers transport fluxes and their variations to the coarse grid cells of larger-scale computational models, such as global circulation models for the atmosphere and ocean.
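As a rough illustration of the reservoir computing component named in this abstract, the sketch below shows a generic echo state network: a fixed random reservoir driven by the input, and a linear readout fitted by ridge regression. It does not reproduce the paper's model; the function names, the uniform weight initialisation, the absence of a leaking rate, and all hyperparameters are assumptions. In the setting described above, the inputs would be latent vectors produced by the autoencoder.

```python
import numpy as np


def make_reservoir(n_in, n_res, spectral_radius, rng):
    """Draw fixed random input and reservoir weights (assumed uniform
    initialisation) and rescale the reservoir to a target spectral radius."""
    w_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))
    w = rng.uniform(-0.5, 0.5, size=(n_res, n_res))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with inputs of shape (n_steps, n_in) and
    return the reservoir states, shape (n_steps, n_res)."""
    states = np.zeros((len(inputs), w.shape[0]))
    r = np.zeros(w.shape[0])
    for t, u in enumerate(inputs):
        r = np.tanh(w @ r + w_in @ u)  # only the state evolves; weights stay fixed
        states[t] = r
    return states


def fit_readout(states, targets, ridge):
    """Fit the linear readout by ridge regression; returns w_out with
    shape (n_out, n_res) so that predictions are states @ w_out.T."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ targets).T
```

Only the readout is trained, which makes fitting a single linear solve; this is the main practical difference from a gated recurrent unit, whose recurrent weights are trained by backpropagation through time.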
