Philipp Flotho

Chair for Clinical Bioinformatics, Saarland Informatics Campus, Saarland University, Saarbrücken, Germany, Helmholtz Institute for Pharmaceutical Research Saarland

HOT-POT: Optimal Transport for Sparse Stereo Matching

Jan 18, 2026

Abstract: Stereo vision between images faces a range of challenges, including occlusions, motion, and camera distortions, across applications in autonomous driving, robotics, and face analysis. Due to parameter sensitivity, further complications arise for stereo matching with sparse features, such as facial landmarks. To overcome this ill-posedness and enable unsupervised sparse matching, we consider line constraints of the camera geometry from an optimal transport (OT) viewpoint. Formulating camera-projected points as (half)lines, we propose the use of the classical epipolar distance as well as a 3D ray distance to quantify matching quality. Employing these distances as a cost function of a (partial) OT problem, we arrive at efficiently solvable assignment problems. Moreover, we extend our approach to unsupervised object matching by formulating it as a hierarchical OT problem. The resulting algorithms allow for efficient feature and object matching, as demonstrated in our numerical experiments. Here, we focus on applications in facial analysis, where we aim to match distinct landmarking conventions.
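To make the idea of an epipolar cost feeding an assignment problem concrete, here is a minimal sketch, not the authors' implementation. It assumes a rectified stereo pair (the fundamental matrix `F_RECTIFIED` below is the textbook one for horizontal epipolar lines), equally many points in both images, and uniform masses, in which case the balanced OT problem has a permutation as an optimal plan. The brute-force search over permutations is a stand-in for a real assignment or partial OT solver, and all function names and sample points are hypothetical.

```python
from itertools import permutations
from math import hypot


def epipolar_line(F, p):
    """Return the line F @ (x, y, 1) as coefficients (a, b, c)."""
    x, y = p
    return tuple(row[0] * x + row[1] * y + row[2] for row in F)


def point_line_distance(line, q):
    """Euclidean distance from point q to the line a*x + b*y + c = 0."""
    a, b, c = line
    return abs(a * q[0] + b * q[1] + c) / hypot(a, b)


def transpose(F):
    return [list(col) for col in zip(*F)]


def symmetric_epipolar_distance(F, p, q):
    """Distance of q to the epipolar line of p, plus the reverse direction."""
    forward = point_line_distance(epipolar_line(F, p), q)
    backward = point_line_distance(epipolar_line(transpose(F), q), p)
    return forward + backward


def cost_matrix(F, left, right):
    return [[symmetric_epipolar_distance(F, p, q) for q in right] for p in left]


def best_assignment(cost):
    """Brute-force assignment; only sensible for a handful of points."""
    n = len(cost)
    best = min(
        permutations(range(n)),
        key=lambda perm: sum(cost[i][perm[i]] for i in range(n)),
    )
    return list(best)


# Assumed rectified setup: matching points share the same image row.
F_RECTIFIED = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
left = [(10.0, 5.0), (20.0, 15.0), (30.0, 25.0)]
right = [(18.0, 15.2), (27.0, 24.9), (7.0, 5.1)]

matching = best_assignment(cost_matrix(F_RECTIFIED, left, right))
print(matching)  # index of the right point assigned to each left point
```

In this toy setup each left point is paired with the right point on (almost) the same row. Handling unequal point counts or outliers would need the partial OT formulation mentioned in the abstract, which this sketch does not cover.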

Site-Level Fine-Tuning with Progressive Layer Freezing: Towards Robust Prediction of Bronchopulmonary Dysplasia from Day-1 Chest Radiographs in Extremely Preterm Infants

Jul 16, 2025

Abstract: Bronchopulmonary dysplasia (BPD) is a chronic lung disease affecting 35% of extremely low-birth-weight infants. Defined by oxygen dependence at 36 weeks postmenstrual age, it causes lifelong respiratory complications. However, preventive interventions carry severe risks, including neurodevelopmental impairment, ventilator-induced lung injury, and systemic complications. Therefore, early prognosis and prediction of BPD outcome are crucial to avoid unnecessary toxicity in low-risk infants. Admission radiographs of extremely preterm infants are routinely acquired within 24 h of life and could serve as a non-invasive prognostic tool. In this work, we developed and investigated a deep learning approach using chest X-rays from 163 extremely low-birth-weight infants ($\leq$32 weeks gestation, 401-999 g) obtained within 24 hours of birth. We fine-tuned a ResNet-50 pretrained specifically on adult chest radiographs, employing progressive layer freezing with discriminative learning rates to prevent overfitting, and evaluated CutMix augmentation and linear probing. For moderate/severe BPD outcome prediction, our best-performing model with progressive freezing, linear probing, and CutMix achieved an AUROC of 0.78 $\pm$ 0.10, a balanced accuracy of 0.69 $\pm$ 0.10, and an F1-score of 0.67 $\pm$ 0.11. In-domain pretraining significantly outperformed ImageNet initialization (p = 0.031), which confirms that domain-specific pretraining is important for BPD outcome prediction. Routine IRDS grades showed limited prognostic value (AUROC 0.57 $\pm$ 0.11), confirming the need for learned markers. Our approach demonstrates that domain-specific pretraining enables accurate BPD prediction from routine day-1 radiographs. Through progressive freezing and linear probing, the method remains computationally feasible for site-level implementation and future federated learning deployments.
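The abstract does not spell out the freezing schedule, so the following is only one plausible reading, sketched without any deep learning framework. It assumes that layer groups are frozen from the input side every few epochs, that the classification head is never frozen, and that learning rates shrink geometrically with distance from the head. The group names, `base_lr`, `decay`, and `freeze_every` are hypothetical values chosen for illustration, not settings from the paper.

```python
def layer_learning_rates(groups, epoch, base_lr=1e-3, decay=0.5, freeze_every=2):
    """Return a learning rate per layer group for the given epoch.

    groups: names ordered from the input side to the head.
    A rate of 0.0 marks a frozen group.
    """
    # Freeze one more group (starting at the input) every `freeze_every`
    # epochs, but always keep the last group (the head) trainable.
    n_frozen = min(epoch // freeze_every, len(groups) - 1)
    rates = {}
    for depth, name in enumerate(groups):
        if depth < n_frozen:
            rates[name] = 0.0
        else:
            # Discriminative rates: groups further from the head learn slower.
            distance_from_head = len(groups) - 1 - depth
            rates[name] = base_lr * decay ** distance_from_head
    return rates


# Hypothetical grouping of a ResNet-style backbone plus a linear head.
GROUPS = ["stem", "layer1", "layer2", "layer3", "layer4", "head"]
schedule = {epoch: layer_learning_rates(GROUPS, epoch) for epoch in range(0, 12, 2)}

for epoch, rates in schedule.items():
    trainable = [name for name, lr in rates.items() if lr > 0.0]
    print(epoch, trainable)
```

In a framework such as PyTorch, a schedule like this would typically be applied through per-group optimizer settings and by disabling gradients for frozen groups; that wiring is left out here.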

T-FAKE: Synthesizing Thermal Images for Facial Landmarking

Aug 27, 2024

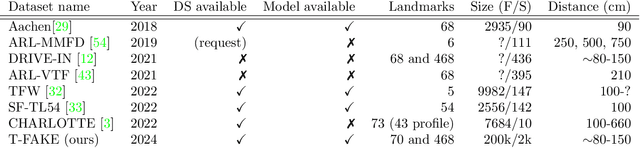

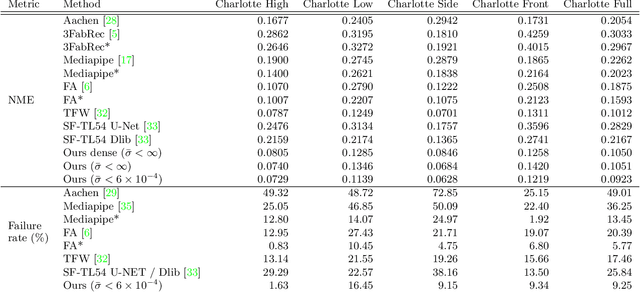

Abstract: Facial analysis is a key component in a wide range of applications such as security, autonomous driving, entertainment, and healthcare. Despite the availability of various facial RGB datasets, the thermal modality, which plays a crucial role in life sciences, medicine, and biometrics, has been largely overlooked. To address this gap, we introduce the T-FAKE dataset, a new large-scale synthetic thermal dataset with sparse and dense landmarks. To facilitate the creation of the dataset, we propose a novel RGB2Thermal loss function, which enables the transfer of thermal style to RGB faces. By utilizing the Wasserstein distance between thermal and RGB patches and the statistical analysis of clinical temperature distributions on faces, we ensure that the generated thermal images closely resemble real samples. Using RGB2Thermal style transfer based on our RGB2Thermal loss function, we create the T-FAKE dataset, a large-scale synthetic thermal dataset of faces. Leveraging our novel T-FAKE dataset, probabilistic landmark prediction, and label adaptation networks, we demonstrate significant improvements in landmark detection methods on thermal images across different landmark conventions. Our models show excellent performance with both sparse 70-point landmarks and dense 478-point landmark annotations. Our code and models are available at https://github.com/phflot/tfake.
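As a rough illustration of a Wasserstein distance between patches, the sketch below computes the one-dimensional Wasserstein-1 distance between the intensity values of two equally sized patches, which reduces to the mean absolute difference of the sorted values. This is a deliberate simplification: the actual RGB2Thermal loss is defined in the paper and the linked repository, and the function name and sample values here are hypothetical.

```python
def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equally sized 1D samples.

    For empirical distributions with the same number of points, the optimal
    coupling matches the sorted values in order.
    """
    if len(a) != len(b):
        raise ValueError("this sketch assumes equally sized samples")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


# Flattened intensity values of two hypothetical 2x2 patches.
patch_a = [30.0, 31.0, 34.0, 36.0]
patch_b = [37.0, 31.0, 35.0, 32.0]

distance = wasserstein_1d(patch_a, patch_b)
print(distance)
```

Because the values are sorted first, the distance ignores where in the patch a given intensity occurs and compares only the two intensity distributions.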

Lagrangian Motion Magnification with Double Sparse Optical Flow Decomposition

Apr 15, 2022

Abstract: Motion magnification techniques aim at amplifying and hence revealing subtle motion in videos. There are two main approaches to reach this goal, namely Eulerian and Lagrangian techniques. While the first one magnifies motion implicitly by operating directly on image pixels, the Lagrangian approach uses optical flow techniques to extract and amplify pixel trajectories. Microexpressions are fast and spatially small facial expressions that are difficult to detect. In this paper, we propose a novel approach for local Lagrangian motion magnification of facial micromovements. Our contribution is three-fold: first, we fine-tune the recurrent all-pairs field transforms for optical flows (RAFT) deep learning approach for faces by adding ground truth obtained from the variational dense inverse search (DIS) for optical flow algorithm applied to the CASME II video set of faces. This enables us to produce optical flows of facial videos in an efficient and sufficiently accurate way. Second, since facial micromovements are local in both space and time, we propose to approximate the optical flow field by components that are sparse in both space and time, leading to a double sparse decomposition. Third, we use this decomposition to magnify micro-motions in specific areas of the face, where we introduce a new forward warping strategy using a triangular splitting of the image grid and barycentric interpolation of the RGB vectors at the corners of the transformed triangles. We demonstrate the very good performance of our approach by various examples.
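The two geometric ingredients named in the abstract, amplifying flow displacements and barycentric interpolation of colors inside a transformed triangle, can be sketched as follows. This is a toy example for a single triangle and a single target pixel, not the paper's warping pipeline; the magnification factor `alpha`, the flow values, and all function names are assumptions made for illustration.

```python
def magnify(points, flow, alpha):
    """Lagrangian magnification: move each point by alpha times its flow."""
    return [(x + alpha * u, y + alpha * v) for (x, y), (u, v) in zip(points, flow)]


def barycentric_weights(tri, p):
    """Barycentric coordinates of point p with respect to triangle tri."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    w1 = ((y2 - y3) * (p[0] - x3) + (x3 - x2) * (p[1] - y3)) / det
    w2 = ((y3 - y1) * (p[0] - x3) + (x1 - x3) * (p[1] - y3)) / det
    return w1, w2, 1.0 - w1 - w2


def interpolate_color(tri, colors, p):
    """Blend the corner colors with the barycentric weights of p."""
    weights = barycentric_weights(tri, p)
    return tuple(sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3))


# One grid triangle, its (hypothetical) optical flow, and its corner colors.
corners = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
flow = [(0.125, 0.0), (0.125, 0.0), (0.0, 0.25)]
colors = [(255.0, 0.0, 0.0), (0.0, 255.0, 0.0), (0.0, 0.0, 255.0)]

warped = magnify(corners, flow, alpha=8.0)
pixel = (1.0, 1.0)  # target pixel covered by the warped triangle
weights = barycentric_weights(warped, pixel)
color = interpolate_color(warped, colors, pixel)
print(warped, weights, color)
```

A pixel lies inside the warped triangle exactly when all three weights are non-negative, which is the test a forward-warping loop over triangles would use before writing a color to the output image.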
