Peter Langeberg

On the Effect of Low-Rank Weights on Adversarial Robustness of Neural Networks

Jan 29, 2019

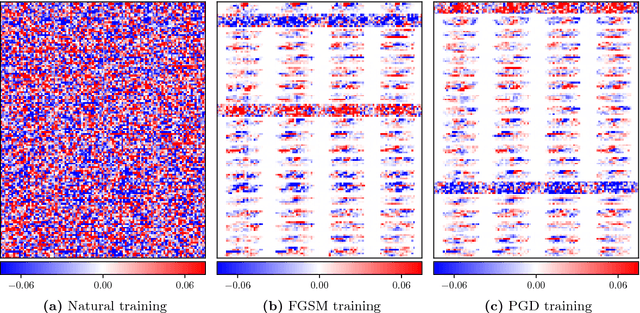

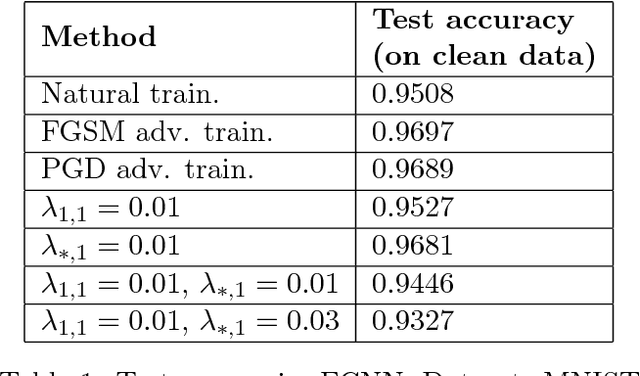

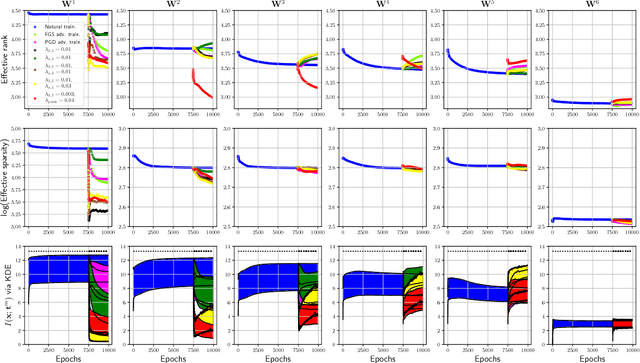

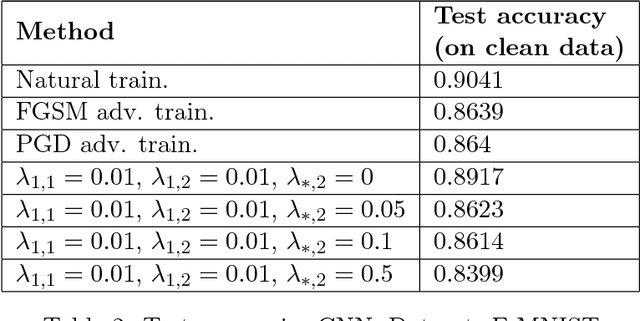

Abstract: Recently, there has been an abundance of works on designing Deep Neural Networks (DNNs) that are robust to adversarial examples. In particular, a central question is which features of DNNs influence adversarial robustness and, therefore, can be used to design robust DNNs. In this work, this problem is studied through the lens of compression, which is captured by the low-rank structure of weight matrices. It is first shown that adversarial training tends to promote simultaneously low-rank and sparse structure in the weight matrices of neural networks. This is measured through the notions of effective rank and effective sparsity. In the reverse direction, when the low-rank structure is promoted by nuclear norm regularization and combined with sparsity-inducing regularizations, neural networks show significantly improved adversarial robustness. The effect of nuclear norm regularization on adversarial robustness is paramount when it is applied to convolutional neural networks. Although still not competing with adversarial training, this result contributes to understanding the key properties of robust classifiers.
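
The quantities named in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not give the definitions, so the ones below are assumptions: effective rank is taken as the exponential of the Shannon entropy of the normalized singular values, and effective sparsity is approximated by the squared ratio of the l1 and l2 norms of the entries. The function names are hypothetical and are not taken from the paper.

```python
import numpy as np

def nuclear_norm(W):
    """Nuclear norm: the sum of the singular values of W."""
    return float(np.linalg.svd(W, compute_uv=False).sum())

def effective_rank(W, eps=1e-12):
    """Assumed definition: exp of the entropy of the normalized singular values."""
    s = np.linalg.svd(W, compute_uv=False)
    p = s / (s.sum() + eps)
    p = p[p > eps]  # drop numerically zero singular values
    return float(np.exp(-(p * np.log(p)).sum()))

def effective_sparsity(W, eps=1e-12):
    """Assumed proxy: (l1 norm / l2 norm)^2, an 'effective count' of nonzero entries."""
    w = W.ravel()
    return float(np.abs(w).sum() ** 2 / (np.square(w).sum() + eps))

rng = np.random.default_rng(0)
full = rng.standard_normal((64, 64))                              # generic dense matrix
low = rng.standard_normal((64, 4)) @ rng.standard_normal((4, 64))  # rank at most 4

print("effective rank (dense):   ", effective_rank(full))
print("effective rank (low-rank):", effective_rank(low))
print("nuclear norm (low-rank):  ", nuclear_norm(low))
```

In a training loop, a nuclear norm penalty of this kind would typically be added to the loss for each weight matrix, scaled by a regularization coefficient; how the paper applies it to convolutional layers is not specified in the abstract.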
