Pengfei Pei

UVL: A Unified Framework for Video Tampering Localization

Sep 28, 2023

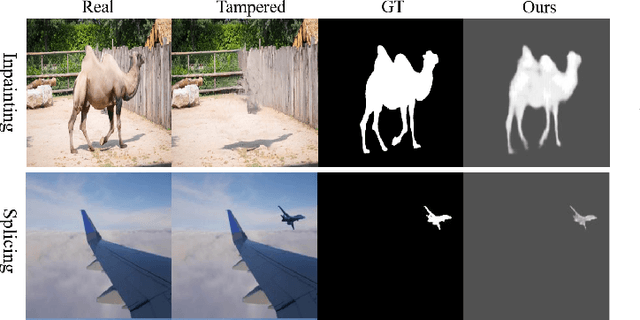

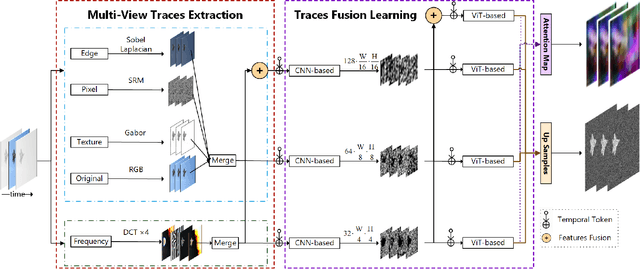

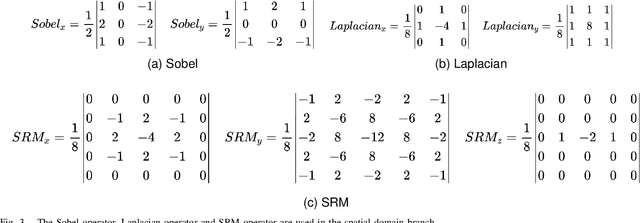

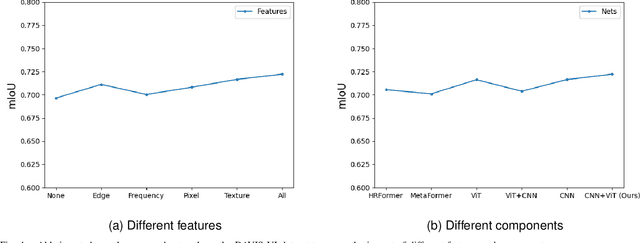

Abstract: With the development of deep learning, new video forgery methods continue to emerge. Methods for detecting these fake videos have achieved excellent performance on some datasets, but they generalize poorly to unknown videos and are inefficient for new forgery methods. To address this problem, we propose UVL, a novel unified video tampering localization framework for synthetic forgeries. Specifically, UVL extracts features common to synthetic forgeries: boundary artifacts along synthetic edges, the unnatural distribution of generated pixels, and the lack of correlation between the forged region and the original content. These features are widely present across different types of synthetic forgery and help improve generalization to unknown videos. Extensive experiments on three types of synthetic forgery (video inpainting, video splicing, and DeepFake) show that UVL achieves state-of-the-art performance on various benchmarks and outperforms existing methods by a large margin in cross-dataset evaluation.

Vision Transformer Based Video Hashing Retrieval for Tracing the Source of Fake Videos

Dec 15, 2021

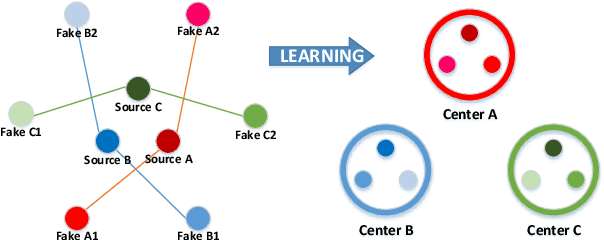

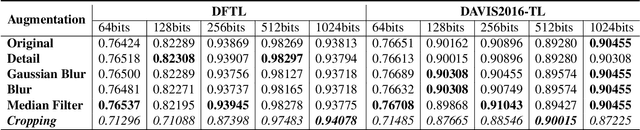

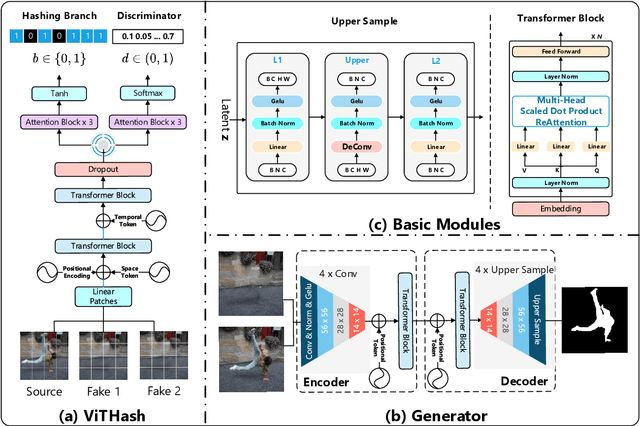

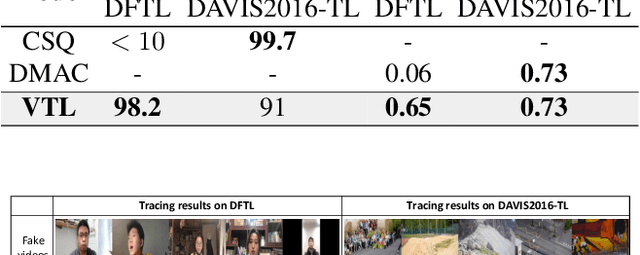

Abstract: Conventional fake video detection methods output a probability value or a mask of suspected tampered regions. However, such unexplainable results cannot be used as convincing evidence, so it is better to trace the sources of fake videos. Traditional hashing methods are used to retrieve semantically similar images and cannot discriminate the nuances of an image. Compared with traditional video retrieval, source tracing faces the additional challenge of finding the real source among similar source videos. We design a novel loss, Hash Triplet Loss, to address the problem that videos of people are very similar: the same scene from different angles, or similar scenes with the same person. We propose Vision Transformer based models named Video Tracing and Tampering Localization (VTL). In the first stage, we train the hash centers with ViTHash (VTL-T). A fake video is then input to ViTHash, which outputs a hash code, and the hash code is used to retrieve the source video from the hash centers. In the second stage, the source video and the fake video are input to a generator (VTL-L), and the suspect regions are masked to provide auxiliary information. Moreover, we construct two datasets: DFTL and DAVIS2016-TL. Experiments on DFTL clearly show the superiority of our framework in tracing the sources of similar videos. In particular, VTL also achieves performance comparable to state-of-the-art methods on DAVIS2016-TL. Our source code and datasets have been released on GitHub: https://github.com/lajlksdf/vtl
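
The retrieval step in the first stage (hash code in, source video out) can be illustrated with a minimal sketch. The abstract does not say how a hash code is compared with the hash centers, so the Hamming distance used here is an assumption, and the names `hamming_distance`, `retrieve_source`, and the toy 8-bit codes are hypothetical and not taken from the VTL code base.

```python
from typing import Dict, Sequence


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two equal-length binary codes differ."""
    if len(a) != len(b):
        raise ValueError("hash codes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def retrieve_source(query_code: Sequence[int],
                    hash_centers: Dict[str, Sequence[int]]) -> str:
    """Return the ID of the hash center closest to the query code.

    Assumption: closeness is measured by Hamming distance; the paper's
    actual retrieval rule may differ.
    """
    if not hash_centers:
        raise ValueError("hash_centers must not be empty")
    return min(hash_centers,
               key=lambda vid: hamming_distance(query_code, hash_centers[vid]))


# Hypothetical 8-bit hash centers, one per source video.
hash_centers = {
    "source_a": [0, 0, 0, 0, 1, 1, 1, 1],
    "source_b": [1, 1, 1, 1, 0, 0, 0, 0],
    "source_c": [1, 0, 1, 0, 1, 0, 1, 0],
}

# Hypothetical hash code that a model such as ViTHash might output for a fake video.
query_code = [0, 0, 1, 0, 1, 1, 1, 1]

best_match = retrieve_source(query_code, hash_centers)
print(best_match)
```

In this toy setup the query differs from `source_a` in one bit, so that center is returned. A real system would use much longer codes and one center per indexed source video.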
