Pedro Miranda Pinheiro

Trajectory Planning for Hybrid Unmanned Aerial Underwater Vehicles with Smooth Media Transition

Dec 27, 2021

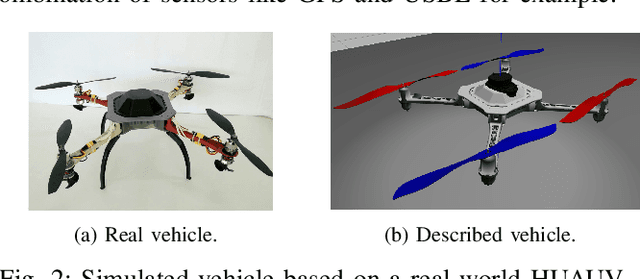

Abstract: In the last decade, a great deal of effort has gone into the study of Hybrid Unmanned Aerial Underwater Vehicles, robots that can fly and dive into the water with different levels of mechanical adaptation. However, most of this literature concentrates on physical design, practical construction issues, and, more recently, low-level control strategies. Little has been done in the context of high-level intelligence, such as motion planning and interaction with the real world. Therefore, in this paper we propose a trajectory planning approach that allows collision avoidance against unknown obstacles and smooth transitions between aerial and aquatic media. Our method is based on a variant of the classic Rapidly-exploring Random Tree, whose main advantages are its ability to deal with obstacles, complex nonlinear dynamics, model uncertainties, and external disturbances. The approach uses the dynamic model of the HyDrone, a hybrid vehicle designed for high underwater performance, but we believe it can be easily generalized to other types of aerial/aquatic platforms. In the experimental section, we present simulated results in environments filled with obstacles, where the robot is commanded to perform different media movements, demonstrating the applicability of our strategy.
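For readers unfamiliar with the Rapidly-exploring Random Tree, the following is a minimal sketch of the classic algorithm only, not the variant proposed in the paper. It assumes a 2D point robot, circular obstacles, and no vehicle dynamics or air/water transition; the function name `rrt`, its parameters, and the obstacle format are hypothetical choices made for illustration.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5,
        goal_bias=0.1, max_iters=5000, seed=0):
    """Classic 2D RRT (illustrative; not the paper's variant).

    obstacles: list of (cx, cy, radius) circles (assumed format).
    bounds: (xmin, xmax, ymin, ymax) sampling region.
    Returns a list of points from start to near the goal, or None.
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}

    def collides(p):
        # Only the new node is checked, not the whole edge (simplification).
        return any(math.dist(p, (cx, cy)) <= r for cx, cy, r in obstacles)

    for _ in range(max_iters):
        # Sample a random point, occasionally biased toward the goal.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(bounds[0], bounds[1]),
                      rng.uniform(bounds[2], bounds[3]))

        # Find the tree node nearest to the sample.
        i_near = min(range(len(nodes)),
                     key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue

        # Steer from the nearest node toward the sample by at most `step`.
        scale = min(step, d) / d
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if collides(new):
            continue

        nodes.append(new)
        parent[len(nodes) - 1] = i_near

        # Stop once the tree reaches the goal region; backtrack the path.
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None


path = rrt((0.0, 0.0), (9.0, 9.0), [(5.0, 5.0, 1.5)], (0.0, 10.0, 0.0, 10.0))
```

A planner for a hybrid vehicle would additionally need to propagate the vehicle's dynamic model when steering and to treat the air/water interface explicitly, which this sketch leaves out.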

Deep Reinforcement Learning for Mapless Navigation of a Hybrid Aerial Underwater Vehicle with Medium Transition

Mar 28, 2021

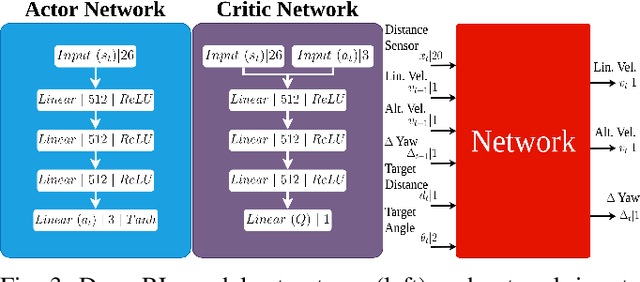

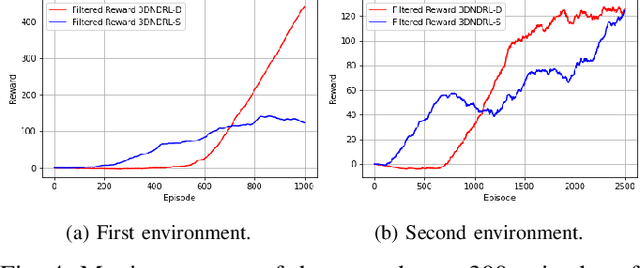

Abstract: Since the application of Deep Q-Learning to the continuous action domain in Atari-like games, Deep Reinforcement Learning (Deep-RL) techniques for motion control have been qualitatively enhanced. Nowadays, modern Deep-RL can be successfully applied to solve a wide range of complex decision-making tasks for many types of vehicles. In this context, we propose the use of Deep-RL to perform autonomous mapless navigation for Hybrid Unmanned Aerial Underwater Vehicles (HUAUVs), robots that can operate in both air and water. We developed two approaches, one deterministic and the other stochastic. Our system uses the relative localization of the vehicle and simple sparse range data to train the network. We compared our approaches with a traditional geometric tracking controller for mapless navigation. Based on the experimental results, we conclude that Deep-RL-based approaches can be successfully used to perform mapless navigation and obstacle avoidance for HUAUVs. Our vehicle accomplished the navigation task in two scenarios, reaching the desired target through both environments and even outperforming the geometric tracking controller in obstacle avoidance.
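To make the phrase "relative localization of the vehicle and simple sparse range data" concrete, the sketch below shows one way such a network input could be assembled. The abstract does not specify the layout, so the function name `build_observation`, the ordering of the entries, and the normalization by `max_range` are all assumptions.

```python
import math


def build_observation(ranges, pose, target, max_range=10.0):
    """Assemble a hypothetical policy input for mapless navigation.

    ranges: sparse range readings in metres (assumed sensor output).
    pose: (x, y, z, yaw) of the vehicle; target: (x, y, z) goal position.
    Returns normalized ranges followed by distance, bearing, and
    vertical offset to the target.
    """
    x, y, z, yaw = pose
    tx, ty, tz = target
    dx, dy, dz = tx - x, ty - y, tz - z

    # Relative localization: straight-line distance and heading error.
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    bearing = math.atan2(dy, dx) - yaw
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap to [-pi, pi]

    # Sparse range data: clip to the sensor limit and scale to [0, 1].
    scaled = [min(max(r, 0.0), max_range) / max_range for r in ranges]
    return scaled + [distance, bearing, dz]


obs = build_observation([1.0, 20.0, 5.0], (0.0, 0.0, 0.0, 0.0), (3.0, 4.0, 0.0))
```

A vector of this kind could feed either a deterministic or a stochastic policy network; the choice of learning algorithm is independent of how the observation is built.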
