Pedro H. T. Gama

Meta-Learners for Few-Shot Weakly-Supervised Medical Image Segmentation

May 11, 2023

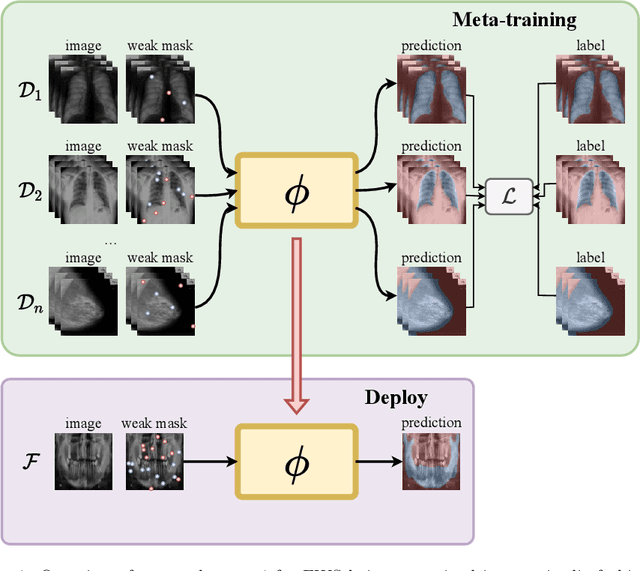

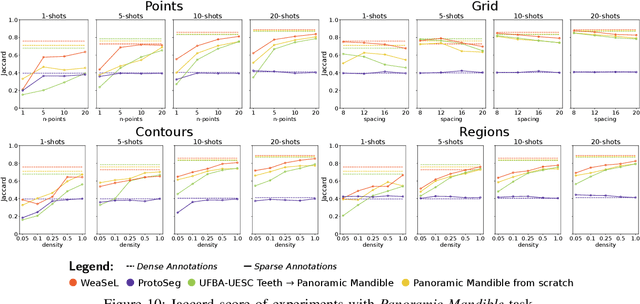

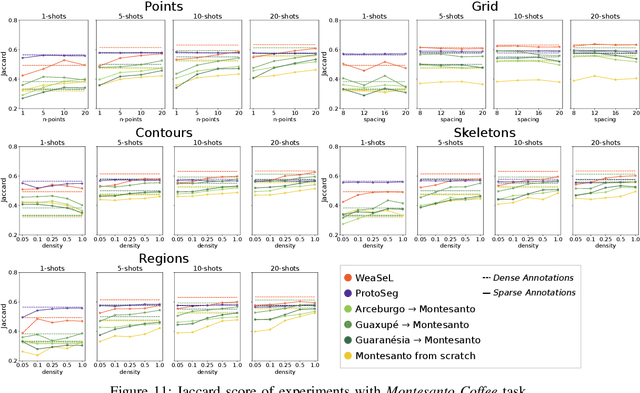

Abstract: Most applications of Meta-Learning in visual recognition target image classification, with relatively few works on other tasks such as segmentation and detection. We propose a generic Meta-Learning framework for few-shot weakly-supervised segmentation in medical imaging domains. We conduct a comparative analysis of meta-learners from distinct paradigms adapted to few-shot image segmentation in different sparsely annotated radiological tasks. The imaging modalities include 2D chest, mammographic and dental X-rays, as well as 2D slices of volumetric tomography and resonance images. Our experiments consider a total of 9 meta-learners, 4 backbones and multiple target organ segmentation tasks. We explore small-data scenarios in radiology with varying weak annotation styles and densities. Our analysis shows that metric-based meta-learning approaches achieve better segmentation results in tasks with smaller domain shifts relative to the meta-training datasets, while some gradient- and fusion-based meta-learners generalize better to larger domain shifts.

Weakly Supervised Few-Shot Segmentation Via Meta-Learning

Sep 03, 2021

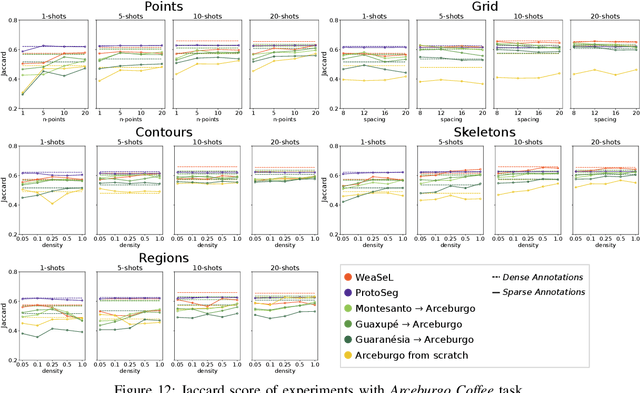

Abstract: Semantic segmentation is a classic computer vision task with multiple applications, including medical and remote sensing image analysis. Despite recent advances with deep learning-based approaches, labeling samples (pixels) for training models is laborious and, in some cases, infeasible. In this paper, we present two novel meta-learning methods, named WeaSeL and ProtoSeg, for the few-shot semantic segmentation task with sparse annotations. We conducted an extensive evaluation of the proposed methods in different applications (12 datasets) in medical imaging and agricultural remote sensing, which are very distinct fields of knowledge and usually subject to data scarcity. The results demonstrated the potential of our methods, achieving suitable results for segmenting both coffee/orange crops and anatomical parts of the human body in comparison with full dense annotation.

Learning to Segment Medical Images from Few-Shot Sparse Labels

Aug 22, 2021

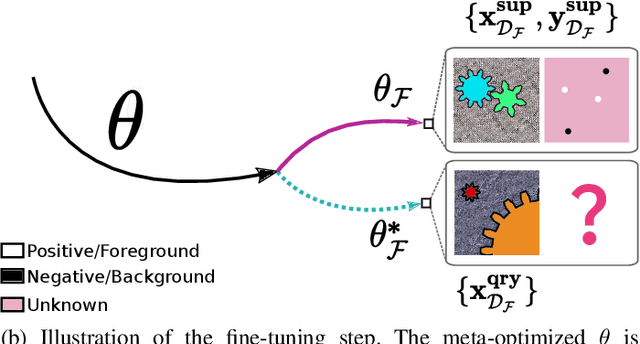

Abstract: In this paper, we propose a novel approach for few-shot semantic segmentation with sparsely labeled images. We investigate the effectiveness of our method, which is based on the Model-Agnostic Meta-Learning (MAML) algorithm, in the medical scenario, where sparse labeling and few-shot learning can alleviate the cost of producing new annotated datasets. Our method uses sparse labels in the meta-training and dense labels in the meta-test, thus making the model learn to predict dense labels from sparse ones. We conducted experiments with four Chest X-Ray datasets to evaluate two types of annotations (grid and points). The results show that our method is the most suitable when the target domain differs substantially from the source domains, achieving Jaccard scores comparable to those obtained with dense labels while labeling less than 2% of an image's pixels in few-shot scenarios.
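
To make the two annotation styles and the evaluation metric concrete, the following is a minimal pure-Python sketch and not the authors' implementation. It derives grid and point annotations from a dense mask and computes a Jaccard score. The function names, the `UNLABELED` marker, the 64x64 toy mask, the grid spacing of 8 and the uniform random point sampling are all assumptions chosen for illustration; the abstract does not specify how points or grids are sampled.

```python
import random

UNLABELED = -1  # hypothetical marker for pixels that carry no annotation


def grid_annotation(dense, spacing):
    """Keep labels only on a regular grid; every other pixel becomes UNLABELED."""
    return [
        [v if (r % spacing == 0 and c % spacing == 0) else UNLABELED
         for c, v in enumerate(row)]
        for r, row in enumerate(dense)
    ]


def point_annotation(dense, n_points, seed=0):
    """Keep labels at n_points pixels sampled uniformly at random (an assumption)."""
    rng = random.Random(seed)
    h, w = len(dense), len(dense[0])
    chosen = set(rng.sample(range(h * w), n_points))
    return [
        [dense[r][c] if r * w + c in chosen else UNLABELED for c in range(w)]
        for r in range(h)
    ]


def labeled_fraction(sparse):
    """Fraction of pixels that still carry a label."""
    total = sum(len(row) for row in sparse)
    labeled = sum(v != UNLABELED for row in sparse for v in row)
    return labeled / total


def jaccard(pred, target, cls=1):
    """Intersection over union for one class between two dense masks."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += (p == cls and t == cls)
            union += (p == cls or t == cls)
    return inter / union if union else 1.0


# Toy dense mask: a 64x64 image with a 32x32 foreground square in the centre.
dense = [[1 if 16 <= r < 48 and 16 <= c < 48 else 0 for c in range(64)]
         for r in range(64)]

grid = grid_annotation(dense, spacing=8)         # 8 x 8 = 64 labeled pixels
points = point_annotation(dense, n_points=50)    # 50 labeled pixels

print(labeled_fraction(grid))    # 64 / 4096 = 0.015625
print(labeled_fraction(points))  # 50 / 4096
```

With these toy settings both annotation styles label under 2% of the pixels, which is the regime the abstract refers to. In a training loop, a loss would typically be evaluated only where the mask is not `UNLABELED`.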
