Pedro Ballester

Unsupervised domain adaptation for medical imaging segmentation with self-ensembling

Nov 14, 2018

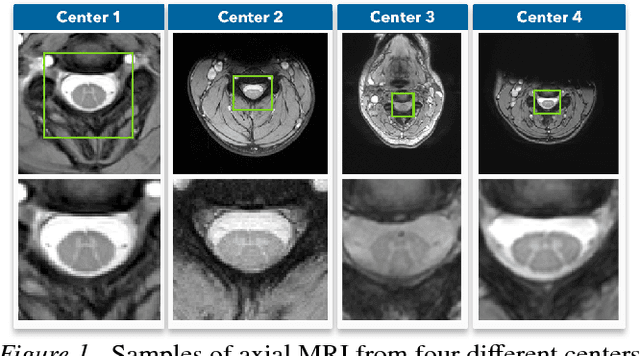

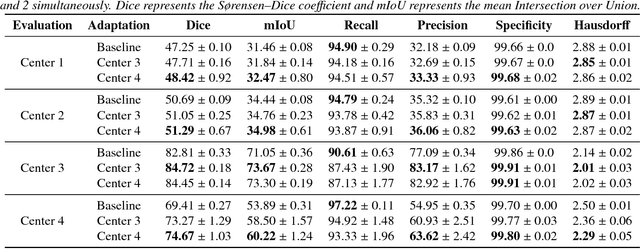

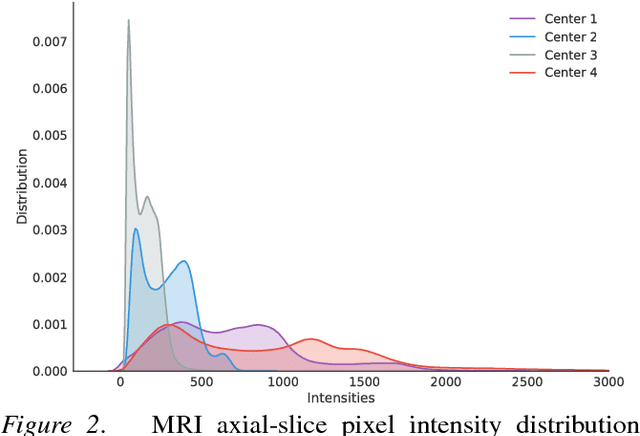

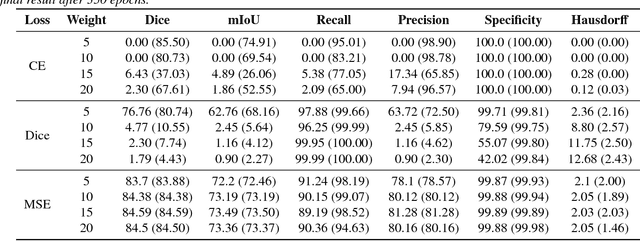

Abstract: Recent deep learning methods for medical imaging have reached state-of-the-art results and even surpassed human judgment in several tasks. However, when these models are trained to minimize the empirical risk on a single domain, they fail to generalize to other domains. This is a very common scenario in medical imaging because of the variability of images and anatomical structures, even within the same imaging modality. In this work, we extend the self-ensembling method for unsupervised domain adaptation to the semantic segmentation task and explore multiple facets of the method in a realistic small-data regime, using a publicly available magnetic resonance imaging (MRI) dataset. Through an extensive evaluation, we show that self-ensembling can indeed improve the generalization of the models even when only a small amount of unlabelled data is available.
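The abstract does not spell out the training objective. As a rough illustration only, the sketch below shows the mean-teacher form of self-ensembling often used for unsupervised domain adaptation. It combines a supervised loss on labelled source-domain images with a consistency loss that pulls the student's predictions on unlabelled target-domain images toward those of a teacher, whose weights are an exponential moving average (EMA) of the student's. The function names, the soft Dice and mean-squared-error losses, the EMA rate `alpha`, and the consistency `weight` are assumptions for illustration, not details taken from the paper. NumPy arrays stand in for network weights and per-pixel logits, so no gradients are computed here.

```python
import numpy as np


def sigmoid(x):
    # Convert per-pixel logits to foreground probabilities.
    return 1.0 / (1.0 + np.exp(-x))


def ema_update(teacher, student, alpha=0.99):
    """Mean-teacher step (assumed form): every teacher weight becomes an
    exponential moving average of the matching student weight."""
    return {name: alpha * teacher[name] + (1.0 - alpha) * student[name]
            for name in teacher}


def dice_loss(probs, mask, eps=1e-6):
    """Soft Dice loss (1 - Dice coefficient) on a labelled source image."""
    intersection = np.sum(probs * mask)
    dice = (2.0 * intersection + eps) / (np.sum(probs) + np.sum(mask) + eps)
    return float(1.0 - dice)


def consistency_loss(student_logits, teacher_logits):
    """Mean squared error between student and teacher probabilities on the
    same unlabelled target image. In mean teacher, each network usually sees
    a differently augmented copy, and the teacher output is held fixed."""
    diff = sigmoid(student_logits) - sigmoid(teacher_logits)
    return float(np.mean(diff ** 2))


def total_loss(source_logits, source_mask,
               target_student_logits, target_teacher_logits, weight=1.0):
    """Hypothetical combined objective: supervised term on the source domain
    plus a weighted consistency term on the unlabelled target domain."""
    supervised = dice_loss(sigmoid(source_logits), source_mask)
    consistency = consistency_loss(target_student_logits, target_teacher_logits)
    return supervised + weight * consistency


if __name__ == "__main__":
    teacher = {"w": np.zeros(3)}
    student = {"w": np.ones(3)}
    teacher = ema_update(teacher, student, alpha=0.9)
    print(teacher["w"])  # each entry is (approximately) 0.1
```

Only the student would be updated by gradient descent on `total_loss`; the teacher changes solely through `ema_update`, which is what makes it a temporal ensemble of past student weights.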
