Paulo Padrao

Online Learning of Deceptive Policies under Intermittent Observation

Sep 19, 2025

Abstract:In supervisory control settings, autonomous systems are not monitored continuously. Instead, monitoring often occurs at sporadic intervals within known bounds. We study the problem of deception, where an agent pursues a private objective while remaining plausibly compliant with a supervisor's reference policy when observations occur. Motivated by the behavior of real, human supervisors, we situate the problem within Theory of Mind (ToM): the representation of what an observer believes and expects to see. We show that Theory of Mind can be repurposed to steer online reinforcement learning (RL) toward such deceptive behavior. We model the supervisor's expectations and distill from them a single, calibrated scalar -- the expected evidence of deviation if an observation were to happen now. This scalar combines how dissimilar the reference and current action distributions appear with the agent's belief that an observation is imminent. Injected as a state-dependent weight into a KL-regularized policy improvement step within an online RL loop, this scalar informs a closed-form update that smoothly trades off self-interest and compliance, thus sidestepping hand-crafted or heuristic policies. In real-world, real-time hardware experiments on marine (ASV) and aerial (UAV) navigation, our ToM-guided RL runs online and achieves high return and success, with observed-trace evidence calibrated to the supervisor's expectations.
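The abstract does not give the update itself, so the following is only a minimal sketch of a generic KL-regularized improvement step with a state-dependent weight. It assumes discrete actions, a reference policy with strictly positive probabilities, and a regularization strength of the form `beta_min + scale * weight`. The function names, the product form of the weight, and the constants are all hypothetical illustrations, not the paper's method.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; q must be positive where p is."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def deviation_weight(current, reference, p_observe):
    """Assumed form of the scalar: observation belief times policy divergence."""
    return p_observe * kl_divergence(current, reference)

def kl_regularized_update(reference, q_values, beta):
    """Closed-form maximizer of E_pi[Q] - beta * KL(pi || reference).

    The solution is pi(a) proportional to reference(a) * exp(Q(a) / beta).
    """
    logits = [math.log(r) + q / beta for r, q in zip(reference, q_values)]
    top = max(logits)  # subtract the max for numerical stability
    unnorm = [math.exp(l - top) for l in logits]
    total = sum(unnorm)
    return [u / total for u in unnorm]

# Hypothetical numbers for a single state with three actions.
reference = [0.7, 0.2, 0.1]   # what the supervisor expects to see
current = [0.2, 0.3, 0.5]     # the agent's current action distribution
q_values = [0.0, 0.5, 1.0]    # private-objective action values
beta_min, scale = 0.05, 10.0  # assumed constants

def improved_policy(p_observe):
    beta = beta_min + scale * deviation_weight(current, reference, p_observe)
    return kl_regularized_update(reference, q_values, beta)

policy_unwatched = improved_policy(p_observe=0.0)  # observation unlikely
policy_watched = improved_policy(p_observe=0.9)    # observation imminent
```

Under these assumptions, a small weight lets the update follow the private action values almost greedily, while a large weight pulls the updated policy back toward the reference.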

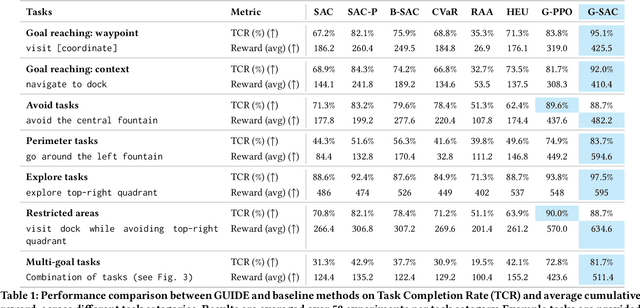

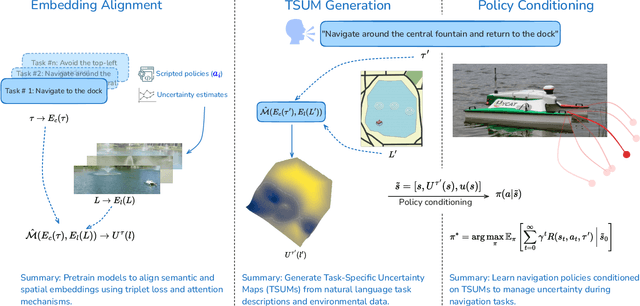

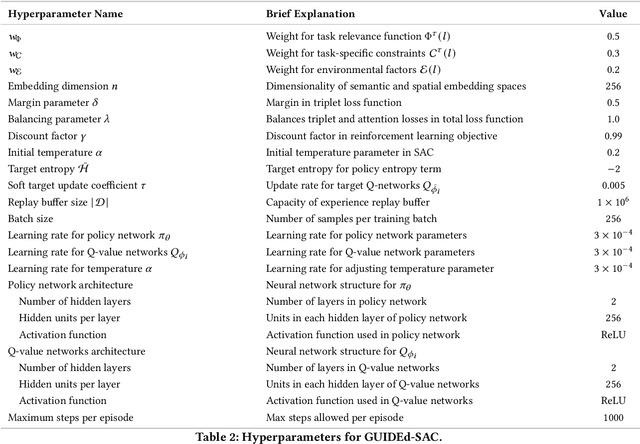

Enhancing Robot Navigation Policies with Task-Specific Uncertainty Management

Oct 19, 2024

Abstract:Robots performing navigation tasks in complex environments face significant challenges due to uncertainty in state estimation. Effectively managing this uncertainty is crucial, but the optimal approach varies depending on the specific details of the task: different tasks require varying levels of precision in different regions of the environment. For instance, a robot navigating a crowded space might need precise localization near obstacles but can operate effectively with less precise state estimates in open areas. This varying need for certainty in different parts of the environment, depending on the task, calls for policies that can adapt their uncertainty management strategies based on task-specific requirements. In this paper, we present a framework for integrating task-specific uncertainty requirements directly into navigation policies. We introduce the Task-Specific Uncertainty Map (TSUM), which represents acceptable levels of state estimation uncertainty across different regions of the operating environment for a given task. Using TSUM, we propose Generalized Uncertainty Integration for Decision-Making and Execution (GUIDE), a policy conditioning framework that incorporates these uncertainty requirements into the robot's decision-making process. We find that conditioning policies on TSUMs provides an effective way to express task-specific uncertainty requirements and enables the robot to reason about the context-dependent value of certainty. We show how integrating GUIDE into reinforcement learning frameworks allows the agent to learn navigation policies without the need for explicit reward engineering to balance task completion and uncertainty management. We evaluate GUIDE on a variety of real-world navigation tasks and find that it significantly improves task completion rates compared to baselines. Evaluation videos can be found at https://guided-agents.github.io.
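As a rough illustration of conditioning a policy on an uncertainty map, the sketch below stores acceptable uncertainty on a 2D grid and appends the local limit, plus the gap to the current estimate, to the policy's observation. The grid representation, the function names, and the choice of appended features are assumptions made for this example; the abstract does not specify how TSUM or GUIDE are implemented.

```python
def acceptable_uncertainty(tsum, cell_size, x, y):
    """Look up the acceptable uncertainty at position (x, y).

    tsum is a list of rows (indexed by y, then x); positions outside
    the grid are clamped to the nearest cell.
    """
    row = min(max(int(y // cell_size), 0), len(tsum) - 1)
    col = min(max(int(x // cell_size), 0), len(tsum[0]) - 1)
    return tsum[row][col]

def augment_observation(observation, tsum, cell_size, x, y, current_sigma):
    """Append the local limit and the excess uncertainty to the observation."""
    limit = acceptable_uncertainty(tsum, cell_size, x, y)
    excess = current_sigma - limit  # positive when the estimate is too uncertain
    return list(observation) + [limit, excess]

# Hypothetical 2x2 map: tight limits on the left (near obstacles),
# loose limits on the right (open area). Units are arbitrary.
tsum = [
    [0.5, 2.0],
    [0.1, 2.0],
]
near_obstacle = augment_observation([1.0, 2.0], tsum, 1.0, x=0.2, y=1.5, current_sigma=0.3)
open_area = augment_observation([1.0, 2.0], tsum, 1.0, x=1.7, y=0.4, current_sigma=0.3)
```

With the same state estimate uncertainty in both places, the appended excess is positive near the obstacle and negative in the open area, which is the kind of context-dependent signal a conditioned policy could act on.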

Towards Optimal Human-Robot Interface Design Applied to Underwater Robotics Teleoperation

Apr 04, 2023

Abstract:Efficient and intuitive human-robot interfaces are crucial for expanding the user base of operators and enabling new applications in critical areas such as precision agriculture, automated construction, rehabilitation, and environmental monitoring. In this paper, we investigate the design of human-robot interfaces for the teleoperation of dynamical systems. The proposed framework seeks to find an optimal interface that complies with key concepts such as user comfort, efficiency, continuity, and consistency. As a proof-of-concept, we introduce an innovative approach to teleoperating underwater vehicles that translates human body movements into vehicle control commands. This method eliminates the need for divers to work in harsh underwater environments while taking into account comfort and communication constraints. We conducted a study with human subjects using a head-mounted display attached to a smartphone to control a simulated ROV. Numerical experiments also show that the optimal translation is often the most intuitive and natural one, aligning with users' expectations.
