Paul Goulart

University of Oxford

Approximate Uncertainty Propagation for Continuous Gaussian Process Dynamical Systems

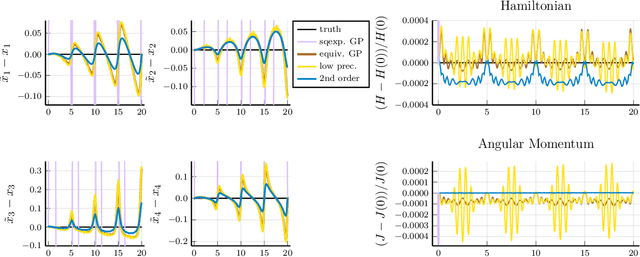

Nov 20, 2022

Abstract: When learning continuous dynamical systems with Gaussian Processes, computing trajectories requires repeatedly mapping the distributions of uncertain states through the distribution of learned nonlinear functions, which is generally intractable. Since sampling-based approaches are computationally expensive, we consider approximations of the output and trajectory distributions. We show that existing methods make an incorrect implicit independence assumption and underestimate the model-induced uncertainty. We propose a piecewise linear approximation of the GP model yielding a class of numerical solvers for efficient uncertainty estimates matching sampling-based methods.

Learning ODE Models with Qualitative Structure Using Gaussian Processes

Nov 10, 2020

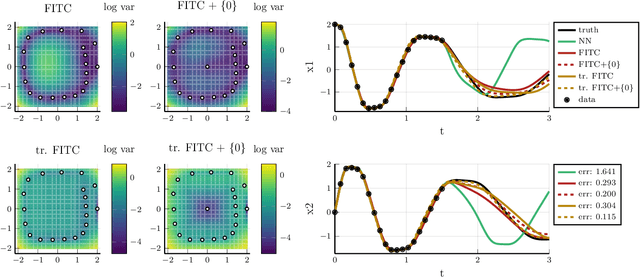

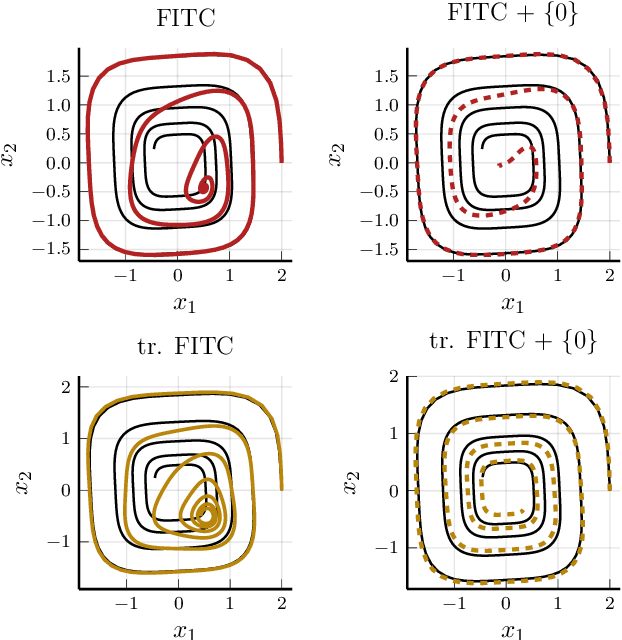

Abstract: Recent advances in learning techniques have enabled the modelling of dynamical systems for scientific and engineering applications directly from data. However, in many contexts, explicit data collection is expensive and learning algorithms must be data-efficient to be feasible. This suggests using additional qualitative information about the system, which is often available from prior experiments or domain knowledge. In this paper, we propose an approach to learning the vector field of differential equations using sparse Gaussian Processes that allows us to combine data and additional structural information, like Lie group symmetries and fixed points, as well as known input transformations. We show that this combination improves extrapolation performance and long-term behaviour significantly, while also reducing the computational cost.
