Paul E. Lehner

Inference Policies

Mar 27, 2013

Abstract:It is suggested that an AI inference system should reflect an inference policy that is tailored to the domain of problems to which it is applied -- and furthermore that an inference policy need not conform to any general theory of rational inference or induction. We note, for instance, that Bayesian reasoning about the probabilistic characteristics of an inference domain may result in the specification of a non-Bayesian procedure for reasoning within the inference domain. In this paper, the idea of an inference policy is explored in some detail. To support this exploration, the characteristics of some standard and nonstandard inference policies are examined.

When Should a Decision Maker Ignore the Advice of a Decision Aid?

Mar 27, 2013

Abstract:This paper argues that the principal difference between decision aids and most other types of information systems is the greater reliance of decision aids on fallible algorithms--algorithms that sometimes generate incorrect advice. It is shown that interactive problem solving with a decision aid that is based on a fallible algorithm can easily result in aided performance which is poorer than unaided performance, even if the algorithm, by itself, performs significantly better than the unaided decision maker. This suggests that unless certain conditions are satisfied, using a decision aid as an aid is counterproductive. Some conditions under which a decision aid is best used as an aid are derived.

Robust Inference Policies

Mar 27, 2013

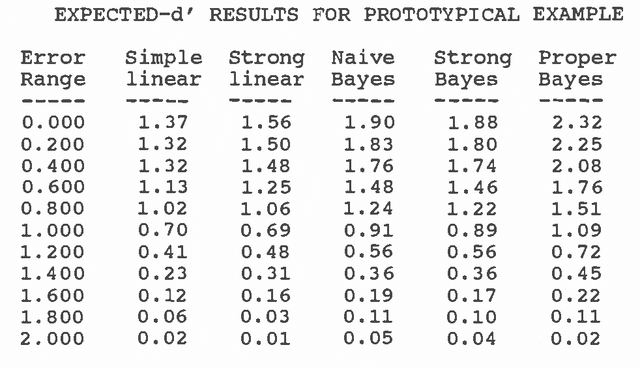

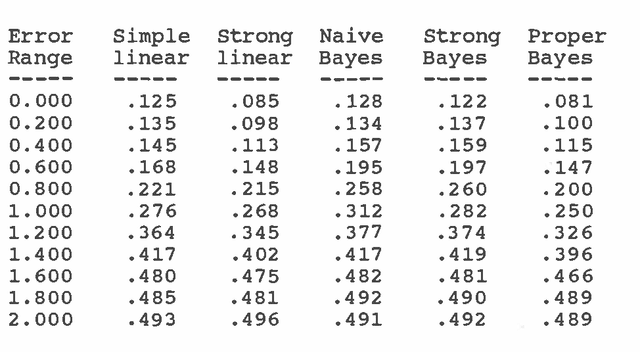

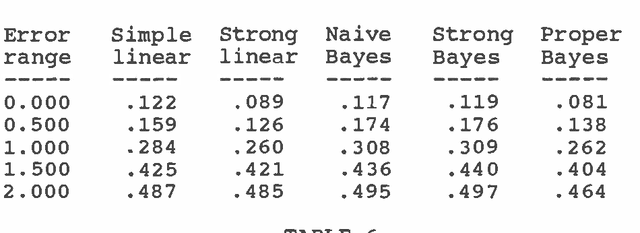

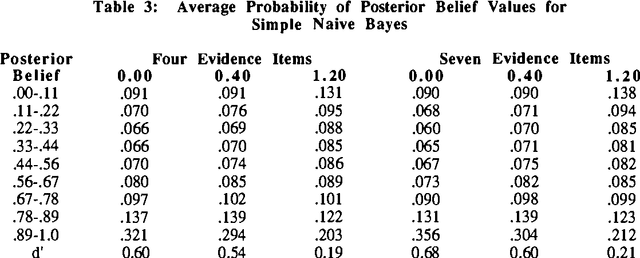

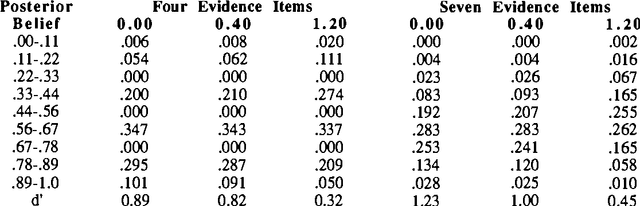

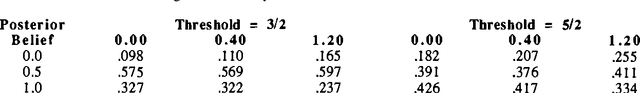

Abstract:A series of Monte Carlo studies was performed to assess the extent to which different inference procedures robustly output reasonable belief values in the context of increasing levels of judgmental imprecision. It was found that, when compared to an equal-weights linear model, the Bayesian procedures are more likely to deduce strong support for a hypothesis. However, the Bayesian procedures are also more likely to strongly support the wrong hypothesis. Bayesian techniques are more powerful, but are also more error prone.
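The kind of comparison this abstract describes can be illustrated with a small simulation. The sketch below is not the paper's experimental design; every modelling choice in it is an assumption made for illustration: a binary hypothesis with a 0.5 prior, five conditionally independent evidence items, Gaussian noise on the judged likelihoods standing in for "judgmental imprecision", an unweighted average of single-item beliefs standing in for the "equal-weights linear model", and beliefs at or beyond 0.9 or 0.1 counted as "strong support". The function name `simulate` and all parameter names are hypothetical.

```python
import random

def simulate(n_trials=2000, n_items=5, p=0.7, q=0.3, noise_sd=0.1, seed=0):
    """Count strong and strongly-wrong conclusions for two updating rules.

    Assumed setup (not taken from the paper): P(e=1 | H) = p and
    P(e=1 | not H) = q for every item, and the judge's estimates of p and q
    are perturbed by Gaussian noise, then clipped to [0.05, 0.95].
    """
    rng = random.Random(seed)
    counts = {"bayes_strong": 0, "bayes_wrong": 0,
              "linear_strong": 0, "linear_wrong": 0}
    for _ in range(n_trials):
        h_true = rng.random() < 0.5
        item_beliefs = []
        for _ in range(n_items):
            observed = rng.random() < (p if h_true else q)
            # Noisy, clipped judgments of the two likelihoods.
            p_hat = min(0.95, max(0.05, p + rng.gauss(0, noise_sd)))
            q_hat = min(0.95, max(0.05, q + rng.gauss(0, noise_sd)))
            like_h = p_hat if observed else 1 - p_hat
            like_not = q_hat if observed else 1 - q_hat
            # Belief in H from this item alone, given the 0.5 prior.
            item_beliefs.append(like_h / (like_h + like_not))
        # Bayesian rule: multiply the per-item odds (independence assumed).
        odds = 1.0
        for b in item_beliefs:
            odds *= b / (1 - b)
        bayes = odds / (1 + odds)
        # Equal-weights linear rule: unweighted mean of the item beliefs.
        linear = sum(item_beliefs) / n_items
        for name, belief in (("bayes", bayes), ("linear", linear)):
            if belief >= 0.9 or belief <= 0.1:
                counts[name + "_strong"] += 1
                if (belief >= 0.9) != h_true:
                    counts[name + "_wrong"] += 1
    return counts

if __name__ == "__main__":
    print(simulate())
```

Under these assumptions the multiplicative rule reaches extreme beliefs whenever most items agree, while the averaged rule rarely leaves the middle of the scale, so the Bayesian counts for both strong and strongly wrong conclusions are at least as large as the linear ones. Raising `noise_sd` is one way to explore increasing imprecision.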

Reasoning under Uncertainty: Some Monte Carlo Results

Mar 20, 2013

Abstract:A series of Monte Carlo studies was performed to compare the behavior of some alternative procedures for reasoning under uncertainty. The behavior of several Bayesian, linear model, and default reasoning procedures was examined in the context of increasing levels of calibration error. The most interesting result is that Bayesian procedures tended to output more extreme posterior belief values (posterior beliefs near 0.0 or 1.0) than other techniques, but the linear models were relatively less likely to output strong support for an erroneous conclusion. Also, accounting for the probabilistic dependencies between evidence items was important for both Bayesian and linear updating procedures.

Two Procedures for Compiling Influence Diagrams

Mar 06, 2013

Abstract:Two algorithms are presented for "compiling" influence diagrams into a set of simple decision rules. These decision rules define simple-to-execute, complete, consistent, and near-optimal decision procedures. These compilation algorithms can be used to derive decision procedures for human teams solving time-constrained decision problems.

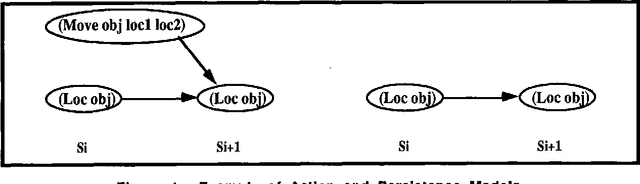

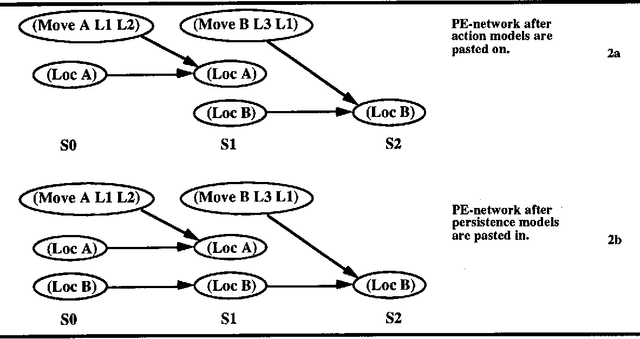

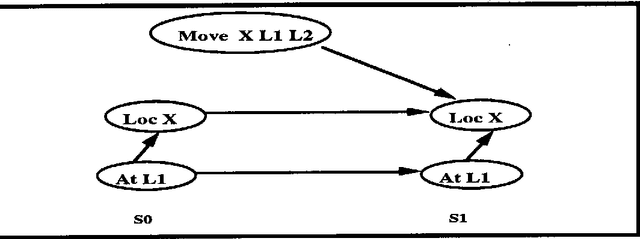

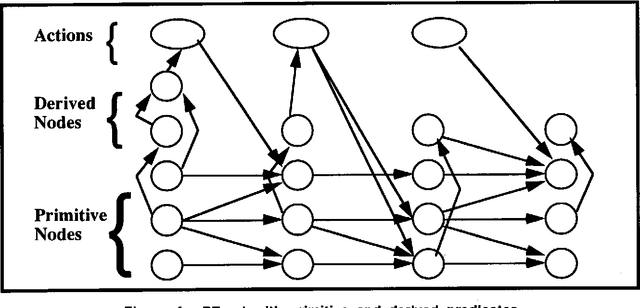

Constructing Belief Networks to Evaluate Plans

Feb 27, 2013

Abstract:This paper examines the problem of constructing belief networks to evaluate plans produced by a knowledge-based planner. Techniques are presented for handling various types of complicating plan features. These include plans with context-dependent consequences, indirect consequences, actions with preconditions that must be true during the execution of an action, contingencies, multiple levels of abstraction, multiple execution agents with partially-ordered and temporally overlapping actions, and plans which reference specific times and time durations.
