Azar Sadigh

Reasoning under Uncertainty: Some Monte Carlo Results

Mar 20, 2013

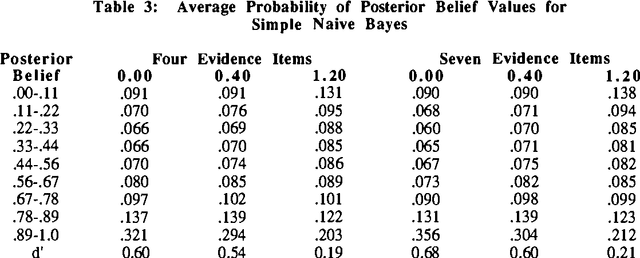

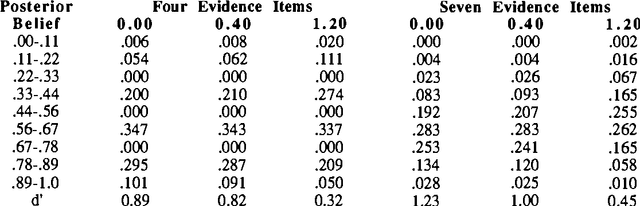

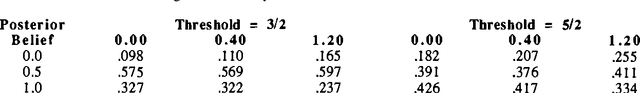

Abstract: A series of Monte Carlo studies was performed to compare the behavior of some alternative procedures for reasoning under uncertainty. The behavior of several Bayesian, linear-model, and default-reasoning procedures was examined in the context of increasing levels of calibration error. The most interesting result is that Bayesian procedures tended to output more extreme posterior belief values (posterior beliefs near 0.0 or 1.0) than other techniques, but the linear models were relatively less likely to output strong support for an erroneous conclusion. Also, accounting for the probabilistic dependencies between evidence items was important for both Bayesian and linear updating procedures.

Two Procedures for Compiling Influence Diagrams

Mar 06, 2013

Abstract: Two algorithms are presented for "compiling" influence diagrams into a set of simple decision rules. These decision rules define simple-to-execute, complete, consistent, and near-optimal decision procedures. These compilation algorithms can be used to derive decision procedures for human teams solving time-constrained decision problems.
