Marvin S. Cohen

A Framework for Non-Monotonic Reasoning About Probabilistic Assumptions

Mar 27, 2013

Abstract: Attempts to replicate probabilistic reasoning in expert systems have typically overlooked a critical ingredient of that process. Probabilistic analysis typically requires extensive judgments regarding interdependencies among hypotheses and data, and regarding the appropriateness of various alternative models. The application of such models is often an iterative process, in which the plausibility of the results confirms or disconfirms the validity of assumptions made in building the model. In current expert systems, by contrast, probabilistic information is encapsulated within modular rules (involving, for example, "certainty factors"), and there is no mechanism for reviewing the overall form of the probability argument or the validity of the judgments entering into it.
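The point about modular rules can be made concrete with the certainty-factor combining function commonly described for MYCIN/EMYCIN-style rule systems. The sketch below is an illustration chosen here, not taken from the paper, and the rule outputs are hypothetical. Each rule's certainty factor is folded into a running total one at a time. The function sees only two numbers, so there is no place where an assumption such as the independence of the rules' evidence could be reviewed or revised.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two certainty factors bearing on the same hypothesis.

    Uses the standard MYCIN/EMYCIN-style combining function. It looks only
    at the two numbers: nothing about the rules that produced them (e.g.
    whether their evidence overlaps) can be inspected here.
    """
    if not (-1.0 <= cf1 <= 1.0 and -1.0 <= cf2 <= 1.0):
        raise ValueError("certainty factors must lie in [-1, 1]")
    if cf1 >= 0 and cf2 >= 0:
        # Both confirm: the second adds a share of the remaining doubt.
        return cf1 + cf2 * (1 - cf1)
    if cf1 < 0 and cf2 < 0:
        # Both disconfirm: the symmetric case.
        return cf1 + cf2 * (1 + cf1)
    # Mixed signs: net the two values and rescale.
    denom = 1 - min(abs(cf1), abs(cf2))
    if denom == 0:
        raise ValueError("cannot combine +1 with -1")
    return (cf1 + cf2) / denom


# Hypothetical rule outputs for one hypothesis, folded in one at a time.
rule_cfs = [0.6, 0.5, -0.3]
total = 0.0
for cf in rule_cfs:
    total = combine_cf(total, cf)
print(round(total, 3))  # 0.714
```

Because the total is built incrementally, the final value carries no record of which rules contributed or what they assumed, which is the gap the abstract identifies.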

An Application of Non-Monotonic Probabilistic Reasoning to Air Force Threat Correlation

Mar 27, 2013

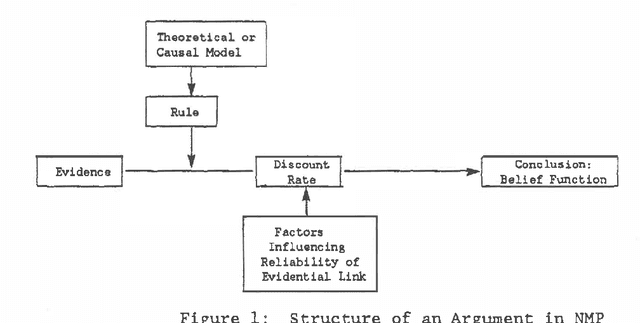

Abstract: Current approaches to reasoning under uncertainty in expert systems fail to capture the iterative revision process characteristic of intelligent human reasoning. This paper reports on a system called the Non-Monotonic Probabilist, or NMP (Cohen et al., 1985). When its inferences result in substantial conflict, NMP examines and revises the assumptions underlying those inferences until conflict is reduced to acceptable levels. NMP has been implemented in a demonstration computer-based system, described below, which supports threat correlation and in-flight route replanning by Air Force pilots.
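The revise-until-acceptable cycle described above can be sketched as a loop. This is a minimal Python illustration under stated assumptions, not NMP's actual algorithm: revision is simplified to retracting an assumption, and the conflict measure (spread of probability estimates), the greedy retraction rule, the 0.3 threshold, the plausibility values, and the sensor and assumption names are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Report:
    source: str        # hypothetical evidence source (e.g. a sensor)
    p_threat: float    # probability the report assigns to "contact is a threat"
    assumption: str    # assumption the report's validity depends on


def conflict(reports):
    """Hypothetical conflict measure: spread of the probabilities the reports assign."""
    if len(reports) < 2:
        return 0.0
    ps = [r.p_threat for r in reports]
    return max(ps) - min(ps)


def revise_until_acceptable(reports, plausibility, threshold=0.3):
    """Retract assumptions one at a time until conflict <= threshold.

    Greedy rule (an assumption of this sketch): retract the assumption whose
    removal leaves the least conflict, breaking ties in favour of the
    least plausible assumption.
    """
    active = set(plausibility)
    retracted = []

    def retained(assumptions):
        # Keep only reports whose underlying assumption is still accepted.
        return [r for r in reports if r.assumption in assumptions]

    while active and conflict(retained(active)) > threshold:
        best = min(
            sorted(active),
            key=lambda a: (conflict(retained(active - {a})), plausibility[a]),
        )
        active.remove(best)
        retracted.append(best)
    return retained(active), retracted


reports = [
    Report("radar", 0.90, "radar_calibrated"),
    Report("esm", 0.85, "emitter_library_current"),
    Report("visual", 0.20, "visual_id_reliable"),
]
plausibility = {
    "radar_calibrated": 0.9,
    "emitter_library_current": 0.8,
    "visual_id_reliable": 0.6,
}
kept, dropped = revise_until_acceptable(reports, plausibility)
print([r.source for r in kept], dropped)
# ['radar', 'esm'] ['visual_id_reliable']
```

The retracted assumption is returned rather than silently discarded, so a user could inspect which assumption the loop gave up and reinstate it if new evidence warrants.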

When Should a Decision Maker Ignore the Advice of a Decision Aid?

Mar 27, 2013

Abstract: This paper argues that the principal difference between decision aids and most other types of information systems is the greater reliance of decision aids on fallible algorithms, that is, algorithms that sometimes generate incorrect advice. It is shown that interactive problem solving with a decision aid based on a fallible algorithm can easily result in aided performance that is poorer than unaided performance, even if the algorithm by itself performs significantly better than the unaided decision maker. This suggests that, unless certain conditions are satisfied, using a decision aid as an aid is counterproductive. Some conditions under which a decision aid is best used as an aid are derived.
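The claim that aided performance can fall below unaided performance, even when the algorithm alone outperforms the unaided decision maker, can be checked with a toy model. The sketch below is a hypothetical binary-choice model constructed for illustration; it is not the model, or the conditions, derived in the paper. In it, the aid is right with probability `a`, the user accepts correct advice with probability `h` and incorrect advice with probability `f`, and rejecting advice means choosing the other option. All numeric values are made up.

```python
def aided_accuracy(a: float, h: float, f: float) -> float:
    """Team accuracy in a hypothetical binary-choice model.

    a: probability the aid's advice is correct
    h: probability the user accepts advice that is correct
    f: probability the user accepts advice that is wrong
    Rejecting correct advice yields a wrong answer; rejecting wrong advice
    yields the right one (only two options exist).
    """
    return a * h + (1 - a) * (1 - f)


def override_helps(a: float, h: float, f: float) -> bool:
    """True if selective overriding beats accepting every recommendation.

    Follows from a*h + (1-a)*(1-f) > a, i.e. (1-a)*(1-f) > a*(1-h).
    """
    return (1 - a) * (1 - f) > a * (1 - h)


unaided = 0.70   # hypothetical unaided accuracy
aid = 0.80       # hypothetical accuracy of the algorithm alone

poor = aided_accuracy(aid, h=0.6, f=0.6)    # user overrides without discriminating
good = aided_accuracy(aid, h=0.95, f=0.3)   # user overrides mostly when the aid is wrong
print(round(poor, 2), round(good, 2))       # 0.56 0.9
print(override_helps(aid, 0.6, 0.6), override_helps(aid, 0.95, 0.3))  # False True
```

With these made-up numbers, a user who overrides without discriminating ends up at 0.56, below both the unaided 0.70 and the aid's 0.80, while a user who overrides mostly when the aid is wrong reaches 0.90. Accepting every recommendation (`h = f = 1`) simply reproduces the aid's own accuracy.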

Decision Making "Biases" and Support for Assumption-Based Higher-Order Reasoning

Mar 27, 2013

Abstract: Unaided human decision making appears to systematically violate consistency constraints imposed by normative theories; these biases in turn appear to justify the application of formal decision-analytic models. It is argued that both claims are wrong. In particular, we will argue that the "confirmation bias" is premised on an overly narrow view of how conflicting evidence is and ought to be handled. Effective decision aiding should focus on supporting the control processes by which knowledge is extended into novel situations and assumptions are adopted, utilized, and revised. The Non-Monotonic Probabilist represents initial work toward such an aid.