An Application of Non-Monotonic Probabilistic Reasoning to Air Force Threat Correlation


Mar 27, 2013

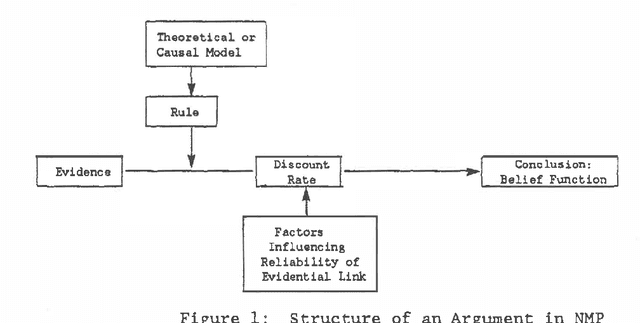

Current approaches to reasoning under uncertainty in expert systems fail to capture the iterative revision process that characterizes intelligent human reasoning. This paper reports on a system called the Non-monotonic Probabilist, or NMP (Cohen et al., 1985). When its inferences result in substantial conflict, NMP examines and revises the assumptions underlying those inferences until conflict is reduced to an acceptable level. NMP has been implemented in a demonstration computer-based system, described in the paper, that supports threat correlation and in-flight route replanning by Air Force pilots.
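The conflict-driven revision loop described above can be illustrated with a minimal Python sketch. It is not NMP's actual algorithm. The conflict measure (a Dempster-style conflict mass, `1 - sum_h prod_i p_i(h)`), the greedy rule of retracting whichever reliability assumption most reduces conflict, the `threshold` of 0.5, and the report names and threat hypotheses are all illustrative assumptions. Each sensor report is treated as a probability distribution over threat hypotheses, and including a report in the fusion stands for the assumption that the report is reliable.

```python
from math import prod

# Illustrative sketch only: NOT the NMP implementation from the paper.
# A "report" is a dict mapping each threat hypothesis to a probability.


def conflict(reports, hypotheses):
    """Dempster-style conflict among Bayesian reports: 1 - sum_h prod_i p_i(h)."""
    if not reports:
        return 0.0
    agreement = sum(prod(r[h] for r in reports) for h in hypotheses)
    return 1.0 - agreement


def fuse(reports, hypotheses):
    """Normalized product of reports (Bayes with a uniform prior, assuming
    the reports are conditionally independent)."""
    weights = {h: prod(r[h] for r in reports) for h in hypotheses}
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}


def revise(reports, hypotheses, threshold=0.5):
    """Retract reliability assumptions greedily until conflict <= threshold.

    `reports` maps a report name to its distribution; keeping a report in
    `active` stands for the assumption that the report is reliable.
    Returns the fused posterior and the names of the retracted reports.
    """
    active = dict(reports)
    retracted = []
    while len(active) > 1 and conflict(list(active.values()), hypotheses) > threshold:

        def conflict_without(name):
            rest = [d for n, d in active.items() if n != name]
            return conflict(rest, hypotheses)

        # Retract the assumption whose removal lowers conflict the most.
        culprit = min(active, key=conflict_without)
        retracted.append(culprit)
        del active[culprit]
    return fuse(list(active.values()), hypotheses), retracted


if __name__ == "__main__":
    # Hypothetical threat hypotheses and sensor reports.
    hyps = ["SAM-A", "SAM-B", "AAA"]
    reports = {
        "radar": {"SAM-A": 0.8, "SAM-B": 0.15, "AAA": 0.05},
        "elint": {"SAM-A": 0.7, "SAM-B": 0.2, "AAA": 0.1},
        "visual": {"SAM-A": 0.05, "SAM-B": 0.05, "AAA": 0.9},
    }
    posterior, retracted = revise(reports, hyps)
    print("retracted:", retracted)
    print({h: round(p, 3) for h, p in posterior.items()})
```

With these hypothetical reports, the initial conflict is about 0.97. Dropping the visual report lowers it the most (to about 0.41), so that assumption is retracted and the remaining radar and ELINT reports agree on SAM-A. Dropping a report outright is the bluntest form of revision; discounting its reliability instead would be a natural refinement.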

* Appears in Proceedings of the Second Conference on Uncertainty in Artificial Intelligence (UAI1986)
