Christopher Elsaesser

Explanation of Probabilistic Inference for Decision Support Systems

Mar 27, 2013

Abstract: An automated explanation facility for Bayesian conditioning has been developed, aimed at improving user acceptance of probability-based decision support systems. The domain-independent facility is based on an information-processing perspective on reasoning about conditional evidence that accounts for both biased and normative inferences. Experimental results indicate that the facility is both acceptable to naive users and effective in improving understanding.

How Much More Probable is "Much More Probable"? Verbal Expressions for Probability Updates

Mar 27, 2013

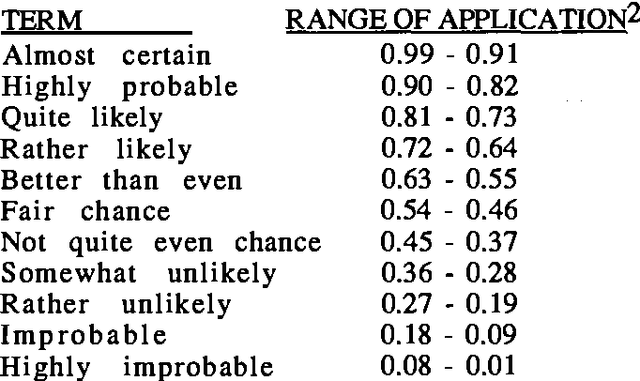

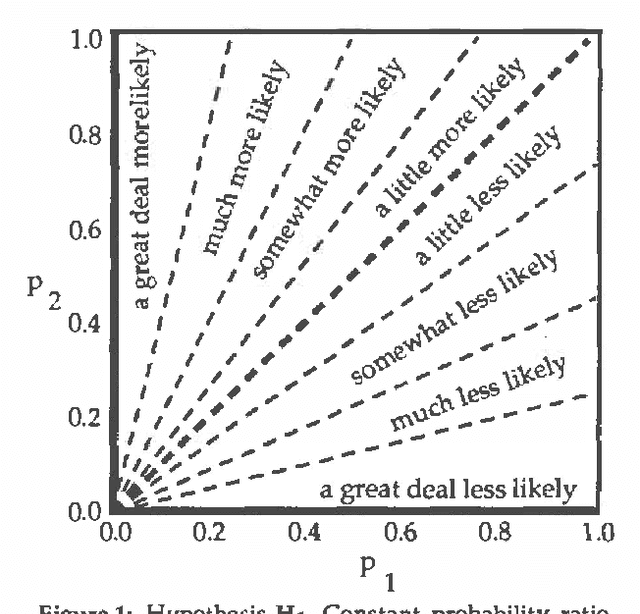

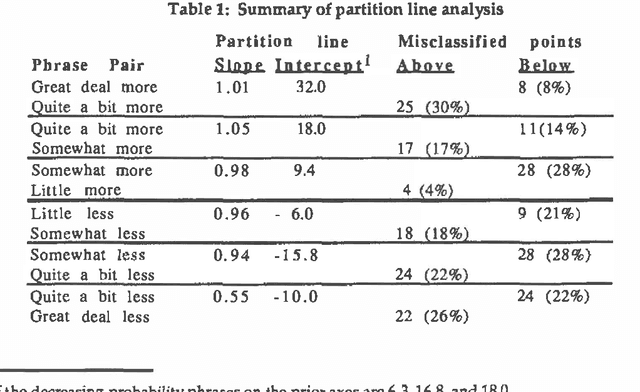

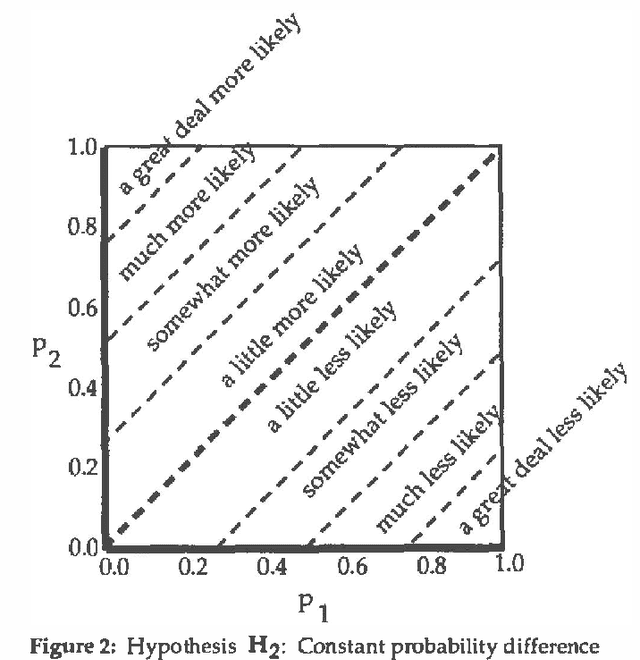

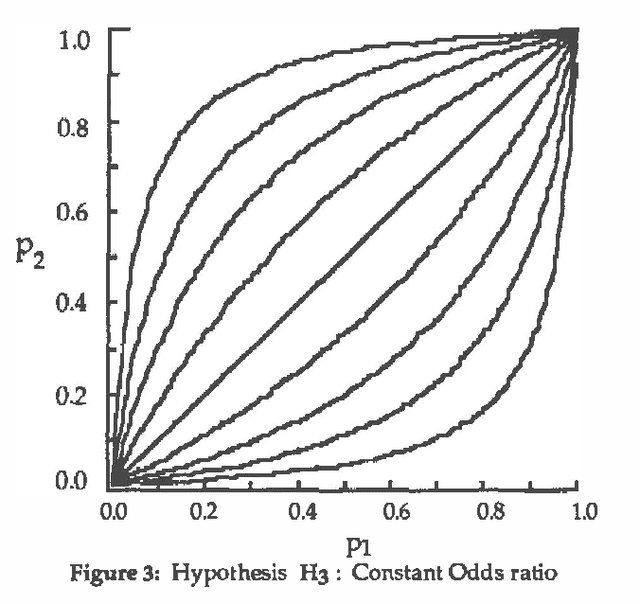

Abstract: Bayesian inference systems should be able to explain their reasoning to users, translating from numerical to natural language. Previous empirical work has investigated the correspondence between absolute probabilities and linguistic phrases. This study extends that work to the correspondence between changes in probabilities (updates) and relative probability phrases, such as "much more likely" or "a little less likely." Subjects selected the phrases that best described numerical probability updates. We examined three hypotheses about the correspondence and found the most descriptively accurate to be that each such phrase corresponds to a fixed difference in probability (rather than a fixed ratio of probabilities or of odds). The empirically derived phrase selection function uses eight phrases and achieved 72% accuracy in correspondence with the subjects' actual usage.
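To make the three hypotheses in this abstract concrete, the following is a minimal sketch (not the authors' phrase selection function) that computes the three candidate measures of an update: the difference in probability, the ratio of probabilities, and the ratio of odds. The function name `update_measures` and the example probabilities are assumptions chosen only for illustration; the abstract gives no thresholds or phrase boundaries, so none are shown.

```python
def update_measures(prior, posterior):
    """Return three candidate measures of a probability update.

    Hypothetical helper for illustration; not taken from the paper.
    """
    if not (0.0 < prior < 1.0 and 0.0 < posterior < 1.0):
        raise ValueError("probabilities must lie strictly between 0 and 1")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = posterior / (1.0 - posterior)
    return {
        "difference": posterior - prior,            # fixed-difference hypothesis
        "probability_ratio": posterior / prior,     # fixed-ratio hypothesis
        "odds_ratio": posterior_odds / prior_odds,  # fixed-odds-ratio hypothesis
    }


# Two illustrative updates with the same difference but different ratios.
low = update_measures(0.1, 0.3)
high = update_measures(0.6, 0.8)
print(low)
print(high)
```

Both updates raise the probability by the same amount, so a fixed-difference rule would assign them the same phrase, whereas a rule based on the probability ratio or the odds ratio would treat the update from 0.1 to 0.3 as the larger change.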

Constructing Belief Networks to Evaluate Plans

Feb 27, 2013

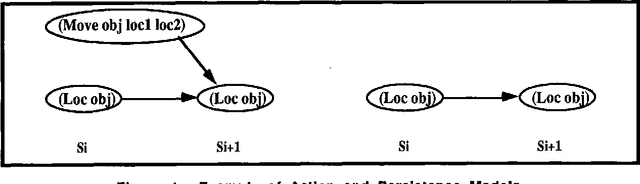

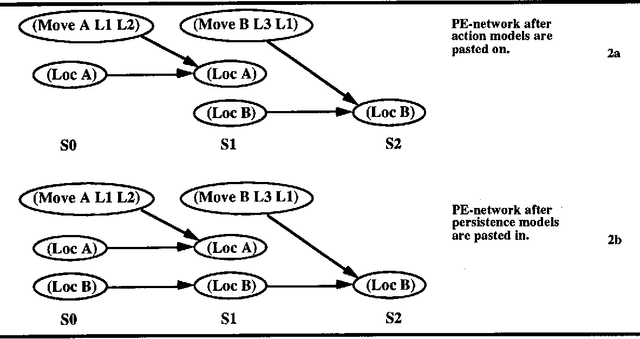

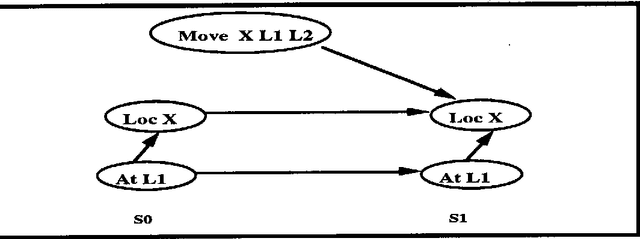

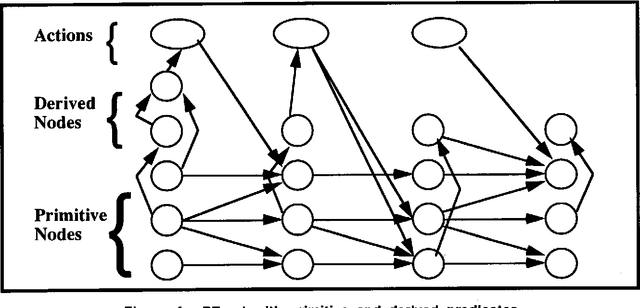

Abstract: This paper examines the problem of constructing belief networks to evaluate plans produced by a knowledge-based planner. Techniques are presented for handling various types of complicating plan features. These include plans with context-dependent consequences, indirect consequences, actions with preconditions that must be true during the execution of an action, contingencies, multiple levels of abstraction, multiple execution agents with partially ordered and temporally overlapping actions, and plans that reference specific times and time durations.
