Patrick Schlachter

Deep One-Class Classification Using Intra-Class Splitting

Mar 12, 2019

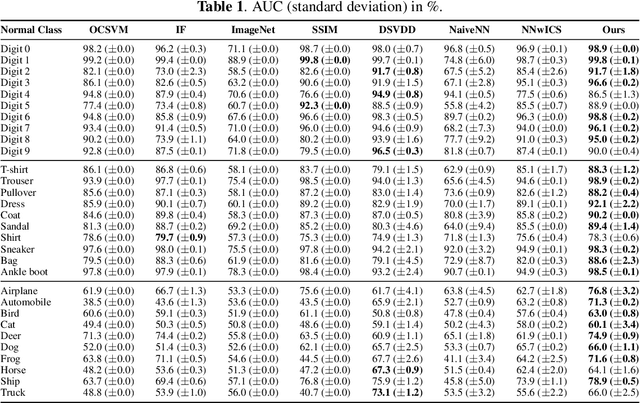

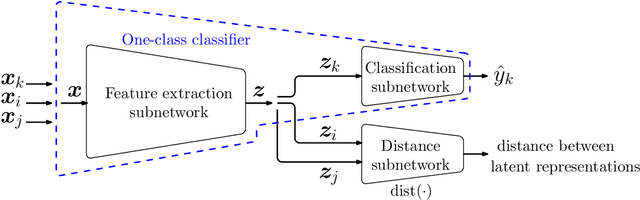

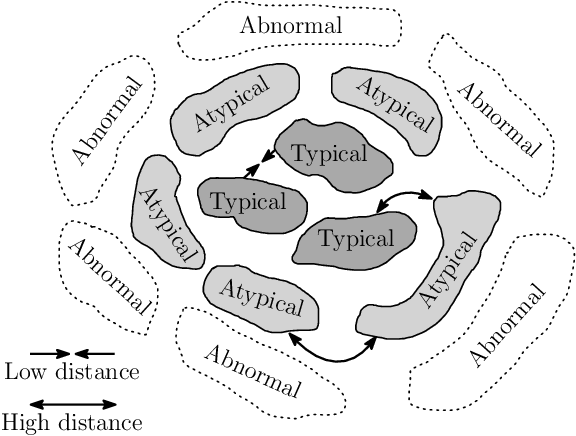

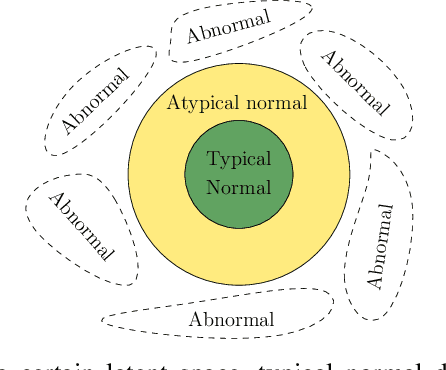

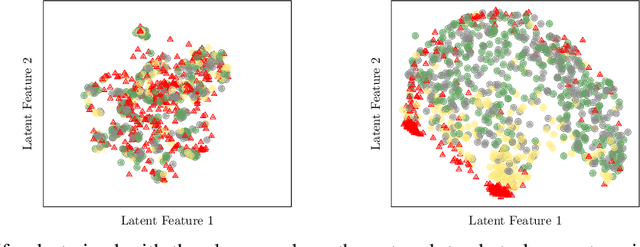

Abstract: This paper introduces a generic method that enables conventional deep neural networks to be used as end-to-end one-class classifiers. The method is based on splitting given data from one class into two subsets. In one-class classification, only samples of one normal class are available for training. During inference, a closed and tight decision boundary around the training samples is sought, which conventional binary or multi-class neural networks are not able to provide. By splitting data into typical and atypical normal subsets, the proposed method can use a binary loss and defines an auxiliary subnetwork for distance constraints in the latent space. Various experiments on three well-known image datasets showed the effectiveness of the proposed method, which outperformed seven baselines and performed better than or comparably to the state of the art.
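The splitting step can be illustrated with a minimal sketch. The abstract does not say how typical and atypical samples are told apart, so the per-sample `scores` (higher meaning more typical) and the `atypical_ratio` parameter below are assumptions made for illustration, not the paper's procedure.

```python
from typing import List, Sequence, Tuple


def intra_class_split(
    scores: Sequence[float], atypical_ratio: float = 0.1
) -> Tuple[List[int], List[int]]:
    """Split sample indices of one class into (typical, atypical).

    `scores` is a hypothetical typicality score per sample (higher = more
    typical); `atypical_ratio` is a hypothetical fraction of samples that
    is assigned to the atypical subset.
    """
    if not 0.0 < atypical_ratio < 1.0:
        raise ValueError("atypical_ratio must lie strictly between 0 and 1")
    n_atypical = max(1, int(round(atypical_ratio * len(scores))))
    # Sort indices by ascending score, so the least typical samples come first.
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    atypical = sorted(order[:n_atypical])
    typical = sorted(order[n_atypical:])
    return typical, atypical


if __name__ == "__main__":
    demo_scores = [0.9, 0.1, 0.8, 0.95, 0.2, 0.85, 0.7, 0.99, 0.6, 0.75]
    typical_idx, atypical_idx = intra_class_split(demo_scores, atypical_ratio=0.2)
    print("typical:", typical_idx)
    print("atypical:", atypical_idx)
```

The two index lists could then be given binary labels, which is what allows a binary loss to be applied even though only one class was provided.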

Open-Set Recognition Using Intra-Class Splitting

Mar 12, 2019

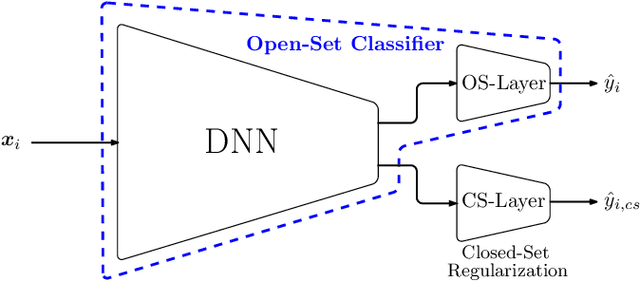

Abstract: This paper proposes a method to use deep neural networks as end-to-end open-set classifiers. It is based on intra-class data splitting. In open-set recognition, only samples from a limited number of known classes are available for training. During inference, an open-set classifier must reject samples from unknown classes while correctly classifying samples from known classes. The proposed method splits given data into typical and atypical normal subsets by using a closed-set classifier. This makes it possible to model the abnormal classes with atypical normal samples. Accordingly, the open-set recognition problem is reformulated as a traditional classification problem. In addition, a closed-set regularization is proposed to guarantee a high closed-set classification performance. Extensive experiments on five well-known image datasets showed the effectiveness of the proposed method, which outperformed the baselines and achieved a distinct improvement over the state-of-the-art methods.
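One way to picture the reformulation is as a relabeling step: samples judged atypical are moved to an extra class that stands in for the unknown classes. The sketch below assumes the closed-set classifier's probability for the true class is used as the typicality criterion; that criterion, the function name `relabel_for_open_set` and the `atypical_ratio` parameter are all hypothetical and are not stated in the abstract.

```python
from typing import List, Sequence


def relabel_for_open_set(
    labels: Sequence[int],
    true_class_probs: Sequence[float],
    num_classes: int,
    atypical_ratio: float = 0.1,
) -> List[int]:
    """Relabel the least confident samples as the extra class `num_classes`.

    `true_class_probs[i]` is assumed to be the probability that a closed-set
    classifier assigns to the correct class of sample i (an illustrative
    criterion only). Known classes keep the labels 0..num_classes-1.
    """
    if len(labels) != len(true_class_probs):
        raise ValueError("labels and true_class_probs must have equal length")
    n_atypical = max(1, int(round(atypical_ratio * len(labels))))
    # Least confident samples first.
    order = sorted(range(len(labels)), key=lambda i: true_class_probs[i])
    atypical = set(order[:n_atypical])
    return [num_classes if i in atypical else labels[i] for i in range(len(labels))]


if __name__ == "__main__":
    new_labels = relabel_for_open_set(
        labels=[0, 1, 0, 1, 0],
        true_class_probs=[0.9, 0.4, 0.8, 0.95, 0.3],
        num_classes=2,
        atypical_ratio=0.4,
    )
    print(new_labels)
```

A standard classifier with `num_classes + 1` outputs could then be trained on the relabeled data, with the extra output acting as the rejection class.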

Active Learning for One-Class Classification Using Two One-Class Classifiers

Jan 10, 2019

Abstract: This paper introduces a novel, generic active learning method for one-class classification. Active learning methods play an important role in reducing the effort of manual labeling in the field of machine learning. Although many active learning approaches have been proposed in recent years, most of them are restricted to binary or multi-class problems. One-class classifiers use samples from only one class, the so-called target class, during training and hence require special active learning strategies. The few strategies proposed for one-class classification are either limited to specific one-class classifiers or depend on particular assumptions about datasets, such as imbalance. Our proposed method is based on using two one-class classifiers, one for the desired target class and one for the so-called outlier class. It makes it possible to devise new query strategies, to use binary query strategies, and to define simple stopping criteria. Based on the new method, two query strategies are proposed. The provided experiments compare the proposed approach with known strategies on various datasets and show improved results in almost all situations.
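To make the two-classifier idea concrete, the following sketch fits one toy model on target samples and one on outlier samples, then queries the unlabeled point on which their scores are closest. The abstract does not describe the two proposed query strategies, so this ambiguity rule is only an illustrative stand-in; the centroid-distance model `CentroidOneClass` and the function `most_ambiguous` are hypothetical as well.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


class CentroidOneClass:
    """Toy one-class model: the score is the negative distance to the centroid."""

    def fit(self, samples: Sequence[Point]) -> "CentroidOneClass":
        dim = len(samples[0])
        self.centroid: List[float] = [
            sum(s[d] for s in samples) / len(samples) for d in range(dim)
        ]
        return self

    def score(self, x: Point) -> float:
        # Higher (closer to zero) means x looks more like the training class.
        return -math.dist(x, self.centroid)


def most_ambiguous(
    target_model: CentroidOneClass,
    outlier_model: CentroidOneClass,
    pool: Sequence[Point],
) -> int:
    """Return the index of the pool sample whose two scores differ the least."""
    return min(
        range(len(pool)),
        key=lambda i: abs(target_model.score(pool[i]) - outlier_model.score(pool[i])),
    )


if __name__ == "__main__":
    target = CentroidOneClass().fit([(0.0, 0.0), (0.0, 2.0)])
    outlier = CentroidOneClass().fit([(10.0, 0.0), (10.0, 2.0)])
    unlabeled = [(1.0, 1.0), (5.0, 1.0), (9.0, 1.0)]
    print("query index:", most_ambiguous(target, outlier, unlabeled))
```

Because both classes have their own score, a binary-style criterion such as the score difference becomes available, which is the kind of flexibility the abstract attributes to the method.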

One-Class Feature Learning Using Intra-Class Splitting

Dec 20, 2018

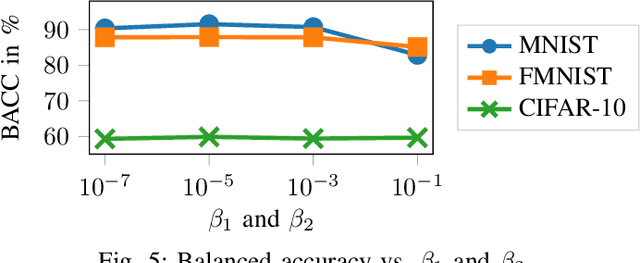

Abstract: This paper proposes a novel, generic one-class feature learning method that is based on intra-class splitting. In one-class classification, feature learning is challenging because only samples of one class are available during training. Hence, state-of-the-art methods require reference multi-class datasets to pretrain feature extractors. In contrast, the proposed method realizes feature learning by splitting the given normal class into typical and atypical normal samples. By introducing a closeness loss and a dispersion loss, an intra-class joint training procedure between the two subsets after splitting enables the extraction of valuable features for one-class classification. Various experiments on three well-known image classification datasets demonstrate the effectiveness of our method, which outperformed other baseline models in 25 of 30 experiments.
