Patrick Mesana

On the Usage of Gaussian Process for Efficient Data Valuation

Jun 04, 2025

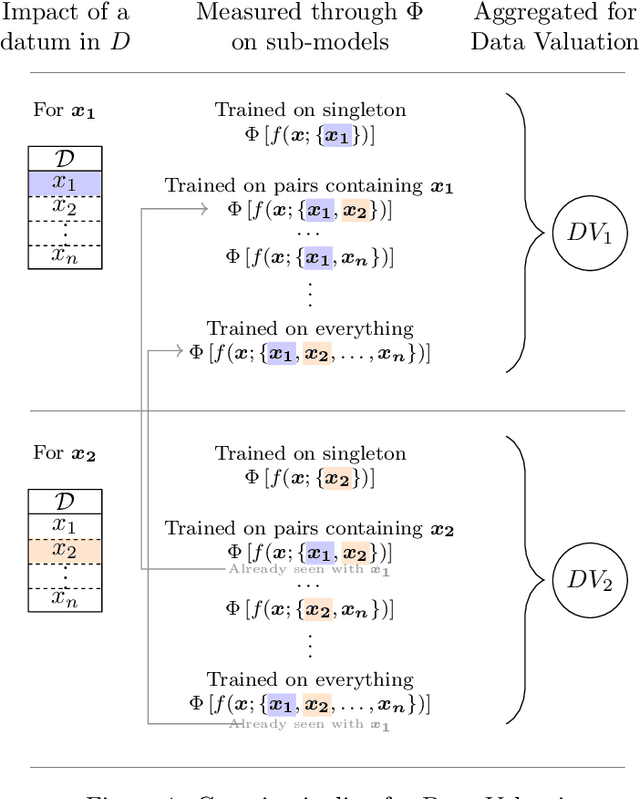

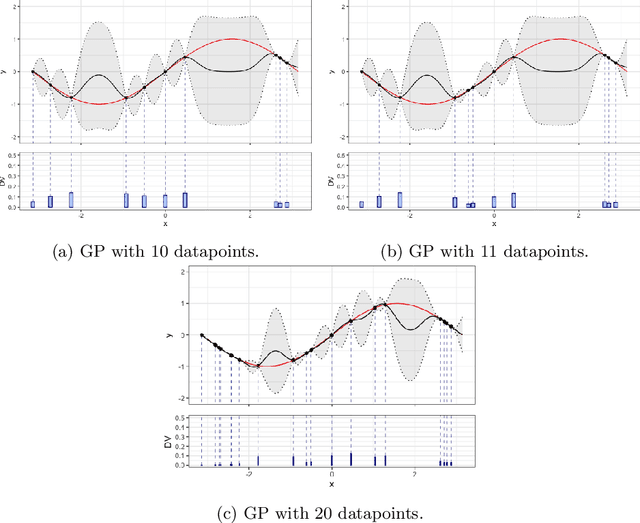

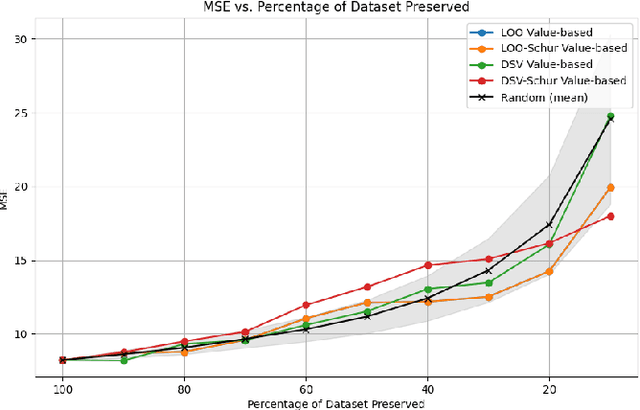

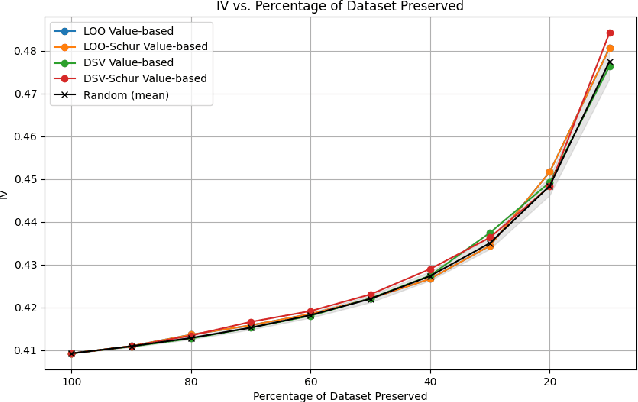

Abstract: In machine learning, knowing the impact of a given datum on model training is a fundamental task referred to as Data Valuation. Building on previous work in the literature, we design a novel canonical decomposition that allows practitioners to analyze any data valuation method as the combination of two parts: a utility function that captures characteristics of a given model, and an aggregation procedure that merges this information. We also propose using Gaussian Processes to easily access the utility function on "sub-models", which are models trained on a subset of the training set. The strength of our approach stems from both its theoretical grounding in Bayesian theory and its practical reach, since efficient update formulae enable fast estimation of valuations.

WaKA: Data Attribution using K-Nearest Neighbors and Membership Privacy Principles

Nov 02, 2024

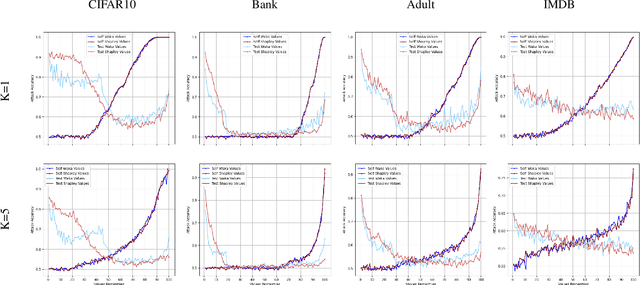

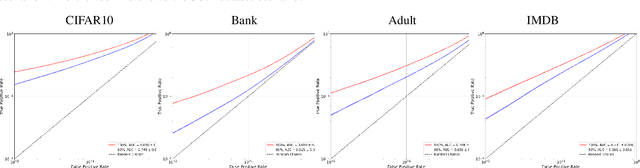

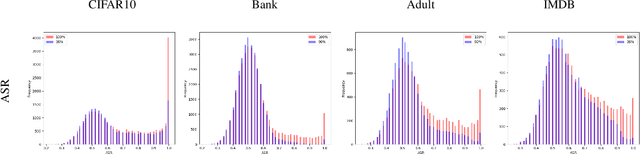

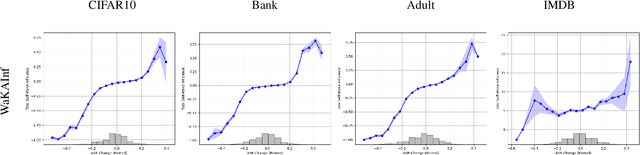

Abstract: In this paper, we introduce WaKA (Wasserstein K-nearest neighbors Attribution), a novel attribution method that leverages principles from the LiRA (Likelihood Ratio Attack) framework and applies them to \( k \)-nearest neighbors (\( k \)-NN) classifiers. WaKA efficiently measures the contribution of individual data points to the model's loss distribution, analyzing every possible \( k \)-NN that can be constructed using the training set, without requiring sampling or shadow model training. WaKA can be used *a posteriori* as a membership inference attack (MIA) to assess privacy risks, and *a priori* for data minimization and privacy influence measurement. Thus, WaKA can be seen as bridging the gap between the data attribution and MIA literature by distinguishing between the value of a data point and its privacy risk. For instance, we show that self-attribution values are more strongly correlated with the attack success rate than the contribution of a point to model generalization. WaKA's different uses were also evaluated across diverse real-world datasets, demonstrating performance very close to LiRA when used as an MIA on \( k \)-NN classifiers, but with greater computational efficiency.

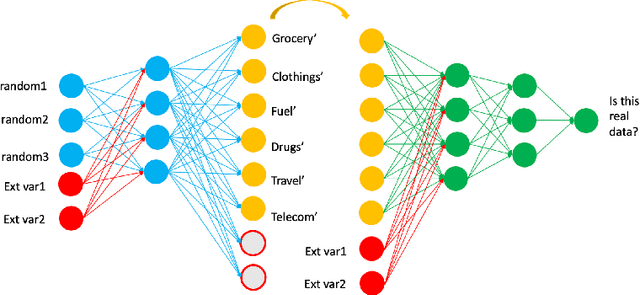

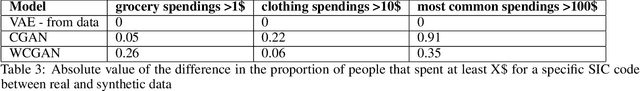

Generating synthetic transactional profiles

Oct 28, 2021

Abstract: Financial institutions use clients' payment transactions in numerous banking applications. Transactions are very personal and rich in behavioural patterns, often unique to individuals, which makes them equivalent to personally identifiable information in some cases. In this paper, we generate synthetic transactional profiles using machine learning techniques with the goal of preserving both data utility and privacy. One challenge we faced was dealing with sparse vectors, since a client uses only a few spending categories compared to all those available. We measured data utility by calculating common insights used by the banking industry on both the original and the synthetic datasets. Our approach shows that neural network models can generate valuable synthetic data in this context. Finally, we tried privacy-preserving techniques and observed their effect on the models' performance.
