Patrick Mania

Imagination-enabled Robot Perception

Nov 27, 2020

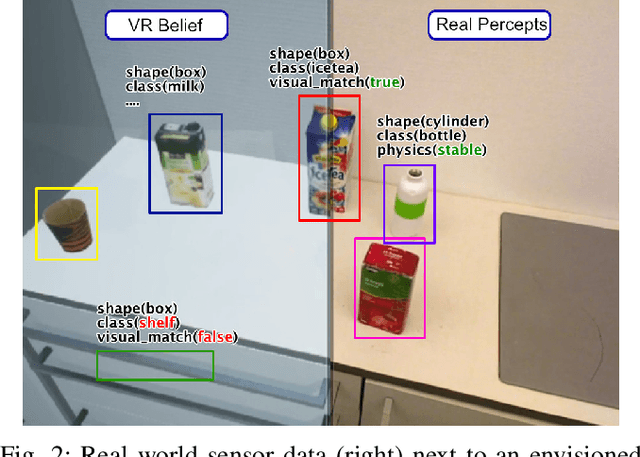

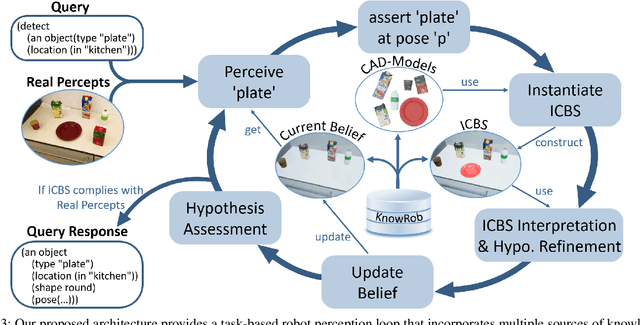

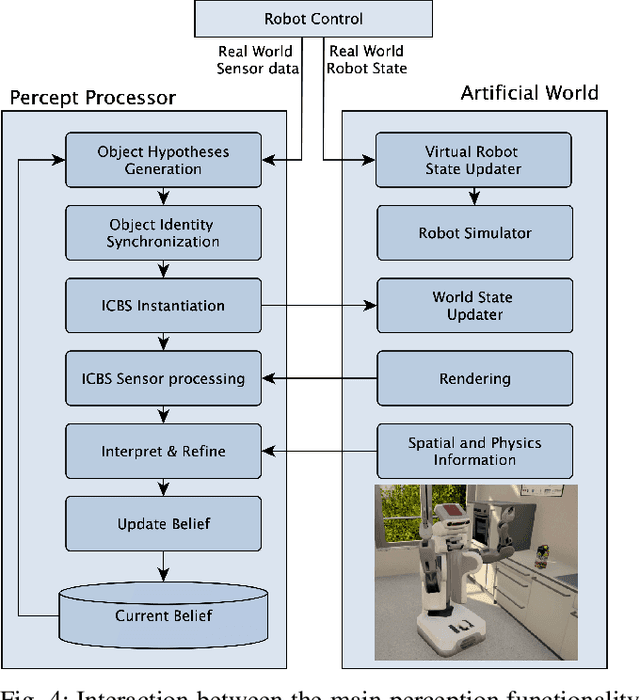

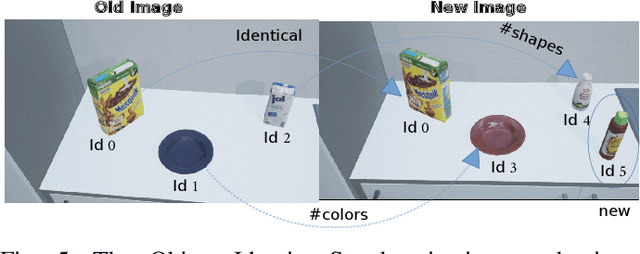

Abstract: Many of today's robot perception systems aim at accomplishing perception tasks that are both too simplistic and too hard. They are too simplistic because they do not require the perception systems to provide all the information needed to accomplish manipulation tasks. Typically, the perception results do not include information about the part structure of objects, articulation mechanisms, and other attributes needed for adapting manipulation behavior. On the other hand, the perception problems stated are also too hard because, unlike humans, the perception systems cannot leverage their expectations about what they will see to their full potential. Therefore, we investigate a variation of robot perception tasks suitable for robots accomplishing everyday manipulation tasks, such as household robots or a robot in a retail store. In such settings it is reasonable to assume that robots know most objects and have detailed models of them. We propose a perception system that maintains its beliefs about its environment as a scene graph with physics simulation and visual rendering. When detecting objects, the perception system retrieves the model of the object and places it at the corresponding place in a VR-based environment model. The physics simulation ensures that physically impossible object detections are rejected, and scenes can be rendered to generate expectations at the image level. The result is a perception system that can provide useful information for manipulation tasks.
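The idea of rejecting physically impossible detections can be illustrated with a minimal sketch. It is not the system described in the abstract: it assumes axis-aligned boxes in place of full object models, and it swaps the physics simulation for two hand-written checks (no interpenetration, and every object must rest on the floor or on another object). The names `Box`, `BeliefState`, and `integrate` are hypothetical and introduced only for this illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Box:
    """Hypothetical stand-in for an object model: an axis-aligned box.

    (x, y, z) is the minimum corner and (dx, dy, dz) the size, in metres.
    """
    name: str
    x: float
    y: float
    z: float
    dx: float
    dy: float
    dz: float


def overlap_1d(a0, a1, b0, b1, eps=1e-6):
    """True if intervals [a0, a1] and [b0, b1] overlap by more than eps."""
    return min(a1, b1) - max(a0, b0) > eps


def intersects(a, b):
    """True if two boxes interpenetrate (touching faces do not count)."""
    return (overlap_1d(a.x, a.x + a.dx, b.x, b.x + b.dx)
            and overlap_1d(a.y, a.y + a.dy, b.y, b.y + b.dy)
            and overlap_1d(a.z, a.z + a.dz, b.z, b.z + b.dz))


def supported(box, others, tol=0.01):
    """True if the box rests on the floor (z = 0) or on top of another box."""
    if abs(box.z) <= tol:
        return True
    for o in others:
        rests_on_top = abs(box.z - (o.z + o.dz)) <= tol
        if (rests_on_top
                and overlap_1d(box.x, box.x + box.dx, o.x, o.x + o.dx)
                and overlap_1d(box.y, box.y + box.dy, o.y, o.y + o.dy)):
            return True
    return False


@dataclass
class BeliefState:
    """Assumed, simplified belief state: a flat list of accepted objects."""
    objects: list = field(default_factory=list)

    def integrate(self, detection):
        """Accept a detection only if it passes both plausibility checks."""
        if any(intersects(detection, o) for o in self.objects):
            return False  # would occupy the same space as a known object
        if not supported(detection, self.objects):
            return False  # would float in mid-air
        self.objects.append(detection)
        return True


belief = BeliefState()
table_ok = belief.integrate(Box("table", 0.0, 0.0, 0.0, 1.0, 1.0, 0.7))
cup_ok = belief.integrate(Box("cup", 0.4, 0.4, 0.7, 0.1, 0.1, 0.1))
floating_ok = belief.integrate(Box("floating_cup", 0.4, 0.4, 1.5, 0.1, 0.1, 0.1))
ghost_ok = belief.integrate(Box("ghost_cup", 0.2, 0.2, 0.3, 0.1, 0.1, 0.1))
```

In this sketch the table and the cup standing on it are accepted, while the floating cup and the cup embedded in the table are rejected. A real physics engine would cover far more cases (stability, articulation, contact forces) than these two geometric tests.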

RoboSherlock: Cognition-enabled Robot Perception for Everyday Manipulation Tasks

Nov 22, 2019

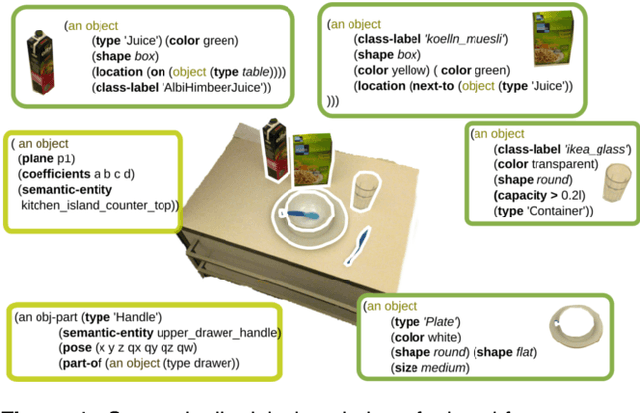

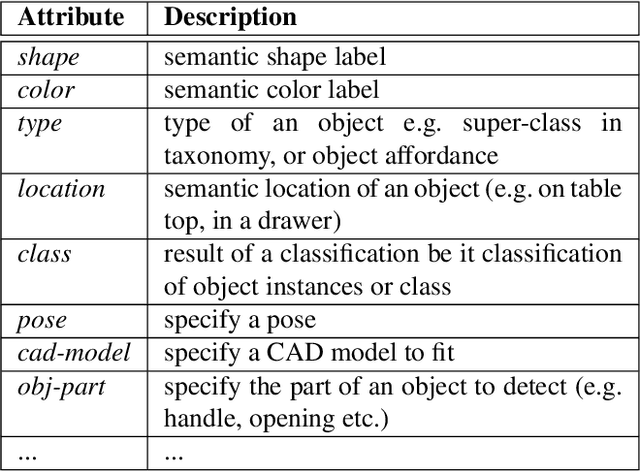

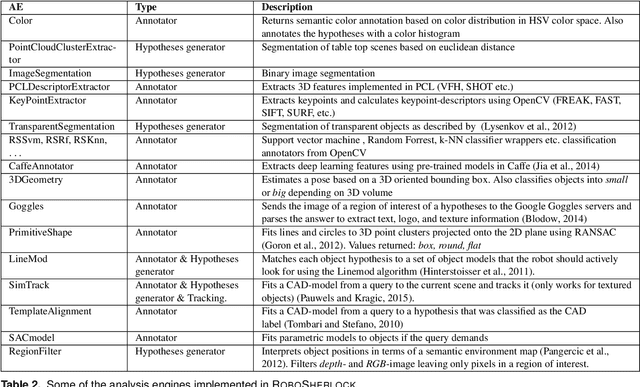

Abstract: A pressing question when designing intelligent autonomous systems is how to integrate the various subsystems concerned with complementary tasks. More specifically, robotic vision must provide task-relevant information about the environment and the objects in it to various planning-related modules. In most implementations of the traditional Perception-Cognition-Action paradigm these tasks are treated as quasi-independent modules that function as black boxes for each other. It is our view that perception can benefit tremendously from a tight collaboration with cognition. We present RoboSherlock, a knowledge-enabled cognitive perception system for mobile robots performing human-scale everyday manipulation tasks. In RoboSherlock, perception and interpretation of realistic scenes are formulated as an unstructured information management (UIM) problem. The application of the UIM principle supports the implementation of perception systems that can answer task-relevant queries about objects in a scene, boost object recognition performance by combining the strengths of multiple perception algorithms, support knowledge-enabled reasoning about objects, and enable automatic and knowledge-driven generation of processing pipelines. We demonstrate the potential of the proposed framework through feasibility studies of systems for real-world scene perception that have been built on top of the framework.
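Combining the outputs of several perception algorithms for one object hypothesis can be sketched roughly as follows. This is not RoboSherlock's API: the function `combine_annotations` is hypothetical, and confidence-weighted voting is assumed here as just one simple combination rule.

```python
from collections import defaultdict


def combine_annotations(annotations):
    """Merge (label, confidence) pairs from several annotators.

    Assumed rule: sum the confidences per label and return the best label
    together with its share of the total confidence mass.
    """
    if not annotations:
        raise ValueError("at least one annotation is required")
    scores = defaultdict(float)
    for label, confidence in annotations:
        scores[label] += confidence
    total = sum(scores.values())
    best = max(scores, key=scores.get)
    return best, scores[best] / total


# Hypothetical outputs of three annotators for the same object hypothesis.
label, share = combine_annotations([("cup", 0.6), ("bowl", 0.7), ("cup", 0.5)])
```

Here two moderately confident votes for "cup" outweigh a single stronger vote for "bowl", which is the kind of benefit the abstract attributes to combining the strengths of multiple algorithms.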
